By Ashvin Roharia

When HP, one of the best-known brands in business and personal productivity hardware, set out to build their next generation of wireless headsets, they wanted to add functionality that had never been done before. The result? A cutting-edge voice-control feature, built directly into their flagship headsets, that lets users answer or decline phone calls from their connected devices.

The feature relies on a keyword-detecting AI algorithm that runs directly on the device, with no cloud interfacing at all. It is the culmination of months of collaboration between HP and Edge Impulse to train, build, and deploy at scale a novel function that not only responds to two keywords but also works in eleven different languages.

These headsets — the Poly Voyager Free 60 earbuds, Poly Voyager Surround 80 and 85 headsets, and Poly Voyager Legend 50 headsets — are now available for sale globally, with the voice-command functionality fully accessible. Let’s take a deeper look at how this came together and made its way to the world.

A couple of years ago, HP set out to improve the clunky way users handle incoming calls on a traditional headset. Responding to a call required either pressing a physical button on a hard-to-spot part of the headset or picking up the phone itself. This could be disruptive, especially when the user’s hands were busy typing or writing.

The HP team came up with a great solution for this: voice control. But implementing voice control raised another challenge: how could the headset be made to respond to voice commands efficiently?

Implementing voice commands requires AI algorithms that detect specific spoken keywords.

The traditional solution for this type of use case is to record audio with an onboard microphone and send that audio to the cloud, where server-based algorithms detect whether the keyword was said (a task known as keyword spotting, or KWS) and then send the result back to the device.

However, HP opted for a new approach: running a keyword detection algorithm directly on the headset itself, without any data transmission. This on-device, edge AI processing offers several benefits over the traditional method, including lower latency, independence from network connectivity, and stronger security.

(HP also notes that on the security side, they do not scrape any customer data, nor do they ever listen to customer calls.)

HP used Edge Impulse’s platform to give their engineers the tools to fast-track their model development and deployment phase. In fact, with the help of Edge Impulse, they were able to collect keyword data, train an industrial-grade ML model, and deploy it into their own custom workflow within just months.

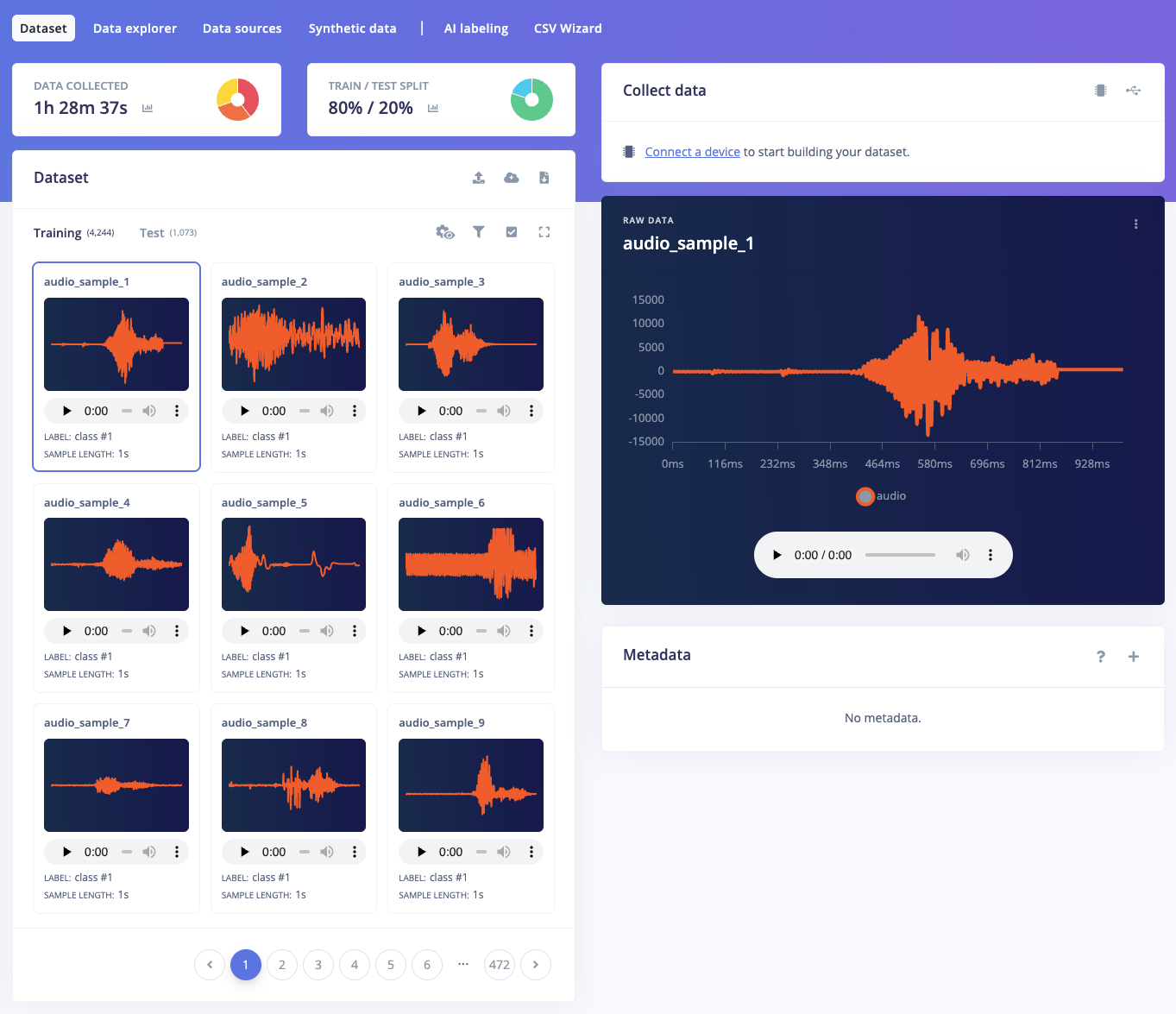

The first step in building an AI solution is collecting data. HP generated and labeled the KWS dataset themselves, using tools such as Edge Impulse’s Keyword Collector web application to harness the crowd for data collection. This web application records contributors’ keyword samples and automatically splits each full recording into one-second sub-samples, using audio signal processing techniques to identify where each keyword starts and ends. The tool is easy to distribute to a crowd via a QR code, whose URL can carry useful parameters such as the desired keyword label, the sample length, and the audio sampling frequency.
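The Keyword Collector’s exact segmentation method isn’t described here, but the idea of cutting a long recording at keyword boundaries can be sketched with a simple energy threshold. The Python below is a minimal sketch that assumes keywords are separated by silence, a 16 kHz sample rate, and 20 ms analysis frames; the function name `split_keywords` and its parameters are hypothetical. It marks frames whose RMS energy exceeds a fraction of the loudest frame as speech, then cuts a one-second window centered on each run of active frames.

```python
import numpy as np

def split_keywords(signal, sample_rate=16000, clip_seconds=1.0,
                   frame_ms=20, threshold_ratio=0.1):
    """Cut a long recording into fixed-length clips, one per detected utterance.

    Hypothetical helper. Assumes utterances are separated by silence; a frame
    counts as speech when its RMS exceeds threshold_ratio * the loudest frame.
    """
    signal = np.asarray(signal, dtype=np.float32)
    frame_len = int(sample_rate * frame_ms / 1000)
    clip_len = int(sample_rate * clip_seconds)
    n_frames = len(signal) // frame_len
    if n_frames == 0:
        return []

    # Per-frame RMS energy, then a boolean "speech present" mask.
    frames = signal[: n_frames * frame_len].reshape(n_frames, frame_len)
    rms = np.sqrt(np.mean(frames ** 2, axis=1))
    active = rms > threshold_ratio * rms.max()

    clips = []
    start = None
    # A trailing False closes an utterance that runs to the end of the recording.
    for i, is_active in enumerate(np.append(active, False)):
        if is_active and start is None:
            start = i  # keyword starts
        elif not is_active and start is not None:
            # Keyword ends: center a fixed-length window on the active region.
            center = (start * frame_len + i * frame_len) // 2
            lo = max(0, min(center - clip_len // 2, len(signal) - clip_len))
            clip = signal[lo : lo + clip_len]
            if len(clip) < clip_len:  # recording shorter than one clip
                clip = np.pad(clip, (0, clip_len - len(clip)))
            clips.append(clip)
            start = None
    return clips
```

An energy threshold is the simplest possible voice-activity detector: it merges keywords spoken without a pause and can be triggered by loud background noise, so noisy crowd-sourced recordings would call for a more robust detector.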

By combining Edge Impulse’s tools with outsourced data collection, HP was able to build a labeled dataset of keyword audio.

This data was then stored in an AWS S3 bucket, which integrates directly with Edge Impulse, so users can quickly and easily import data and update models whenever new data is added to the bucket.
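As an illustration of organizing crowd-sourced samples before import, the sketch below uploads each clip to S3 under a `language/label/` key layout. The layout, the `kws` prefix, and the label names are hypothetical (the article does not name the two keywords or describe HP’s bucket structure). The only real API used is the boto3 S3 client’s `upload_file(Filename, Bucket, Key)`; the client is passed in as a parameter so the function can be exercised without AWS credentials.

```python
from pathlib import Path

def s3_key_for(language: str, label: str, wav_path: str, prefix: str = "kws") -> str:
    """Build an object key such as 'kws/en/accept/sample001.wav'.

    The prefix/language/label layout is a hypothetical convention.
    """
    return f"{prefix}/{language}/{label}/{Path(wav_path).name}"

def upload_samples(s3_client, bucket: str, language: str, samples):
    """Upload (label, wav_path) pairs and return the object keys used.

    s3_client is expected to be a boto3 S3 client, e.g. boto3.client("s3").
    """
    keys = []
    for label, wav_path in samples:
        key = s3_key_for(language, label, wav_path)
        # boto3's S3 client signature: upload_file(Filename, Bucket, Key).
        s3_client.upload_file(wav_path, bucket, key)
        keys.append(key)
    return keys
```

With real credentials this would be called as `upload_samples(boto3.client("s3"), "my-kws-bucket", "en", samples)`, where the bucket name is a placeholder. Keeping one prefix per language makes it straightforward to point each language’s project at its own slice of the bucket.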

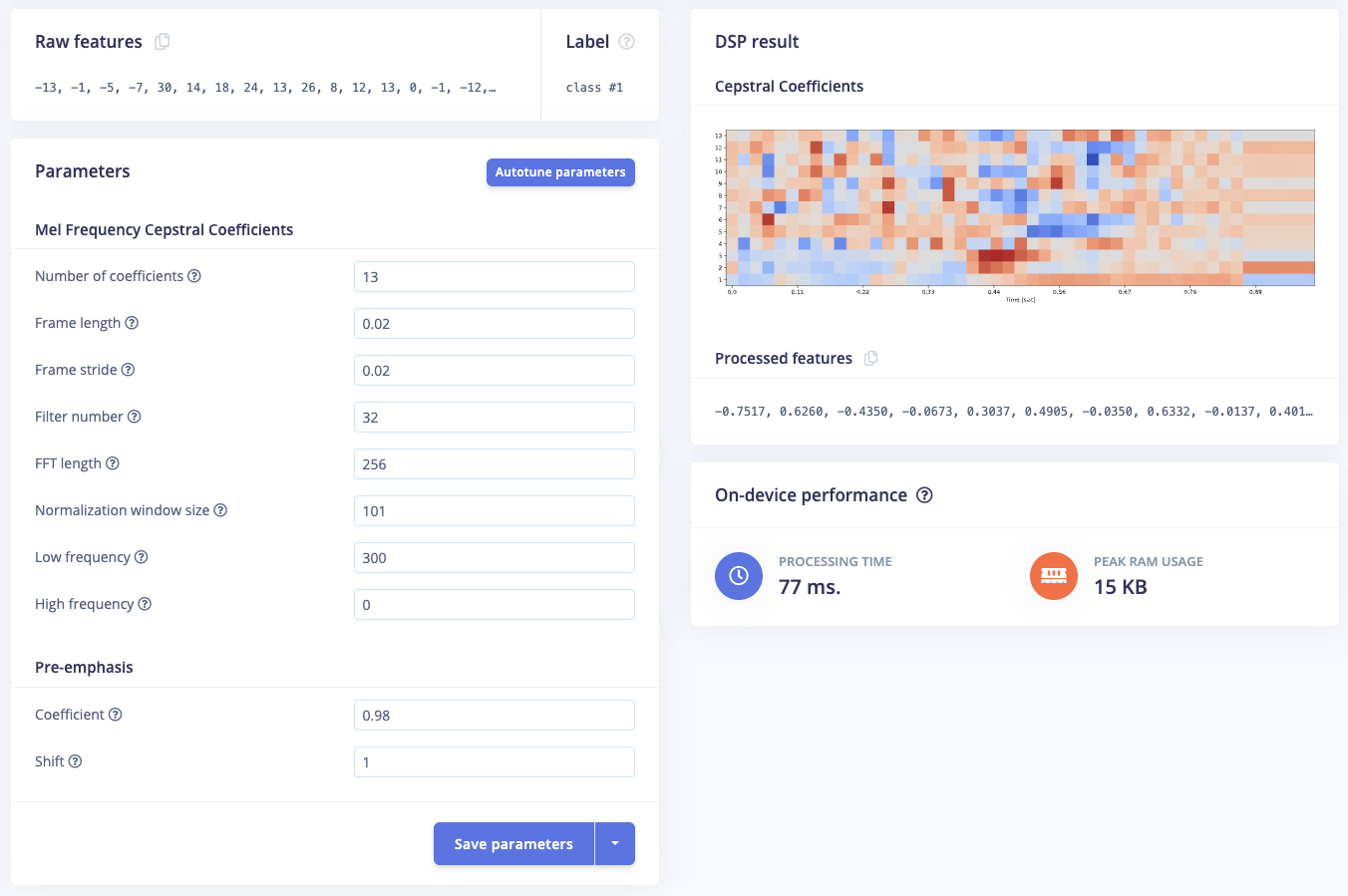

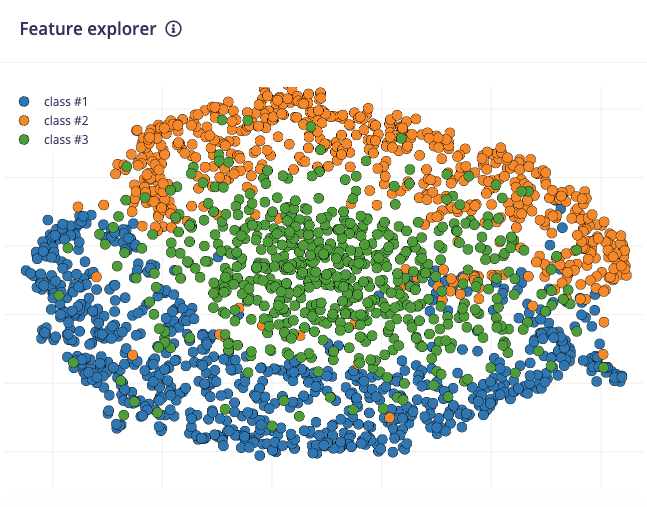

Once a dataset is collected, it needs to be processed to extract useful features. Edge Impulse offers pre-made processing blocks for feature extraction, such as the Audio MFE and Audio MFCC blocks. However, HP needed something more tailored to their hardware, so they created a custom processing block optimized for it. The block extracts time and frequency features from the signal and performs well for speech recognition in multiple languages. Once this custom processing block was imported into HP’s Edge Impulse organization, anyone on their ML team could use it, running it either locally or in the cloud within Edge Impulse. This made collaboration easy among engineers working on the separate projects for each KWS language.
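HP’s custom block is not public, so as a stand-in the following sketch computes log mel-filterbank energies, a standard time-frequency representation for speech that is similar in spirit to the Audio MFE block. The frame length (25 ms), stride (10 ms), FFT size (512), and 40 filters are common defaults assumed here, not HP’s settings, and the Edge Impulse processing-block interface is omitted.

```python
import numpy as np

def hz_to_mel(f):
    return 2595.0 * np.log10(1.0 + f / 700.0)

def mel_to_hz(m):
    return 700.0 * (10.0 ** (m / 2595.0) - 1.0)

def mel_filterbank(n_filters, n_fft, sample_rate, f_low=0.0, f_high=None):
    """Triangular filters evenly spaced on the mel scale, shape (n_filters, n_fft//2+1)."""
    f_high = f_high or sample_rate / 2
    mels = np.linspace(hz_to_mel(f_low), hz_to_mel(f_high), n_filters + 2)
    bins = np.floor((n_fft + 1) * mel_to_hz(mels) / sample_rate).astype(int)
    fb = np.zeros((n_filters, n_fft // 2 + 1))
    for i in range(1, n_filters + 1):
        left, center, right = bins[i - 1], bins[i], bins[i + 1]
        for k in range(left, center):  # rising edge of the triangle
            fb[i - 1, k] = (k - left) / max(center - left, 1)
        for k in range(center, right):  # falling edge of the triangle
            fb[i - 1, k] = (right - k) / max(right - center, 1)
    return fb

def log_mel_energies(signal, sample_rate=16000, frame_ms=25, stride_ms=10,
                     n_fft=512, n_filters=40):
    """Return a (frames x filters) matrix of log mel-band energies."""
    signal = np.asarray(signal, dtype=np.float32)
    frame_len = int(sample_rate * frame_ms / 1000)
    stride = int(sample_rate * stride_ms / 1000)
    n_frames = 1 + (len(signal) - frame_len) // stride
    window = np.hamming(frame_len)
    # Slice overlapping windowed frames (time axis).
    frames = np.stack([signal[i * stride : i * stride + frame_len] * window
                       for i in range(n_frames)])
    # Power spectrum per frame (frequency axis), then pool into mel bands.
    power = np.abs(np.fft.rfft(frames, n=n_fft)) ** 2 / n_fft
    energies = power @ mel_filterbank(n_filters, n_fft, sample_rate).T
    return np.log(energies + 1e-10)  # small floor avoids log(0)
```

Each row is one time slice and each column one frequency band, so a one-second clip becomes a small two-dimensional array that a convolutional classifier can consume.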

After the features were extracted from the dataset, HP needed to create a neural network classifier. A neural network classifier takes input data and outputs, for each class, a probability score indicating how likely it is that the input belongs to that class. HP used Edge Impulse’s GPU training infrastructure to iterate quickly through different datasets, processing blocks, and neural network architectures. Within weeks, they had generated models for all eleven languages, most of them with over 98% accuracy and all fitting well within their latency, RAM, and flash memory requirements.
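To make "a probability score for each class" concrete, here is a minimal sketch of the final stage of such a classifier: a dense layer followed by softmax. The class labels are hypothetical placeholders, and a real KWS model would put convolutional layers in front of this stage. The `accuracy` helper shows how a figure like the 98% above is computed on a labeled test set.

```python
import numpy as np

LABELS = ["accept", "decline", "noise", "unknown"]  # hypothetical class labels

def softmax(logits):
    """Convert raw scores to probabilities that sum to 1."""
    z = np.asarray(logits, dtype=np.float64)
    z = z - z.max()  # subtract the max for numerical stability
    e = np.exp(z)
    return e / e.sum()

def classify(features, weights, bias):
    """Dense layer + softmax, the output stage of a typical classifier.

    features: feature matrix or vector (flattened before the dense layer)
    weights:  (n_features, n_classes) matrix learned during training
    bias:     (n_classes,) vector learned during training
    """
    logits = np.asarray(features).ravel() @ weights + bias
    return dict(zip(LABELS, softmax(logits)))

def accuracy(predicted, actual):
    """Fraction of test samples whose top-scoring label matches the truth."""
    return sum(p == a for p, a in zip(predicted, actual)) / len(actual)
```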

Edge Impulse’s Performance Calibration tool was also used to test, fine-tune, and simulate the KWS models on continuous streams of real-world and synthetically generated data. This gave HP a specific post-processing configuration that tailors each model to minimize either false activations (False Alarm Rate, FAR) or false rejections (False Rejection Rate, FRR).
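Performance Calibration’s internals are not described in the article, but the kind of post-processing it tunes can be sketched as follows: average the keyword probability over a few recent frames, trigger when the average crosses a threshold, then suppress further triggers briefly. The function names, parameters, and the FAR/FRR definitions used here (false activations per hour of audio, and the fraction of true keywords missed) are assumptions chosen for illustration.

```python
import numpy as np

def detect(keyword_probs, window=4, threshold=0.8, suppress=25):
    """Turn a stream of per-frame keyword probabilities into discrete detections.

    window:    number of recent frames averaged to smooth out spikes
    threshold: averaged probability that triggers a detection
    suppress:  frames ignored after a trigger so one utterance fires once
    Returns the frame indices where the keyword is reported.
    """
    probs = np.asarray(keyword_probs, dtype=np.float64)
    detections, cooldown = [], 0
    for i in range(len(probs)):
        if cooldown > 0:
            cooldown -= 1
            continue
        avg = probs[max(0, i - window + 1) : i + 1].mean()
        if avg >= threshold:
            detections.append(i)
            cooldown = suppress
    return detections

def far_frr(detections, true_events, tolerance, hours):
    """FAR as false activations per hour; FRR as the fraction of missed keywords.

    A detection within `tolerance` frames of a not-yet-matched true event is a
    hit; every other detection counts as a false activation.
    """
    unmatched = list(true_events)
    false_activations = 0
    for d in detections:
        match = next((e for e in unmatched if abs(d - e) <= tolerance), None)
        if match is None:
            false_activations += 1
        else:
            unmatched.remove(match)
    far = false_activations / hours
    frr = len(unmatched) / len(true_events) if true_events else 0.0
    return far, frr
```

On a synthetic stream containing two true keywords and a one-frame noise spike, `window=1` fires on the spike (raising FAR), while a 4-frame window ignores it; raising `threshold` far enough starts missing the quieter keyword (raising FRR). Sweeping these parameters against recorded streams is the kind of trade-off a calibration step explores.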

Once HP’s team was confident that the model performance metrics looked good on paper, it was time to try the models on-device. They generated TensorFlow Lite models in Edge Impulse and integrated them into their own codebase. Once the firmware engineers had tested the models thoroughly, all the language models were uploaded to HP’s own public-facing app, which is available on the web, mobile, and desktop and can update the headset’s firmware over-the-air (OTA).
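The article doesn’t say whether the exported TensorFlow Lite models are quantized. If they are int8-quantized, as is common for microcontroller targets, the firmware must convert float features to integers before inference and convert the outputs back, using the scale and zero point stored in the model. The sketch below shows that affine mapping (real = scale × (q − zero_point)) in Python for clarity; on the headset, the same arithmetic would presumably live in the C/C++ firmware.

```python
import numpy as np

def quantize(x, scale, zero_point, dtype=np.int8):
    """Map float values to integers: q = round(x / scale) + zero_point, clipped."""
    info = np.iinfo(dtype)
    q = np.round(np.asarray(x, dtype=np.float32) / scale) + zero_point
    return np.clip(q, info.min, info.max).astype(dtype)

def dequantize(q, scale, zero_point):
    """Recover approximate floats: x = scale * (q - zero_point)."""
    return scale * (np.asarray(q, dtype=np.float32) - zero_point)
```

When checking a model on a desktop with TensorFlow’s `tf.lite.Interpreter`, these parameters can be read from `interpreter.get_input_details()[0]["quantization"]`, which holds the `(scale, zero_point)` pair for the input tensor.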

When the feature is enabled in the app, the model matching the language set on the device is loaded onto the headset via OTA. If the user later switches languages, the new language’s model is uploaded to the headset the same way.
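The language-driven OTA behavior can be sketched as a small piece of app-side state: remember which model is installed, and push a new one only when voice control is enabled and the device language maps to a different model. The language codes, file names, and the `send_ota` callback are hypothetical; the article does not list the eleven languages or describe the OTA protocol.

```python
# Hypothetical mapping from device language to a KWS model file.
MODELS = {
    "en-US": "kws_en_us.tflite",
    "de-DE": "kws_de_de.tflite",
    "fr-FR": "kws_fr_fr.tflite",
}

class KwsModelManager:
    """Tracks the model on the headset and pushes a new one only when needed."""

    def __init__(self, send_ota):
        self.send_ota = send_ota  # hypothetical callback that pushes a file OTA
        self.installed = None     # model currently on the headset
        self.enabled = False

    def _sync(self, language):
        wanted = MODELS.get(language)
        if wanted is None:
            raise ValueError(f"no keyword model for language {language!r}")
        if wanted != self.installed:  # skip redundant OTA transfers
            self.send_ota(wanted)
            self.installed = wanted

    def enable(self, language):
        """User turns on voice control: load the model for the current language."""
        self.enabled = True
        self._sync(language)

    def on_language_changed(self, language):
        """User switches language: push the matching model if the feature is on."""
        if self.enabled:
            self._sync(language)
```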

The integration of Edge Impulse's AI technology into HP’s earbuds and headsets represents a significant step in embedded, hands-free voice control. By leveraging on-device keyword detection, this approach provides low latency, independence from network connectivity, and strong security for the interface. This innovation not only enhances the user experience but also highlights the opportunity to incorporate edge AI into modern consumer electronics.

The successful deployment of this technology, enabled by Edge Impulse's robust platform, showcases the potential of AI to transform everyday devices. This collaboration sets a new standard for what is possible with voice control, promising even more advancements in the realm of smart, intuitive, and user-friendly solutions.

Author Bio:

Ashvin Roharia has over 10 years of experience in the semiconductor industry, as a firmware engineer at companies such as Intel, AMD, and Silicon Labs. He is currently a solutions engineer at Edge Impulse, where he collaborates with customers to develop edge AI applications, including HP’s keyword-detection solution. He has also implemented other customer AI solutions in computer vision and multi-sensor applications, optimized for edge hardware ranging from MCUs to CPUs to GPUs.

Ashvin holds a B.S. in Computer and Electrical Engineering from the University of Texas at Austin, where he is based, and an M.S. in Computer Science from Georgia Tech. Connect with him at forum.edgeimpulse.com.