It has been estimated that a typical American spends a full six months of their life waiting for one thing or another. That waiting could be in rush-hour traffic, at an airport security checkpoint, or in the checkout line at a grocery store. One thing all of these scenarios have in common is that nobody likes them. We all hate to wait! This is not just a commentary on human psychology; it has very practical implications for businesses, especially in the retail sector. A recent survey revealed that 70% of people are unwilling to wait more than fifteen minutes in a line. And the problem may grow over time, as younger respondents indicated that they were more willing to leave a line before their turn if the wait was too long.

Needless to say, having customers abandon their purchases in the checkout line is not good for the bottom line. Customer satisfaction also plummets when service is seen as inadequate. Many retailers are currently struggling with staffing issues that make it difficult, or impossible, to provide the level of service needed during peak hours. That may not always be as big a problem as it seems, says engineer Solomon Githu. He has designed a system that helps retail checkouts make the most of the resources they do have. Using an object detection pipeline built with Edge Impulse, he has demonstrated how customers can be redirected from registers with long lines to those with shorter wait times.

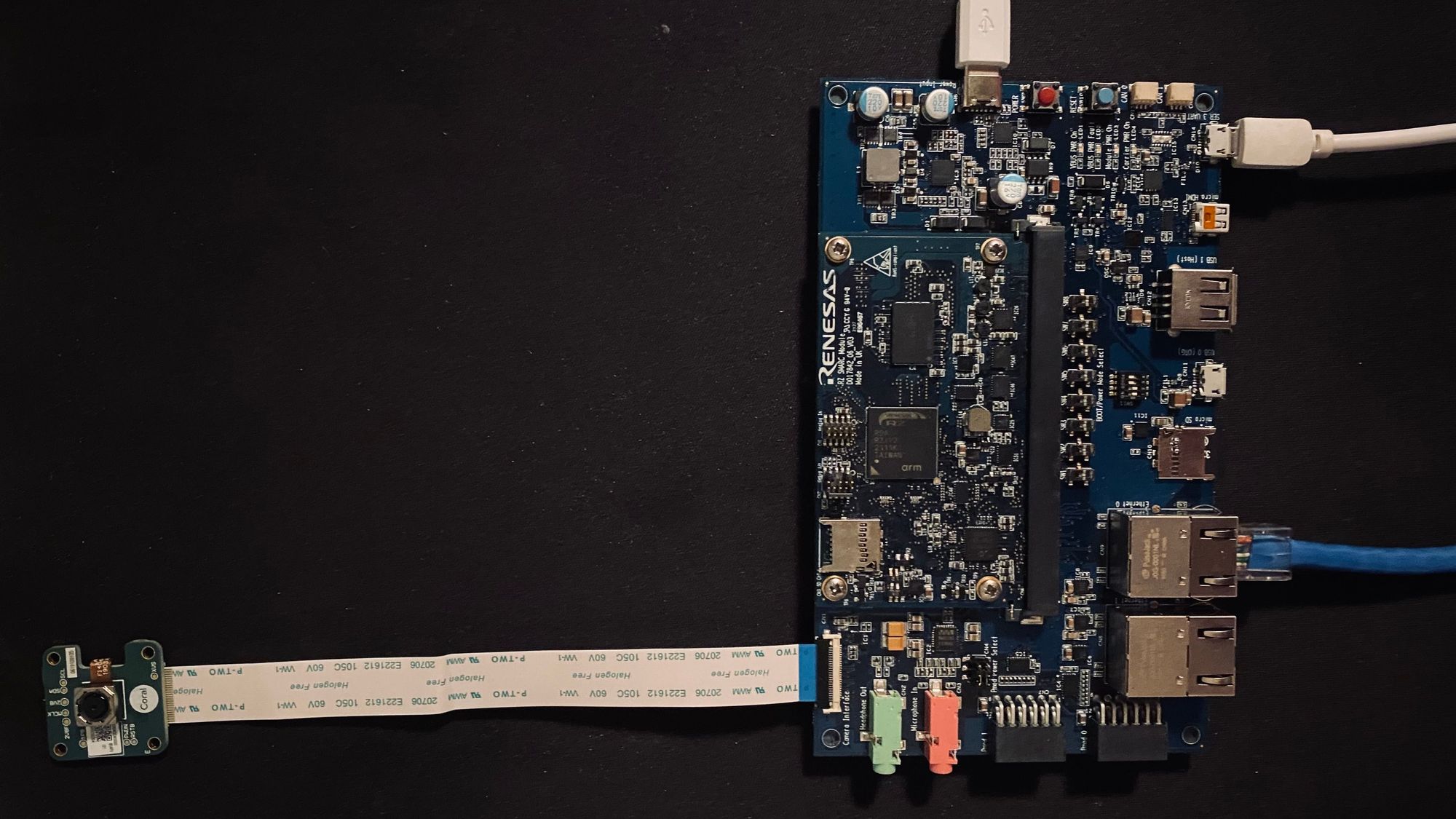

Githu’s idea was to leverage the security cameras that already exist in most checkout areas. By feeding those images into a machine learning model, he reasoned, it would be possible to count the number of people in each checkout line. With that information, balancing customers across the registers becomes a relatively simple matter of writing a bit of logic. The powerful Renesas RZ/V2L Evaluation Kit was chosen for this computer vision task. The board offers a dual-core Arm Cortex-A55 CPU and an Arm Cortex-M33 CPU, along with 2 GB of SDRAM and 64 GB of eMMC storage. Its built-in DRP-AI accelerator provides considerable speed-ups for computer vision applications. To prove the concept, a five-megapixel Google Coral Camera was used to capture images, but in a real deployment it could be replaced by an existing surveillance system.
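
Once the model returns bounding boxes, counting people per line can be as simple as checking which lane each box falls in. The sketch below assumes, hypothetically, that each checkout line occupies a fixed vertical strip of the camera frame and that a person belongs to the lane containing the center of their box; `Box`, `Lane`, and `count_per_lane` are illustrative names, not part of Githu's code or any Edge Impulse API.

```python
from dataclasses import dataclass


@dataclass
class Box:
    """A detected person, in pixel coordinates with a top-left origin."""
    x: float
    y: float
    width: float
    height: float


@dataclass
class Lane:
    """A checkout line, modeled (hypothetically) as a vertical strip of the frame."""
    name: str
    x_min: float
    x_max: float


def count_per_lane(boxes: list[Box], lanes: list[Lane]) -> dict[str, int]:
    """Assign each person to the lane that contains the center of their box."""
    counts = {lane.name: 0 for lane in lanes}
    for box in boxes:
        cx = box.x + box.width / 2
        for lane in lanes:
            if lane.x_min <= cx < lane.x_max:
                counts[lane.name] += 1
                break  # a person belongs to at most one lane
    return counts


lanes = [Lane("register 1", 0, 320), Lane("register 2", 320, 640)]
boxes = [Box(10, 100, 60, 200), Box(100, 120, 50, 180), Box(400, 90, 70, 210)]
print(count_per_lane(boxes, lanes))  # {'register 1': 2, 'register 2': 1}
```

Boxes whose centers fall outside every lane are simply ignored, which keeps shoppers who are merely walking past a checkout area from inflating the counts.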

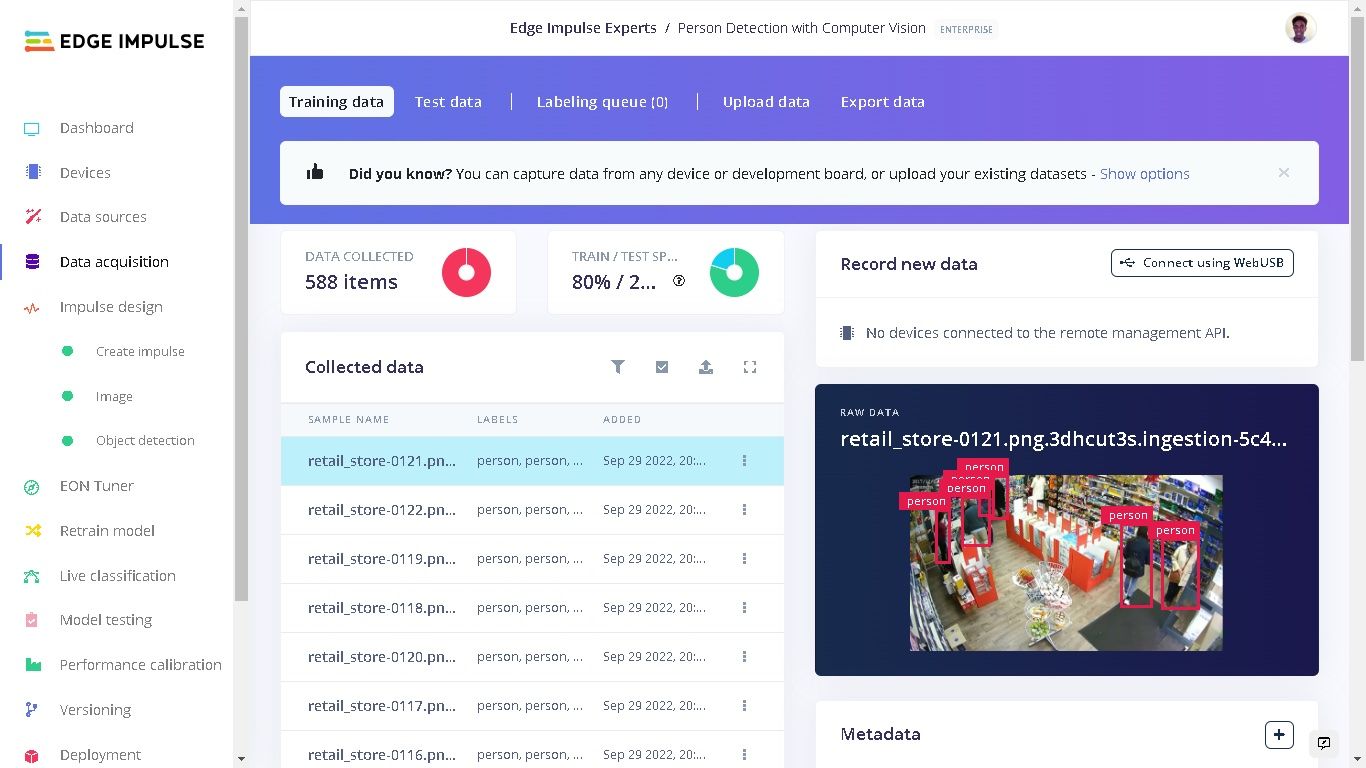

Before the model could be built, a training dataset had to be created. Images of people were gathered from public datasets on Kaggle and other sources. They included retail settings as well as a variety of other environments, to help the model generalize as well as possible. In total, 588 images were set aside for training and another 156 for testing. The final set of images was uploaded to Edge Impulse Studio using the data acquisition tool.
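
The 588/156 division works out to roughly a 79/21 train/test split of 744 images. Edge Impulse Studio can also split uploaded data for you; if you were assembling such a set yourself beforehand, a reproducible hold-out could look like the minimal sketch below. The file names and the `split_dataset` helper are hypothetical.

```python
import random


def split_dataset(files: list[str], n_test: int, seed: int = 0) -> tuple[list[str], list[str]]:
    """Shuffle once with a fixed seed, then hold out n_test files for testing."""
    if not 0 <= n_test <= len(files):
        raise ValueError("n_test must be between 0 and len(files)")
    shuffled = list(files)
    random.Random(seed).shuffle(shuffled)  # fixed seed -> same split every run
    return shuffled[n_test:], shuffled[:n_test]


files = [f"img_{i:04d}.jpg" for i in range(744)]
train, test = split_dataset(files, n_test=156)
print(len(train), len(test))  # 588 156
print(f"{len(train) / len(files):.1%} train")  # 79.0% train
```

Fixing the seed means the same images stay in the test set across runs, so test accuracy is never measured on images the model has already trained on.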

The model also needs to know where the people are in each image, which means a bounding box must be drawn around every person in every image. Yes, in all 744 of them. Talk about waiting! Fortunately, Edge Impulse has an AI-assisted labeling tool that does most of the work for you. It draws the boxes automatically, so only an occasional adjustment is needed. Githu noted that this tool accurately labeled about 90% of samples.
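
Each label is simply a class plus a rectangle. Annotation tools typically store a box as a top-left corner with a width and height in pixels, while YOLOv5 training labels use a class index followed by the box center, width, and height, all normalized to the 0-1 range. The conversion below is a minimal sketch of that mapping; the write-up does not describe how Edge Impulse handles it internally, and `to_yolo` is an illustrative name.

```python
def to_yolo(x: float, y: float, w: float, h: float,
            img_w: int, img_h: int, class_id: int = 0) -> str:
    """Convert a top-left (x, y, width, height) pixel box to a YOLO label line.

    YOLO label lines read: class x_center y_center width height,
    with every coordinate normalized to the 0-1 range.
    """
    xc = (x + w / 2) / img_w
    yc = (y + h / 2) / img_h
    return f"{class_id} {xc:.6f} {yc:.6f} {w / img_w:.6f} {h / img_h:.6f}"


# A hypothetical person box in a 640 x 640 image.
print(to_yolo(100, 200, 64, 160, 640, 640))
# 0 0.206250 0.437500 0.100000 0.250000
```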

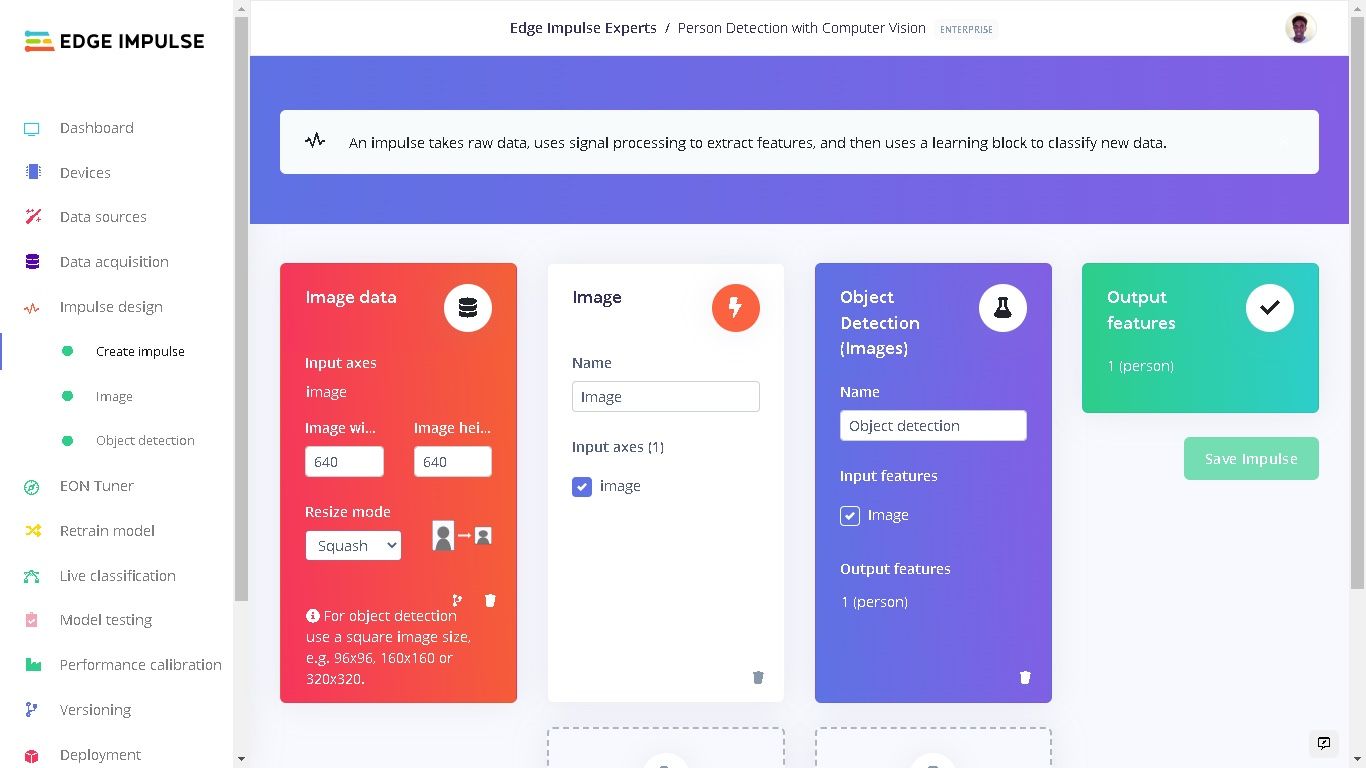

An impulse was created that extracts features from the 640 x 640 pixel input images, then feeds those features into an object detection neural network. Githu already had a YOLOv5 model that he wanted to use for this project, so he used Edge Impulse Studio’s bring your own model feature. This allowed him to leverage the knowledge already contained in the existing model, then further train it for the person detection task at hand. With the pipeline ready, training was kicked off with the click of a button.
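
Camera frames rarely arrive at exactly 640 x 640, so they must be resized before inference and the detected boxes mapped back to the original frame afterwards. The write-up does not say which resize mode was used; the sketch below assumes YOLOv5-style letterboxing (scale to fit, then pad the remainder), uses 2592 x 1944 as an example five-megapixel resolution, and the helpers `letterbox_params` and `to_source_coords` are hypothetical.

```python
def letterbox_params(src_w: int, src_h: int, dst: int = 640) -> tuple[float, int, int]:
    """Scale factor and left/top padding that fit an image into a dst x dst square."""
    r = min(dst / src_w, dst / src_h)  # shrink so the longer side fits
    new_w, new_h = round(src_w * r), round(src_h * r)
    pad_left = (dst - new_w) // 2
    pad_top = (dst - new_h) // 2
    return r, pad_left, pad_top


def to_source_coords(x: float, y: float, r: float, pad_left: int, pad_top: int) -> tuple[float, float]:
    """Map a point from the padded model input back to the original frame."""
    return (x - pad_left) / r, (y - pad_top) / r


# A 2592 x 1944 frame shrinks to 640 x 480, with 80 px bars above and below.
r, left, top = letterbox_params(2592, 1944)
print(round(r, 4), left, top)  # 0.2469 0 80
print(tuple(round(v, 1) for v in to_source_coords(320, 320, r, left, top)))  # (1296.0, 972.0)
```

Letterboxing preserves the aspect ratio, so people are not stretched, at the cost of some wasted input pixels in the padding bars.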

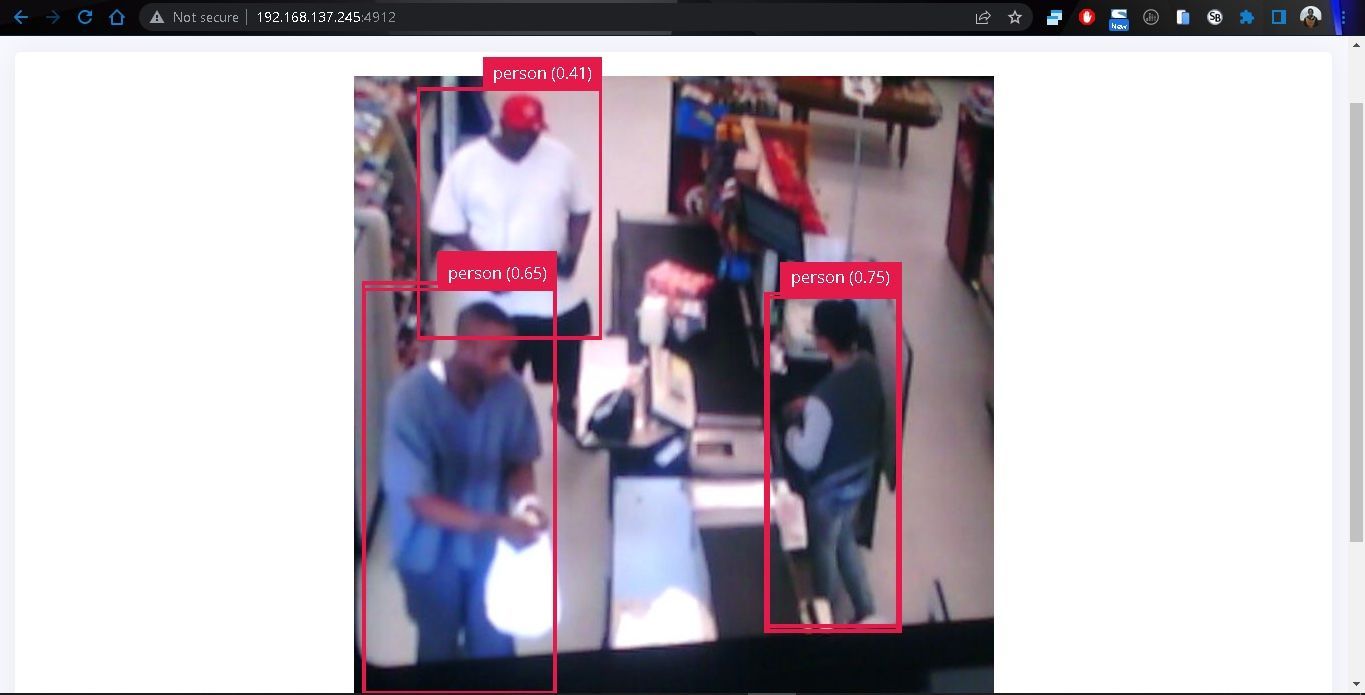

After training had completed, the model testing tool was used to check the model’s accuracy against the 156 held-out test images, which had not been used during training. The model achieved an accuracy of better than 85%, which is more than sufficient for a prototype. As a further check, the live classification tool was launched, making it possible to examine exactly what the model was labeling as people. The results matched Githu’s expectations, so the model was ready to be deployed to the physical hardware.
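
Object detection accuracy is usually judged by matching predicted boxes against hand-labeled ones. The write-up does not specify how the 85% figure was computed; the sketch below shows one common approach, counting a prediction as correct when its intersection over union (IoU) with a not-yet-matched ground-truth box is at least 0.5. It ignores confidence ordering, which a full mean average precision (mAP) calculation would include.

```python
Box = tuple[float, float, float, float]  # (x, y, width, height) in pixels


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix = max(0.0, min(a[0] + a[2], b[0] + b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[1] + a[3], b[1] + b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = a[2] * a[3] + b[2] * b[3] - inter
    return inter / union if union > 0 else 0.0


def precision_recall(preds: list[Box], truths: list[Box], thresh: float = 0.5) -> tuple[float, float]:
    """Greedily match each prediction to its best unmatched ground-truth box."""
    unmatched = list(truths)
    tp = 0
    for p in preds:
        best = max(unmatched, key=lambda t: iou(p, t), default=None)
        if best is not None and iou(p, best) >= thresh:
            unmatched.remove(best)  # each ground-truth box can be matched once
            tp += 1
    precision = tp / len(preds) if preds else 1.0
    recall = tp / len(truths) if truths else 1.0
    return precision, recall


truths = [(0, 0, 100, 200), (300, 0, 100, 200)]
preds = [(10, 10, 100, 200), (600, 0, 50, 50)]  # one good hit, one false alarm
print(precision_recall(preds, truths))  # (0.5, 0.5)
```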

The Renesas RZ/V2L Evaluation Kit is fully supported by Edge Impulse, so deployment was as easy as installing the Edge Impulse CLI on the board. The CLI connects the board to an Edge Impulse Studio project and downloads the trained model locally. It also includes an application that displays inference results in a web browser in real time. Githu used this tool to get the machine learning pipeline up and running quickly.
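
Whatever displays the results, an application built on top of them ultimately needs a count of confident detections. The sketch below assumes a result dictionary shaped like the one printed by the Edge Impulse Linux Python SDK examples (a `bounding_boxes` list whose entries carry `label`, `value`, `x`, `y`, `width`, and `height`); check the SDK documentation before relying on that structure. The 0.5 confidence threshold, the `person` label name, and the `count_people` helper are all assumptions.

```python
def count_people(result: dict, min_confidence: float = 0.5, label: str = "person") -> int:
    """Count bounding boxes with the given label at or above min_confidence."""
    # Assumed shape: {"result": {"bounding_boxes": [{"label": ..., "value": ...}, ...]}}
    boxes = result.get("result", {}).get("bounding_boxes", [])
    return sum(1 for bb in boxes
               if bb.get("label") == label and bb.get("value", 0.0) >= min_confidence)


sample = {
    "result": {
        "bounding_boxes": [
            {"label": "person", "value": 0.91, "x": 24, "y": 40, "width": 56, "height": 160},
            {"label": "person", "value": 0.32, "x": 300, "y": 52, "width": 48, "height": 150},
            {"label": "person", "value": 0.77, "x": 410, "y": 38, "width": 60, "height": 170},
        ]
    }
}
print(count_people(sample))  # 2
```

Raising the threshold trades missed people for fewer false alarms; for queue counting, a moderate value is usually a reasonable starting point to tune against live footage.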

As a finishing touch, a web application was developed that uses the inference results to count the number of people in each checkout line at a retail store. Busy lines are marked with a red indicator, and short lines with a green one. This information could be used to direct customers to shorter lines and make the most efficient use of the registers available.
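
The red/green indicator boils down to a threshold on each line’s count. The project does not state what count qualifies as busy, so `busy_threshold=4` below is a placeholder, and `lane_status` and `suggest_lane` are hypothetical helpers rather than code from Githu’s web application.

```python
def lane_status(counts: dict[str, int], busy_threshold: int = 4) -> dict[str, str]:
    """Mark a lane 'red' when it has at least busy_threshold people, else 'green'."""
    return {name: "red" if n >= busy_threshold else "green" for name, n in counts.items()}


def suggest_lane(counts: dict[str, int]) -> str:
    """Recommend the lane with the fewest people (ties go to the first one listed)."""
    if not counts:
        raise ValueError("no lanes to choose from")
    return min(counts, key=counts.get)


counts = {"register 1": 6, "register 2": 1, "register 3": 4}
print(lane_status(counts))
# {'register 1': 'red', 'register 2': 'green', 'register 3': 'red'}
print(suggest_lane(counts))  # register 2
```

A single fixed threshold is the simplest choice; a store could instead compare each line against the shortest one, so that a line only turns red when redirecting a customer would actually save them time.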

From his past work in the field, Githu knows that computer vision applications are difficult to get right and challenging to implement. He noted, however, that Edge Impulse Studio made the process surprisingly easy. Its point-and-click interface and extensive documentation made collecting data and training the model a simple task. See what else Githu had to say about the platform, and how it simplified creating the prototype, in his project documentation.
