Running machine learning (ML) models on microcontrollers is one of the most exciting developments of recent years, allowing small battery-powered devices to detect complex motions, recognize sounds, or find anomalies in sensor data. To deploy these models with the lowest possible power consumption, we've partnered with Eta Compute to bring Edge Impulse support to their ECM3532 Tensai SoC and the Eta Compute ECM3532 AI Sensor development board.

The ECM3532 is a very exciting SoC for ML applications. It combines a general-purpose Arm Cortex-M3 microcontroller with a CoolFlux DSP that speeds up machine learning operations, and it supports Continuous Voltage Frequency Scaling, which scales the clock rate and supply voltage at runtime to maximize energy efficiency. This combination yields significant power savings over conventional MCUs, making it possible to continuously run algorithms like gesture recognition, vibration analysis, or keyword spotting at under 1 mW, and image recognition at just a few mW.
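Why scaling voltage together with frequency matters can be seen from the standard first-order model of CMOS dynamic power. This is a textbook approximation, not an Eta Compute specification, and it ignores leakage. With switching activity α, switched capacitance C, supply voltage V, and clock frequency f:

```latex
P_{\text{dyn}} = \alpha C V^{2} f,
\qquad
E_{\text{cycle}} = \frac{P_{\text{dyn}}}{f} = \alpha C V^{2},
\qquad
\frac{E_{\text{cycle}}(0.7\,V)}{E_{\text{cycle}}(V)} = 0.7^{2} = 0.49
```

Lowering the clock alone reduces power but not the energy spent per cycle; lowering the voltage along with it is what reduces energy per operation, quadratically. The 0.7 factor is purely illustrative.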

But hardware is only one part of a machine learning application. Building a high-quality dataset, extracting the right features, and then training and deploying models is still a complicated process. To make that easier, we've added full support for both the chip family and the ECM3532 AI Sensor development board to Edge Impulse.

Using Edge Impulse, you can now quickly collect real-world sensor data, train ML models on this data in the cloud, and then deploy the model back to your AI Sensor. From there, you can integrate the model into your applications with a single function call. Your sensors then become a whole lot smarter, able to make sense of complex events in the real world. The built-in examples let you collect data from the accelerometer and the microphone, and it's easy to integrate other sensors with a few lines of code.
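Time-series models in Edge Impulse typically classify a fixed-length window of raw samples, so integrating another sensor mostly means filling that window with readings. The sketch below is written in Python for brevity; on the AI Sensor this logic would live in C/C++ firmware. The `SampleWindow` class, the 3-axis layout, and the window length are hypothetical choices for illustration, not part of the Edge Impulse SDK.

```python
from collections import deque


class SampleWindow:
    """Hypothetical fixed-length buffer of interleaved multi-axis samples.

    Each call to push() adds one reading (e.g. x, y, z from an accelerometer).
    Once `length` readings are buffered, features() returns them flattened
    into a single list: [x0, y0, z0, x1, y1, z1, ...].
    """

    def __init__(self, length, axes):
        self.length = length
        self.axes = axes
        # A deque with maxlen drops the oldest reading automatically,
        # so the buffer always holds the most recent `length` readings.
        self.readings = deque(maxlen=length)

    def push(self, reading):
        if len(reading) != self.axes:
            raise ValueError(f"expected {self.axes} values, got {len(reading)}")
        self.readings.append(tuple(reading))

    def is_full(self):
        return len(self.readings) == self.length

    def features(self):
        if not self.is_full():
            raise RuntimeError("window not full yet")
        # Flatten oldest-to-newest, keeping each reading's axes together.
        return [value for reading in self.readings for value in reading]


if __name__ == "__main__":
    window = SampleWindow(length=4, axes=3)
    # Made-up readings standing in for a real sensor driver.
    for t in range(6):
        window.push((t, t * 0.5, -t))
    print(window.is_full())       # True
    print(window.features()[:3])  # oldest buffered reading: [2, 1.0, -2]
```

Using a sliding window (rather than clearing the buffer after each inference) lets the application classify overlapping windows, which is a common choice for continuous motion detection.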

How to get started

Excited? This is how you build your first machine learning model with the Eta Compute ECM3532 AI Sensor:

- Get an ECM3532 AI Sensor Board. You can either apply to win a free board by registering for our workshop (see below), or buy the board on Digi-Key.

- Connect the AI Sensor to Edge Impulse.

- Follow one of the tutorials on:

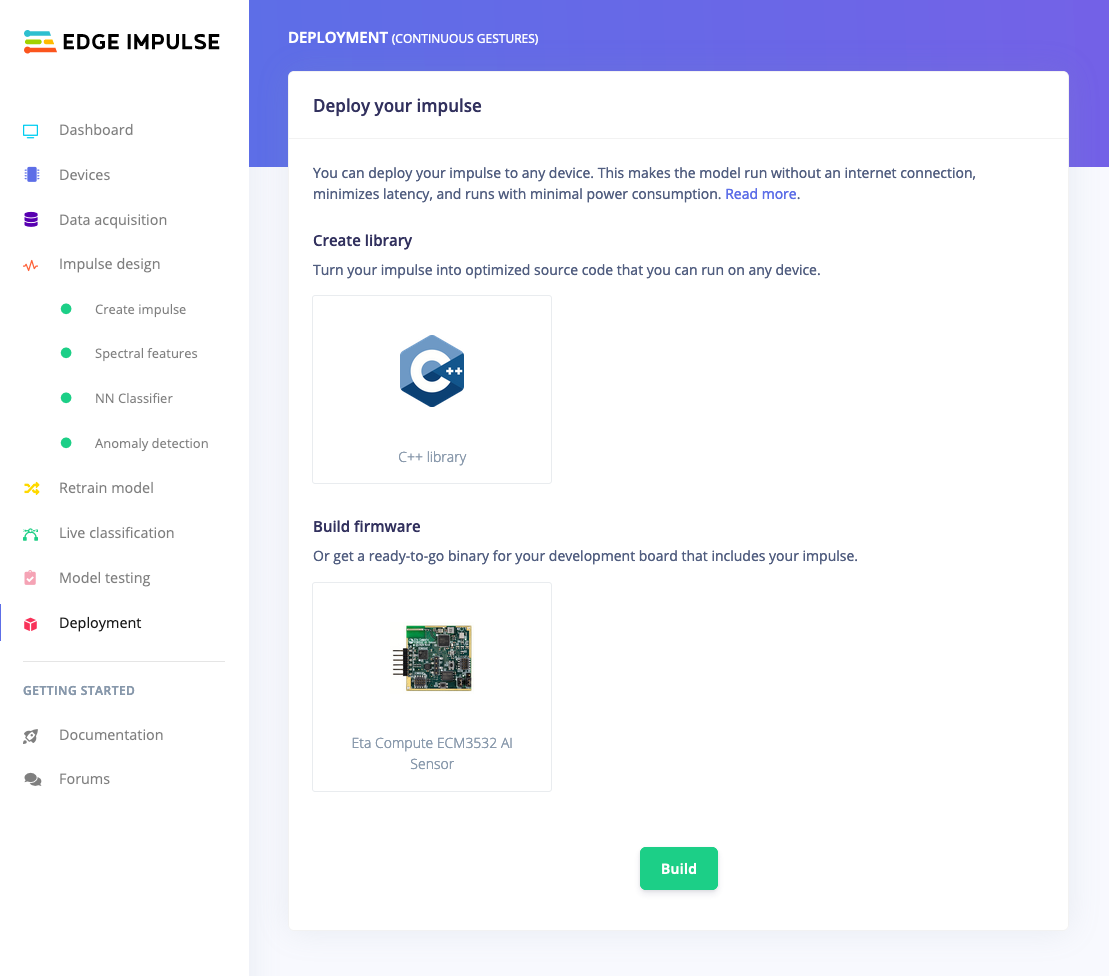

- Go to the Deployment tab in Edge Impulse and build a new binary for the AI Sensor that includes your machine learning model, or export the model as a C++ library and integrate it into your own firmware.

Voila! You now have an efficient machine learning model running with minimal power consumption.
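In the exported C++ library, inference is a single call to `run_classifier()`, which, for a classification model, fills a result structure with one confidence score per label. What the application does with those scores is up to you; one common pattern is to act only on the top label when it clears a confidence threshold. The Python sketch below illustrates that post-processing step (in firmware it would be a few lines of C++); the `top_prediction` helper, the labels, and the 0.6 threshold are hypothetical.

```python
def top_prediction(scores, threshold=0.6):
    """Return (label, score) for the highest-scoring class, or None if
    no class reaches `threshold`. `scores` maps label -> confidence."""
    if not scores:
        return None
    label = max(scores, key=scores.get)
    score = scores[label]
    return (label, score) if score >= threshold else None


if __name__ == "__main__":
    # Illustrative scores only, not output from a real model.
    print(top_prediction({"idle": 0.05, "wave": 0.85, "updown": 0.10}))
    # ('wave', 0.85)
    print(top_prediction({"idle": 0.40, "wave": 0.35, "updown": 0.25}))
    # None
```

Returning `None` for low-confidence windows keeps the application from reacting to ambiguous input; the right threshold depends on the model and use case.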

Looking forward

This marks the first release for the ECM3532 in Edge Impulse, with full support for the DSP (lowering latency and power consumption even further) coming later this summer. We're very excited to see a new generation of MCUs and SoCs with ML co-processors being released. This is a great step forward for the embedded industry: devices can now detect increasingly complex sensor events at the very edge, at the lowest possible power consumption.

Want to learn more? We're hosting a technical workshop with Eta Compute on building your first ML model using the ECM3532 AI Sensor on July 14th, and we're giving away 50 development kits. Register for free here!

--

Jan Jongboom is the CTO and co-founder of Edge Impulse.