Staffing shortages have caused many serious problems in numerous professions throughout the past year, and healthcare has been no exception. This area has been especially hard hit with respect to staffing of nurses — the U.S. Bureau of Labor Statistics reported that 275,000 additional nurses will be needed by the end of the decade. No doubt about it, this is not just a problem for large healthcare systems to deal with; it causes significant problems for patients as well. It is feared that dangerous patient care practices and poor outcomes will become more common if this situation is not remedied soon.

Addressing the root causes behind staffing shortages is a complicated affair and may take many years to effect real change. But this is not a problem that can wait that long for a solution, so what do we do now? One way that we may be able to fill the gap is through clever uses of technology that help current staff members to be more efficient, and effectively do the job of more than one person. Machine learning enthusiast Adam Milton-Barker reasoned that if healthcare providers had fewer distractions, they might be able to accomplish more real work during their shifts. To this end, he developed a proof of concept system that was designed to reduce time wasted making unnecessary rounds, instead directing the attention of hospital staff to where it is actually needed.

With the help of Edge Impulse, Milton-Barker developed a machine learning algorithm that can recognize the keywords “doctor,” “nurse,” and “help” in spoken language. By optimizing this classification pipeline for edge computing devices, it was possible to run it on a small, low-power, inexpensive microcontroller that has the potential to be installed in every room of a healthcare facility that is used to house patients. With such a device installed in each room, requests for assistance can be passively detected, then wirelessly transmitted to a central server. By monitoring these requests, staff can be dispatched exactly where they are needed, when they are needed.

As the hardware platform for this device, Milton-Barker chose the Arduino Nano 33 BLE Sense development board. This tiny board comes standard with a 32-bit Arm Cortex-M4 MCU running at 64 MHz and 256 KB of SRAM. Wireless communications are provided via a Bluetooth Low Energy radio that is ideal for communicating inference results from the machine learning algorithm. In addition to a slew of other sensors, there is also a microphone, which makes the Nano 33 BLE Sense an all-in-one solution for this project.

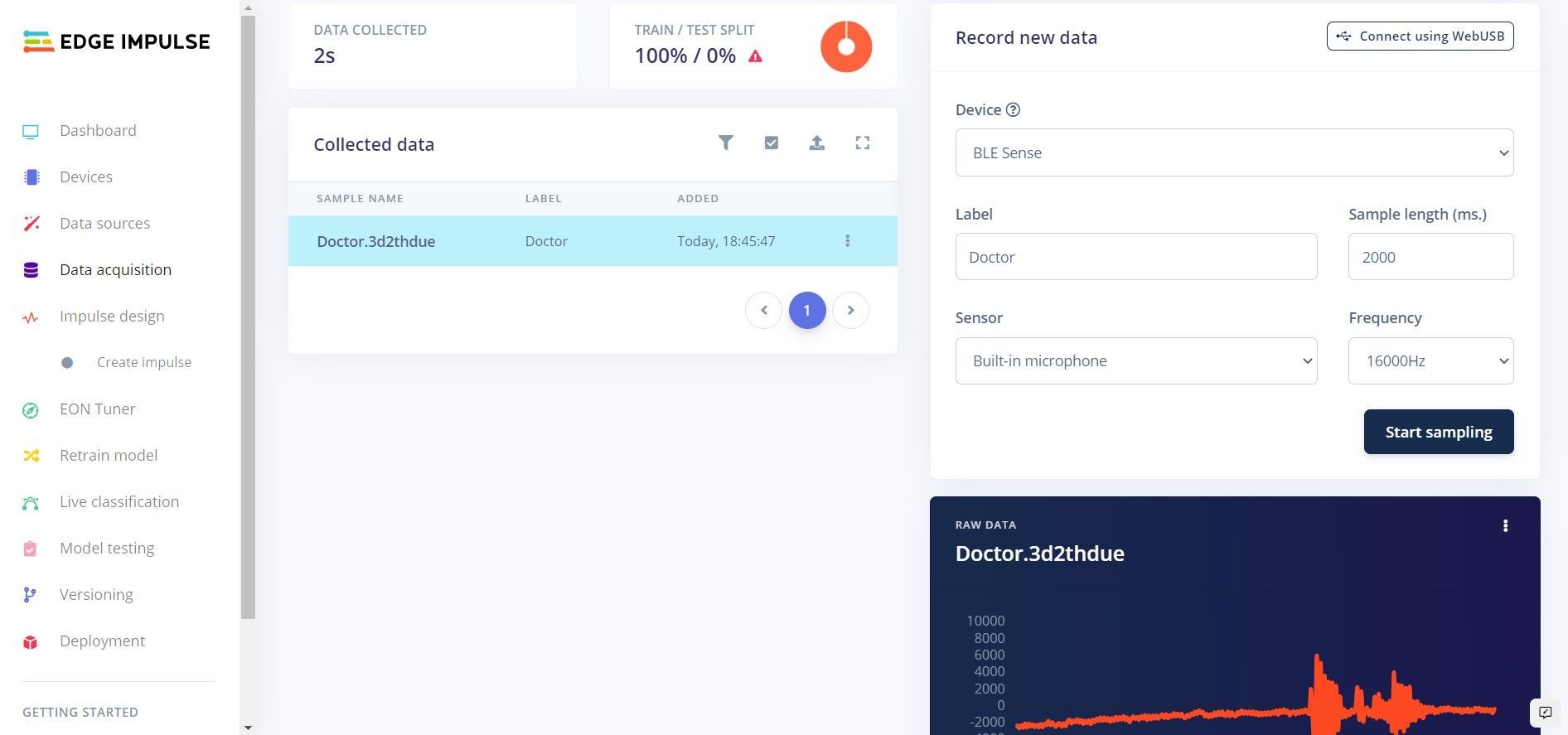

To teach the algorithm to understand the keywords, Milton-Barker recorded a custom dataset. He accomplished this by first linking the Arduino to his Edge Impulse project, which allowed him to record utterances with the onboard microphone and have them automatically uploaded to Edge Impulse. A total of 150 repetitions of each keyword were spoken during the data collection process. To make the model more robust, a “noise” class was also recorded and combined with data from the Microsoft Scalable Noisy Speech Dataset. As a final step, random speech samples were extracted from this same Microsoft speech dataset and used to create an “unknown” class.

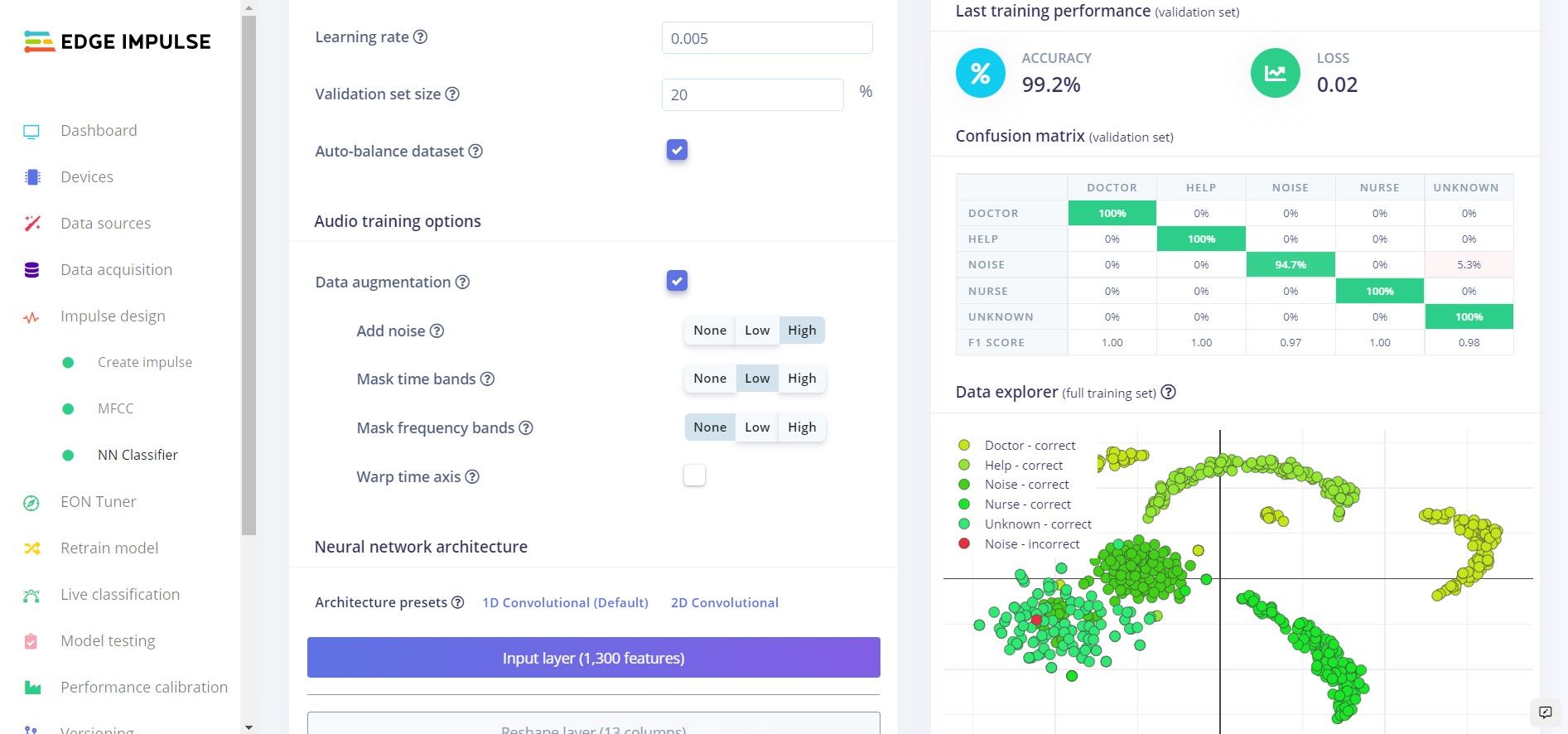

Now for the easy part: an impulse was created in Edge Impulse Studio, starting with an MFCC preprocessing block that is optimized for extracting the most relevant features from audio samples. This serves to reduce the computational overhead of subsequent steps and enables them to run on smaller hardware platforms, like microcontrollers. The features were fed into a neural network classifier, which does the work of recognizing which of the known classes a new audio sample belongs to. The training process was initiated with the click of a button, and afterwards metrics were presented to give Milton-Barker an idea of how well the model was performing. With the average training classification accuracy coming in at 99.2%, the model was looking excellent. This result was validated using the live classification tool, which allowed for direct, on-device testing in real time.
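To give a feel for one ingredient of MFCC feature extraction, the minimal sketch below computes the center frequencies of a mel-spaced filterbank. Filters spaced evenly on the mel scale sit close together at low frequencies and spread out at high ones, roughly mirroring human hearing. The filter count and frequency range are assumptions chosen for illustration, not the settings used in this project, and the conversion uses one widely used form of the Hz-to-mel formula.

```python
import math


def hz_to_mel(hz: float) -> float:
    """Convert a frequency in Hz to mels (one widely used formula)."""
    return 2595.0 * math.log10(1.0 + hz / 700.0)


def mel_to_hz(mel: float) -> float:
    """Inverse of hz_to_mel."""
    return 700.0 * (10.0 ** (mel / 2595.0) - 1.0)


def mel_center_frequencies(num_filters: int, low_hz: float, high_hz: float) -> list[float]:
    """Return filter center frequencies (Hz) spaced evenly on the mel scale."""
    low_mel, high_mel = hz_to_mel(low_hz), hz_to_mel(high_hz)
    step = (high_mel - low_mel) / (num_filters + 1)
    return [mel_to_hz(low_mel + step * (i + 1)) for i in range(num_filters)]


# Assumed values for illustration only: 8 filters spanning 300 Hz to 8 kHz.
centers = mel_center_frequencies(num_filters=8, low_hz=300.0, high_hz=8000.0)

# Spacing between neighboring filters, in Hz; it widens as frequency rises.
gaps = [b - a for a, b in zip(centers, centers[1:])]
```

A full MFCC pipeline would also frame the audio, take a spectrum of each frame, apply the filterbank, and finish with a log and a discrete cosine transform; those steps are omitted here.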

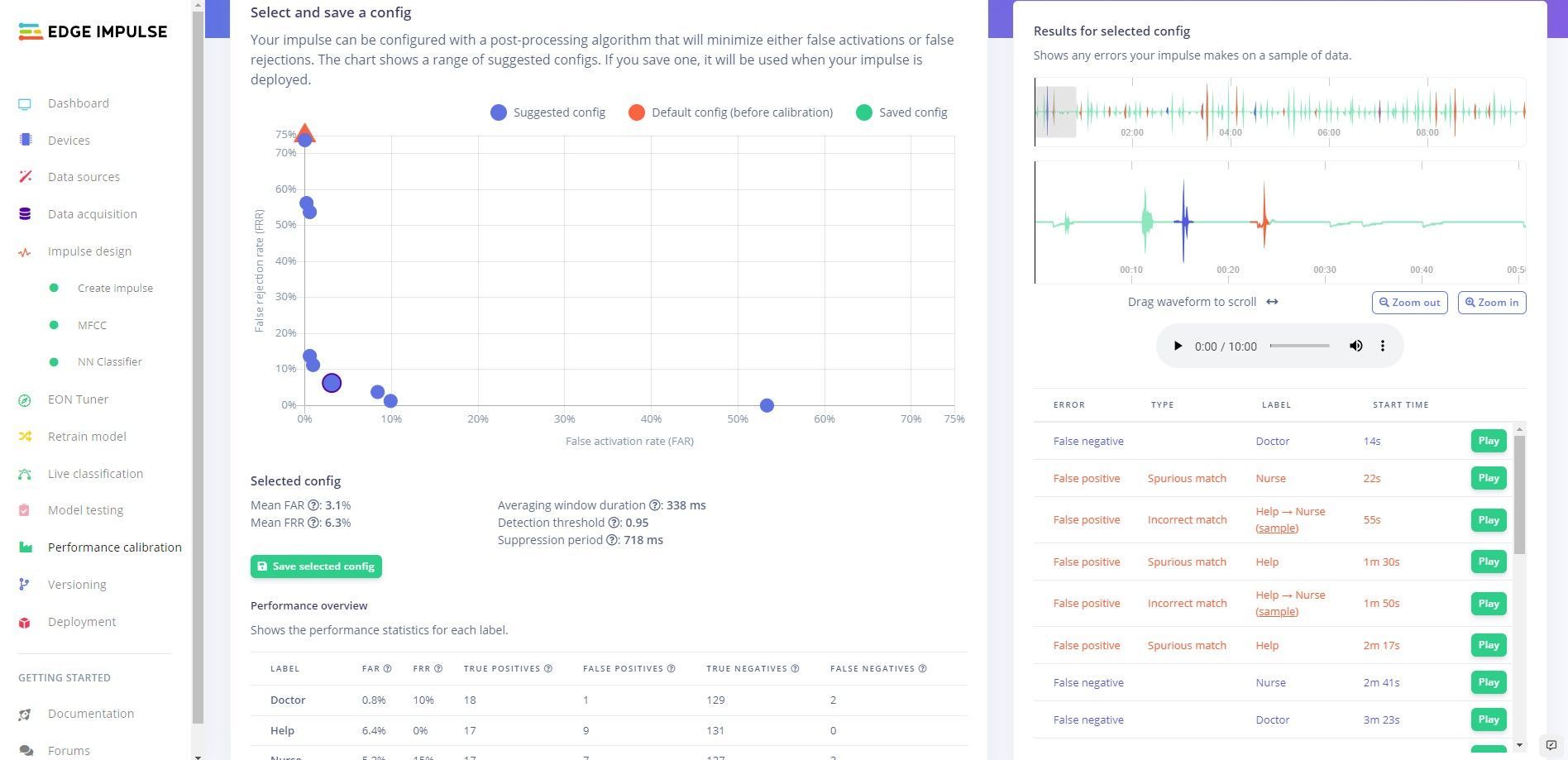

When it comes to healthcare applications, close might not be good enough. So the Performance Calibration tool was used to further test the model by assessing how it will perform under real-world use with streaming audio data. The results of this testing helped with the selection of an appropriate post-processing algorithm that fine-tunes the final result. Adjustable parameters can tune the post-processing algorithm to control the trade-off between the false acceptance rate and false rejection rate. In short, the Performance Calibration tool makes a good model perform even better.
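The trade-off between false acceptances and false rejections can be illustrated with a simple smoothing-and-threshold scheme. This is a hypothetical sketch, not the post-processing algorithm that Edge Impulse applies; the function name, the parameters, and the score stream are all invented for the example.

```python
from collections import deque


def detect_keyword(scores, threshold=0.8, average_over=3, suppress_for=4):
    """Return the indices of the windows at which a detection fires.

    scores: per-window probabilities for one keyword (0.0 to 1.0).
    threshold: raising it reduces false accepts but increases false rejects.
    average_over: number of recent windows averaged to smooth out spikes.
    suppress_for: windows skipped after a detection to avoid double counting.
    """
    recent = deque(maxlen=average_over)
    detections = []
    cooldown = 0
    for index, score in enumerate(scores):
        recent.append(score)
        if cooldown > 0:
            cooldown -= 1
            continue
        if len(recent) == average_over and sum(recent) / average_over >= threshold:
            detections.append(index)
            cooldown = suppress_for
            recent.clear()
    return detections


# Hypothetical stream: a brief noise spike at index 2, then a sustained
# keyword utterance around indices 5 to 8.
stream = [0.1, 0.1, 0.95, 0.1, 0.1, 0.85, 0.9, 0.95, 0.9, 0.2, 0.1]

strict = detect_keyword(stream, threshold=0.8)    # fires only on the utterance
lenient = detect_keyword(stream, threshold=0.35)  # also fires on the spike
```

With the strict threshold only the sustained utterance triggers a detection, while the lenient threshold also accepts the noise spike. Pushing the threshold too high would have the opposite effect and start rejecting genuine requests.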

Having rigorously optimized the algorithm for performance on edge devices and for classification accuracy, everything was set for deployment to the physical hardware. Milton-Barker chose to do this by exporting the full analysis pipeline as an Arduino library. This offers the flexibility to insert one’s own logic into the code. For example, it would be possible to trigger an arbitrary action when a certain inference result occurs.
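The kind of custom logic that could be hooked into an inference result can be sketched as follows. The example is written in Python for brevity, whereas the exported Arduino library is C++, and the function name, the handler table, and the 0.8 confidence cutoff are all hypothetical.

```python
def handle_inference(result, handlers, min_confidence=0.8):
    """Run the handler registered for the top-scoring label, if any.

    result: mapping of class label to probability for one audio window.
    handlers: mapping of label to a callable that receives the confidence.
    Returns the label that was acted on, or None if nothing was triggered.
    """
    label, confidence = max(result.items(), key=lambda item: item[1])
    handler = handlers.get(label)
    if handler is None or confidence < min_confidence:
        return None  # "noise", "unknown", or a low-confidence keyword
    handler(confidence)
    return label


# Hypothetical actions: collect alerts in a list instead of driving hardware.
alerts = []
handlers = {
    "doctor": lambda c: alerts.append(("doctor", c)),
    "nurse": lambda c: alerts.append(("nurse", c)),
    "help": lambda c: alerts.append(("help", c)),
}

acted = handle_inference(
    {"doctor": 0.02, "nurse": 0.91, "help": 0.03, "noise": 0.02, "unknown": 0.02},
    handlers,
)
ignored = handle_inference(
    {"doctor": 0.05, "nurse": 0.05, "help": 0.05, "noise": 0.80, "unknown": 0.05},
    handlers,
)
```

Leaving the “noise” and “unknown” classes out of the handler table means they never trigger an action, however confident the model is about them.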

The final device was capable of detecting the predefined keywords from a continuous stream of real-time audio with a high degree of accuracy. The classification result, along with a unique identifier tied to each device, was broadcast wirelessly via Bluetooth such that a central server could record it. This is all the information required to know that a patient in a particular room is requesting help of a specific type. Not bad for an afternoon’s worth of work and less than a hundred dollars in parts.
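On the receiving side, turning a broadcast into an actionable request could look something like the sketch below. The `device_id:keyword` message format, the device registry, and all names are assumptions made for illustration; the project's actual payload format is not described here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class HelpRequest:
    room: str
    request_type: str


# Hypothetical registry mapping each device's unique identifier to its room.
DEVICE_ROOMS = {"device-001": "Room 101", "device-002": "Room 102"}

# The three keywords the model was trained to recognize.
KEYWORDS = {"doctor", "nurse", "help"}


def parse_broadcast(message: str) -> HelpRequest:
    """Turn an assumed 'device_id:keyword' message into a room-level request."""
    device_id, _, keyword = message.partition(":")
    if device_id not in DEVICE_ROOMS:
        raise ValueError(f"unknown device: {device_id!r}")
    if keyword not in KEYWORDS:
        raise ValueError(f"unknown keyword: {keyword!r}")
    return HelpRequest(room=DEVICE_ROOMS[device_id], request_type=keyword)


request = parse_broadcast("device-002:nurse")
```

Rejecting unknown devices and keywords up front keeps malformed or stray broadcasts from turning into dispatches.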

Using the information in the documentation, it would be a simple task to add to the set of recognized keywords, or to adapt the methods for an entirely different application area. And as a shortcut to get you on your way quickly, you can clone Milton-Barker’s Edge Impulse project.
