Smart wearable technology is transforming the way we live and interact with the world. From smartwatches and fitness trackers to augmented reality glasses and smart clothing, wearable devices are changing nearly everything we do. Whether they are giving us insights to improve our health, keeping us safe from harm, enhancing our productivity, or simply entertaining us, these gadgets are rapidly becoming indispensable to millions of people around the globe.

With processors and sensors of all sorts shrinking to ever smaller sizes while their costs drop, it is becoming practical to build them into many more products. As a case in point, consider Justin Lutz’s intelligent hiking jacket. Hiking may not be the sort of pastime one would typically associate with technology. I mean, it is all about being out in the wilderness and enjoying nature, right? Well, yes, but you would not want to get caught out in a storm, would you? And during a hike, you might see something that you want to revisit later, or tell a friend about so they can see it too.

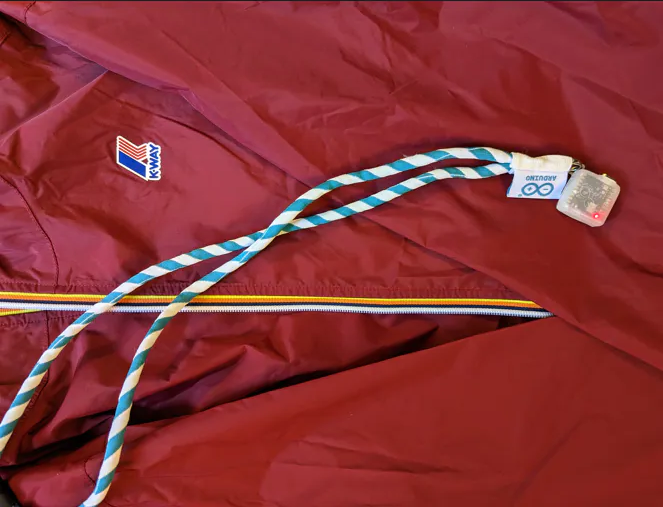

Lutz’s jacket can help with both of these things, and it does so transparently, so that nothing gets between you and the great outdoors. So that he could hike in style, Lutz chose a K-Way jacket and fitted it with an Arduino Nicla Sense ME development board to serve as the platform for his prototype. The Nicla Sense ME is a tiny board, which is ideal when you want the technology to disappear into the background. It also draws very little power, making it a good choice for on-the-go applications.

The Nicla Sense ME is loaded with sensors, and Lutz put two of them to work. First, the accelerometer collects motion data, which is fed into a machine learning model, created with Edge Impulse Studio, that recognizes hand gestures. When the wearer performs a specific gesture, the GPS coordinates of the current location are saved for later. Second, the barometer keeps watch for nasty weather. When it looks like you might be at risk of getting stormed on, the jacket lets you know that it may be time to make your way back to your car.

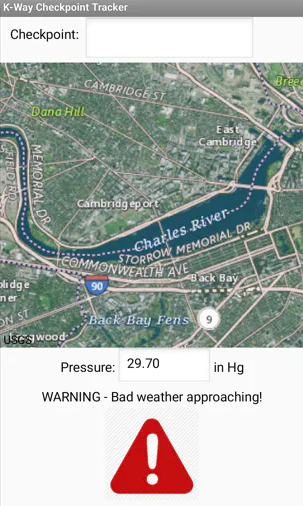

The Nicla Sense ME works in concert with a smartphone over a Bluetooth Low Energy connection. When the wearer draws a letter “C” (for “checkpoint”) in the air, the phone’s GPS receiver determines the precise location and plots it in a map application for later use. Similarly, when the barometric pressure drops below a predefined threshold, the board reports that fact to the phone so that it can warn the wearer that a storm’s a-brewing.
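The decision logic described above can be sketched in a few lines. This is a minimal Python illustration, not Lutz’s firmware (which runs as an Arduino sketch on the Nicla Sense ME); the label name `checkpoint`, the 0.8 confidence cutoff, the 1000 hPa storm threshold, and the `CHECKPOINT`/`STORM` message strings are all hypothetical placeholders.

```python
# Hypothetical values for illustration; the project's actual labels and
# thresholds are not given in this article.
GESTURE_LABEL = "checkpoint"     # label assigned to the "C" gesture
CONFIDENCE_THRESHOLD = 0.8       # minimum classifier score to accept a gesture
STORM_PRESSURE_HPA = 1000.0      # pressure below which a storm alert is sent


def jacket_events(scores, pressure_hpa, storm_reported):
    """Return the messages the jacket would send to the phone over BLE.

    scores: dict mapping class label -> classifier probability
    pressure_hpa: latest barometer reading in hectopascals
    storm_reported: True if a storm alert was already sent, so it is not repeated
    """
    events = []
    best_label = max(scores, key=scores.get)
    # Only a confident "C" gesture marks a checkpoint; the phone then reads its
    # own GPS receiver and saves the location.
    if best_label == GESTURE_LABEL and scores[best_label] >= CONFIDENCE_THRESHOLD:
        events.append("CHECKPOINT")
    # A reading below the preset threshold triggers a one-time storm alert.
    if pressure_hpa < STORM_PRESSURE_HPA and not storm_reported:
        events.append("STORM")
    return events


print(jacket_events({"idle": 0.05, "walking": 0.10, "checkpoint": 0.85}, 1013.2, False))
# ['CHECKPOINT']
print(jacket_events({"idle": 0.90, "walking": 0.08, "checkpoint": 0.02}, 996.5, False))
# ['STORM']
```

In this split, the jacket only emits short event messages and leaves all of the GPS and mapping work to the phone, which keeps the board’s job small.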

With its 64 MHz Arm Cortex-M4 processor, the Nicla Sense ME has all the power it needs to run a highly optimized machine learning algorithm developed with Edge Impulse. Its 64 KB of RAM is also up to the task, but when you need to do multiple things at once, like running a machine learning algorithm while operating a Bluetooth transceiver, memory can get a bit too thin. Since Lutz wanted to do exactly these two things, he ran into some issues here, but he came across a project by a brilliant engineer named Nick Bild (hey, that name sounds familiar!) that helped him run everything at the same time.

With the plan hammered out, it was time to collect some training data to teach the gesture recognition model what to look for. So, a very DIY wristband was made from the included Arduino lanyard (ideally, a pouch on the sleeve would hold the Nicla Sense ME instead) so that the accelerometer could capture arm movements. Then, samples of standing still, walking, and drawing the letter “C” in the air were collected during a hike and uploaded to Edge Impulse Studio using the data acquisition tool. About 15 minutes of data was captured in total.

An impulse was created using Edge Impulse Studio’s slick web-based interface. An impulse defines how the sensor data is processed from start to finish. In this case, the data is first split into two-second windows, then a spectral analysis step extracts frequency and power characteristics from the signal. This is a good way to pull the most important features out of repetitive signals, such as accelerometer data. Finally, a neural network classifier translates those features into hand gesture classifications.
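To make the preprocessing steps more concrete, here is a rough NumPy sketch of slicing a three-axis accelerometer stream into two-second windows and computing simple spectral features from each. It is not Edge Impulse’s Spectral Analysis block, which has its own filtering and feature options; the 50 Hz sample rate, the four equal-width frequency bands, and the helper names `split_windows` and `spectral_features` are assumptions made for illustration.

```python
import numpy as np

SAMPLE_RATE_HZ = 50     # assumed accelerometer sampling rate
WINDOW_SECONDS = 2.0    # two-second windows, as in the impulse described above


def split_windows(samples, sample_rate=SAMPLE_RATE_HZ, window_s=WINDOW_SECONDS, stride_s=None):
    """Slice an (N, axes) array into (num_windows, window_len, axes) chunks.

    By default windows do not overlap; pass stride_s to use a sliding window.
    """
    window_len = int(sample_rate * window_s)
    stride = window_len if stride_s is None else int(sample_rate * stride_s)
    starts = range(0, len(samples) - window_len + 1, stride)
    if len(starts) == 0:
        return np.empty((0, window_len, samples.shape[1]))
    return np.stack([samples[s:s + window_len] for s in starts])


def spectral_features(window, sample_rate=SAMPLE_RATE_HZ, num_bands=4):
    """Per-axis RMS followed by per-axis power in equal-width frequency bands."""
    centered = window - window.mean(axis=0)          # remove the DC offset (e.g. gravity)
    rms = np.sqrt(np.mean(centered ** 2, axis=0))
    power = np.abs(np.fft.rfft(centered, axis=0)) ** 2
    freqs = np.fft.rfftfreq(window.shape[0], d=1.0 / sample_rate)
    band_width = (sample_rate / 2) / num_bands
    # Assign each FFT bin to a band; the Nyquist bin joins the top band.
    band_of_bin = np.minimum((freqs / band_width).astype(int), num_bands - 1)
    bands = [power[band_of_bin == b].sum(axis=0) for b in range(num_bands)]
    return np.concatenate([rms] + bands)


rng = np.random.default_rng(0)
recording = rng.normal(size=(500, 3))        # 10 s of synthetic 3-axis data
windows = split_windows(recording)
features = np.array([spectral_features(w) for w in windows])
print(windows.shape, features.shape)          # (5, 100, 3) (5, 15)
```

Each window collapses to a fixed-length vector (15 numbers here: 3 RMS values plus 4 bands for each of 3 axes), which is the kind of compact input a small neural network classifier can handle on a microcontroller.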

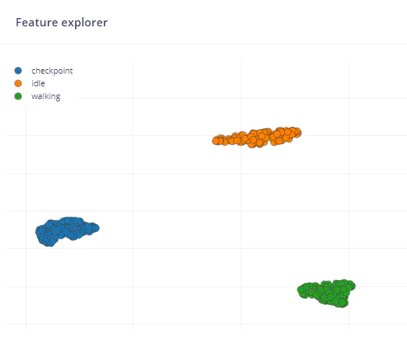

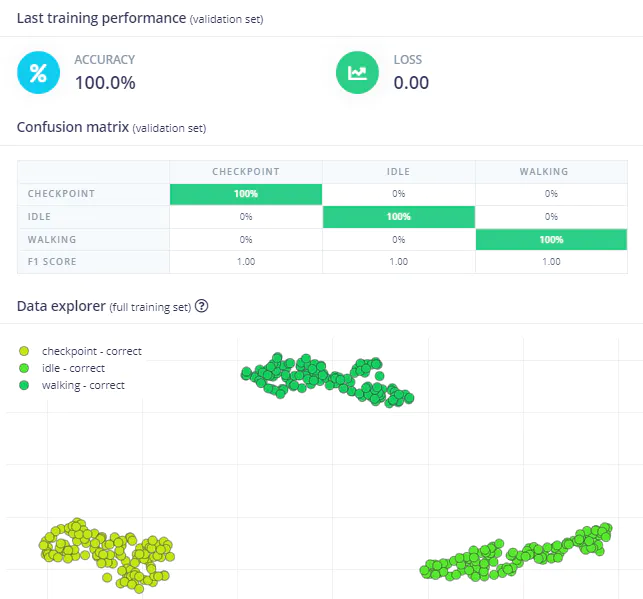

To get an idea of how informative the training data is, and how likely it is to produce an accurate model, Lutz used the Feature Explorer tool. It showed excellent separation between the data points of each class. With no apparent data problems, training was started with a simple button click. After about a minute, training had completed, and metrics were presented to help assess how well the model was performing.
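As a rough stand-in for what the Feature Explorer visualizes, the sketch below projects feature vectors onto their first two principal components and compares the spacing between class centroids with the spread inside each class. Edge Impulse’s tool may use a different projection, and `separation_ratio` is a hypothetical helper, not a metric the Studio reports; larger values simply mean tighter, better-separated clusters. The data in the example is synthetic.

```python
import numpy as np


def project_2d(features):
    """Project (n_samples, n_features) data onto its top two principal components."""
    centered = features - features.mean(axis=0)
    # Rows of vt are principal directions, ordered by explained variance.
    _, _, vt = np.linalg.svd(centered, full_matrices=False)
    return centered @ vt[:2].T


def separation_ratio(points, labels):
    """Mean distance between class centroids divided by mean within-class spread."""
    labels = np.asarray(labels)
    classes = np.unique(labels)
    if len(classes) < 2:
        raise ValueError("need at least two classes")
    centroids = np.array([points[labels == c].mean(axis=0) for c in classes])
    spread = np.mean([
        np.linalg.norm(points[labels == c] - centroids[i], axis=1).mean()
        for i, c in enumerate(classes)
    ])
    gaps = [
        np.linalg.norm(centroids[i] - centroids[j])
        for i in range(len(classes)) for j in range(i + 1, len(classes))
    ]
    return float(np.mean(gaps) / spread)


rng = np.random.default_rng(1)
labels = ["idle"] * 30 + ["walking"] * 30 + ["checkpoint"] * 30
centers = np.repeat([[0.0], [10.0], [20.0]], 30, axis=0)   # one offset per class
features = centers + rng.normal(scale=0.5, size=(90, 15))   # synthetic 15-D features
print(separation_ratio(project_2d(features), labels) > 5)    # True: well separated
```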

The training accuracy was reported as 100%, which is of course as good as it gets. This is not entirely surprising given what the Feature Explorer showed, but it is still a good idea to use the more stringent model testing tool, which evaluates the model on held-out test data, to validate that result and make sure the model did not overfit to the training data. This tool also reported an average accuracy of 100%, so the only thing left to do was deploy the pipeline to the Arduino and go for a hike.
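Accuracy figures like these come from comparing predicted labels with known labels. A minimal sketch of that bookkeeping follows; the label names and example predictions are made up for illustration and are not Lutz’s results.

```python
def confusion_matrix(y_true, y_pred, labels):
    """Rows are true classes, columns are predicted classes, in the order of `labels`."""
    index = {label: i for i, label in enumerate(labels)}
    matrix = [[0] * len(labels) for _ in labels]
    for true, pred in zip(y_true, y_pred):
        matrix[index[true]][index[pred]] += 1
    return matrix


def accuracy(y_true, y_pred):
    """Fraction of samples whose predicted label matches the true label."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("need two non-empty label lists of equal length")
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


labels = ["idle", "walking", "checkpoint"]
y_true = ["idle", "walking", "checkpoint", "checkpoint"]
y_pred = ["idle", "walking", "checkpoint", "walking"]      # hypothetical predictions
print(confusion_matrix(y_true, y_pred, labels))  # [[1, 0, 0], [0, 1, 0], [0, 1, 1]]
print(accuracy(y_true, y_pred))                  # 0.75
```

The arithmetic is identical for training and test data; what makes a test score trustworthy is that it is computed on windows the model never saw during training.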

Well, almost. Lutz also developed an Android app that tracks any checkpoints the wearer marks with the hand gesture and provides alerts when bad weather is on the way. With that done, deployment was just a matter of a few button clicks to download the full machine learning pipeline as an Arduino library, which could then be built into a sketch and flashed to the hardware with the Arduino IDE.

Lutz’s proof-of-concept hiking jacket is a great demonstration of how transparent technologies can enhance the pastimes we already enjoy. Are you ready to go for a hike? Better check out the project documentation first. Those look like storm clouds to me.
