The aphorism “you don't know what you've got until it's gone” may have inspired countless poems and ballads, but if that happens to also be your guiding principle in managing your organization’s inventory, you are headed for a world of pain. While it may not be the most exciting part of a business, tracking inventory is crucial as it ensures the efficient management of stock levels, minimizes costs, and maximizes profitability. Good practices enable companies to maintain optimal stock levels, preventing both overstocking and stockouts. Overstocking ties up capital and incurs additional storage costs, while stockouts can lead to lost sales and dissatisfied customers. Furthermore, precise inventory management enables better forecasting and planning, helping businesses respond promptly to market demand and trends.

Established businesses that have always managed inventory manually may be reluctant to adopt an automated solution because of the large upfront costs and potential for downtime while the new system is being installed. Electrical engineer and machine learning enthusiast Christopher Mendez has recently demonstrated that these concerns may be misplaced, however. By using Edge Impulse, Mendez showed that a highly accurate vision-based inventory tracking system can be produced very inexpensively, and without disrupting any existing business processes.

At the core of the proof-of-concept device developed by Mendez is a BrainChip Akida PCIe Board. An AKD1000 Neuromorphic Hardware Accelerator powers this board, which enables it to run even complex machine learning algorithms on the edge while slowly sipping power. For general computational tasks and capturing images, Mendez also included a Raspberry Pi 5 single board computer and a Raspberry Pi Camera Module in the build. And to demonstrate a simple way that the current state of a product inventory can be presented to users, he also connected a small RGB LED matrix to the device.

Using this hardware, Mendez planned to use an object detection algorithm to locate specific items, track their positions, and maintain a count of how many there are, all in real time. The exact items being tracked can of course be customized to each individual use case, but for demonstration purposes, Mendez chose to focus on a tray of terminal blocks. Using information from the object detector, the LED matrix pixels light up to indicate which cells in the tray are populated. Additional information can also be made available in a web-based interface, which is a more realistic option for a real-world inventory system.
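To make the detection-to-display step concrete, here is a minimal Python sketch of how detected object centers might be mapped onto tray cells. It is not taken from Mendez's code: the function name `occupancy_grid`, the 2x4 tray layout, the 160x160 pixel frame, and the assumption that the tray fills the whole camera frame are all hypothetical choices made for illustration.

```python
from typing import List, Tuple


def occupancy_grid(
    centroids: List[Tuple[float, float]],
    image_size: Tuple[int, int],
    grid_shape: Tuple[int, int],
) -> List[List[bool]]:
    """Mark which tray cells contain at least one detected object.

    Assumes (hypothetically) that the tray exactly fills the frame, so each
    cell is an equal slice of the image. Centroids are (x, y) in pixels.
    """
    width, height = image_size
    rows, cols = grid_shape
    grid = [[False] * cols for _ in range(rows)]
    for x, y in centroids:
        # Ignore detections that fall outside the frame.
        if not (0 <= x < width and 0 <= y < height):
            continue
        row = int(y * rows / height)
        col = int(x * cols / width)
        grid[row][col] = True
    return grid


# Hypothetical example: three detections in a 160x160 frame, 2x4 tray.
detections = [(10, 10), (150, 10), (90, 90)]
grid = occupancy_grid(detections, image_size=(160, 160), grid_shape=(2, 4))
populated = sum(cell for row in grid for cell in row)
print(grid, populated)
```

Each `True` entry would correspond to a lit pixel on the LED matrix, and the sum of the grid gives the count of populated cells. A real deployment would also need to calibrate where the tray actually sits in the image.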

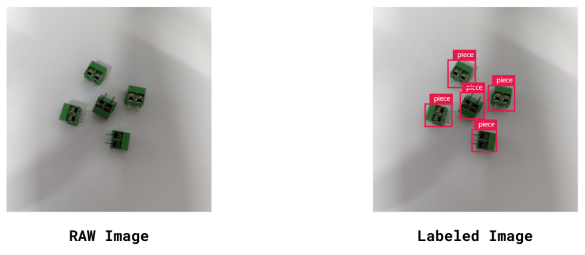

Before that final system was ready for use, there was a bit of work to complete. First, Mendez set up and configured all of the hardware. With that out of the way, it was time to collect a dataset that would be used to teach the machine learning algorithm what terminal blocks look like. This was done by capturing images with a cellphone camera and uploading them to Edge Impulse. Using the Labeling Queue tool, bounding boxes were then drawn around each terminal block. The Labeling Queue offers an AI-powered assist that speeds this task along by positioning the bounding boxes automatically.

Once the annotation was complete, the next step was to create an impulse. This defines each step of the data processing pipeline, from collecting a new data point to making a prediction with the machine learning algorithm. For this application, the first step resizes the images captured by the camera, which reduces the computational resources needed downstream. Next, the most important features are extracted from the raw data and forwarded into Edge Impulse’s own FOMO object detection algorithm, which has been optimized for use on Akida hardware accelerators. The output of the model is a list of detected objects and their locations in the image.
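For readers curious how a list of object locations might come out of such a model, the sketch below shows one plausible post-processing step in Python. It assumes the detector emits a coarse grid of per-cell confidence scores, as FOMO-style models generally do, and groups neighboring high-scoring cells into a single centroid. This is not Edge Impulse's actual implementation: the function name `heatmap_to_centroids`, the 0.5 threshold, and the 8-pixel cell size are illustrative assumptions.

```python
from typing import List, Tuple


def heatmap_to_centroids(
    heatmap: List[List[float]],
    threshold: float = 0.5,  # assumed confidence cutoff
    cell_size: int = 8,      # assumed pixels covered by one grid cell
) -> List[Tuple[float, float]]:
    """Group 4-connected cells scoring >= threshold; return (x, y) pixel centers."""
    rows, cols = len(heatmap), len(heatmap[0])
    seen = [[False] * cols for _ in range(rows)]
    centroids = []
    for r in range(rows):
        for c in range(cols):
            if seen[r][c] or heatmap[r][c] < threshold:
                continue
            # Flood-fill one blob of adjacent confident cells.
            stack, cells = [(r, c)], []
            seen[r][c] = True
            while stack:
                cr, cc = stack.pop()
                cells.append((cr, cc))
                for nr, nc in ((cr - 1, cc), (cr + 1, cc), (cr, cc - 1), (cr, cc + 1)):
                    if (0 <= nr < rows and 0 <= nc < cols
                            and not seen[nr][nc] and heatmap[nr][nc] >= threshold):
                        seen[nr][nc] = True
                        stack.append((nr, nc))
            mean_r = sum(cr for cr, _ in cells) / len(cells)
            mean_c = sum(cc for _, cc in cells) / len(cells)
            # Convert the blob's mean cell index to pixel coordinates.
            centroids.append(((mean_c + 0.5) * cell_size, (mean_r + 0.5) * cell_size))
    return centroids


# Hypothetical 3x4 score grid containing two separate objects.
scores = [
    [0.9, 0.8, 0.0, 0.0],
    [0.0, 0.0, 0.0, 0.7],
    [0.1, 0.0, 0.0, 0.0],
]
print(heatmap_to_centroids(scores))  # [(8.0, 4.0), (28.0, 12.0)]
```

Counting the returned centroids gives the number of detected items in the frame.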

After setting a few model hyperparameters, Mendez initiated the training process with the click of a button. When complete, a confusion matrix was displayed to help assess the performance of the model. It was quite easy to interpret the results in this case — an F1 score of 100 percent had been achieved. That means all objects were detected, and there were no false positives.
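As a reminder of what that number captures, the F1 score is the harmonic mean of precision and recall, and it can be computed directly from counts of true positives, false positives, and false negatives. The short Python sketch below uses made-up counts purely for illustration; they are not figures from Mendez's project.

```python
def f1_score(true_pos: int, false_pos: int, false_neg: int) -> float:
    """F1 = 2*TP / (2*TP + FP + FN), the harmonic mean of precision and recall."""
    denominator = 2 * true_pos + false_pos + false_neg
    if denominator == 0:
        return 0.0  # no objects present and none predicted
    return 2 * true_pos / denominator


# Hypothetical counts: a perfect detector versus one with a few mistakes.
perfect = f1_score(true_pos=10, false_pos=0, false_neg=0)
imperfect = f1_score(true_pos=8, false_pos=2, false_neg=2)
print(perfect, imperfect)  # 1.0 0.8
```

A score of 1.0 is only possible when both the false positive and false negative counts are zero.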

Mendez has provided a GitHub repository where source code for this project can be downloaded. Once that code is paired with a MetaTF format file downloaded from Edge Impulse that contains the trained model, local inferences can be run on the Raspberry Pi equipped with an Akida PCIe Board. Mendez has written multiple implementations of the software, some with additional optimizations or larger bounding boxes drawn in the display, and some that use a USB webcam instead of the Camera Module 3. There is really no limit to the possible customizations, so the same pipeline could run on a different platform if desired.

With the BrainChip Akida Neuromorphic Hardware Accelerator, the system was found to run inferences at a whopping 56 frames per second! Not bad at all for a computer vision pipeline running on edge computing hardware. Moreover, it did so while consuming less than 100 mW of power.
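Those two figures can be combined into a rough per-frame budget. The Python sketch below does the arithmetic, under the assumption that the 56 frames per second and the 100 mW ceiling were measured on the same workload; the function name `per_frame_budget` is just for illustration.

```python
from typing import Tuple


def per_frame_budget(fps: float, power_watts: float) -> Tuple[float, float]:
    """Return (time per frame in ms, energy per frame in mJ)."""
    frame_time_ms = 1000.0 / fps
    energy_mj = power_watts * 1000.0 / fps
    return frame_time_ms, energy_mj


# 56 FPS at an upper bound of 100 mW (0.1 W), as reported above.
frame_time_ms, energy_mj = per_frame_budget(fps=56, power_watts=0.1)
print(f"{frame_time_ms:.1f} ms per frame, at most {energy_mj:.2f} mJ per frame")
# 17.9 ms per frame, at most 1.79 mJ per frame
```

Because the power figure is an upper bound, the energy result is an upper bound as well.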

If you are in the market for an inventory control system, getting started is really quite simple. Clone the public Edge Impulse project, collect some images of the items that you want to keep track of, and then read through the project write-up for help putting it all together.