Did you know that you can deploy your impulse to almost any platform using our C++ Inferencing SDK library?

The easiest way to get started with embedded machine learning (ML) on Edge Impulse is to use one of our fully supported development boards. Such boards include command-line interface (CLI) integration so you can easily capture data and deploy trained models in a few clicks. They allow you to construct ML pipelines with minimal effort!

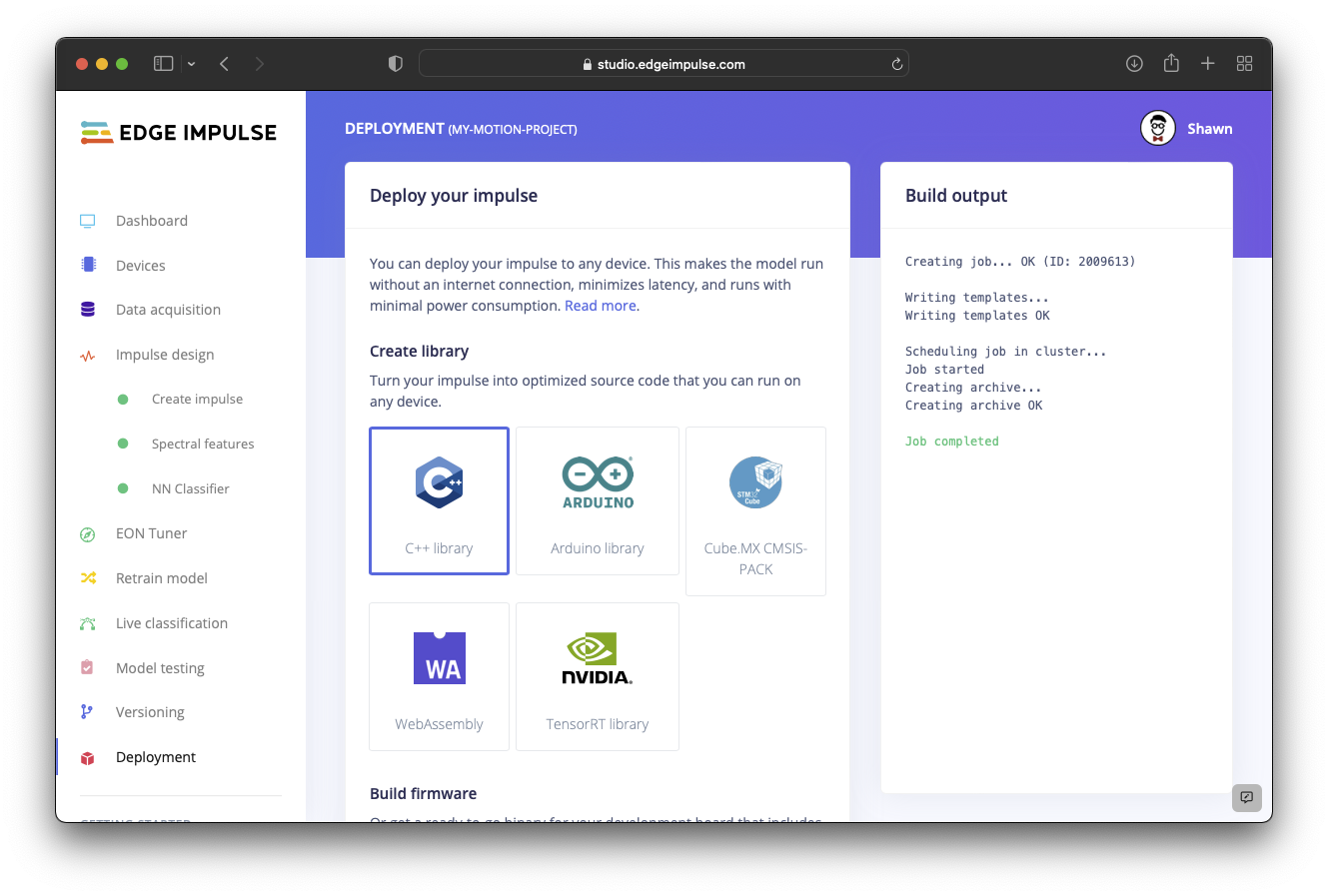

However, we fully understand that you may not want to use a ready-made development board. Part of the fun of embedded systems is being able to choose your own hardware! For a while, Edge Impulse has allowed users to download a trained machine learning model wrapped in an impulse (which includes all of the preprocessing/DSP code) as part of a C++ library.

Based on feedback from you, our community, we realized that this feature was hard to find and not highlighted very well. It also requires a slightly more advanced understanding of C++ and development environments to use. So, we created a number of resources to help you bring your edge ML projects to life with this library.

Just let me see the guides already!

- Deploy your model as a C++ library

- Running your impulse locally

- C++ inference SDK library API reference

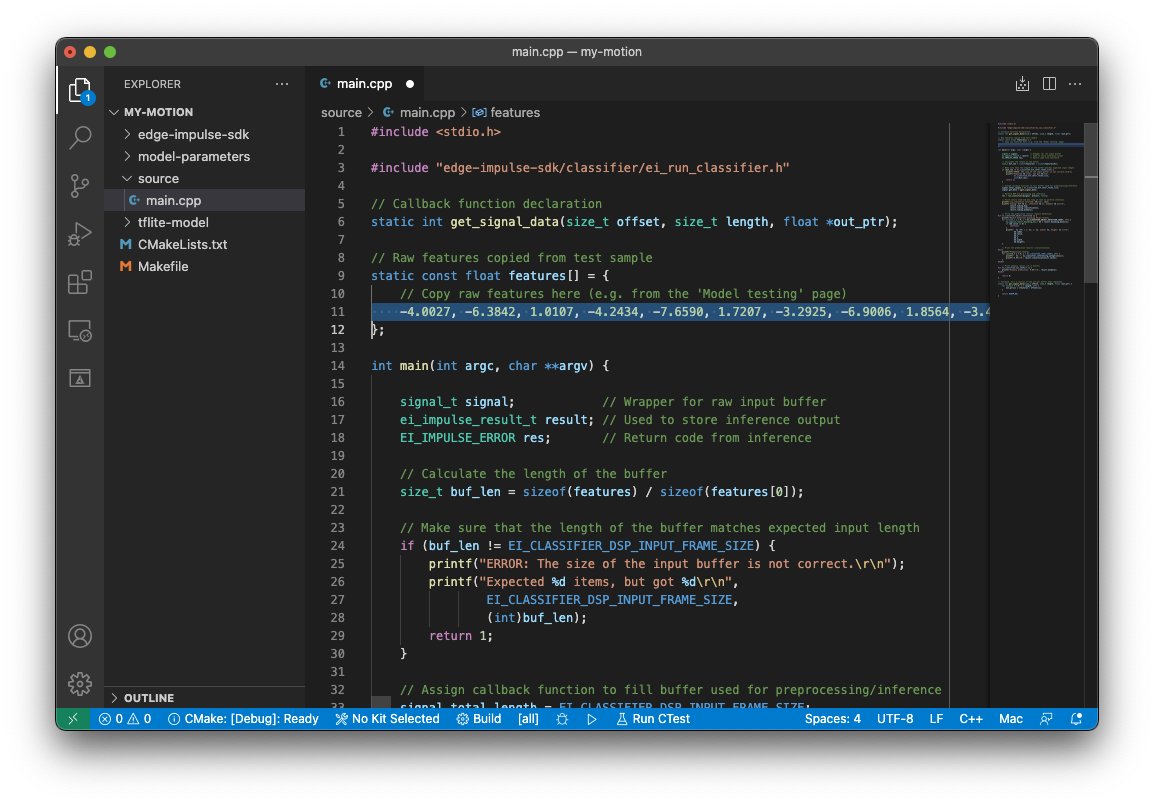

The first is a tutorial that walks you through the process of downloading the C++ library from an existing Edge Impulse project and setting up a local build environment on a Linux, Mac, or Windows machine to compile it. You will see how to include the library in your application and how to create a Makefile to link the necessary libraries.

We understand that not every development environment relies on GNU Make. So, the guide walks you through the important parts of the Makefile so you can get an idea of which header and source files you need to include in your own build system.

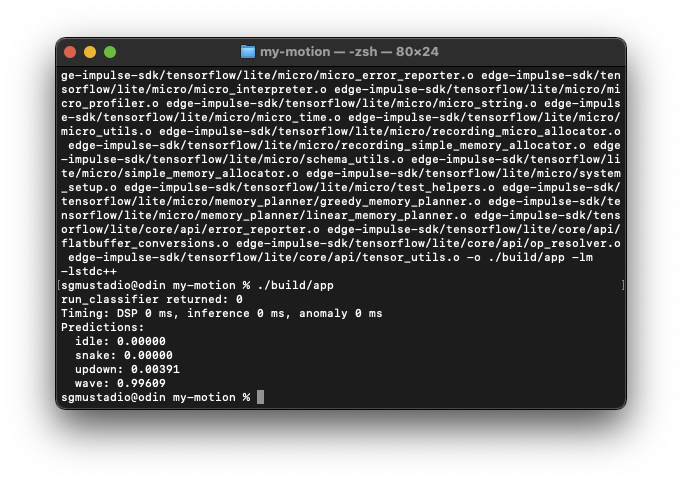

To keep things simple, we paste in static features from a test sample. This process allows you to see how inference works without needing to connect a real sensor. You’ll build the project with Make and a C++ compiler, run the application locally on your computer, and print the results of the impulse.
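To give a feel for the static-features pattern, here is a minimal, self-contained sketch. It does not use the Edge Impulse SDK: `Signal`, `get_feature_data`, `make_signal`, and `run_stub_inference` are hypothetical stand-ins, the feature values are placeholders, and the "inference" step just averages the data. The tutorial and API reference cover the real types and function calls.

```cpp
#include <cassert>
#include <cstddef>
#include <cstring>
#include <functional>

// Hypothetical stand-in for a signal type: a length plus a callback that
// copies a slice of raw feature data on request. Not the SDK's own type.
struct Signal {
    size_t total_length;
    std::function<int(size_t offset, size_t length, float *out)> get_data;
};

// Placeholder values. In a real project you would paste the raw features
// from one of your test samples here.
static const float features[] = {0.5f, -1.25f, 2.0f, 0.75f, -0.5f, 1.5f};
static const size_t feature_count = sizeof(features) / sizeof(features[0]);

// Copies `length` values starting at `offset` into `out`.
// Returns 0 on success, -1 if the requested slice is out of range.
int get_feature_data(size_t offset, size_t length, float *out) {
    if (offset > feature_count || length > feature_count - offset) {
        return -1;
    }
    std::memcpy(out, features + offset, length * sizeof(float));
    return 0;
}

// Wraps the static buffer in a Signal so the consumer never touches the
// array directly. A sensor driver could later supply the same callback.
Signal make_signal() {
    Signal s;
    s.total_length = feature_count;
    s.get_data = get_feature_data;
    return s;
}

// Stand-in for inference: pulls the signal in chunks of two values and
// computes the mean. A real impulse would run DSP and a model instead.
int run_stub_inference(const Signal &signal, float *mean_out) {
    assert(signal.get_data);
    if (signal.total_length == 0) {
        return -1;
    }
    float chunk[2];
    float sum = 0.0f;
    for (size_t off = 0; off < signal.total_length; off += 2) {
        size_t remaining = signal.total_length - off;
        size_t n = remaining < 2 ? remaining : 2;
        if (signal.get_data(off, n, chunk) != 0) {
            return -1;
        }
        for (size_t i = 0; i < n; i++) {
            sum += chunk[i];
        }
    }
    *mean_out = sum / static_cast<float>(signal.total_length);
    return 0;
}
```

Reading the data through a callback rather than passing the whole array means the consumer only asks for the slice it needs at each step. That matters on devices where the full sample may not fit in RAM at once.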

We also have a series of tutorials that guide you through the process of using your impulse on a variety of platforms. We cover many of the popular development environments, including Arduino, mbed, TI, and STM32.

Finally, we put together an API reference to help you see all of the available public functions, macros, and structures you can use when working with the C++ library. The C++ library can be daunting at first, but we hope that this reference guide will help you see how its various pieces work together.

As long as you have access to a C++ compiler (and your hardware has the resources necessary to run the impulse), you can port your machine learning code to any device you wish!

Let us know if you run into any problems with the C++ library (or if you just want to share your cool project) on the forums.