In just a few years, the Raspberry Pi has become one of the most popular single-board computers, letting users run a full operating system on a small, very low-cost board. Around the same time, Docker was released to the public, changing how web applications are designed by bringing containers and microservices to a large developer community.

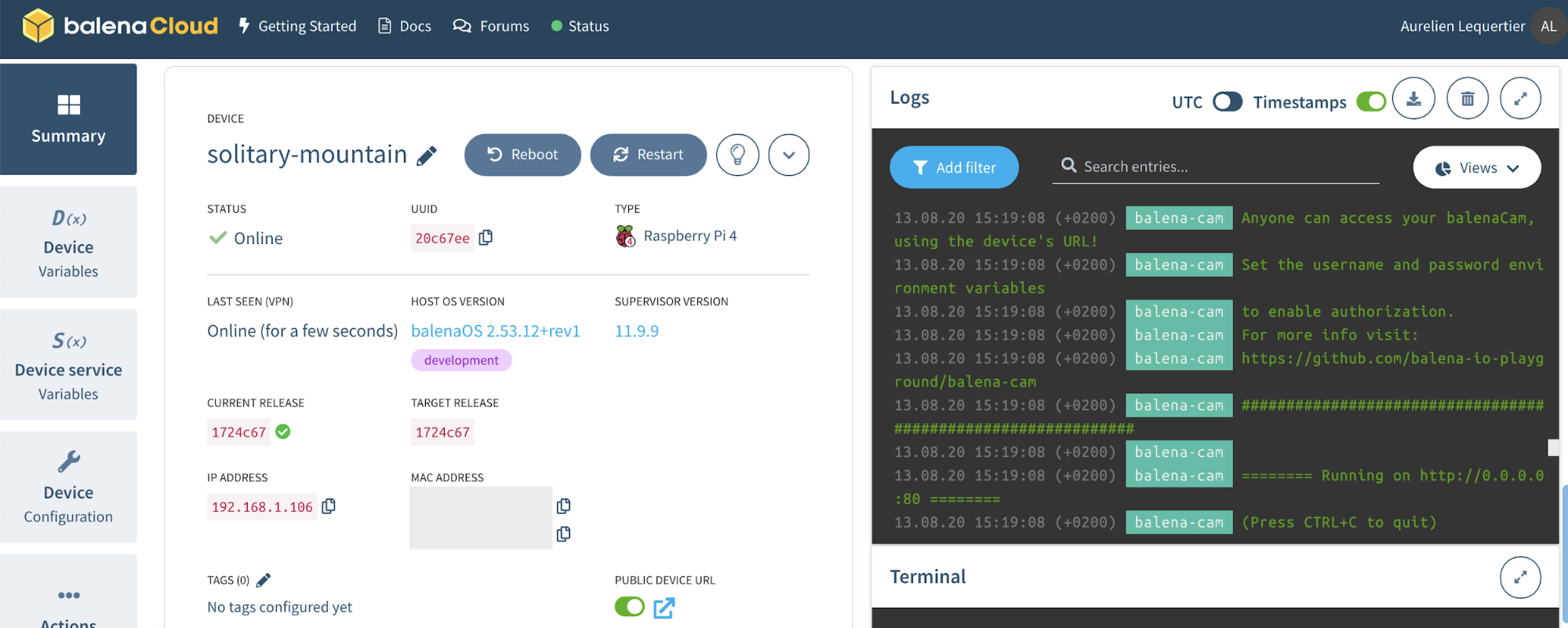

To simplify the deployment of IoT applications on single-board computers, Balena brings these pieces together in a platform that lets developers run and deploy container-based applications on their favorite hardware. Balena built its own operating system (balenaOS), a container engine (balenaEngine) to manage the containers running on a device, and a web platform (balenaCloud) to control the fleet of connected devices. Running an IoT application on one or a few devices is usually simple; scaling to many devices is much harder without a fleet management tool.

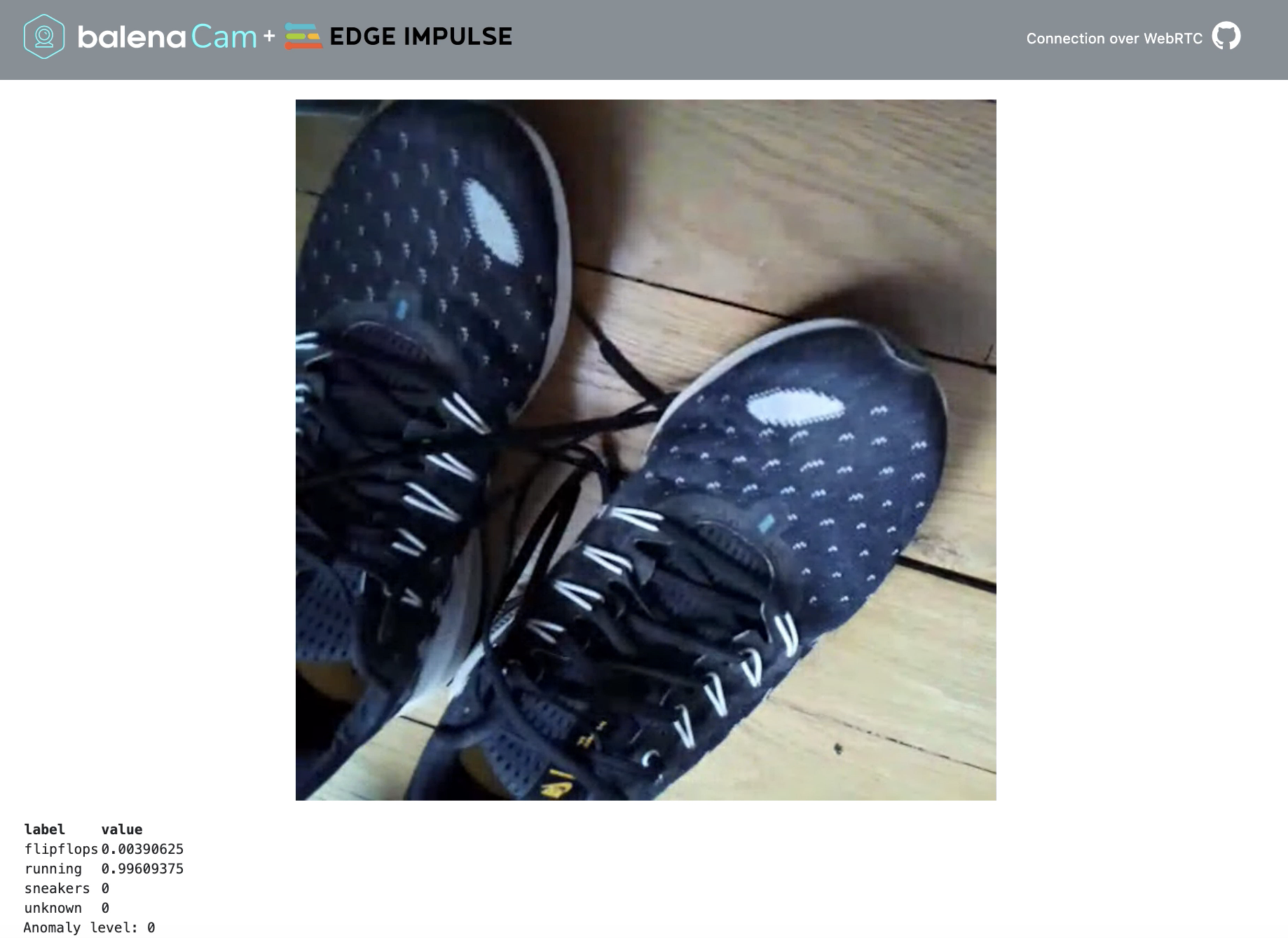

In this tutorial, we built an image classification system for Raspberry Pi users that distinguishes between three types of shoes: flip flops, sneakers, and running shoes. Leveraging our new computer vision feature at Edge Impulse, only a few dozen image samples were needed to train a model with more than 80% accuracy. The system runs on a Raspberry Pi 4 with a camera module attached (Pi Camera v2), and relies on Balena to deploy our container-based application.

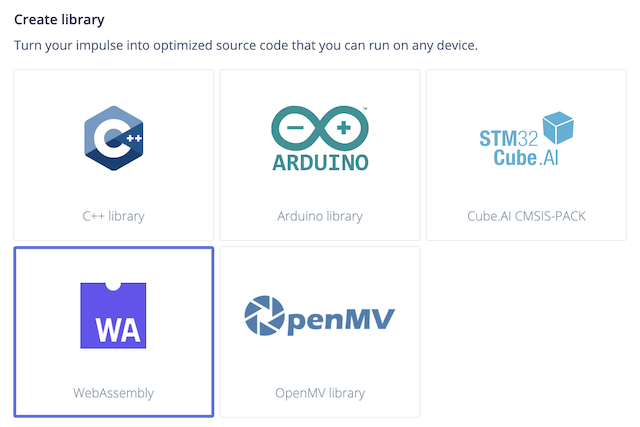

Our Raspberry Pi application streams a live camera feed and displays the classification results on a webpage. As a starting point we used the great balenaCam project, which live streams the camera feed to a webpage. To take advantage of Balena's support for microservices, we run our image classifier in a separate container. Our inference engine is packaged as WebAssembly (read more about WebAssembly in our documentation) and executed by a Node.js server.

The two containers, balena-cam and edgeimpulse-inference, communicate with each other through a WebSocket. We made a few modifications to the original balena-cam Python web server to support image classification. First, we capture a frame from the video feed every second (independently of the live stream) and convert it to a 96x96 px image, the optimal input size for our computer vision feature. Then we encode the frame in base64 and send it to the edgeimpulse-inference service. The inference engine returns the classification results, which are then displayed on the webpage.
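The capture-and-send step can be sketched in Python. This is a minimal illustration under stated assumptions, not the modified balena-cam code: it assumes frames arrive as packed RGB888 bytes, resizes them with simple nearest-neighbour sampling (ignoring aspect ratio), and wraps the base64 payload in a JSON message whose field names (`type`, `width`, `height`, `image`) are hypothetical. The `capture_frame` and `send` callables are placeholders for the camera and WebSocket plumbing.

```python
import asyncio
import base64
import json
from typing import Awaitable, Callable, Optional, Tuple

TARGET_SIZE = 96  # model input is 96x96 px, as described above


def resize_nearest(rgb: bytes, width: int, height: int, size: int = TARGET_SIZE) -> bytes:
    """Downscale a packed RGB888 frame to size x size using nearest-neighbour sampling.

    The aspect ratio is not preserved: the whole frame is squashed into the square.
    """
    if len(rgb) != width * height * 3:
        raise ValueError("frame length does not match width * height * 3")
    out = bytearray(size * size * 3)
    for y in range(size):
        src_y = y * height // size  # source row for this output row
        for x in range(size):
            src_x = x * width // size  # source column for this output column
            src = (src_y * width + src_x) * 3
            dst = (y * size + x) * 3
            out[dst:dst + 3] = rgb[src:src + 3]
    return bytes(out)


def build_message(rgb: bytes, width: int, height: int) -> str:
    """Resize the frame, base64-encode it and wrap it in a JSON message.

    The message schema is hypothetical; the real services may use another one.
    """
    small = resize_nearest(rgb, width, height)
    return json.dumps({
        "type": "classify",
        "width": TARGET_SIZE,
        "height": TARGET_SIZE,
        "image": base64.b64encode(small).decode("ascii"),
    })


async def classify_loop(
    capture_frame: Callable[[], Tuple[bytes, int, int]],
    send: Callable[[str], Awaitable[None]],
    interval_s: float = 1.0,
    max_frames: Optional[int] = None,
) -> None:
    """Grab one frame per interval, independently of the live stream, and send it.

    Runs forever unless max_frames is given (useful for testing).
    """
    sent = 0
    while max_frames is None or sent < max_frames:
        rgb, width, height = capture_frame()  # hypothetical camera hook
        await send(build_message(rgb, width, height))  # e.g. a WebSocket send
        sent += 1
        await asyncio.sleep(interval_s)
```

In a real service, `send` could be the `send` coroutine of a WebSocket client connection (for example from the `websockets` package), and `capture_frame` would read from the camera. Sampling on its own timer keeps classification at roughly one frame per second without slowing down the live stream.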

One of the great benefits of using Balena is that deploying a software update is a breeze: the platform automatically pushes updates to your fleet of devices. In our example, the edgeimpulse-inference container also fetches the WebAssembly package automatically on boot using the Edge Impulse API. So if we decide to improve our image classification model, or even switch to completely different image categories for a new use case, we only need to restart the edgeimpulse-inference container from balenaCloud to update the model.
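The download-on-boot pattern can be sketched as follows. In the post, this logic lives in the Node.js edgeimpulse-inference container; the Python below only illustrates the idea under assumptions: the model package is a zip archive at a URL supplied through a hypothetical `MODEL_URL` environment variable (for example set as a balenaCloud device or fleet variable), and the API key is sent in an `x-api-key` header, which should be checked against the Edge Impulse API reference. `MODEL_URL`, `EI_API_KEY`, `MODEL_DIR` and the default path are illustrative names.

```python
import io
import os
import urllib.request
import zipfile
from pathlib import Path
from typing import List, Optional


def fetch_model(url: str, dest_dir: str, api_key: Optional[str] = None,
                timeout: float = 30.0) -> List[str]:
    """Download a zipped model package from url and extract it into dest_dir.

    Returns the names of the extracted files. HTTP errors raise urllib.error.HTTPError.
    """
    # Assumed auth scheme: API key in an "x-api-key" header (verify against the API docs).
    headers = {"x-api-key": api_key} if api_key else {}
    request = urllib.request.Request(url, headers=headers)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        payload = response.read()
    dest = Path(dest_dir)
    dest.mkdir(parents=True, exist_ok=True)
    with zipfile.ZipFile(io.BytesIO(payload)) as archive:
        archive.extractall(dest)
        return archive.namelist()


def main() -> None:
    # Hypothetical variable names, e.g. configured as balenaCloud device/fleet variables.
    url = os.environ.get("MODEL_URL")
    if not url:
        print("MODEL_URL is not set; keeping the existing model")
        return
    dest = os.environ.get("MODEL_DIR", "/usr/src/app/model")
    files = fetch_model(url, dest, os.environ.get("EI_API_KEY"))
    print(f"Fetched {len(files)} files into {dest}")
    # The inference server would be started here and load the fresh package.


if __name__ == "__main__":
    main()
```

Because the download happens at startup, restarting the container from balenaCloud is enough to pick up a newly trained model; no new container image has to be built or pushed.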

Acknowledgements: many thanks to Marc Pous for kicking off our discussion on running computer vision with Edge Impulse and Balena, and for his great help setting up the dockerized version of the application.