TinyML enables developers to run Machine Learning models on embedded devices with scarce resources, allowing users to detect complex motions, recognize sounds, classify images or find anomalies in sensor data. Sounds complicated? Doesn’t have to be! In this blog post we’ll show you how you can use Edge Impulse - a free, online platform for building TinyML projects - together with PlatformIO to capture training data, train a machine learning model, and then run this model on your development board.

For this post, we used the Arduino Nano 33 BLE Sense development board and its on-board accelerometer, but you can follow along with most development boards that are supported in PlatformIO, as long as you attach an external accelerometer (and already have the drivers to read from it).

In this article, we will show you how to integrate Edge Impulse directly into your PlatformIO development environment. You will learn how to collect raw data from your board and forward it to your Edge Impulse project, and how to deploy the C++ inference library back to your device. In this example, we will use an Arduino Nano 33 BLE Sense board with its accelerometer.

To get started:

- Sign up for an Edge Impulse account.

- Install the Edge Impulse CLI - we’ll use this to send data from your development board to Edge Impulse.

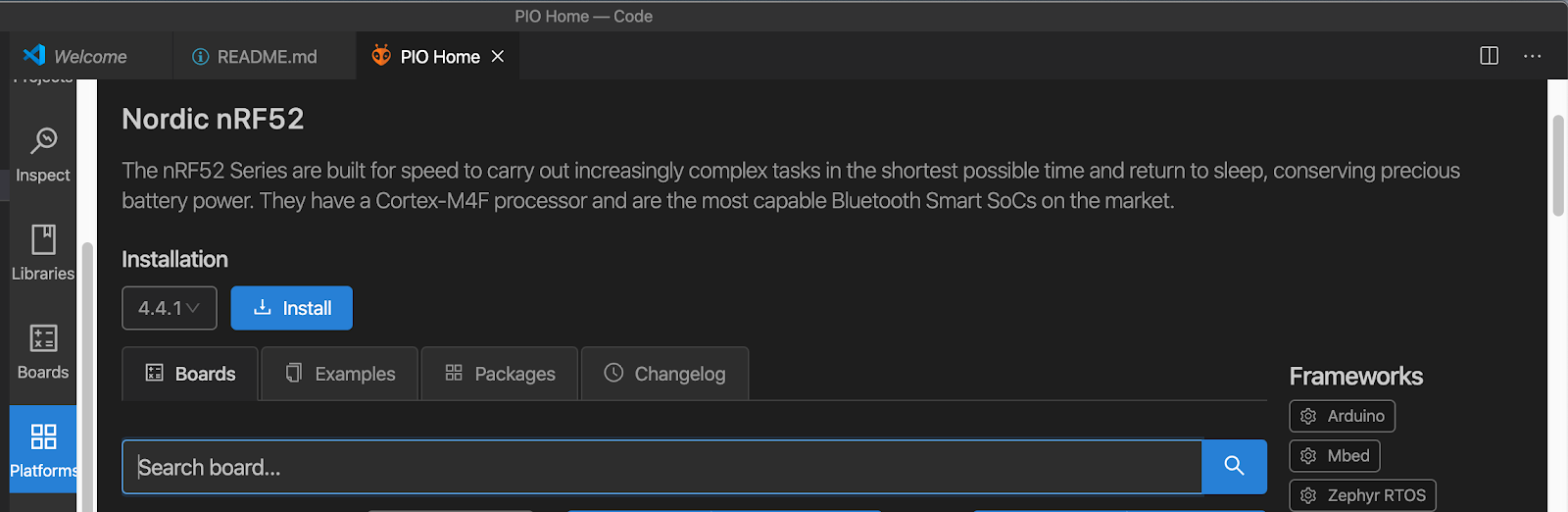

- Make sure your target platform is set up in the PlatformIO IDE, in our case Nordic nRF52:

Forwarding accelerometer data to your Edge Impulse project

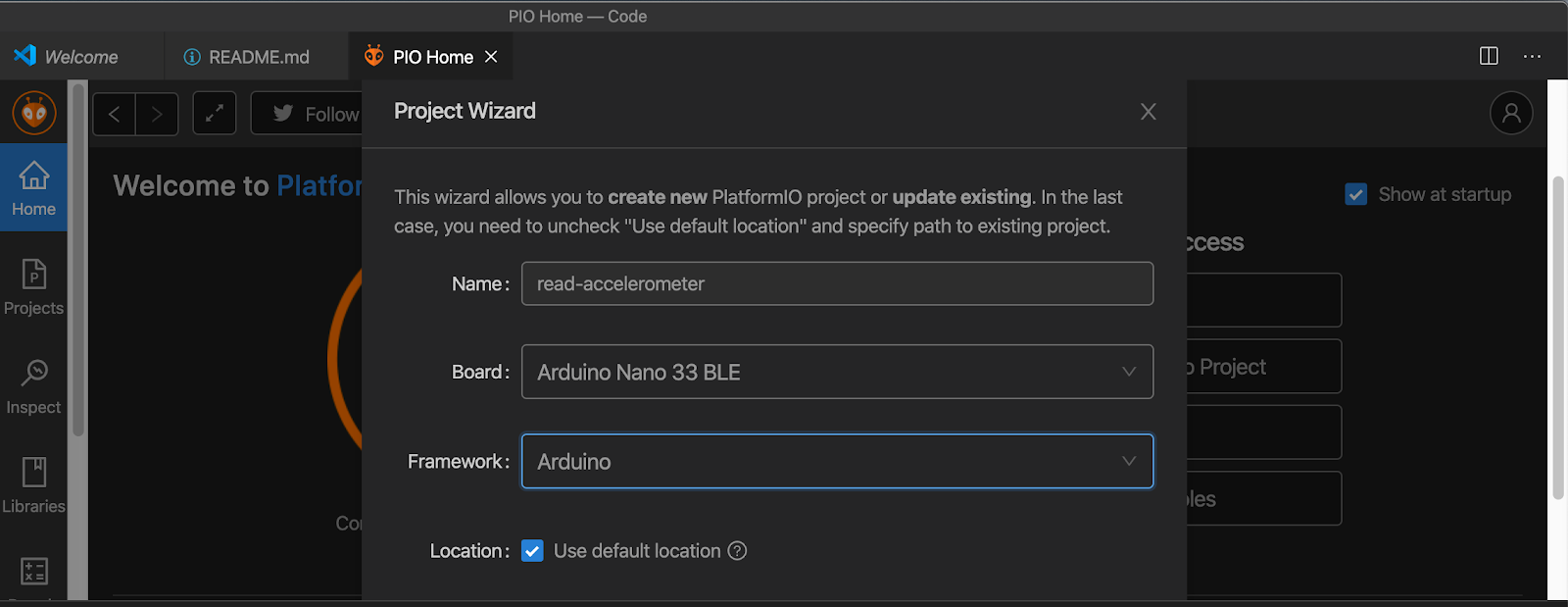

- Start by creating a new PlatformIO project to collect data:

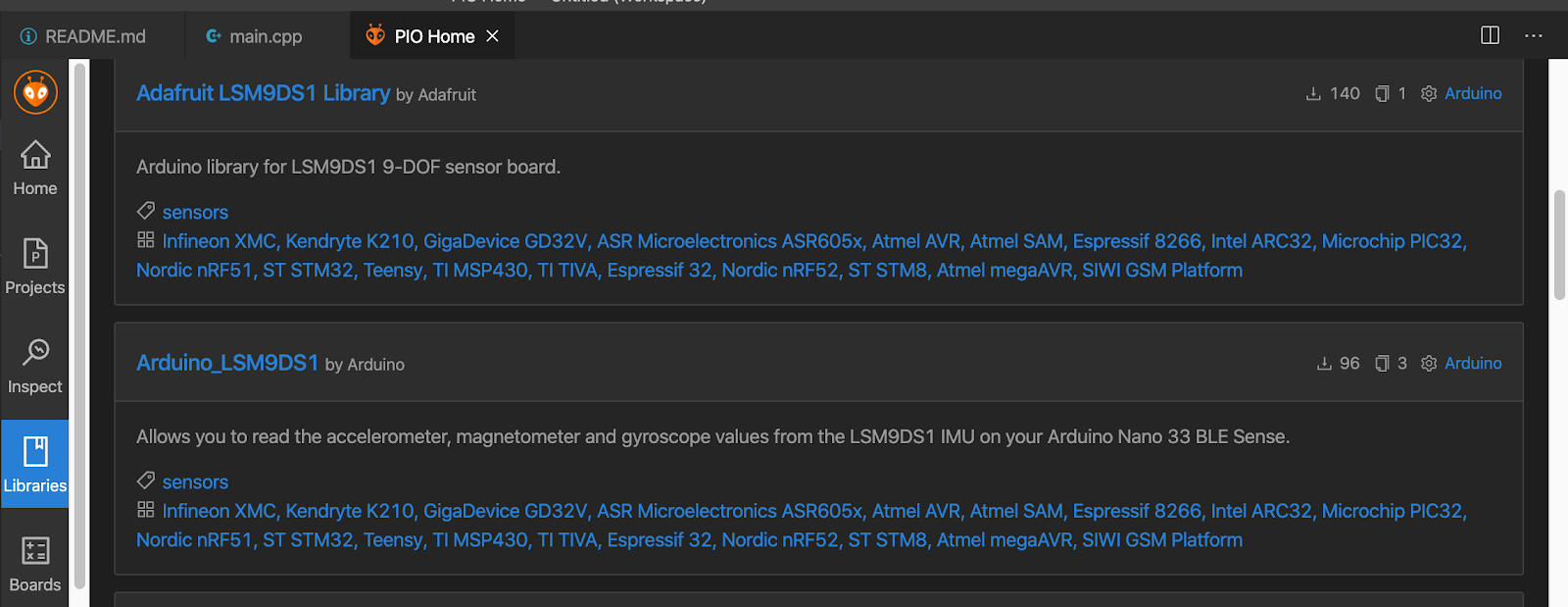

- Depending on your board, you may need to add a library for your sensor. In our case we add the Arduino_LSM9DS1 to our project:

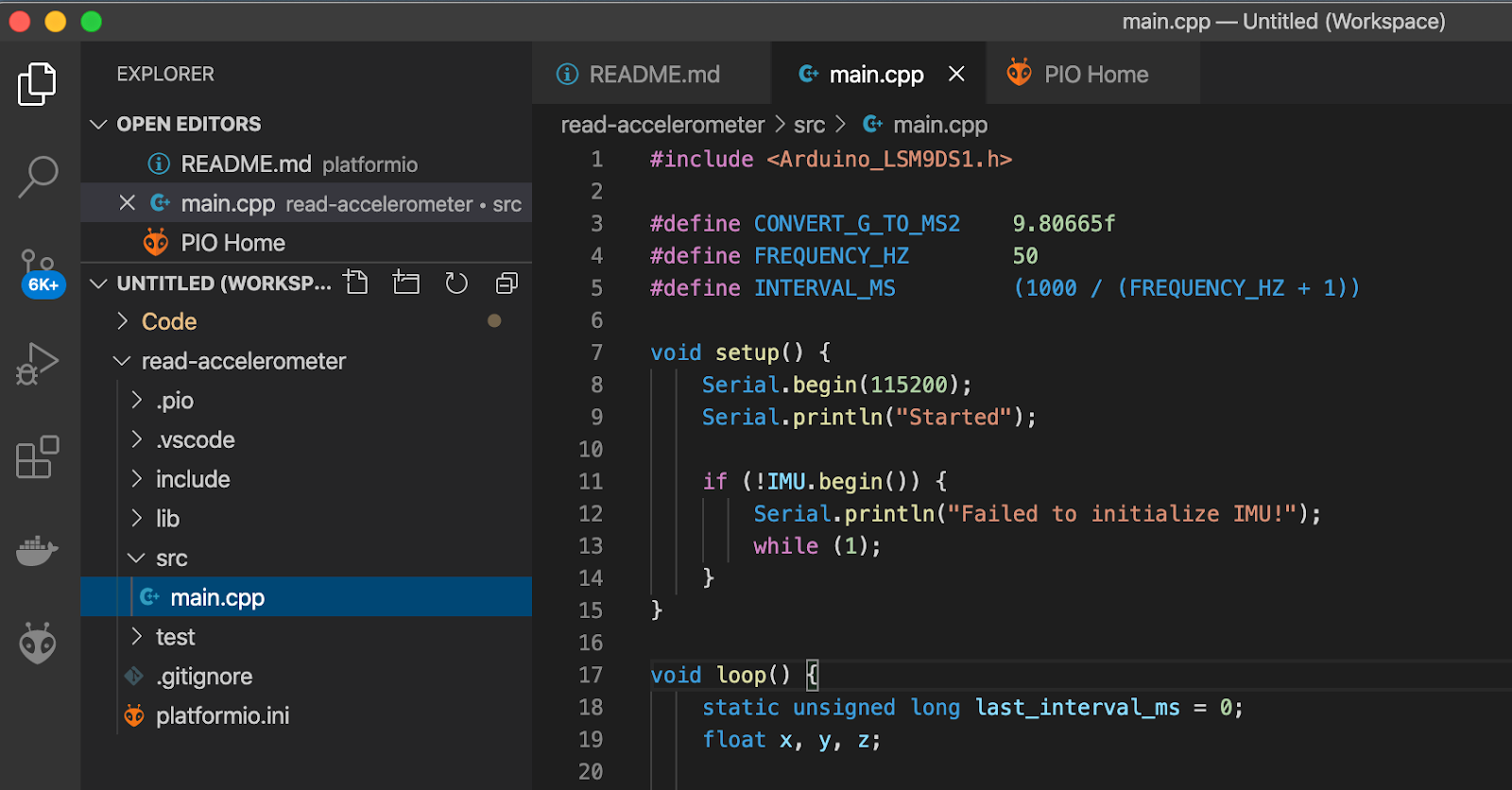

- We use the following code to capture raw accelerometer samples and print them to the serial output. You can find more details on the protocol in the Data Forwarder guide.

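      #include <Arduino_LSM9DS1.h>

      #define CONVERT_G_TO_MS2 9.80665f
      #define FREQUENCY_HZ 50
      #define INTERVAL_MS (1000 / (FREQUENCY_HZ + 1))

      void setup() {
          Serial.begin(115200);
          Serial.println("Started");

          if (!IMU.begin()) {
              Serial.println("Failed to initialize IMU!");
              while (1);
          }
      }

      void loop() {
          static unsigned long last_interval_ms = 0;
          float x, y, z;

          if (millis() > last_interval_ms + INTERVAL_MS) {
              last_interval_ms = millis();

              // Assumed completion of the loop body: read one sample (in g),
              // convert it to m/s^2, and print the axes as one tab-separated line.
              IMU.readAcceleration(x, y, z);
              Serial.print(x * CONVERT_G_TO_MS2);
              Serial.print('\t');
              Serial.print(y * CONVERT_G_TO_MS2);
              Serial.print('\t');
              Serial.println(z * CONVERT_G_TO_MS2);
          }
      }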

Paste this code or your custom one to the main.cpp file of your PlatformIO project:

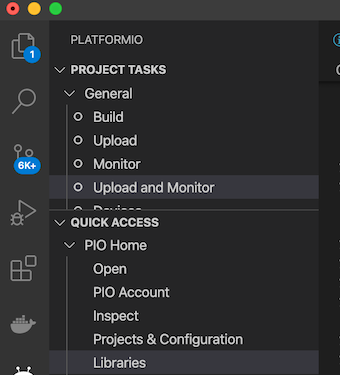
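A note on the timing constants in the sketch: `1000 / (FREQUENCY_HZ + 1)` is an integer division, so `INTERVAL_MS` works out to 19, and because the loop compares with a strict `>`, a new sample is taken every 20 ms at the earliest. The host-side sketch below reproduces that arithmetic in plain C++ with no Arduino headers. `simulate_samples` is a hypothetical helper that replaces `millis()` with a simple counter, and it assumes the sketch stores the current time in `last_interval_ms` each time it samples.

```cpp
#include <cassert>
#include <cstdint>

// Same constants as the data-collection sketch.
constexpr uint32_t FREQUENCY_HZ = 50;
constexpr uint32_t INTERVAL_MS = 1000 / (FREQUENCY_HZ + 1);  // integer division: 19

// Hypothetical helper: counts how many samples the loop() condition would
// trigger during duration_ms, with a 1 ms counter standing in for millis().
// Assumes last_interval_ms is set to the current time on every sample.
uint32_t simulate_samples(uint32_t duration_ms) {
    assert(duration_ms < UINT32_MAX);  // keeps the loop counter from wrapping
    uint32_t last_interval_ms = 0;
    uint32_t samples = 0;
    for (uint32_t now = 0; now <= duration_ms; ++now) {
        if (now > last_interval_ms + INTERVAL_MS) {
            last_interval_ms = now;
            ++samples;
        }
    }
    return samples;
}
```

Calling `simulate_samples(1000)` returns 50, which is consistent with a 50 Hz sampling rate. On real hardware the loop body takes some time to run, so the measured rate can differ slightly.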

- You can then Build and Upload the firmware to your board.

- Run edge-impulse-data-forwarder to select your Edge Impulse project and configure your sensor’s information:

$ edge-impulse-data-forwarder --clean

? To which project do you want to add this device? platformio-accelero

[SER] Detecting data frequency...

[SER] Detected data frequency: 50Hz

? 3 sensor axes detected (example values: [-2.25,7.61,5.32]). What do you want to call them? Separate the names with ’,’: accX, accY, accZ

? What name do you want to give this device? Arduino BLE

[WS ] Device "Arduino BLE" is now connected to project "platformio-accelero"

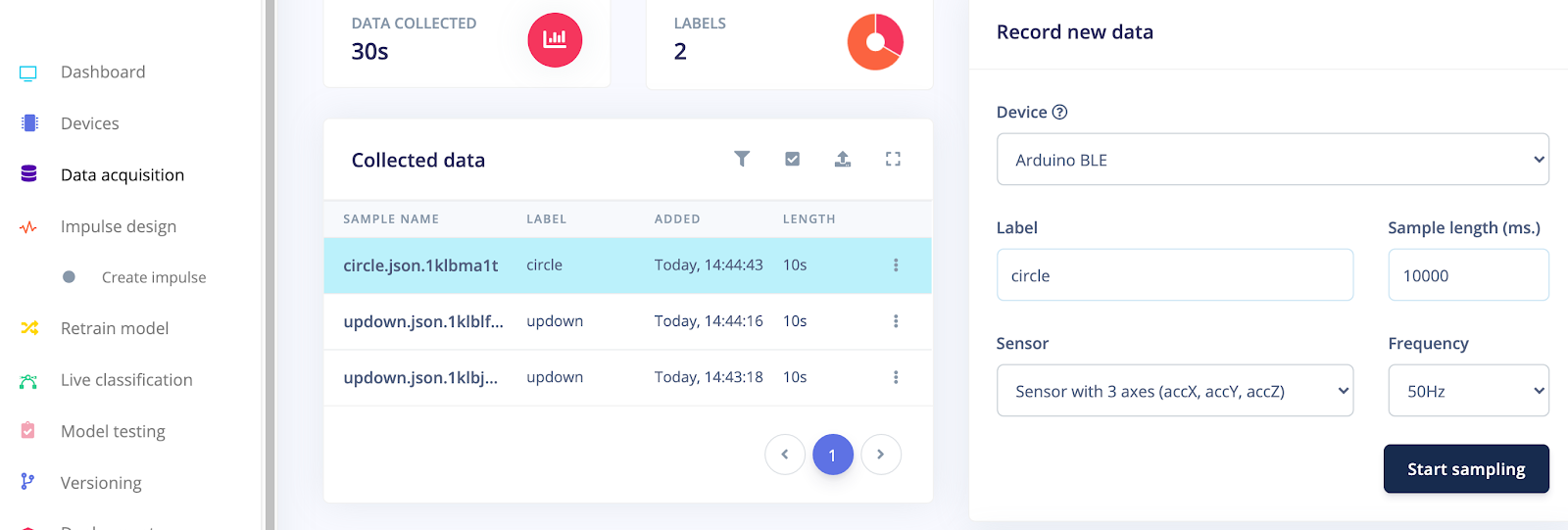

[WS ] Go to https://studio.edgeimpulse.com/studio/9659/acquisition/training to build your machine learning model!- Finally head to your Edge Impulse project, and click Data acquisition. Your development board is now visible under ‘Record new data’.:

- Now on to the fun part: collecting data and building your model. Follow [this tutorial](https://docs.edgeimpulse.com/docs/continuous-motion-recognition) to capture ~10 minutes of accelerometer data covering four different gestures.

Running inferencing on your board

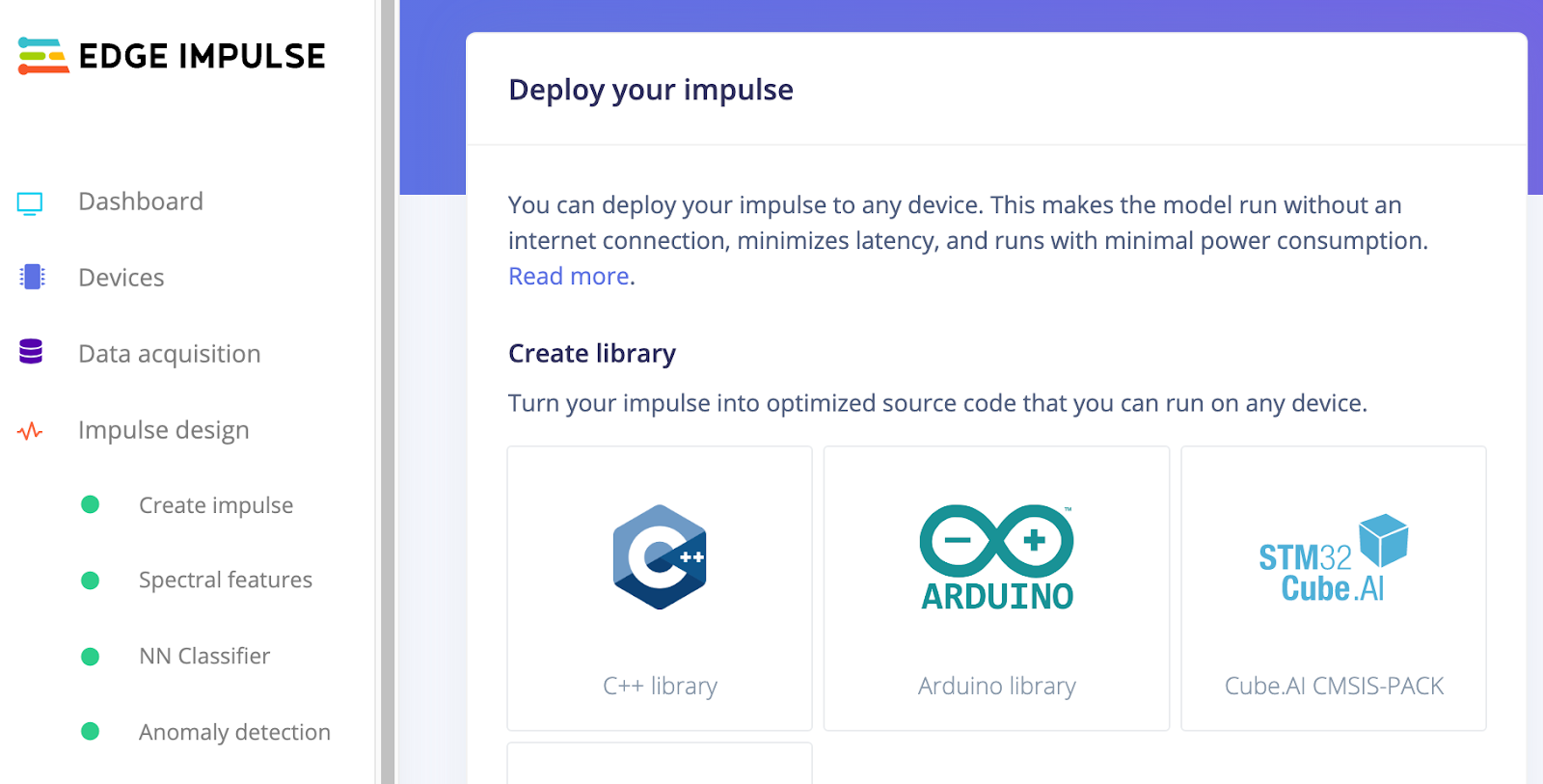

With the model trained, we can now deploy it back to your development board.

- Select and Build the C++ or Arduino library from the Deployment section depending on your target:

- Once the zip archive has been downloaded, navigate to your project root directory and install the library using the PlatformIO CLI, for example:

      pio lib install ei-platformio-accelero-arduino-1.0.20.zip

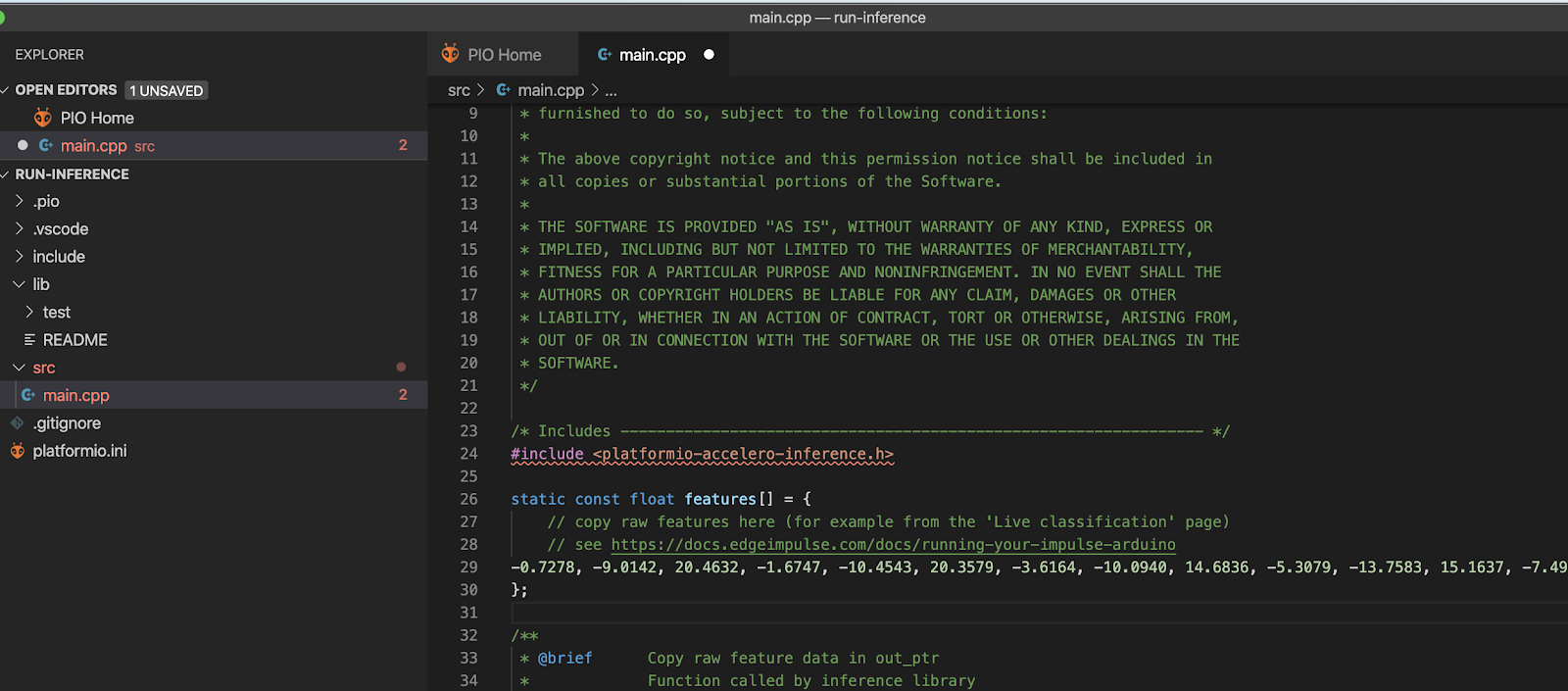

- Create a new PlatformIO project. If your target is an Arduino board, select the static_buffer.ino example from the zip archive and paste the content into the main.cpp file. For other targets such as mbed or generic C++, you can check the guide on Running your impulse locally to get a main.cpp example.

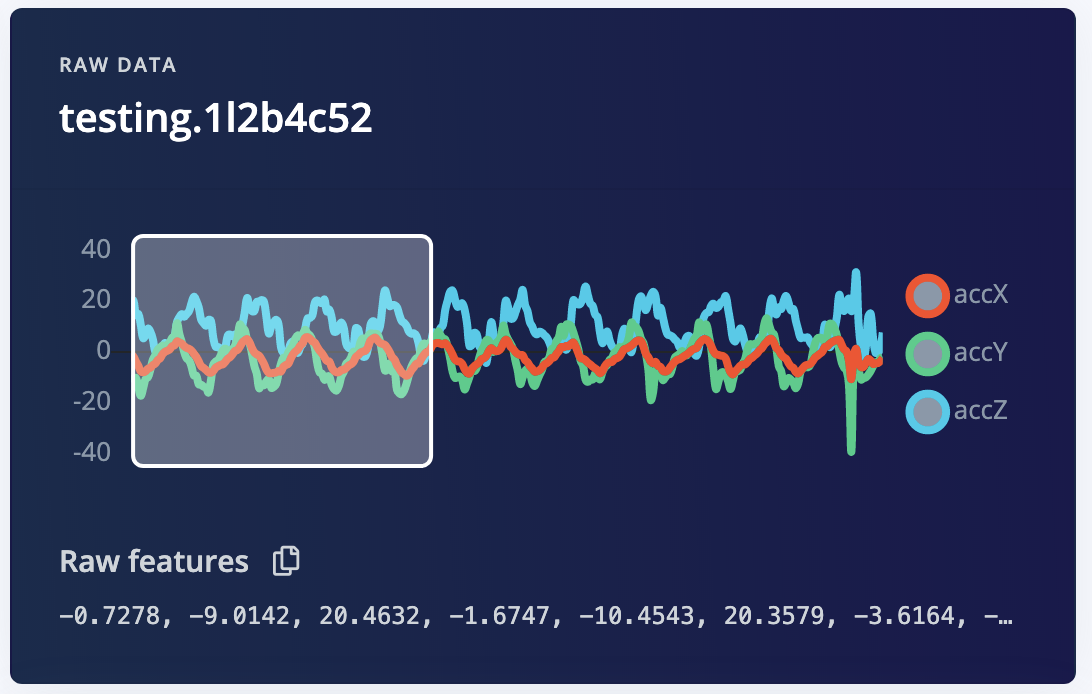

- In the main.cpp, fill in the features[] array with raw features from your sensors.

You can get an example from the Live classification page if your board is connected to your Edge Impulse project:

Or get them directly from your board. Below is a code example that reads raw features from your accelerometer and saves them into a buffer (based on the Arduino nano_ble33_sense_accelerometer.ino sketch):

    // Allocate a buffer here for the values we'll read from the IMU
    float buffer[EI_CLASSIFIER_DSP_INPUT_FRAME_SIZE] = { 0 };
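The declaration above only allocates the buffer. As a rough sketch of how it could then be filled, the function below stores interleaved x, y, z readings and converts them from g to m/s², as the data-collection sketch does. It is written as plain C++ so that it compiles off-device: `read_accel` is a hypothetical stand-in for `IMU.readAcceleration(x, y, z)`, `frame_size` stands in for `EI_CLASSIFIER_DSP_INPUT_FRAME_SIZE`, and `fill_feature_buffer` is our own name, not part of the Edge Impulse library.

```cpp
#include <cassert>
#include <cstddef>
#include <functional>

constexpr float CONVERT_G_TO_MS2 = 9.80665f;

// Hypothetical sensor callback: writes one reading (in g) to x, y, z and
// returns false if the read failed. On the Nano 33 BLE Sense this role
// would be played by IMU.readAcceleration(x, y, z).
using AccelReader = std::function<bool(float &x, float &y, float &z)>;

// Fills buffer with interleaved x, y, z samples converted to m/s^2.
// Returns the number of floats written (stops early if a read fails).
size_t fill_feature_buffer(float *buffer, size_t frame_size,
                           const AccelReader &read_accel) {
    assert(buffer != nullptr);
    assert(frame_size % 3 == 0);  // three axes per sample
    size_t ix = 0;
    for (; ix + 3 <= frame_size; ix += 3) {
        float x = 0.0f, y = 0.0f, z = 0.0f;
        if (!read_accel(x, y, z)) {
            break;
        }
        buffer[ix + 0] = x * CONVERT_G_TO_MS2;
        buffer[ix + 1] = y * CONVERT_G_TO_MS2;
        buffer[ix + 2] = z * CONVERT_G_TO_MS2;
        // On the device, wait here until the next sampling tick so that the
        // readings are spaced at the rate the model was trained on.
    }
    return ix;
}
```

On the board, the callback would simply wrap the IMU read, and the loop would pause between iterations to keep the same sampling rate used during data collection.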

You can then paste the raw features into the main.cpp:
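As a sketch of what that looks like, the fragment below mimics the structure we would expect in the static-buffer example: a `features[]` array holding the pasted values, plus a callback that copies a slice of it on request. The values are short placeholders, and the callback name, signature, and bounds check are assumptions for illustration, so check them against the example in your own zip archive.

```cpp
#include <cassert>
#include <cstddef>
#include <cstring>

// Placeholder values: in a real project, paste the full raw-feature line here.
// Its length must match the frame size your impulse expects.
static const float features[] = {
    -2.25f, 7.61f, 5.32f,  // sample 0: accX, accY, accZ
    -2.31f, 7.58f, 5.40f,  // sample 1 (made-up values)
};
static const size_t FEATURE_COUNT = sizeof(features) / sizeof(features[0]);

// Copies `length` floats starting at `offset` into out_ptr.
// Returns 0 on success, -1 if the requested range is out of bounds.
int raw_feature_get_data(size_t offset, size_t length, float *out_ptr) {
    assert(out_ptr != nullptr);
    if (offset > FEATURE_COUNT || length > FEATURE_COUNT - offset) {
        return -1;
    }
    std::memcpy(out_ptr, features + offset, length * sizeof(float));
    return 0;
}
```

Serving the data through a callback lets the classifier request the input in slices instead of needing a second copy of the whole window.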

- Finally, select Upload and Monitor to upload the firmware and display the results in the terminal.

      --- Miniterm on /dev/cu.usbmodem142201 9600,8,N,1 ---
      --- Quit: Ctrl+C | Menu: Ctrl+T | Help: Ctrl+T followed by Ctrl+H ---
      Edge Impulse standalone inferencing (Arduino)
      run_classifier returned: 0
      Predictions (DSP: 21 ms., Classification: 0 ms., Anomaly: 1 ms.):
      [0.00000, 0.99609, 0.00000, 159.501]
      Edge Impulse standalone inferencing (Arduino)
      run_classifier returned: 0
      Predictions (DSP: 21 ms., Classification: 0 ms., Anomaly: 1 ms.):
      [0.00000, 0.99609, 0.00000, 159.501]

Congrats! You have built your first TinyML model, and you don’t have to stop here. You can apply TinyML in many other areas, from [sound recognition](https://docs.edgeimpulse.com/docs/audio-classification) to [sight](https://docs.edgeimpulse.com/docs/image-classification) and even [smell](https://twitter.com/kartben/status/125879179307381555).

Built something interesting? Got questions? Let us know in the Edge Impulse forum. We can’t wait to see what you’ll build!

---

Aurelien Lequertier, Lead User Success Engineer at Edge Impulse