In today’s fast-paced retail industry, keeping accurate inventory counts is an increasingly difficult task. The rise of e-commerce, growing consumer demand, and global supply chain logistics all make it harder for businesses to keep track of their stock levels.

One of the biggest challenges in retail, warehouse, and manufacturing inventory management is the potential for human error. Employees may make mistakes when counting inventory, leading to discrepancies in stock levels. This can lead to overstocking or stockouts, resulting in lost sales and wasted resources.

Another major challenge is the constant turnover of inventory. Businesses that sell fast-moving consumer goods, such as electronics or fashion, must constantly restock their shelves to keep up with consumer demand. This makes it difficult to accurately track inventory levels and predict future demand.

A third challenge is the lack of real-time inventory visibility. Many businesses still rely on manual methods, such as paper-based inventory sheets, to keep track of stock levels. This can lead to delays in identifying discrepancies or errors, and can make it difficult for managers to make informed decisions about inventory management.

All of these problems stem from the fact that inventory management is still a slow, laborious, manual counting process for many businesses. When the problem is an inefficient, manual process, Solomon Githu is a firm believer that the solution is almost always machine learning. He recently took the problem of maintaining an accurate inventory head-on with a project that uses computer vision and machine learning to automate the job and ensure accurate, up-to-the-minute counts. The system can be deployed in a warehouse, on the shelves of a retail store, or anywhere else it is needed.

Githu was determined to make the device not only simple to use but also easy to build, so that it can be deployed far and wide. For this reason, he chose the Edge Impulse machine learning development platform to create the analysis pipeline and deploy it to the hardware. The plan was to develop an object detection model, because that would allow any number of items to be identified in an image, such as all of the items for sale on a set of shelves.

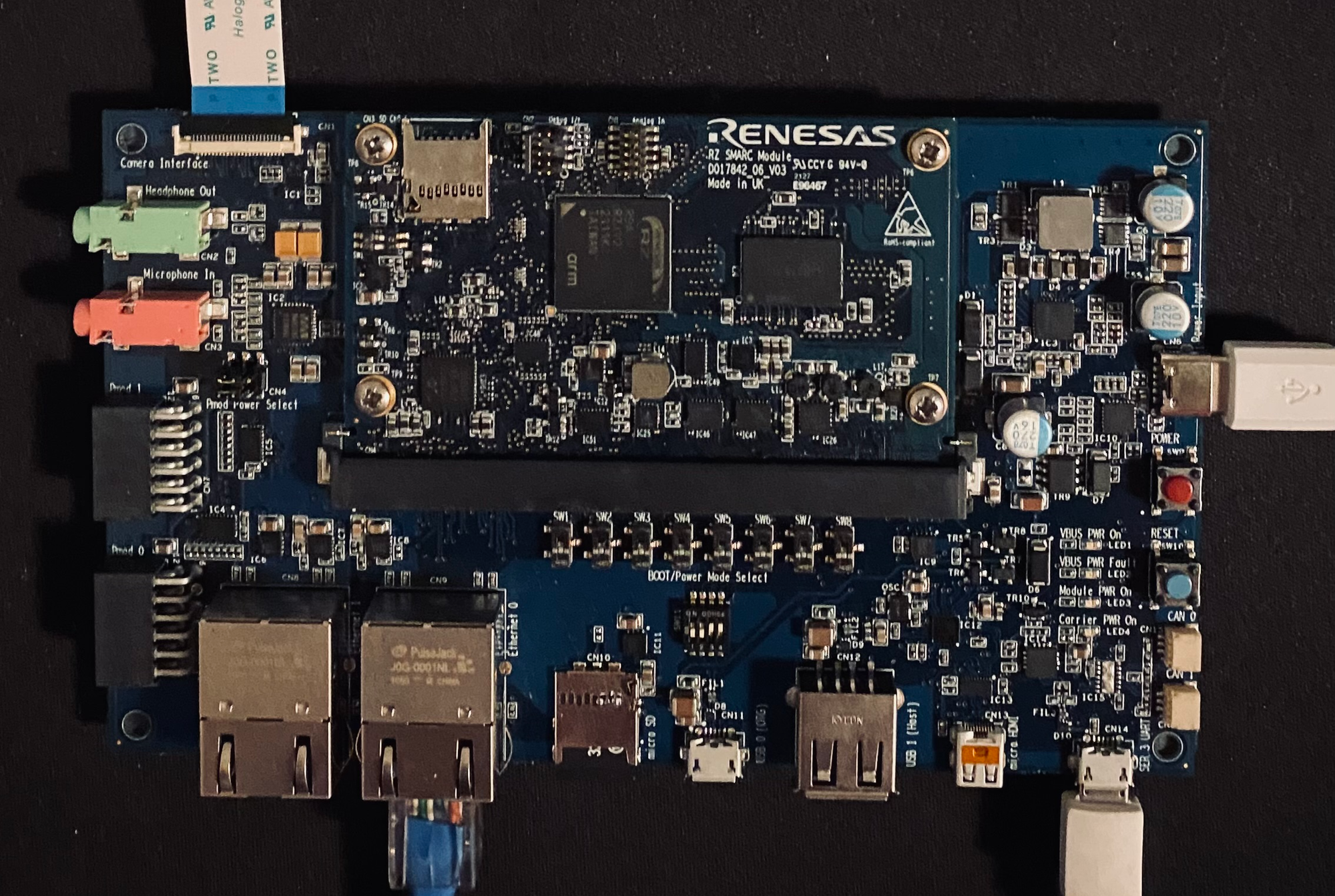

The Renesas RZ/V2L Evaluation Kit was chosen to drive this device because it is well suited to computer vision applications. It sports a dual-core Arm Cortex-A55 processor running at 1.2 GHz and 2 GB of SDRAM to power through image analysis algorithms. It also features the DRP-AI accelerator, which offers both high performance and low power consumption when running machine learning algorithms. With the 5-megapixel Google Coral Camera that comes with the kit, all the necessary hardware arrives in one box.

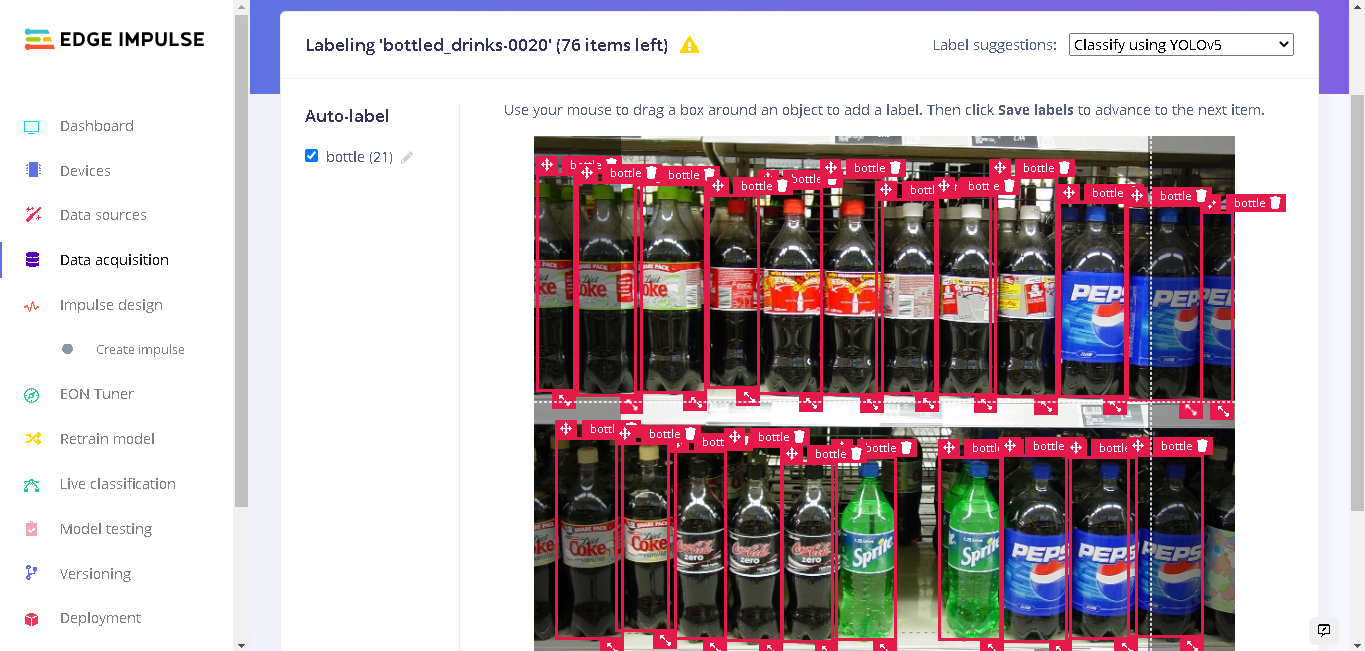

Githu tracked down a public dataset that focuses on object detection in densely packed scenes. From this dataset, he extracted images that fell into two broad categories: bottles and boxed/carton drinks. Just under 200 images were collected in total. Before the solution could be deployed in the real world, a much larger dataset covering many more items would need to be assembled, but the dataset collected by Githu is sufficient to prove the concept.

All of the images were uploaded to an Edge Impulse Studio project using the data acquisition tool. Before an object detection model can learn to recognize the objects in an image, those objects first need to be labeled by drawing bounding boxes around them. Even for a relatively small dataset such as this one, that can get tiring very quickly. Fortunately, the data labeling tool offers several options for AI-powered assistance in drawing these boxes. Githu selected the YOLOv5 option, which enables the tool to suggest where the bounding boxes should be and what the label of each object should be. With this boost, it is typically only necessary to make an occasional adjustment to the suggestions.

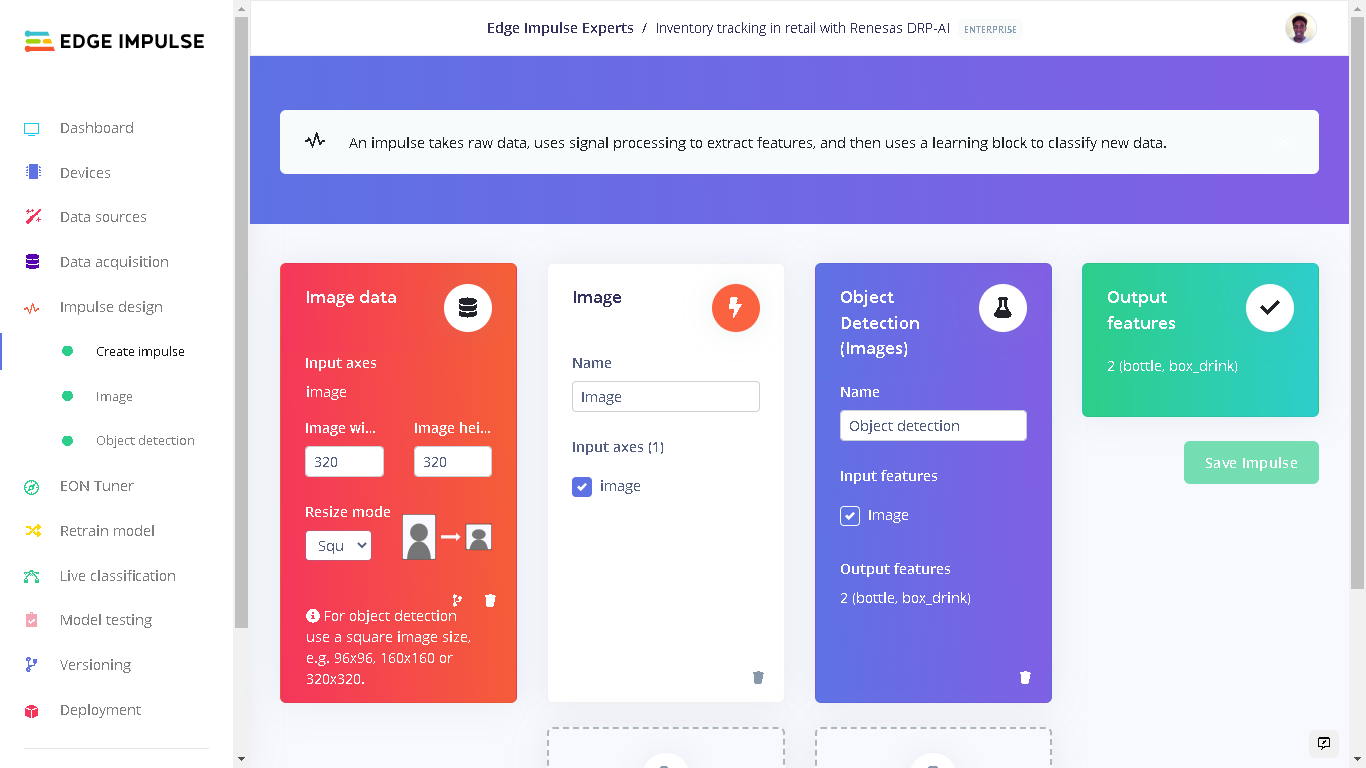

Next up was the design of the impulse, which defines the machine learning analysis pipeline. The first step reduces the input image size to 320 x 320 pixels, which is relatively large for edge applications, but given the power of the Renesas RZ/V2L Evaluation Kit, that will not be a problem. Next, the most significant features are calculated and forwarded into an object detection neural network. A pretrained YOLOv5 model was selected as the starting point to leverage the knowledge that it already contains. It was then further trained to recognize bottles and boxed drinks.

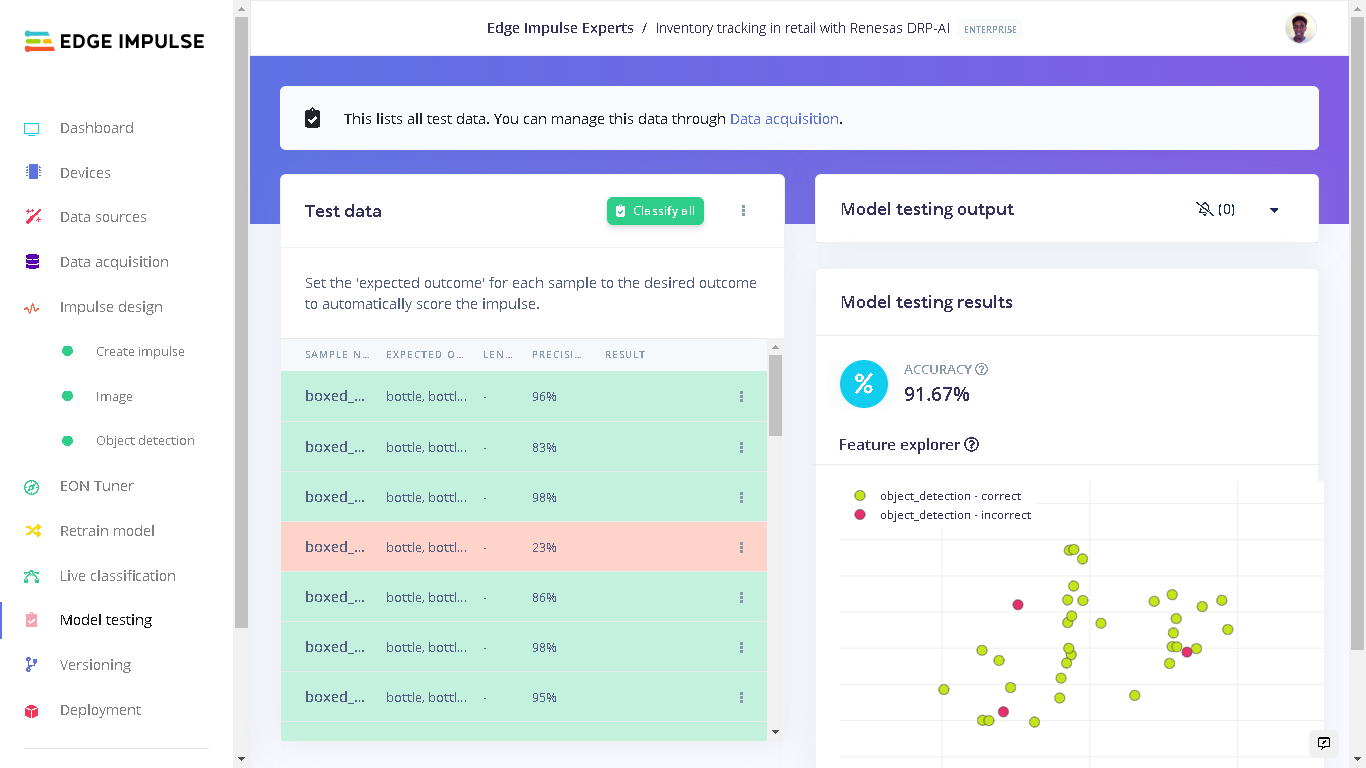

YOLOv5 models typically require thousands of training images to achieve a high level of accuracy, so it was a pleasant surprise to see a precision score of over 89% achieved with only 145 training images. That excellent result was confirmed by the model testing tool, which only considers samples that were not part of the training process. This test reported a precision score of 91.67%. A larger batch of data would be expected to further improve these results, but this level of precision is more than good enough to prove that the methods work.
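As a reminder of what a precision figure like this measures, here is a minimal sketch. The article does not spell out how Edge Impulse Studio computes its score, so this uses the textbook definition: correct detections divided by all detections made. The counts in the usage example are hypothetical, chosen only because they happen to round to a similar figure.

```python
def precision(true_positives: int, false_positives: int) -> float:
    """Textbook precision: correct detections / all detections made."""
    predicted = true_positives + false_positives
    if predicted == 0:
        # No detections at all: precision is undefined, so return 0.0
        # as a convention for this sketch.
        return 0.0
    return true_positives / predicted


# Hypothetical counts, not taken from the project.
score = precision(true_positives=11, false_positives=1)
print(f"{score:.2%}")  # prints 91.67%
```

A score near 92% read this way would mean that roughly one in twelve boxes drawn by the model does not correspond to a real item. That matters for inventory work, where every false detection inflates the count.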

The Renesas RZ/V2L is a Linux-based computer (Edge Impulse has a guide to creating a Linux image), so the Edge Impulse CLI can be installed directly on it. With the CLI installed, it only takes a few commands to deploy the entire machine learning analysis pipeline to the hardware. Doing so removes any requirements for wireless connectivity or cloud computing resources.

Githu wrote a simple web application to demonstrate the abilities of the object detection model. The application displays a live image of the items in front of the camera, along with a count of each item that is present. As items are added or removed, the count is updated immediately.
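The counting step in an application like this can be sketched in a few lines. The snippet below is an illustration, not Githu's code. It assumes each detection arrives as a dictionary with a `label` and a confidence `value`. The label names and the `min_confidence` threshold of 0.5 are placeholders.

```python
from collections import Counter


def count_items(detections, min_confidence=0.5):
    """Count detected objects per label, ignoring low-confidence boxes."""
    counts = Counter()
    for det in detections:
        if det["value"] >= min_confidence:
            counts[det["label"]] += 1
    return dict(counts)


# Hypothetical detections for a single camera frame.
frame = [
    {"label": "bottle", "value": 0.93},
    {"label": "bottle", "value": 0.81},
    {"label": "box_drink", "value": 0.77},
    {"label": "bottle", "value": 0.32},  # below the threshold, ignored
]
print(count_items(frame))  # prints {'bottle': 2, 'box_drink': 1}
```

Recomputing the counts from scratch on every frame, rather than tracking individual objects, is what lets the display update as soon as an item is added or removed.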

With the YOLOv5 model, the system ran at about four frames per second. This is quite acceptable for an inventory counting device, but might be a bit too slow for other applications. Githu ran some additional tests using Edge Impulse’s FOMO object detection algorithm and found that it ran far faster, so that is something to keep in mind if you have the need for speed.
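For anyone who wants to compare models on their own hardware, a throughput figure like this can be measured with a small timing harness. The sketch below is generic and makes no assumptions about Edge Impulse's tooling. `infer` stands in for whatever function runs the model on one frame, and `fake_infer` is a placeholder that just sleeps.

```python
import time


def measure_fps(infer, frames, clock=time.perf_counter):
    """Run infer() on every frame and return frames processed per second."""
    start = clock()
    processed = 0
    for frame in frames:
        infer(frame)
        processed += 1
    elapsed = clock() - start
    return processed / elapsed if elapsed > 0 else float("inf")


def fake_infer(frame):
    """Placeholder for a real model call; sleeps to simulate work."""
    time.sleep(0.01)


fps = measure_fps(fake_infer, range(20))
print(f"{fps:.1f} frames per second, {1000 / fps:.0f} ms per frame")
```

At four frames per second, each frame takes about 250 ms to process, which is why a shelf count can still feel immediate even though the video itself would look choppy.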

Inventory management may or may not be of interest to you, but chances are that you have an idea for something that you would like to automate. The methods described in the project documentation can be adapted to many different scenarios, so it is worth a read, whatever your goals may be.
