Recently I had an hour between meetings and noticed the Himax WE-I development board sitting on top of my docking station. I had put it there after receiving the board so I would not lose it, as it is very compact.

The Himax board contains both an ASIC and a DSP, along with a 640 x 480 VGA image sensor, a 3-axis accelerometer, and two microphones (left and right). I had previously been talking to a client about using machine learning to detect and classify object orientation on a production line. Knowing that Edge Impulse supported this board, I wondered how quickly I could create an ML system able to detect the orientation of an object: in this case, a can of Coke, which I also happened to have on my desk.

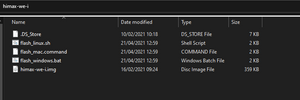

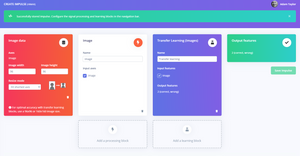

The first step in the process was to create a new project targeting the Himax WE-I. Because the board is supported by Edge Impulse, the next step was to download the Himax WE-I application, which supports gathering data directly into the Edge Impulse project.

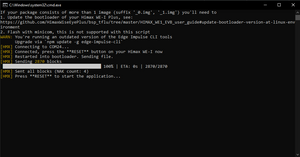

Once the application has been downloaded, it needs to be flashed to the Himax device: connect the device over USB to the development machine and run the .bat, .sh, or .command script, depending on your development system.

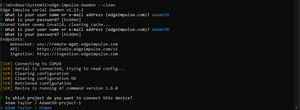

Once the Himax has the data-gathering application installed, the next step is to connect the Edge Impulse daemon so that we can gather data samples into the project.

In Edge Impulse Studio, I had created a new project. To connect the Edge Impulse daemon to that project, I started it with the `--clean` option, which lets you select the target Edge Impulse project.

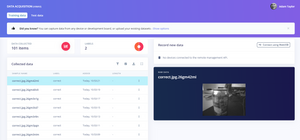

Once the daemon is running, we can capture images for the training set. For this application, I collected 50 images of the object correctly orientated and 50 with it incorrectly orientated. As I took the images, I moved the Himax board around so that each picture was slightly different from the previous one.

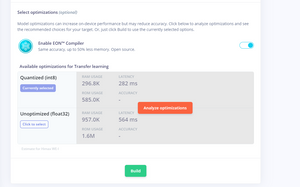

With a simple data set of 100 images, the next stage is to define the impulse. In the impulse, I set the images to be resized to 96x96 pixels, followed by a simple transfer learning block to perform the classification.
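The impulse scales each 640 x 480 camera frame down to the 96x96 input the network expects. Edge Impulse offers more than one way to do this and the post does not say which was used, so the following is only a minimal sketch assuming a center crop to a square followed by nearest-neighbour sampling, on a single-channel image stored as a list of rows. The function name `center_crop_resize` is hypothetical.

```python
def center_crop_resize(image, size=96):
    """Center-crop a 2D single-channel image (a list of rows) to a square,
    then downsample it to size x size with nearest-neighbour sampling."""
    height = len(image)
    width = len(image[0])
    side = min(height, width)          # largest square that fits the frame
    top = (height - side) // 2         # offsets that center the square crop
    left = (width - side) // 2
    out = []
    for y in range(size):
        # Map each output row back to a source row inside the square crop.
        src_y = top + (y * side) // size
        row = image[src_y]
        # Likewise map each output column back to a source column.
        out.append([row[left + (x * side) // size] for x in range(size)])
    return out


# Example: a 480-row x 640-column frame whose pixel value is its column index.
frame = [[x for x in range(640)] for _ in range(480)]
small = center_crop_resize(frame)
print(len(small), len(small[0]))  # 96 96
```

Cropping keeps the aspect ratio but discards the left and right edges of the frame; squashing the whole frame instead would keep everything in view at the cost of distorting the shape of the can.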

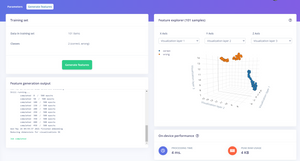

With the impulse defined and the data gathered, the next step was to generate features from the training set. I was moving quite fast on this application, so I did not split off a separate validation set.
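Skipping a validation set is fine for a quick experiment, but holding some images back gives a fairer check of accuracy. As a minimal sketch (the `stratified_split` helper and the `right`/`wrong` label names are hypothetical, not part of Edge Impulse), a stratified split keeps the 50/50 balance between orientations in both sets:

```python
import random
from collections import defaultdict


def stratified_split(samples, val_fraction=0.2, seed=42):
    """Split (sample_id, label) pairs into training and validation lists,
    holding out roughly val_fraction of the samples for each label."""
    by_label = defaultdict(list)
    for sample_id, label in samples:
        by_label[label].append((sample_id, label))

    rng = random.Random(seed)  # fixed seed so the split is reproducible
    train, val = [], []
    for label in sorted(by_label):
        group = by_label[label][:]
        rng.shuffle(group)
        n_val = round(len(group) * val_fraction)
        val.extend(group[:n_val])
        train.extend(group[n_val:])
    return train, val


# 50 images per orientation, as in the data set above.
samples = [(f"right_{i}", "right") for i in range(50)] + \
          [(f"wrong_{i}", "wrong") for i in range(50)]
train, val = stratified_split(samples)
print(len(train), len(val))  # 80 20
```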

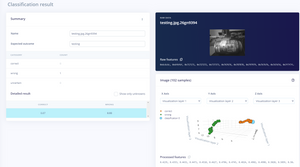

The generated features for the training set show that the correct and incorrect orientations are cleanly separated into distinct groups.
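"Cleanly separated" can be turned into a rough number. The sketch below uses a simple heuristic (hypothetical, not a metric Edge Impulse reports): the distance between the two class centroids divided by the average distance of points from their own centroid. It assumes you have the plotted 2D feature coordinates for each class; the function name `separation_ratio` is illustrative.

```python
import math


def separation_ratio(points_a, points_b):
    """Distance between the two class centroids divided by the mean distance
    of points from their own centroid. Larger values mean tighter, better
    separated clusters; values near zero mean the classes overlap."""
    def centroid(points):
        n = len(points)
        dims = len(points[0])
        return tuple(sum(p[d] for p in points) / n for d in range(dims))

    ca, cb = centroid(points_a), centroid(points_b)
    between = math.dist(ca, cb)
    spread = [math.dist(p, ca) for p in points_a] + \
             [math.dist(p, cb) for p in points_b]
    within = sum(spread) / len(spread)
    return between / within if within > 0 else math.inf


# Two small, well-separated clusters.
correct = [(0, 0), (0, 2), (2, 0), (2, 2)]
incorrect = [(10, 0), (10, 2), (12, 0), (12, 2)]
print(separation_ratio(correct, incorrect))  # about 7.07
```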

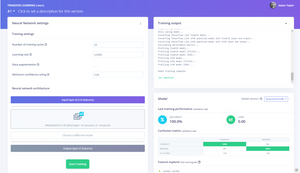

The next stage is to train the neural network. The output of this stage looks good, as the images are correctly classified as right or wrong.

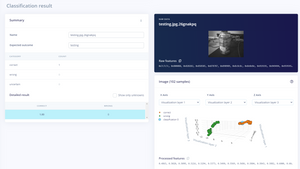

Of course, once the network is trained, we want to try classifying live images. We can do this by using the Himax board to capture new images and feed them back into the impulse. Doing this with the object oriented both correctly and incorrectly showed that detection works well on live images.
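Each live classification ends with a decision between the two orientations. A minimal sketch of that decision, assuming the model returns one raw score (logit) per class: a softmax converts the scores to probabilities, and a confidence threshold (0.6 here, an arbitrary hypothetical value) flags frames the model is unsure about instead of forcing a right/wrong answer. If the deployed model already outputs probabilities, the softmax step can be dropped. The `classify` function is illustrative, not Edge Impulse API.

```python
import math


def classify(logits, labels, threshold=0.6):
    """Convert raw model scores to probabilities with a softmax and return
    (label, probability); the label is 'uncertain' below the threshold."""
    peak = max(logits)  # subtract the max before exp() for numerical stability
    exps = [math.exp(v - peak) for v in logits]
    total = sum(exps)
    probs = [e / total for e in exps]
    best = max(range(len(probs)), key=probs.__getitem__)
    label = labels[best] if probs[best] >= threshold else "uncertain"
    return label, probs[best]


print(classify([2.0, 0.0], ["right", "wrong"]))  # ('right', 0.88...)
print(classify([0.1, 0.0], ["right", "wrong"]))  # ('uncertain', 0.52...)
```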

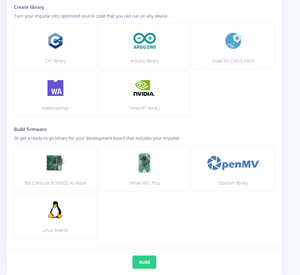

The final stage is to generate the deployable code for the Himax WE-I Plus.

This image can now be flashed to the device and used to classify the object's orientation as correct or incorrect. All told, I think it took me longer to write this blog than it did to create the application!

This article was first published by Adam Taylor on adiuvoengineering.com.