Developing a high-quality dataset for object detection typically involves significant data collection and labeling. This can be particularly difficult for industrial applications such as production-line monitoring or stock keeping. Shutting down your production line to install cameras is costly and a hard sell in the early stages of a project. It’s also tricky to expand your dataset with new items once you are in production. Finally, relying on images from the production environment for your training dataset requires intensive data labeling. We’ve recently shown how zero-shot and generative AI models can help automate this labeling task, but for some use cases there is a much simpler, more scalable approach available.

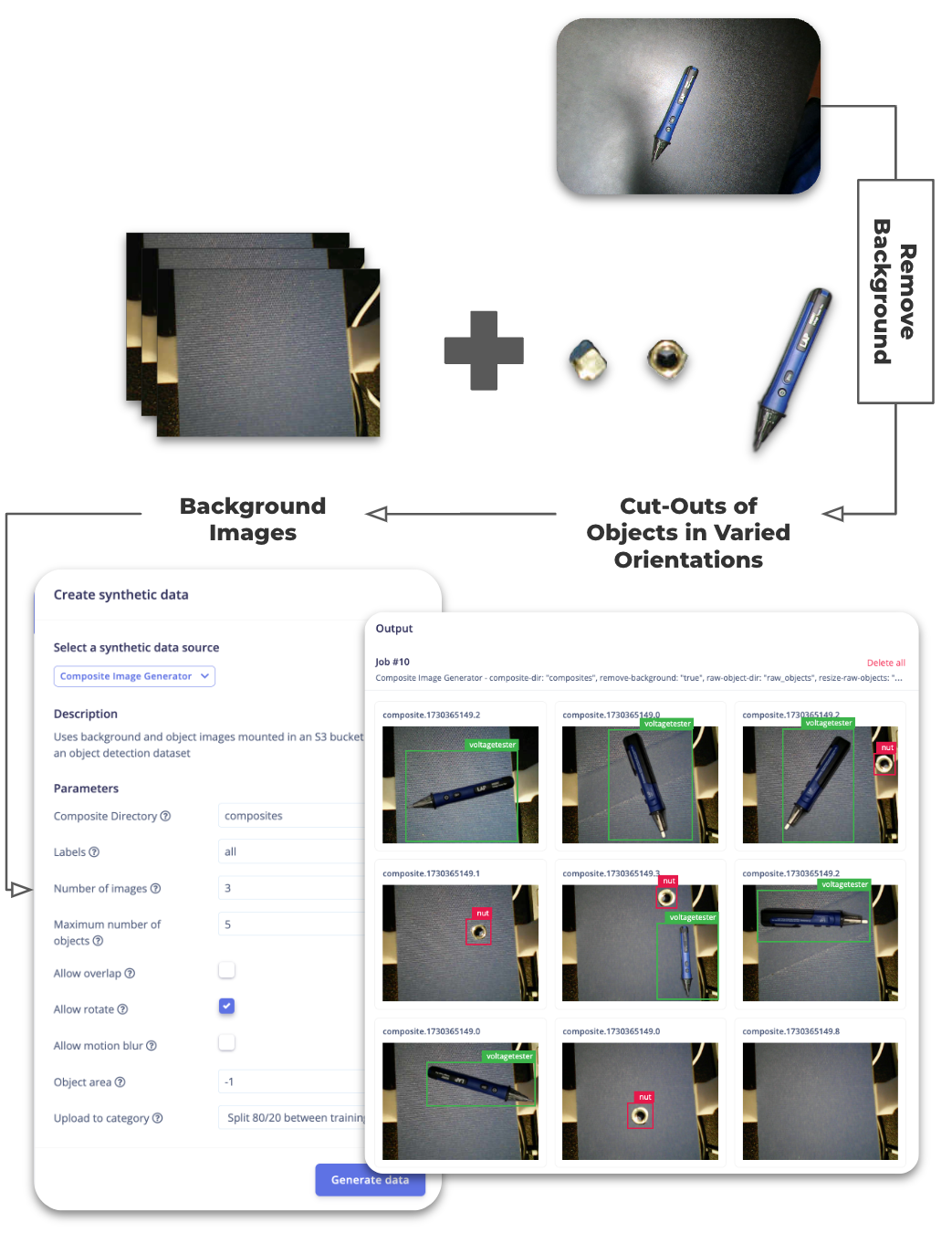

You can quickly generate labeled object detection images by compositing object cutouts onto background images, creating realistic scenes without extensive on-site data capture. We previously explored this technique in a Python notebook. Now that Synthetic Data Blocks are available in Edge Impulse, we have built an example Synthetic Data Block that performs the same task.

Let’s explore the core features, setup, and customization options for using the Composite Image Generator to create datasets tailored to your project’s needs.

How the Composite Image Generator Works

The Composite Image Generator combines background images with object cutouts, creating composites that simulate real-world scenarios. This transformation block is designed to allow maximum flexibility over how objects appear within the images, making it ideal for training robust object detection models.

Here’s how it works:

- Upload Background and Object Images: Start with folders containing background images (such as conveyor belts) and object images (parts, tools, etc.).

- Upload Raw Objects & Remove Background: If you have images of your object on a plain background, this block can use rembg to automatically remove the background and crop down the image to just your object.

- Define Parameters and Generate Data: The tool lets you control key parameters like the maximum number of objects per image, allowed overlap, and object rotation. You can also introduce image effects like motion blur and lens warping to better match your production environment. This is discussed in more detail below.

- Review and Validate: Synthetic images with bounding boxes are automatically generated, enabling you to review the dataset in Edge Impulse Studio for immediate feedback on data quality.
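The placement step above can be sketched in a few lines of plain Python. This is only an illustration of the idea, not the block's actual implementation: the function names, the parameter names (`max_objects`, `max_overlap`, `max_rotation`) and the overlap measure (intersection area divided by the smaller box's area) are all assumptions made for this example. It picks a random rotation for each cutout, computes the axis-aligned box that encloses the rotated cutout, and retries the position until the overlap limit is respected.

```python
import math
import random

def rotated_size(w, h, angle_deg):
    """Size of the axis-aligned box enclosing a w x h cutout rotated by angle_deg."""
    a = math.radians(angle_deg)
    c, s = abs(math.cos(a)), abs(math.sin(a))
    return w * c + h * s, w * s + h * c

def overlap_fraction(a, b):
    """Intersection area divided by the smaller box's area; boxes are (x, y, w, h)."""
    ix = min(a[0] + a[2], b[0] + b[2]) - max(a[0], b[0])
    iy = min(a[1] + a[3], b[1] + b[3]) - max(a[1], b[1])
    if ix <= 0 or iy <= 0:
        return 0.0
    return (ix * iy) / min(a[2] * a[3], b[2] * b[3])

def place_objects(bg_w, bg_h, cutouts, max_objects=5, max_overlap=0.1,
                  max_rotation=180, tries=50, rng=None):
    """Return (label, angle, (x, y, w, h)) placements for one composite image.

    cutouts is a list of (label, width, height) tuples (hypothetical format).
    """
    rng = rng or random.Random()
    placed = []
    for _ in range(rng.randint(1, max_objects)):
        label, w, h = rng.choice(cutouts)
        for _ in range(tries):
            angle = rng.uniform(-max_rotation, max_rotation)
            bw, bh = rotated_size(w, h, angle)
            if bw > bg_w or bh > bg_h:
                continue  # rotated cutout does not fit on this background
            box = (rng.uniform(0, bg_w - bw), rng.uniform(0, bg_h - bh), bw, bh)
            if all(overlap_fraction(box, other[2]) <= max_overlap for other in placed):
                placed.append((label, angle, box))
                break  # placed successfully, move on to the next object
    return placed
```

Because each bounding box is known at the moment an object is placed, the labels come for free. For example, `place_objects(640, 480, [("bolt", 60, 40), ("nut", 30, 30)])` returns up to five labeled boxes that can be written out alongside the composited image.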

Adding Visual Effects to your Synthetic Data

It is important that your training dataset matches the production environment as closely as possible. Keeping this in mind while generating synthetic data can lead to a much more realistic and usable dataset. In this block we’ve added a couple of examples which illustrate this point.

Motion Blur

In this example use case we’re generating data for a conveyor belt tracking system. In production the conveyor belt will be moving, which affects our dataset because moving objects often show motion blur. This is easy to simulate and add to the synthetic data. In this block you can add motion blur of random strength to your images in a particular direction:

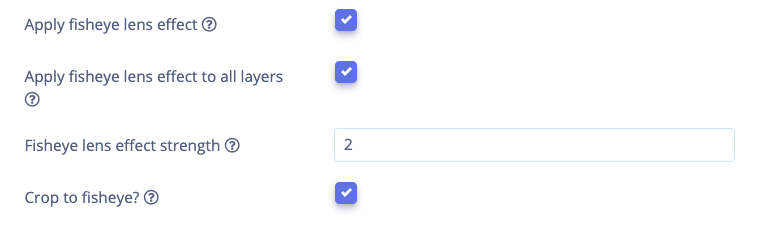

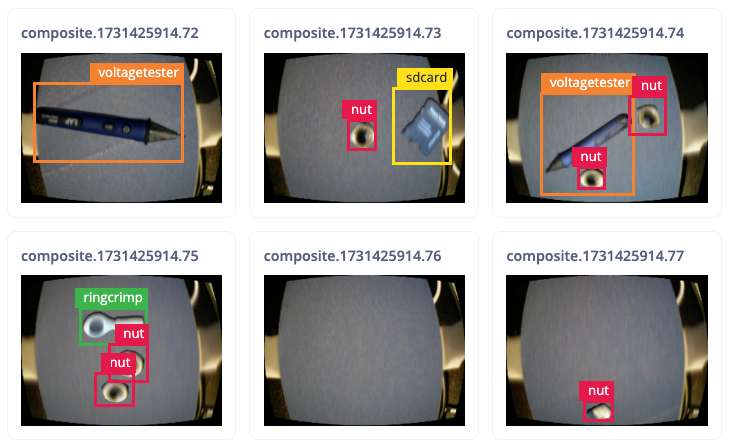
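As a rough illustration of directional motion blur, the sketch below averages a number of samples along a chosen direction for every pixel of a grayscale image stored as a list of rows. It is a minimal pure-Python example, not the code used in the block; `motion_blur`, `random_motion_blur`, `length`, `direction` and `max_length` are names invented here, and a real pipeline would more likely use an image library's convolution routine.

```python
import random

def motion_blur(image, length, direction=(1, 0)):
    """Average `length` samples along `direction` for every pixel.

    image is a list of rows of grayscale values; direction is a (dx, dy) step
    in pixels. Samples that fall outside the image are clamped to the nearest edge.
    """
    h, w = len(image), len(image[0])
    dx, dy = direction
    # Sample offsets centred on the pixel, e.g. [-1, 0, 1] for length 3.
    offsets = [i - (length - 1) / 2 for i in range(length)]
    out = []
    for y in range(h):
        row = []
        for x in range(w):
            total = 0.0
            for t in offsets:
                sx = min(max(int(round(x + t * dx)), 0), w - 1)
                sy = min(max(int(round(y + t * dy)), 0), h - 1)
                total += image[sy][sx]
            row.append(total / length)
        out.append(row)
    return out

def random_motion_blur(image, max_length=9, direction=(1, 0), rng=None):
    """Blur with a random odd length between 1 (no blur) and max_length."""
    rng = rng or random.Random()
    length = rng.randrange(1, max_length + 1, 2)  # odd lengths keep the kernel centred
    return motion_blur(image, length, direction)
```

Drawing a fresh strength for each generated image, as `random_motion_blur` does, gives the model a range of blur levels rather than a single fixed one. Setting `direction` to match the belt's direction of travel in the camera frame keeps the effect plausible.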

Fisheye Lens Effects

Wide-angle or fisheye lenses can be used to increase the field of view of your camera. This is useful for covering more area with a single device, but it can cause problems when training an object detection model. These lenses distort the shape of objects near the edge of their field of view; if the model has not seen this distortion during training, it may struggle to detect the warped objects. This is often a problem when using synthetic data or public datasets that don’t contain fisheye images.

When generating synthetic data we can apply these lens effects to mimic the production environment. In this block a fisheye lens effect can be applied to either the whole image or just the objects (in case your backgrounds are already warped). The block also automatically adjusts the bounding boxes of warped objects.
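To show why the bounding boxes need adjusting, here is a small sketch under a deliberately simple radial distortion model, where each point is pulled towards the image centre by a factor of 1 / (1 + k r²) and r is the distance from the centre normalised by the half-diagonal. The model, the strength `k` (assumed to lie between 0 and 1) and the function names are assumptions for illustration; the block's actual lens model may differ. The new box is found by warping points sampled along the original box's edges and taking their extent.

```python
import math

def warp_point(x, y, width, height, k=0.3):
    """Map a pixel through a simple barrel distortion centred on the image."""
    cx, cy = width / 2, height / 2
    norm = math.hypot(cx, cy)  # half-diagonal, so image corners have r = 1
    dx, dy = (x - cx) / norm, (y - cy) / norm
    scale = 1.0 / (1.0 + k * (dx * dx + dy * dy))
    return cx + dx * norm * scale, cy + dy * norm * scale

def warp_box(box, width, height, k=0.3, samples=16):
    """Return the axis-aligned (x, y, w, h) box enclosing the warped input box."""
    x, y, w, h = box
    pts = []
    for i in range(samples + 1):
        t = i / samples
        # Sample all four edges; straight edges become curves after warping.
        pts += [(x + t * w, y), (x + t * w, y + h),
                (x, y + t * h), (x + w, y + t * h)]
    warped = [warp_point(px, py, width, height, k) for px, py in pts]
    xs = [p[0] for p in warped]
    ys = [p[1] for p in warped]
    return min(xs), min(ys), max(xs) - min(xs), max(ys) - min(ys)
```

Sampling the edges rather than only the four corners matters because a straight edge bows after warping, so its extreme point can lie between the corners. With this model a box near the corner of a 640 x 480 image, such as `(500, 350, 100, 100)`, both shrinks and shifts towards the centre, while a box at the centre barely changes.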

Using the Composite Image Generator in Edge Impulse (Enterprise Only)

To start generating synthetic data in Edge Impulse, follow these steps:

- Clone the Block Repository: Head to our GitHub to download the source code for this project.

- Add your own source images: Put your backgrounds and cut-out objects into the `composites` folder. If you want to remove backgrounds automatically from your object images, place them in the `raw_objects` folder.

- Initialize a New Block: Use `$ edge-impulse-blocks init` to initialize a new block with custom settings.

- Push Your Block: After setting up your parameters, push the new block to Edge Impulse using `$ edge-impulse-blocks push`.

- Set Up a Project: In your Enterprise project, go to Data acquisition > Synthetic data, and select the Composite Image Generator.

- Configure Your Parameters: Adjust settings like the number of images, object placement area, and other parameters.

- Generate Data: Click “Generate data” to create synthetic images. You’ll see the generated images displayed in your dataset panel for quick evaluation. These will automatically be added to your project!

This setup allows you to generate a full dataset within minutes, tailored to your exact specifications. For further customization, you can modify and redeploy the Composite Image Generator block with the Edge Impulse CLI. This enables custom transformation blocks, which can be used to add unique features, adjust label structures, or add other visual effects to your images.

Real-World Impact of Synthetic Dataset Generation

Using the Composite Image Generator can significantly accelerate the model training process. Instead of spending days capturing and labeling data, engineers can generate a large, labeled dataset in minutes, test models rapidly, and adjust data generation parameters as needed.

Edge Impulse’s own testing has shown that synthetic data from the Composite Image Generator is effective for training models that achieve high accuracy on object detection tasks. Combining the synthetic data with tools like the EON Tuner allows you to further optimize models for edge deployment, achieving reliable performance even on constrained hardware.

Start Building with the Composite Image Generator Today

The Composite Image Generator is available to all Professional and Enterprise users. Explore the code and customization options on the GitHub repository to see how it fits into your workflow.

Get started now to transform your object detection model development by quickly generating synthetic data for improved accuracy, agility, and performance on the edge. Visit Edge Impulse to explore Enterprise access or start a free trial.