We are excited to introduce a new addition to our AI labeling feature, powered by Google's Gemini models. Gemini is a family of multimodal large language models that can understand and generate human-like text and interpret other data types, including images. This integration complements our existing OWL-ViT zero-shot object detection labeling block: it detects objects within images and automatically adds bounding boxes, making the labeling process faster and more efficient.

Zero-shot object detection explained

Zero-shot object detection lets an AI model identify and label objects in images from textual descriptions alone, without first being trained on a dataset of those specific objects. You describe what to look for and the model locates it, which makes the approach flexible enough for a wide range of applications.

Key features

Easy integration

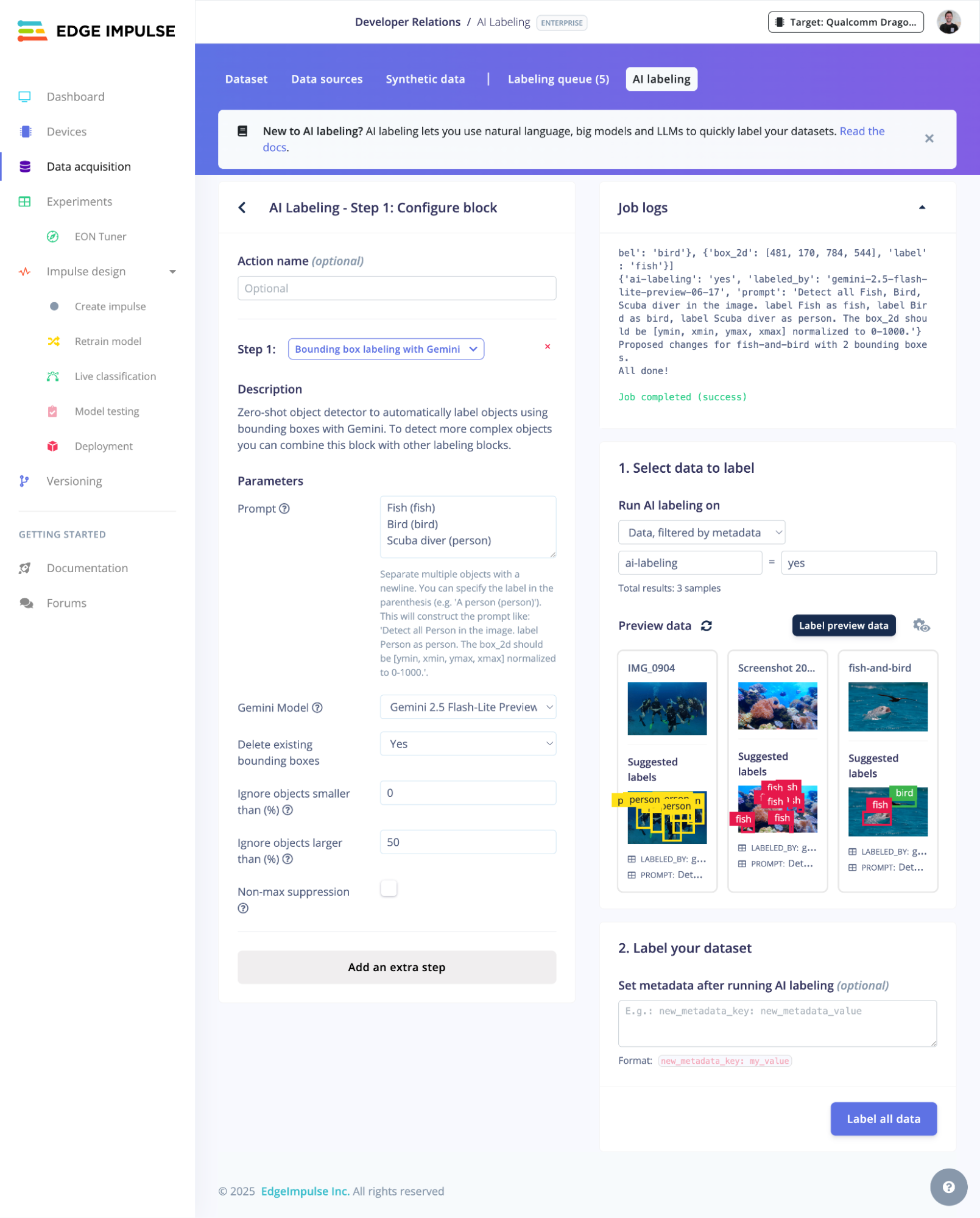

The new AI labeling block integrates smoothly with existing workflows. Whether you are starting a new project or updating an existing one, you can easily add this block to automate the object detection and labeling process alongside the OWL-ViT block.

Advanced filtering options

The AI labeling block includes advanced filtering options:

- Ignore objects smaller than a specified threshold: exclude small objects irrelevant to your analysis

- Ignore objects larger than a specified threshold: focus on objects within a specific size range

- Apply non-maximum suppression (NMS): reduce overlapping bounding boxes to ensure accurate detection and labeling of each object
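To make the three filters concrete, here is a minimal Python sketch of how size filtering and NMS are commonly implemented. It is not the block's actual code. The `Box` type, the function names, the use of image-area fractions for the size thresholds, the per-box `score`, and the IoU threshold of 0.5 are all assumptions chosen for illustration.

```python
from typing import List, NamedTuple


class Box(NamedTuple):
    """Hypothetical bounding box; field names are illustrative only."""
    label: str
    x: float       # left edge, in pixels
    y: float       # top edge, in pixels
    width: float
    height: float
    score: float   # assumed confidence value, used to rank boxes for NMS


def area_fraction(box: Box, image_w: int, image_h: int) -> float:
    """Fraction of the image area covered by the box."""
    return (box.width * box.height) / (image_w * image_h)


def filter_by_size(boxes: List[Box], image_w: int, image_h: int,
                   min_frac: float = 0.0, max_frac: float = 1.0) -> List[Box]:
    """Keep only boxes whose area fraction lies within [min_frac, max_frac]."""
    return [b for b in boxes
            if min_frac <= area_fraction(b, image_w, image_h) <= max_frac]


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two boxes."""
    overlap_w = max(0.0, min(a.x + a.width, b.x + b.width) - max(a.x, b.x))
    overlap_h = max(0.0, min(a.y + a.height, b.y + b.height) - max(a.y, b.y))
    inter = overlap_w * overlap_h
    union = a.width * a.height + b.width * b.height - inter
    return inter / union if union > 0 else 0.0


def nms(boxes: List[Box], iou_threshold: float = 0.5) -> List[Box]:
    """Greedy per-label NMS: keep the highest-scoring box, drop heavy overlaps."""
    kept: List[Box] = []
    for box in sorted(boxes, key=lambda b: b.score, reverse=True):
        if all(box.label != k.label or iou(box, k) <= iou_threshold for k in kept):
            kept.append(box)
    return kept


# Example on a 640x480 image
boxes = [
    Box("fish", 10, 10, 100, 100, 0.9),
    Box("fish", 15, 12, 100, 100, 0.6),   # near-duplicate of the first box
    Box("bird", 300, 50, 4, 4, 0.8),      # too small
    Box("person", 0, 0, 640, 480, 0.7),   # covers the whole image
]
sized = filter_by_size(boxes, 640, 480, min_frac=0.001, max_frac=0.9)
kept = nms(sized)
print([b.label for b in kept])
```

In this example the size filters remove the tiny bird box and the full-frame person box, and NMS then collapses the two overlapping fish boxes into the higher-scoring one.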

Support for multiple models

The AI labeling block supports several Gemini models; you select the one to use when configuring the block.

Comprehensive metadata

The AI labeling block updates metadata for each labeled image, providing details about the detection and labeling process, including the model used, the prompt, and any applied filters. This ensures transparency and traceability in your object detection tasks.

How it works

- Input your prompt: describe the objects you want to detect and label in your images, for example: Fish (fish), Bird (bird), Scuba diver (person).

- Select the model you want to use.

- Configure filters: set filters to ignore objects based on size or apply NMS to reduce overlaps.

- Run the AI labeling block: the block processes your images, identifies objects based on your prompt, and automatically adds bounding boxes according to the specified filters.

- Review and use labeled data: the labeled data, complete with bounding boxes, is ready for review and can be used in subsequent workflows.

The new AI labeling block with Gemini is available to all users. You can find it in your Edge Impulse project's Data Acquisition view, on the AI Labeling tab.

We look forward to seeing how this feature enhances your workflows and helps achieve your object detection and labeling goals more efficiently. Stay tuned for further updates and improvements.

Happy labeling!