There is a time and a place for everything. At a shooting range, where visitors hone their skills through target practice, or in a field during hunting season, it is perfectly normal to hear gunshots ringing out. In other settings, however, the sound of gunfire is a major red flag that almost certainly signals a dangerous situation is unfolding. And if the worst has come to pass, every second counts: the sooner help arrives, the better the chance of minimizing injury and loss of life.

Cell phones are ubiquitous, so emergency services can expect many calls at such a time. But the scene would be chaotic, and in the heat of the moment many callers would have incomplete or inaccurate information. That makes it difficult for first responders to sift through the noise and get a clear picture of what is actually happening in the first minutes of the incident. Rather than relying on these accounts alone, machine learning enthusiast Swapnil Verma had the idea to build a device that can accurately detect the sound of gunfire. With such a tool, first responders would have a high degree of certainty that gunfire had occurred, and by deploying multiple devices in different locations they might even gain insight into where the threat is physically located.

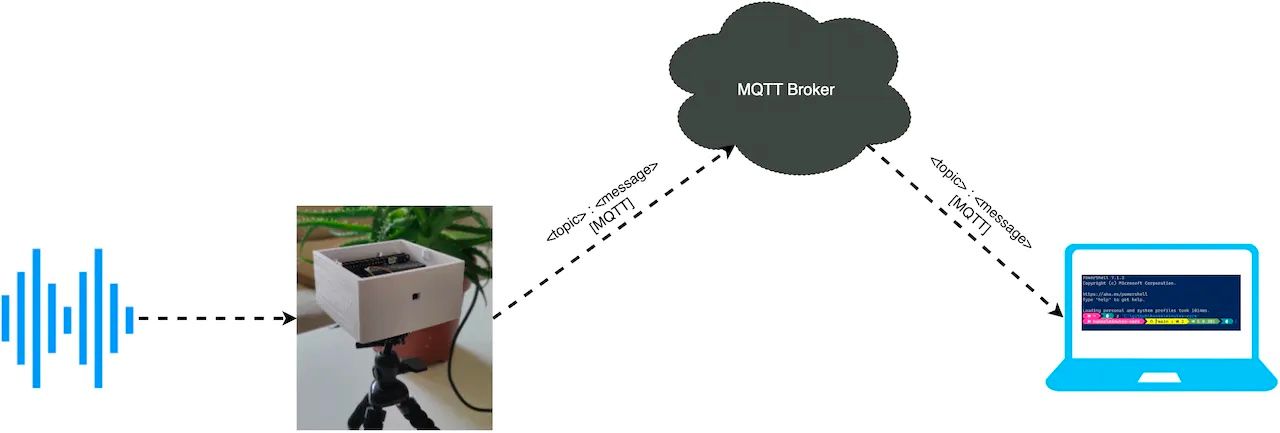

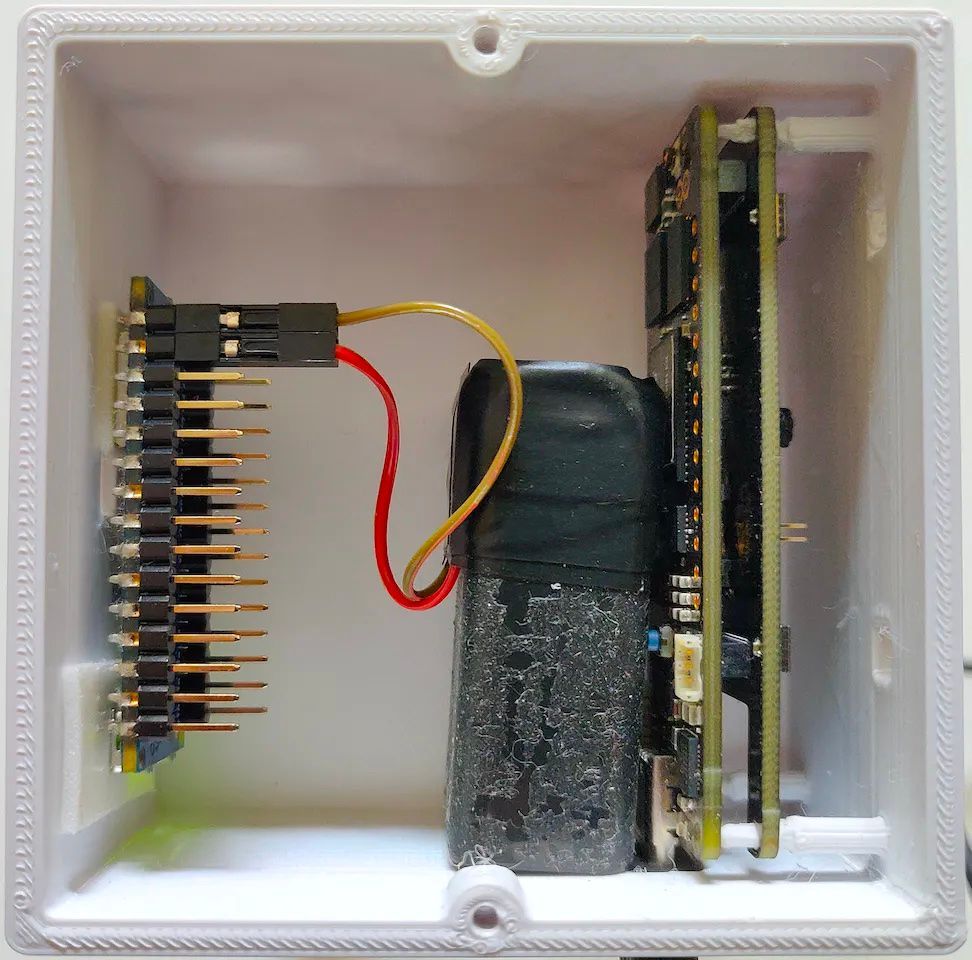

At a high level, Verma’s device would need to capture audio samples, classify them with a machine learning model to determine whether gunfire was present, and then report any positive detections to a remote cloud server. Verma also wanted the device to be inexpensive and compact so that it could be installed in as many locations as possible. Based on past experience, he knew that Arduino development boards would be ideal given these constraints. Being a firm believer that the only thing better than an Arduino is two Arduinos, Verma chose to use both a Nano 33 BLE Sense and a Portenta H7. Each comes equipped with a microphone, so together they capture audio from two sources and make the device more sensitive. Both boards are also well suited to running machine learning inference locally, so each can run its own copy of the detection model in parallel for the fastest possible detection times. Since only the Portenta H7 has a Wi-Fi module for sending results to the cloud, the Nano 33 BLE Sense communicates its findings to the Portenta H7 via Bluetooth, and the Portenta H7 relays all of the information to the cloud server.
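The division of labor between the two boards can be modeled in a few lines of Python. This is not Verma’s firmware, which runs on the Arduinos themselves; it is a minimal sketch of the decision the Portenta H7 makes, assuming each board produces a confidence score for a "gunshot" label and that the Nano’s latest result has already arrived over Bluetooth. The `Detection` class, `fuse_detections` function, and 0.8 threshold are all hypothetical.

```python
import json
from dataclasses import dataclass
from typing import Optional

# Hypothetical confidence threshold; the original project's value is not stated.
GUNSHOT_THRESHOLD = 0.8


@dataclass
class Detection:
    source: str           # which board produced the result, e.g. "portenta" or "nano"
    gunshot_score: float  # classifier confidence for an assumed "gunshot" label
    timestamp: float      # seconds since the epoch when the audio window ended


def fuse_detections(local: Detection, relayed: Optional[Detection]) -> Optional[dict]:
    """Combine the Portenta H7's own result with the latest result relayed
    from the Nano 33 BLE Sense. Return a cloud payload if either board
    crossed the threshold, otherwise None."""
    candidates = [d for d in (local, relayed) if d is not None]
    fired = [d for d in candidates if d.gunshot_score >= GUNSHOT_THRESHOLD]
    if not fired:
        return None
    return {
        "event": "gunshot",
        "sources": sorted(d.source for d in fired),
        "max_score": max(d.gunshot_score for d in fired),
        "first_seen": min(d.timestamp for d in fired),
    }


if __name__ == "__main__":
    payload = fuse_detections(
        Detection("portenta", 0.93, 1700000000.0),
        Detection("nano", 0.88, 1700000000.2),
    )
    print(json.dumps(payload))
```

Treating a detection from either board as sufficient is what makes two microphones more sensitive than one; a stricter design could instead require agreement from both boards to cut down on false alarms.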

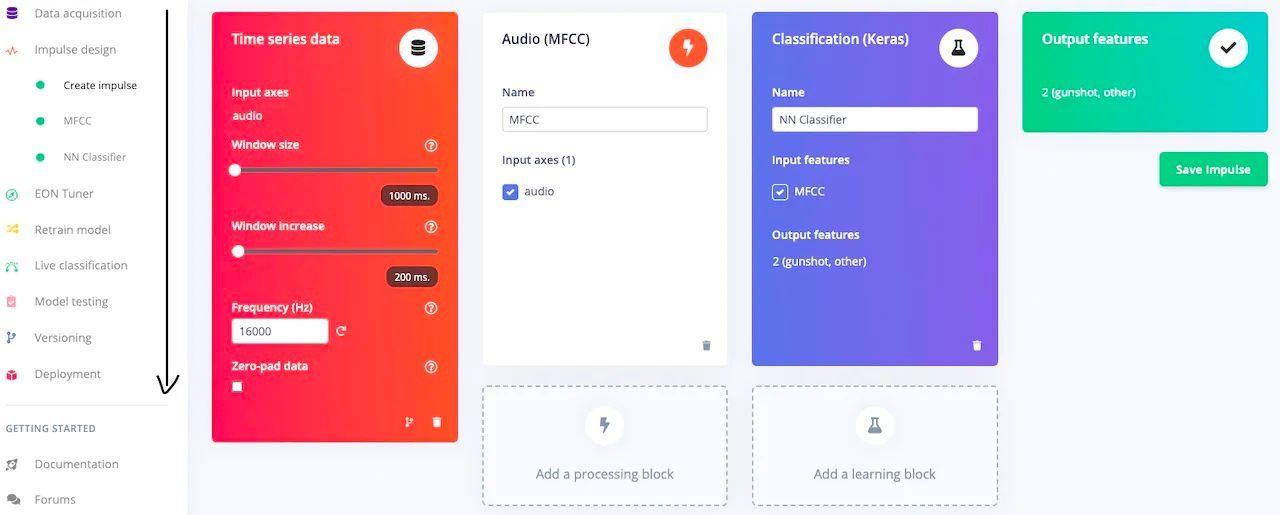

Before Verma could build the machine learning model, he needed example gunfire sounds for training, so he got out his collector’s edition Rambo DVDs and started recording. Well, we cannot exactly confirm that, actually... but we do know that he used Kaggle’s gunshot audio dataset for positive examples and the UrbanSound8K dataset as a source of background noise. Audio files from these datasets were uploaded to Edge Impulse Studio with the data acquisition tool, which automatically assigned labels and split the data into training and testing sets.
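Edge Impulse Studio handled the labeling and splitting automatically. To make the idea concrete, here is a minimal sketch of what such a split does, assuming labels can be read from a filename prefix and an 80/20 train/test ratio. `label_from_filename` and `stratified_split` are hypothetical helpers, not part of Edge Impulse.

```python
import random
from collections import defaultdict


def label_from_filename(name: str) -> str:
    # Hypothetical naming convention: "gunshot.0042.wav" -> "gunshot".
    return name.split(".", 1)[0]


def stratified_split(filenames, test_fraction=0.2, seed=0):
    """Split files into (train, test) lists of (filename, label) pairs,
    holding out the same fraction of every label."""
    by_label = defaultdict(list)
    for name in filenames:
        by_label[label_from_filename(name)].append(name)

    rng = random.Random(seed)  # fixed seed gives a reproducible split
    train, test = [], []
    for label in sorted(by_label):
        group = sorted(by_label[label])
        rng.shuffle(group)
        n_test = round(len(group) * test_fraction)
        test.extend((name, label) for name in group[:n_test])
        train.extend((name, label) for name in group[n_test:])
    return train, test
```

Holding out the same fraction of each label keeps both classes represented in the test set, even when one class has far fewer clips than the other.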

The impulse design began with a Mel-frequency cepstral coefficients (MFCC) preprocessing step, which condenses raw audio into a compact set of features well suited to resource-constrained edge computing devices. This was followed by a convolutional neural network classifier. Using the web interface, Verma added layers to the network and fine-tuned the model’s hyperparameters. With the pipeline complete, he kicked off training for 5,000 iterations with a learning rate of 0.0005.
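The MFCC step can be illustrated with a dependency-free sketch of the general recipe: map frequencies onto the mel scale, sum a frame’s power spectrum through overlapping triangular filters, take the log, and apply a discrete cosine transform. This is not Edge Impulse’s implementation, and it skips the framing, windowing, and FFT that produce each frame’s power spectrum. The function names and default parameters (32 filters, 13 coefficients) are assumptions for illustration.

```python
import math


def hz_to_mel(f_hz: float) -> float:
    # A widely used mel-scale formula.
    return 2595.0 * math.log10(1.0 + f_hz / 700.0)


def mel_to_hz(mel: float) -> float:
    return 700.0 * (10.0 ** (mel / 2595.0) - 1.0)


def mel_band_edges(n_filters: int, f_low: float, f_high: float) -> list:
    """n_filters + 2 frequencies (Hz), evenly spaced on the mel scale.
    Filter i rises from edges[i], peaks at edges[i+1], falls to zero at edges[i+2]."""
    m_low, m_high = hz_to_mel(f_low), hz_to_mel(f_high)
    step = (m_high - m_low) / (n_filters + 1)
    return [mel_to_hz(m_low + i * step) for i in range(n_filters + 2)]


def triangle(f: float, left: float, center: float, right: float) -> float:
    """Weight of frequency f in a triangular filter."""
    if f <= left or f >= right:
        return 0.0
    if f <= center:
        return (f - left) / (center - left)
    return (right - f) / (right - center)


def mfcc_from_power_spectrum(freqs, power, n_filters=32, n_coeffs=13,
                             f_low=0.0, f_high=None):
    """Cepstral coefficients for ONE frame, given its power spectrum
    (bin frequencies in Hz and the power in each bin)."""
    if f_high is None:
        f_high = freqs[-1]
    edges = mel_band_edges(n_filters, f_low, f_high)

    # 1. Mel filterbank energies, then log compression.
    log_energies = []
    for i in range(n_filters):
        left, center, right = edges[i], edges[i + 1], edges[i + 2]
        energy = sum(p * triangle(f, left, center, right) for f, p in zip(freqs, power))
        log_energies.append(math.log(energy + 1e-10))  # floor avoids log(0)

    # 2. Unnormalized DCT-II decorrelates the log energies; keep the first n_coeffs.
    n = len(log_energies)
    return [
        sum(v * math.cos(math.pi * k * (j + 0.5) / n) for j, v in enumerate(log_energies))
        for k in range(n_coeffs)
    ]
```

Computing this for each short frame of audio and stacking the results gives a small time-by-coefficient feature matrix, far more compact than the raw samples, which is the kind of input a convolutional classifier can work with.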

The model reached a very impressive classification accuracy of 94.5% on the training data. A more stringent test of its performance, using a held-out dataset that was not involved in the training process, showed an average classification accuracy of 91.3%. These are very good results for a first run with a limited dataset, and additional training data would likely improve the model’s accuracy further.
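Accuracy here is the fraction of audio samples whose predicted label matches the true one. A short sketch of that arithmetic, plus per-class recall computed from confusion-matrix counts, follows; the function names are hypothetical, and Edge Impulse Studio reports its own metrics. Looking at each class separately is useful for this application because a single overall figure can hide how often real gunshots are missed.

```python
from collections import Counter


def overall_accuracy(y_true, y_pred):
    """Fraction of samples whose predicted label matches the true label."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("need two non-empty label lists of equal length")
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def per_class_recall(y_true, y_pred):
    """For each true label, the fraction of its samples predicted correctly."""
    pairs = Counter(zip(y_true, y_pred))  # confusion-matrix counts
    totals = Counter(y_true)
    return {label: pairs[(label, label)] / n for label, n in totals.items()}
```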

One of the great strengths of the Edge Impulse platform is that the same machine learning pipeline can easily be deployed to multiple devices. Verma demonstrated this by exporting an Arduino library that could be deployed without modification to both of the development boards he was using. That capability is not limited to devices from the same manufacturer, either: the pipeline could just as easily have been deployed to a Raspberry Pi, an NVIDIA Jetson, or any number of other edge computing devices.

Verma built a 3D-printed case and assembled the finished hardware inside it. He then ran a number of live tests in which he played various sounds from his laptop. When the audio included gunfire, the neural network detected it correctly and a message was delivered to a server in the cloud. In a real-world scenario, this alert could be sent to law enforcement and other first responders to help them act quickly to bring the situation under control.
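The alert step can be sketched as follows, assuming a plain HTTPS endpoint that accepts JSON. The message format isn’t described here, so the URL, payload fields, and 30-second cooldown below are hypothetical. The code builds the request without sending it, so it can be inspected offline.

```python
import json
import urllib.request

# Hypothetical endpoint; the original project's server URL is not given.
ALERT_URL = "https://example.com/api/gunshot-alerts"


class AlertGate:
    """Allow at most one alert per cooldown period, so a burst of shots
    classified in consecutive audio windows produces a single message."""

    def __init__(self, cooldown_s: float = 30.0):
        self.cooldown_s = cooldown_s
        self._last_sent = None

    def should_send(self, now: float) -> bool:
        if self._last_sent is None or now - self._last_sent >= self.cooldown_s:
            self._last_sent = now
            return True
        return False


def build_alert_request(device_id: str, score: float, timestamp: float,
                        url: str = ALERT_URL) -> urllib.request.Request:
    """Build (but do not send) a JSON POST describing one detection."""
    body = json.dumps({
        "device": device_id,
        "label": "gunshot",
        "score": score,
        "timestamp": timestamp,
    }).encode("utf-8")
    return urllib.request.Request(
        url, data=body, headers={"Content-Type": "application/json"}, method="POST"
    )
```

On a networked host, the request would be sent with `urllib.request.urlopen(req, timeout=5)`. On the Portenta H7 itself, the same JSON would go out through the board’s Wi-Fi connection instead.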

Verma documented his project from start to finish, so it is worth a read if you are interested in using machine learning to classify audio. If you would rather skip the reading and get right into building your own audio classification device, you can start by cloning the Edge Impulse project.
