Many of our forum users have been asking how to build Edge Impulse projects for Android. Android phones are among the most common mobile devices, which makes them an easy way for anyone to get started with edge AI. Let's review how to build for Android using C++ and the Android Native Development Kit (NDK) with Edge Impulse.

Android is a great choice for mobile development, given its ease of use and the ability to distribute Android packages or APKs for a wide range of platforms, including WearOS, automotive, embedded, television, Unity, and eXtended Reality (XR). This ease of distribution is a key factor in creating an example for Android.

Choosing the native Android route opens the possibility of hardware acceleration. Although enabling it requires additional steps specific to the target hardware, this is the main reason for choosing native Android over a JS / WASM-based cross-platform deployment, which would not support hardware acceleration.

Even without acceleration, I have been impressed in my testing by the performance of our Visual Anomaly (GMM) crack detection test project on a Google Pixel 3A that is more than five years old; the results are very usable. Try it yourself by downloading the GMM test APK, or follow our Running Inference on Android tutorial to build your own project into an APK.

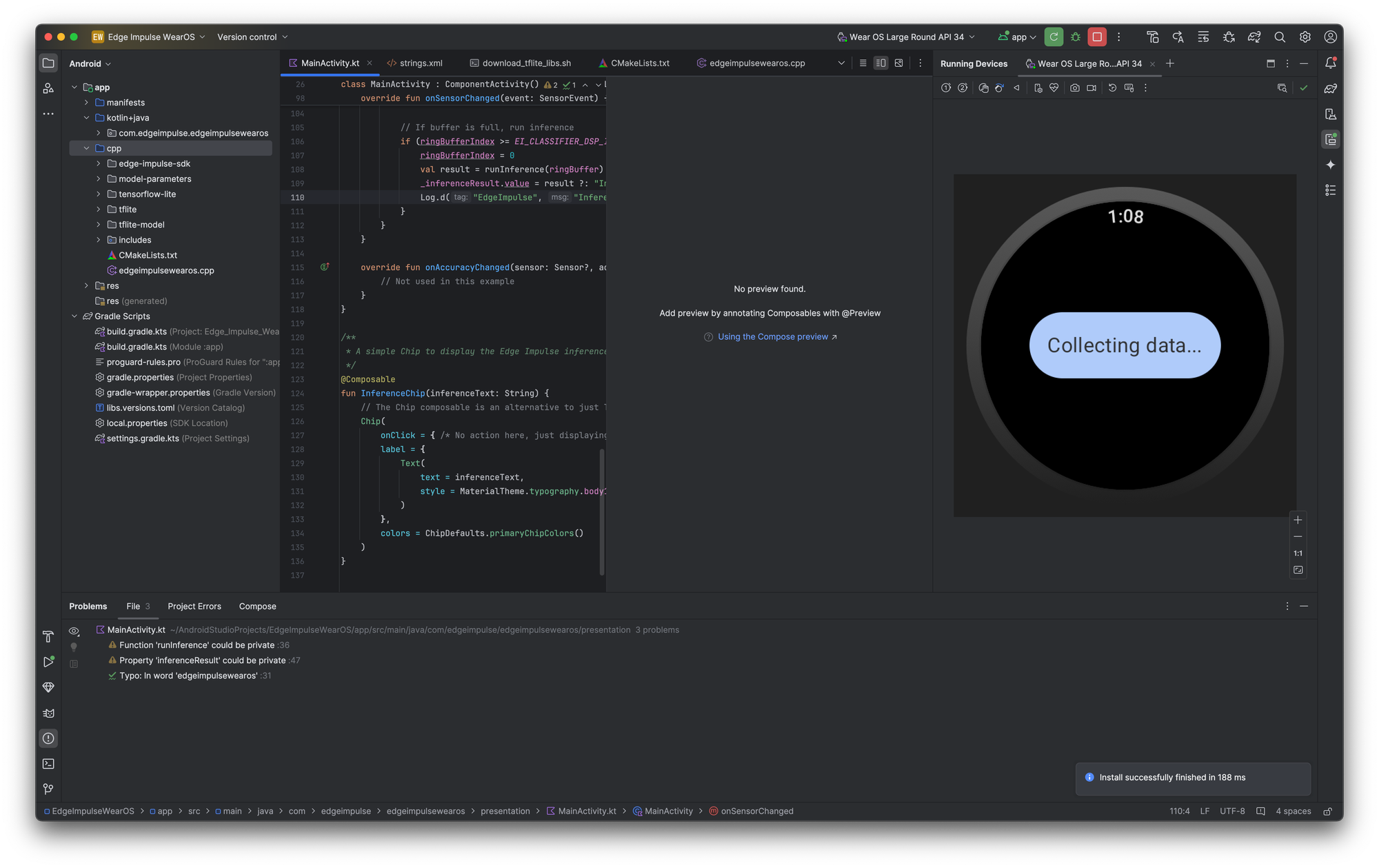

At Edge Impulse, we are committed to enabling developers to create optimized ML models for edge devices. This example uses our existing C++ library deployment option combined with the Android Native Development Kit, which gives Android and WearOS developers access to our C++ library features. The example app is written in Kotlin, which the latest Android Studio documentation recommends over Java. (Please let us know in the comments if you would also like to see this in Java.)

What do I need to get started?

To get started with development, you will need some familiarity with Android Studio or previous experience building Java/Kotlin applications. We have created a repository with a sample Android Studio project for vision-based projects, a WearOS sample for motion-based projects, and a static buffer sample that you can build on for additional time-series sensor projects for mobile and other devices.

- Android Studio

- An Edge Impulse account and project

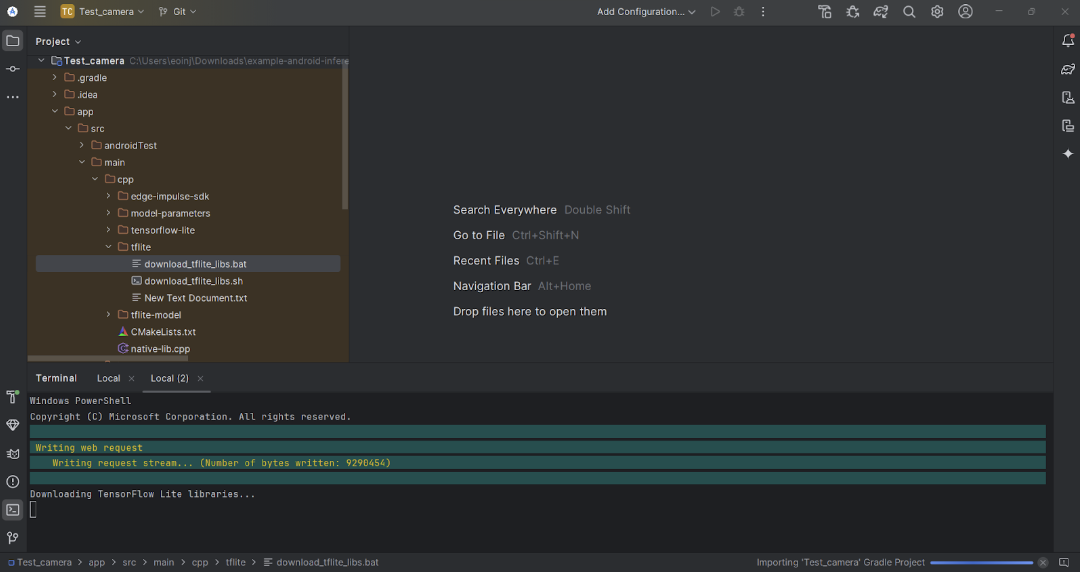

That's it; you just need to build your project for C++, take the distributed files, and paste them into the CPP folder.

Run a script to pull down the latest tflite files, and you can build your project!

Read on in our Running Inference on Android tutorial for the full steps.
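To give a feel for what the static buffer sample does on the C++ side, here is a minimal, self-contained sketch of the pattern: a fixed array of features is exposed through a callback that hands out windows of data on request. The `Signal` struct, the feature values, and the function names below are hypothetical stand-ins written for this illustration, not the SDK's own definitions. As far as we know, the exported C++ library uses a similar callback-based `signal_t` that is passed to `run_classifier`, but check the tutorial and the exported headers for the exact types.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <cstring>
#include <functional>

// Hypothetical stand-in for the SDK's signal type: a total length plus a
// callback that copies a window of samples into a caller-provided buffer.
struct Signal {
    std::size_t total_length;
    std::function<int(std::size_t offset, std::size_t length, float *out)> get_data;
};

// Made-up feature values. In a real project these would be the raw features
// of one sample, sized to match what your impulse expects.
static const float kFeatures[] = {0.1f, 0.2f, 0.3f, 0.4f, 0.5f, 0.6f};
static const std::size_t kFeatureCount = sizeof(kFeatures) / sizeof(kFeatures[0]);

// Copies `length` samples starting at `offset` into `out`.
// Returns 0 on success and -1 if the request is out of range.
int get_feature_data(std::size_t offset, std::size_t length, float *out) {
    if (out == nullptr || offset > kFeatureCount || length > kFeatureCount - offset) {
        return -1;
    }
    std::memcpy(out, kFeatures + offset, length * sizeof(float));
    return 0;
}

// Wraps the static buffer in a Signal.
Signal make_static_signal() {
    return Signal{kFeatureCount, get_feature_data};
}

// Placeholder consumer standing in for the classifier: it pulls the signal
// in small chunks, the way a DSP block might, and sums the values.
bool sum_signal(const Signal &signal, float *sum_out) {
    assert(sum_out != nullptr);
    const std::size_t chunk_size = 4;
    float chunk[chunk_size];
    float sum = 0.0f;
    for (std::size_t offset = 0; offset < signal.total_length; offset += chunk_size) {
        const std::size_t n = std::min(chunk_size, signal.total_length - offset);
        if (signal.get_data(offset, n, chunk) != 0) {
            return false;
        }
        for (std::size_t i = 0; i < n; ++i) {
            sum += chunk[i];
        }
    }
    *sum_out = sum;
    return true;
}
```

The callback indirection means the consumer never needs the whole buffer in one contiguous copy, which is why the same shape works for a static array here and could work for a live sensor or camera frame in the other samples. In the Android project, a function like this would typically be called from a JNI entry point in the CPP folder.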

Coming soon — hardware acceleration

As stated earlier, hardware acceleration can further optimize your impulse's performance on Android devices, but enabling it takes a couple of additional steps. These steps are not included in the example repository, but acceleration can be enabled at a later date. See our Running Inference on Android tutorial for the latest information.

Conclusion

Developing for Android opens up a wealth of possibilities in WearOS, automotive, television, Unity, and eXtended Reality (XR). In our initial testing of FOMO-AD on Android, the results are promising even without hardware acceleration enabled. See for yourself by downloading the GMM test APK or following our Running Inference on Android tutorial to build your own project into an APK.

We are eager to hear about the projects you build, and the hardware you build them for, based on the Android and WearOS examples. Would you like to see some examples in Java, or is Kotlin enough, given that it is the current recommendation from Android Studio?

Follow the link below to join the discussion on this article in our forum.