Smart camera systems designed to monitor and optimize traffic flow and enhance public safety are becoming increasingly common components of modern urban infrastructure. These advanced systems leverage technologies such as artificial intelligence and computer vision to analyze and respond to real-time traffic conditions. By deploying smart cameras at strategic locations throughout a city, municipalities can address a variety of challenges and significantly improve overall urban efficiency.

One of the primary issues smart camera systems address is traffic congestion. These systems can monitor traffic patterns, identify bottlenecks, and dynamically adjust traffic signal timings to optimize the flow of vehicles. By managing traffic signals according to current demand and congestion levels, they can reduce delays, shorten travel times, and minimize fuel consumption, thereby contributing to a more sustainable transportation system.
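To make "adjusting signal timings based on demand" concrete, here is a minimal sketch, assuming the cameras deliver a vehicle count per approach. It is not part of Kumar's project, and real signal controllers use considerably more elaborate methods. The function `split_green_time`, the 7-second minimum green, and the example cycle values are all hypothetical: each approach gets a minimum green time, and the remaining usable time in the cycle is shared in proportion to the queued vehicles.

```python
def split_green_time(cycle_s, lost_time_s, queue_counts, min_green_s=7.0):
    """Share one signal cycle's green time among approaches by queue length.

    cycle_s: total cycle length in seconds.
    lost_time_s: yellow/all-red time that no approach can use.
    queue_counts: vehicles counted by the cameras on each approach.
    """
    n = len(queue_counts)
    if n == 0:
        raise ValueError("need at least one approach")
    usable = cycle_s - lost_time_s
    if usable < n * min_green_s:
        raise ValueError("cycle too short for the minimum green times")
    # Every approach first receives the minimum green time.
    spare = usable - n * min_green_s
    total = sum(queue_counts)
    if total == 0:
        # No demand detected: split the spare time evenly.
        return [min_green_s + spare / n] * n
    # Otherwise hand out the spare time in proportion to each queue.
    return [min_green_s + spare * q / total for q in queue_counts]


greens = split_green_time(90, 10, [12, 4, 8, 0])
print([round(g, 1) for g in greens])  # [33.0, 15.7, 24.3, 7.0]
```

The busiest approach receives the longest green, while an empty approach still keeps its minimum so that it is never starved.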

In addition to alleviating congestion, smart camera systems also play a crucial role in enhancing public safety. They can be equipped with features such as license plate recognition, facial recognition, and object detection to identify and respond to potential security threats or criminal activities. These capabilities enable law enforcement agencies to quickly and proactively address security concerns, providing a force multiplier for urban safety.

Despite their benefits, the widespread adoption of smart traffic camera systems has been hindered by some nagging technical issues. To be effective, these systems must process video streams in real time, which requires powerful edge computing hardware on-site. Moreover, each system generally needs multiple views of the area, which further taxes the onboard processing resources. Because a city needs many of these processing units, some just an intersection apart, problems of scale quickly emerge.
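The scaling problem comes down to simple arithmetic. The sketch below assumes that a device runs inference on one frame at a time and keeps some headroom for decoding and networking. The latency, frame rate, headroom factor, and camera count are hypothetical numbers, not measurements from this project. It estimates how many streams one device can sustain and how many devices a deployment would need.

```python
import math


def streams_per_device(latency_ms, fps_per_stream, headroom=0.8):
    """Estimate how many camera streams one accelerator can keep up with.

    Assumes frames are processed sequentially; `headroom` reserves a share
    of the time budget for everything other than inference.
    """
    frames_per_second = (1000.0 / latency_ms) * headroom
    return math.floor(frames_per_second / fps_per_stream)


def devices_needed(cameras, latency_ms, fps_per_stream, headroom=0.8):
    """Number of devices required to cover `cameras` streams."""
    per_device = streams_per_device(latency_ms, fps_per_stream, headroom)
    if per_device == 0:
        raise ValueError("one device cannot sustain even a single stream")
    return math.ceil(cameras / per_device)


# Hypothetical: 48 cameras at 15 fps, with 5 ms vs. 50 ms per inference.
print(devices_needed(48, 5, 15), devices_needed(48, 50, 15))  # 5 48
```

Under these assumptions, cutting per-frame latency by 10× reduces the hardware count almost tenfold. This is why fast, efficient inference matters so much at city scale.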

The Challenge: Real-Time Traffic Analysis

Familiar with the latest edge computing hardware and current developments at Edge Impulse, engineer Naveen Kumar recently had an idea that could solve this problem. BrainChip’s Akida Development Kit, which includes a powerful AKD1000 neuromorphic processor, can efficiently analyze multiple video streams in real time. Kumar reasoned that pairing this hardware with Edge Impulse’s ground-breaking FOMO object detection algorithm, which allows complex computer vision applications to run on resource-constrained hardware platforms, could produce a scalable smart traffic camera system. The low cost, energy efficiency, and computational horsepower of this combination should result in a device that could be practically deployed throughout a city.

Implementation

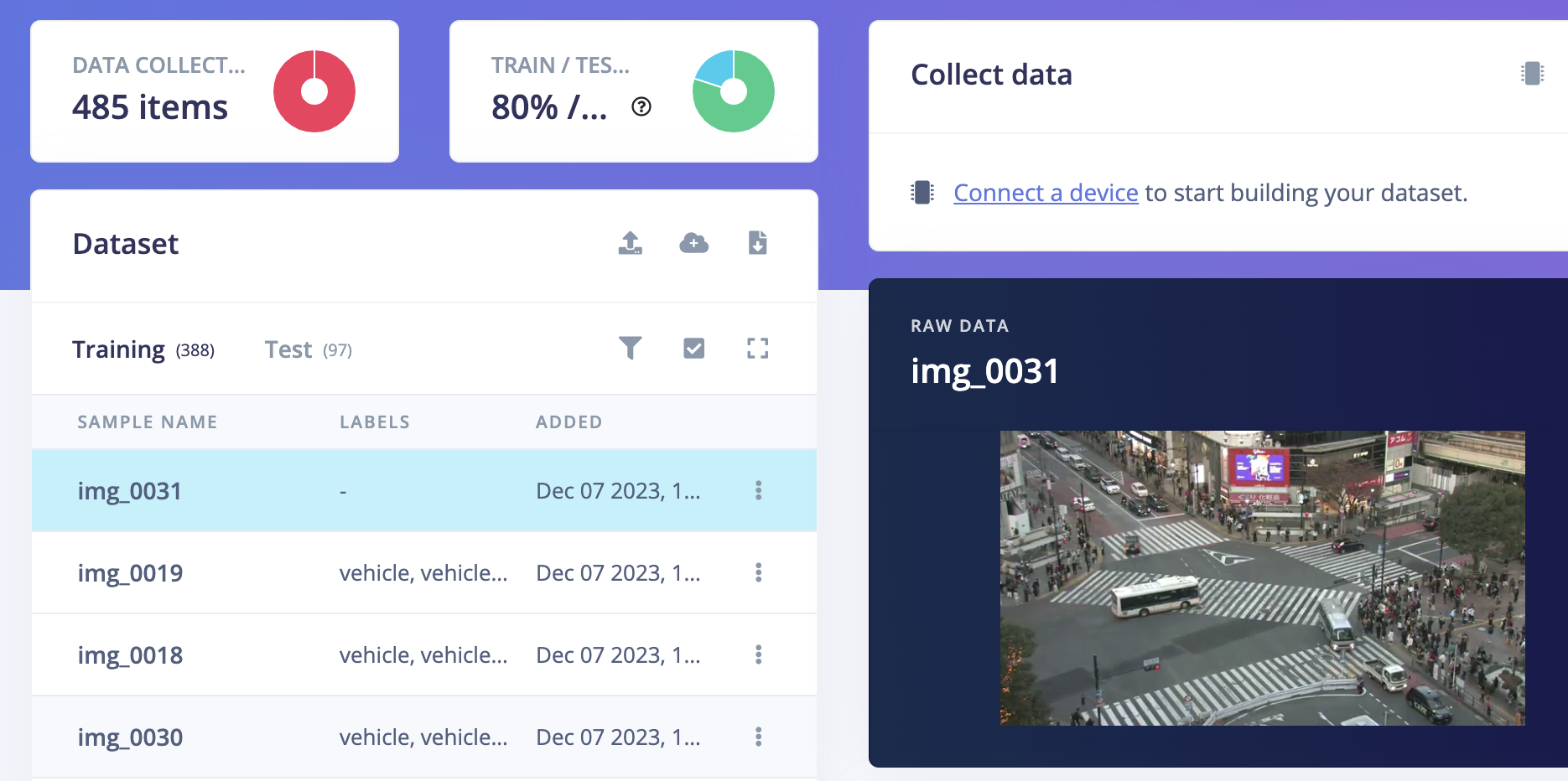

As a first step, Kumar focused on a critical component of any traffic camera setup: the ability to locate vehicles in real time. After setting up the hardware for the project, he built the object detection pipeline with Edge Impulse. To train the model, Kumar sought out footage captured by cameras at Shibuya Scramble Crossing, a busy intersection in Tokyo, Japan. Still frames were extracted from videos posted on YouTube and then uploaded to an Edge Impulse project using the CLI uploader tool.
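The article does not say how the frames were pulled from the videos. As a hypothetical illustration, the sketch below selects frame indices at a fixed time interval so that consecutive training images are not near-duplicates. It also builds timestamped filenames. The function names, the 2-second interval, and the naming scheme are assumptions. Decoding and saving the frames themselves (for example with ffmpeg or OpenCV) is not shown.

```python
def frame_indices_to_extract(total_frames, video_fps, every_s):
    """Return frame indices spaced roughly `every_s` seconds apart."""
    if video_fps <= 0 or every_s <= 0:
        raise ValueError("fps and interval must be positive")
    # Convert the time interval into a whole number of frames (at least 1).
    step = max(1, round(video_fps * every_s))
    return list(range(0, total_frames, step))


def frame_filename(prefix, index, video_fps):
    """Name a frame by its timestamp, e.g. 'shibuya_00002.00s.jpg'."""
    seconds = index / video_fps
    return f"{prefix}_{seconds:08.2f}s.jpg"


# A 10-second clip at 30 fps, sampled every 2 seconds.
for i in frame_indices_to_extract(300, 30, 2):
    print(frame_filename("shibuya", i, 30))
```

The resulting image files could then be sent to the project with the Edge Impulse CLI's `edge-impulse-uploader` command. Sparse sampling also lowers the risk that almost identical frames end up in both the training and test sets.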

From there, Kumar moved on to the Labeling Queue tool to draw bounding boxes around the objects of interest, in this case vehicles. The object detection algorithm needs this additional information before it can learn to recognize specific objects. Labeling can be very tedious for large training datasets, but the Labeling Queue offers AI assistance that helps position the bounding boxes. Typically, one only needs to review the suggestions and make an occasional tweak.
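According to Edge Impulse's FOMO documentation, FOMO predicts object centroids on a coarse grid rather than full bounding boxes. By default that grid is eight times coarser than the input image. The sketch below shows how box labels could be reduced to such a centroid target for a single "vehicle" class. The function name, the `(x, y, width, height)` box format, and the 96×96 input with a 12×12 grid are illustrative assumptions, not Edge Impulse's internal code.

```python
def boxes_to_grid(boxes, image_size, grid_size):
    """Mark the grid cell containing each box centre (FOMO-style target).

    boxes: list of (x, y, width, height) in pixels, top-left origin.
    image_size: (width, height) of the input image in pixels.
    grid_size: (columns, rows) of the output grid.
    """
    width, height = image_size
    cols, rows = grid_size
    grid = [[0] * cols for _ in range(rows)]
    for x, y, w, h in boxes:
        # Only the centre of the box matters for a centroid detector.
        cx = x + w / 2
        cy = y + h / 2
        col = min(int(cx * cols / width), cols - 1)
        row = min(int(cy * rows / height), rows - 1)
        grid[row][col] = 1
    return grid


grid = boxes_to_grid([(10, 20, 16, 8), (80, 88, 16, 8)], (96, 96), (12, 12))
print([(r, c) for r in range(12) for c in range(12) if grid[r][c]])
```

Discarding box extents is what makes FOMO cheap enough for constrained hardware. The trade-off is that the model reports where each vehicle is, not how large it is.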

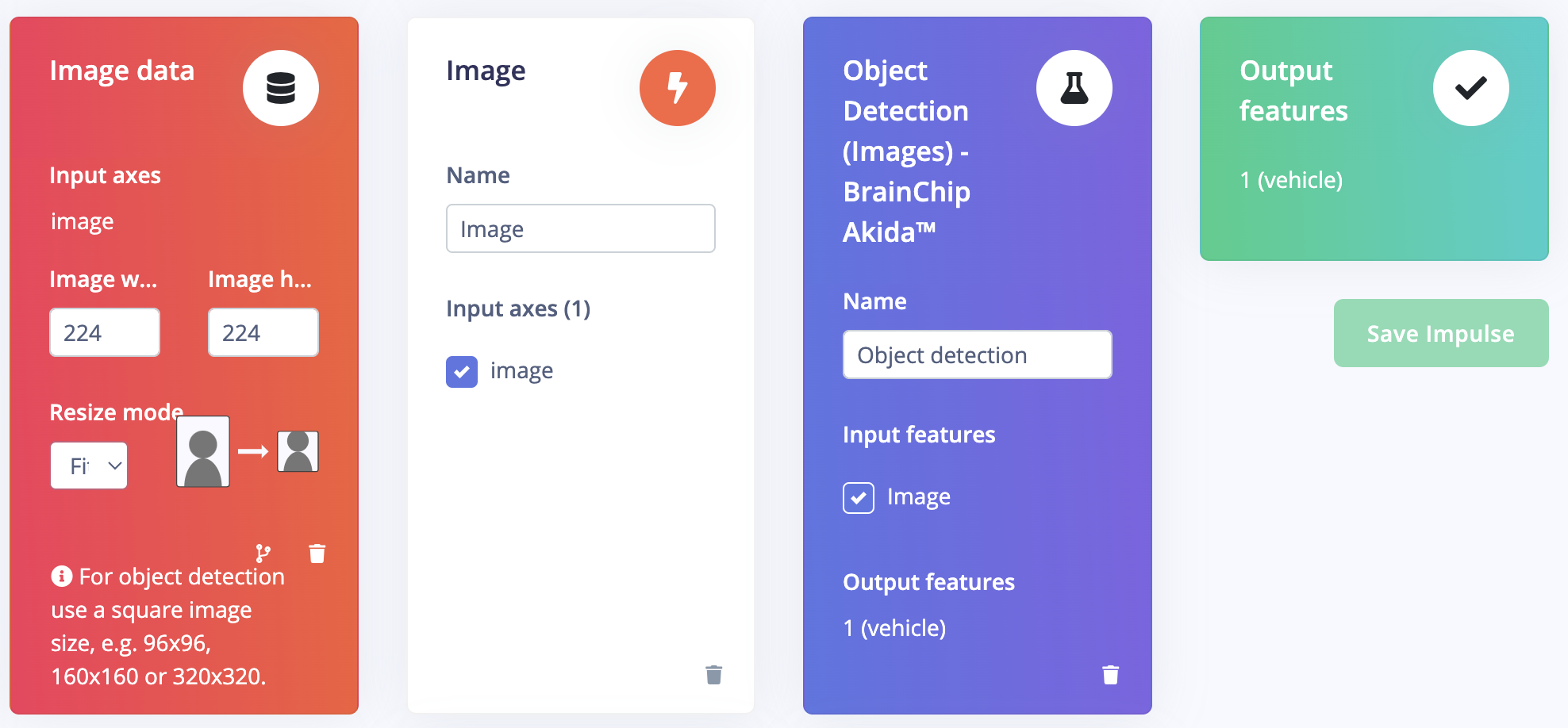

With the training data squared away, the next step was designing the impulse. The impulse defines exactly how data is processed, from the moment the sensor produces it until the machine learning model makes a prediction. In this case, images were first resized, which reduces the computational complexity of downstream processing steps. The resized data was then forwarded to a FOMO object detection model optimized for BrainChip’s Akida neuromorphic processor. Kumar made a few small adjustments to the model’s hyperparameters to optimize it for use with multiple video streams, then started training with the click of a button.
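The resize step is easy to picture with some arithmetic. Shrinking a 1920×1080 frame to a 96×96 model input cuts the pixel count from 2,073,600 to 9,216, a factor of 225. Both sizes are hypothetical, since the article does not state them. The sketch below center-crops a frame to the target aspect ratio and then applies a nearest-neighbour resize. It uses plain nested lists for a single-channel image. Edge Impulse offers several resize modes, and this is only one plausible choice. The function names are illustrative.

```python
def center_crop(img, out_aspect):
    """Crop a row-major image (list of rows) to a width/height ratio."""
    h, w = len(img), len(img[0])
    if w / h > out_aspect:
        # Too wide: trim equal amounts from the left and right.
        new_w = round(h * out_aspect)
        x0 = (w - new_w) // 2
        return [row[x0:x0 + new_w] for row in img]
    # Too tall (or already correct): trim from the top and bottom.
    new_h = round(w / out_aspect)
    y0 = (h - new_h) // 2
    return img[y0:y0 + new_h]


def resize_nearest(img, out_w, out_h):
    """Nearest-neighbour resize: each output pixel copies one input pixel."""
    h, w = len(img), len(img[0])
    return [[img[r * h // out_h][c * w // out_w] for c in range(out_w)]
            for r in range(out_h)]


def preprocess(img, out_w=96, out_h=96):
    """Crop to the model's aspect ratio, then shrink to its input size."""
    return resize_nearest(center_crop(img, out_w / out_h), out_w, out_h)


# A small 160x90 test frame whose pixel value equals its column index.
frame = [[c for c in range(160)] for _ in range(90)]
small = preprocess(frame)
print(len(small), len(small[0]), small[0][0], small[0][-1])
```

Cropping before resizing keeps vehicles from being stretched horizontally. A distorted input would otherwise look different from the shapes the model saw during training.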

After a short time, training was complete, and a set of metrics was presented to help assess how well the model was performing. Right off the bat, an average accuracy of 92.6% was observed. This is certainly good enough to prove the concept, but it is also important to ensure that the model has not been overfit to the training data. For this reason, Kumar also used the Model Testing tool, which evaluates the model on a dataset that was held out of the training process. This tool reported an average accuracy of 94.85%. Because the model performed just as well on data it had never seen, this result reinforced the confidence given by the training results and suggested that the model generalizes rather than memorizing its training set.
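The article does not say exactly how the accuracy figures are computed. One common way to score a centroid detector such as FOMO is to match each predicted centroid to an unmatched ground-truth centroid within some distance. The matches are then turned into an F1 score. The sketch below is an illustrative assumption along those lines and is not necessarily the metric behind the 92.6% and 94.85% figures. The greedy matching, the 8-pixel radius, and the function names are hypothetical. Computing the same score on the training or validation split and on the held-out test split, then comparing the two, is the overfitting check described above.

```python
import math


def match_centroids(preds, truths, max_dist):
    """Greedily pair each predicted centroid with the nearest unused truth.

    Returns (true positives, false positives, false negatives).
    """
    unused = list(truths)
    tp = 0
    for px, py in preds:
        best, best_d = None, max_dist
        for i, (tx, ty) in enumerate(unused):
            d = math.hypot(px - tx, py - ty)
            if d <= best_d:
                best, best_d = i, d
        if best is not None:
            unused.pop(best)  # each truth can only be matched once
            tp += 1
    return tp, len(preds) - tp, len(truths) - tp


def f1_score(samples, max_dist=8.0):
    """F1 over a list of (predicted centroids, true centroids) per image."""
    tp = fp = fn = 0
    for preds, truths in samples:
        a, b, c = match_centroids(preds, truths, max_dist)
        tp, fp, fn = tp + a, fp + b, fn + c
    if tp == 0:
        return 0.0
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


# One image: one correct detection, one false alarm, one missed vehicle.
print(f1_score([([(10, 10), (50, 50)], [(12, 11), (80, 80)])]))
```

A large gap between the two splits, with the training score much higher, would point to overfitting. Similar scores, as in Kumar's results, point the other way.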

BrainChip’s Akida Development Kit is fully supported by Edge Impulse, which made deployment a snap. Selecting the “BrainChip MetaTF Model” option in the Deployment tool automatically produced a compressed archive that was ready to run on the hardware. The deployed model is built to use the Akida PCIe card, which allows it to make the most of the board’s specialized hardware.

To wrap things up, Kumar used the Edge Impulse Linux C++ SDK to run the deployed model. This made it simple to start a web-based application that marks the locations of vehicles in real-time video streams from traffic cameras. As the performance metrics suggested, the system accurately detected vehicles in the video streams. Each prediction also took just a few milliseconds, and the hardware sipped very little power while making it.
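The article does not show the post-processing inside the C++ application. As an illustration only, the Python sketch below shows one way a FOMO-style output could become the vehicle positions that a web app draws. The output is treated as a grid of per-cell "vehicle" probabilities. Cells above a threshold are grouped into 4-connected regions, and each region's centre is scaled back to input pixels. The function name, the 0.5 threshold, the connectivity rule, and the 8-pixel cell size are assumptions, not the Edge Impulse SDK's implementation.

```python
def heatmap_to_centroids(heatmap, threshold=0.5, cell_px=8):
    """Group adjacent above-threshold cells; return one (x, y) per group."""
    rows, cols = len(heatmap), len(heatmap[0])
    seen = [[False] * cols for _ in range(rows)]
    centroids = []
    for r in range(rows):
        for c in range(cols):
            if seen[r][c] or heatmap[r][c] < threshold:
                continue
            # Flood-fill this connected region of "vehicle" cells.
            stack, cells = [(r, c)], []
            seen[r][c] = True
            while stack:
                cr, cc = stack.pop()
                cells.append((cr, cc))
                for nr, nc in ((cr + 1, cc), (cr - 1, cc),
                               (cr, cc + 1), (cr, cc - 1)):
                    if (0 <= nr < rows and 0 <= nc < cols
                            and not seen[nr][nc]
                            and heatmap[nr][nc] >= threshold):
                        seen[nr][nc] = True
                        stack.append((nr, nc))
            # Average the cell centres, then scale grid units to pixels.
            cy = sum(cr + 0.5 for cr, _ in cells) / len(cells) * cell_px
            cx = sum(cc + 0.5 for _, cc in cells) / len(cells) * cell_px
            centroids.append((cx, cy))
    return centroids


# Two neighbouring hot cells form one vehicle; a lone cell forms another.
heat = [[0.9, 0.8, 0.1, 0.0],
        [0.1, 0.2, 0.0, 0.0],
        [0.0, 0.0, 0.3, 0.0],
        [0.0, 0.0, 0.0, 0.7]]
print(heatmap_to_centroids(heat))  # [(8.0, 4.0), (28.0, 28.0)]
```

Merging neighbouring cells keeps a single large vehicle that spans several grid cells from being counted more than once.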

Are you interested in building your own multi-camera computer vision application? If so, Kumar has a great write-up that is well worth reading, and its insights will help you get your own idea off the ground quickly. Kumar has also experimented with single-camera setups, if that is closer to what you have in mind.

Jump into our free Enterprise Trial to get started building your own professional-grade solutions today.