In the world of manufacturing, product quality inspections play a crucial role in ensuring that the products meet the required standards and specifications. These inspections are conducted at various stages of the manufacturing process to identify any defects, deviations, or inconsistencies that could affect the overall quality and performance of the final product. They are carried out to minimize the risk of faulty products reaching the market, protect the brand reputation, and ultimately enhance customer satisfaction.

The importance of product quality inspections cannot be overstated. By conducting inspections, manufacturers can identify and rectify issues early on, reducing the likelihood of costly rework or product recalls. Inspections also help maintain consistent quality across batches, ensuring that every product leaving the factory meets the desired quality standards. Moreover, inspections provide valuable feedback to manufacturers, enabling them to identify areas for improvement and optimize their production processes.

Statistics highlight the significance of product quality inspections in manufacturing operations. According to a study by the American Society for Quality, a comprehensive quality management program can result in cost savings ranging from 15% to 20% of total sales revenue. This figure underscores the financial implications of inadequate quality control and the importance of robust inspection processes.

Implementing a product quality inspection process can be fraught with difficulties, however. One of the main challenges is establishing a standardized and comprehensive inspection procedure that covers all aspects of the product’s quality. This requires careful consideration of various factors, such as the specific requirements of the industry, the complexity of the product, and the desired level of quality. Another obstacle is integrating the inspection process seamlessly into the existing manufacturing workflow without disrupting production. This involves coordinating with multiple teams, such as production, engineering, and quality assurance, and ensuring clear communication and understanding among them.

But what if there were a drop-in solution that would not interfere with existing processes and could teach itself what good and bad products look like, without requiring complex definitions to be specified manually? As it turns out, there is, and it is inexpensive and simple to set up. In a recent project, engineer Solomon Githu describes exactly how he built an inspection device, using computer vision and machine learning, that can watch products as they roll by on an assembly line and determine in a few milliseconds whether or not they are defective.

Githu’s idea involved using an object detection algorithm to rapidly inspect a product and determine whether or not it looked correct. The model would learn to make this decision itself by first being shown a dataset consisting of both good quality products and those with a defect of some sort. Since designing, training, validating, and deploying this type of machine learning model can be challenging and can require a lot of technical expertise, Githu chose to build it with Edge Impulse Studio’s web-based interface, which replaces the complexity with a simple, point-and-click experience.

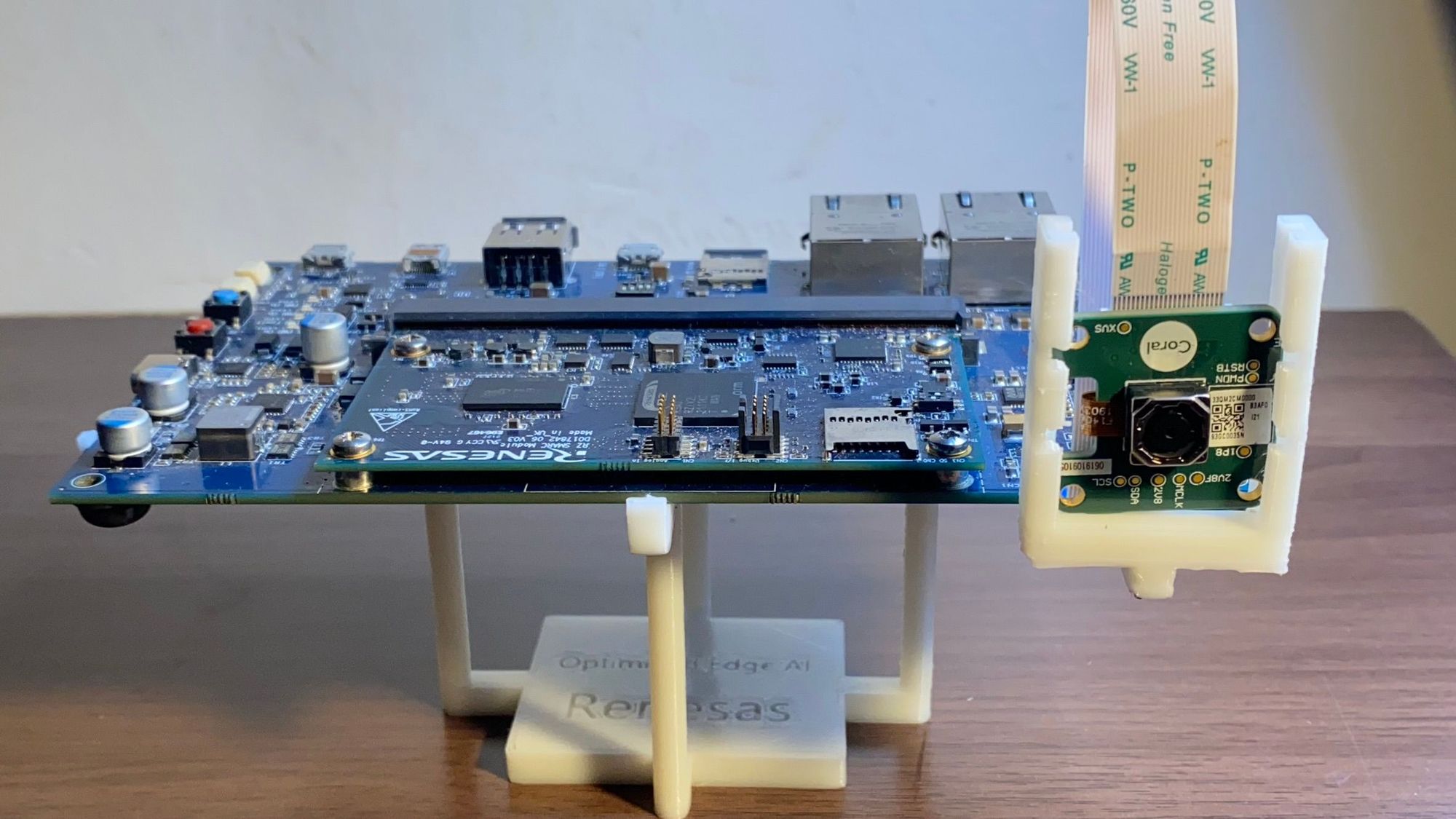

To keep up with a fast-moving assembly line, powerful hardware was needed. The Renesas RZ/V2L Evaluation Board, which was purpose-built for computer vision and machine learning applications, was selected for this reason. With a dual-core Arm Cortex-A55 processor, an Arm Cortex-M33 processor, 2 GB of DDR4 SDRAM, an AI accelerator, and plenty of interfaces for connecting external devices, the RZ/V2L can run an object detection model optimized by Edge Impulse for resource-constrained devices at a high frame rate. A Google Coral camera was also included in the build to give the device sight.

While the techniques outlined in this project apply to a wide range of products, Githu chose to focus on inspecting submersible pump impellers because of the availability of a preexisting public dataset. About 1,300 images were extracted from this dataset, covering both good impellers and those with all manner of defects. They were then uploaded to a project in Edge Impulse Studio using the data acquisition tool.

If you have worked with object detection algorithms at all in the past, then you know that these images need to be annotated by drawing a bounding box around each object and labeling it as either good or defective — for all 1,300 images. Fortunately, the labeling queue has a number of AI-assisted options to take the pain out of this process. Githu chose an option that tracks an object between images and automatically draws the bounding box and sets the labels in each.
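The write-up does not describe how the labeling queue tracks an object between images, so the following is only a sketch of one common approach: match each new box to the previous one by intersection over union (IoU) and carry the label forward. The `Box` layout, the `carry_label` helper, and the 0.5 threshold are all hypothetical and are not taken from Edge Impulse Studio.

```python
from typing import List, NamedTuple, Optional


class Box(NamedTuple):
    """Axis-aligned bounding box in pixels (hypothetical layout)."""
    x: float       # left edge
    y: float       # top edge
    width: float
    height: float
    label: str     # "good", "defective", or "" when not yet labeled


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two boxes; 0.0 when they do not overlap."""
    left = max(a.x, b.x)
    top = max(a.y, b.y)
    right = min(a.x + a.width, b.x + b.width)
    bottom = min(a.y + a.height, b.y + b.height)
    inter = max(0.0, right - left) * max(0.0, bottom - top)
    union = a.width * a.height + b.width * b.height - inter
    return inter / union if union > 0 else 0.0


def carry_label(previous: Box, candidates: List[Box],
                threshold: float = 0.5) -> Optional[Box]:
    """Return the candidate that best overlaps `previous`, relabeled with
    the previous label, or None if nothing overlaps enough."""
    best = max(candidates, key=lambda c: iou(previous, c), default=None)
    if best is None or iou(previous, best) < threshold:
        return None
    return best._replace(label=previous.label)
```

A box that barely moves between two images keeps its label, while a box elsewhere in the frame is ignored and left for manual labeling.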

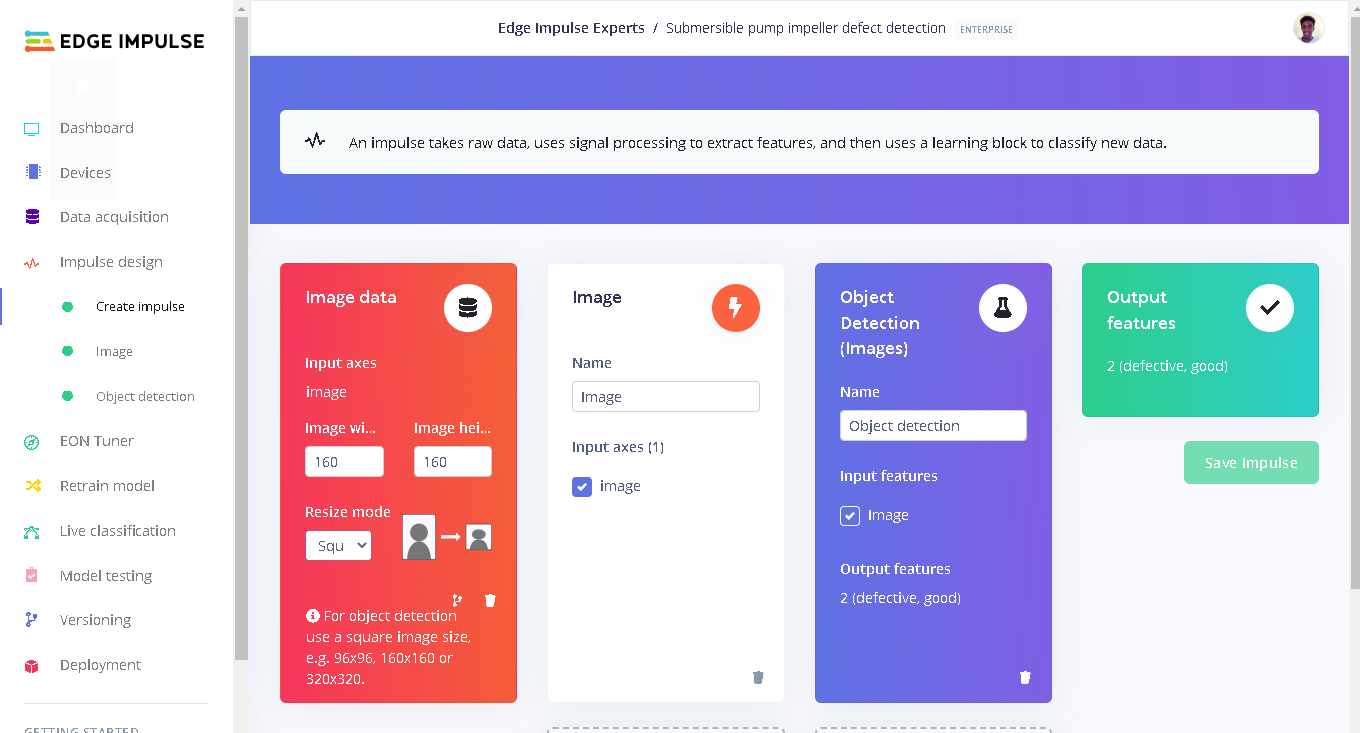

With the dataset preparation out of the way, the stage was set for building the project’s impulse. This impulse defines every step of the data processing, all the way from reading in the raw sensor measurements to producing a prediction from the machine learning algorithm. In this case, the impulse began with a step that reduced the size of the raw images, which is very important for lowering downstream resource requirements, and then extracted the most informative features from them. These features were then passed into a YOLOv5 object detection model (which allows the RZ/V2L’s AI accelerator to be used) configured to recognize “defective” or “good” impellers.
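To make the resizing step concrete, here is a minimal sketch of downscaling an image with nearest-neighbour sampling. It is an illustration only: the article does not say which resize method or target size the impulse uses, and the `resize_nearest` helper and the tiny 4x4 example are hypothetical.

```python
def resize_nearest(image, new_width, new_height):
    """Downscale a 2D grid of pixel values with nearest-neighbour sampling.

    `image` is a list of rows, each row a list of pixel values.
    """
    old_height = len(image)
    old_width = len(image[0])
    return [
        [
            # Map each output pixel back to the source pixel it covers.
            image[(row * old_height) // new_height][(col * old_width) // new_width]
            for col in range(new_width)
        ]
        for row in range(new_height)
    ]


# Hypothetical 4x4 "image" whose pixel value encodes its position.
original = [[row * 4 + col for col in range(4)] for row in range(4)]
small = resize_nearest(original, 2, 2)
```

Halving each dimension leaves a quarter of the pixels, so every later step has a quarter of the data to process.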

While building the model, Githu used the Feature Explorer tool and noticed that the features were not always clearly separated between the two classes. He attributed this to the fact that good and defective products generally share many of the same characteristics, with only a portion of a damaged product differing from a good one. This meant that the model would have a harder time finding parameters that accurately separate the classes.

Because of this, a few different sets of hyperparameters were tested to find those that resulted in the best performing model. Githu settled on 100 training cycles and a learning rate of 0.001. This yielded a precision score of better than 96% on the training dataset. It is always wise to confirm training scores using a second dataset that was not included in the training process, which can be done with the model testing tool. After running this tool, a precision score of 98% was observed. A result like this is more than good enough to prove the concept, so Githu moved on to deployment of the model.
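For readers less familiar with these terms, the sketch below shows how a precision score is computed and how a held-out test set can be split off before training. The 80/20 split, the seed, and the detection counts in the test are illustrative assumptions, not figures from Githu's project.

```python
import random


def precision(true_positives, false_positives):
    """Fraction of predicted detections that were actually correct."""
    predicted = true_positives + false_positives
    return true_positives / predicted if predicted else 0.0


def holdout_split(items, test_fraction=0.2, seed=0):
    """Shuffle items and set aside a test portion the model never trains on."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)  # seeded so the split is repeatable
    test_size = round(len(shuffled) * test_fraction)
    cut = len(shuffled) - test_size
    return shuffled[:cut], shuffled[cut:]


# Hypothetical image IDs standing in for the roughly 1,300 dataset images.
train_ids, test_ids = holdout_split(range(1300))
```

Scoring the model on `test_ids` only gives an estimate of how it will behave on impellers it has never seen, which is what matters on a real assembly line.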

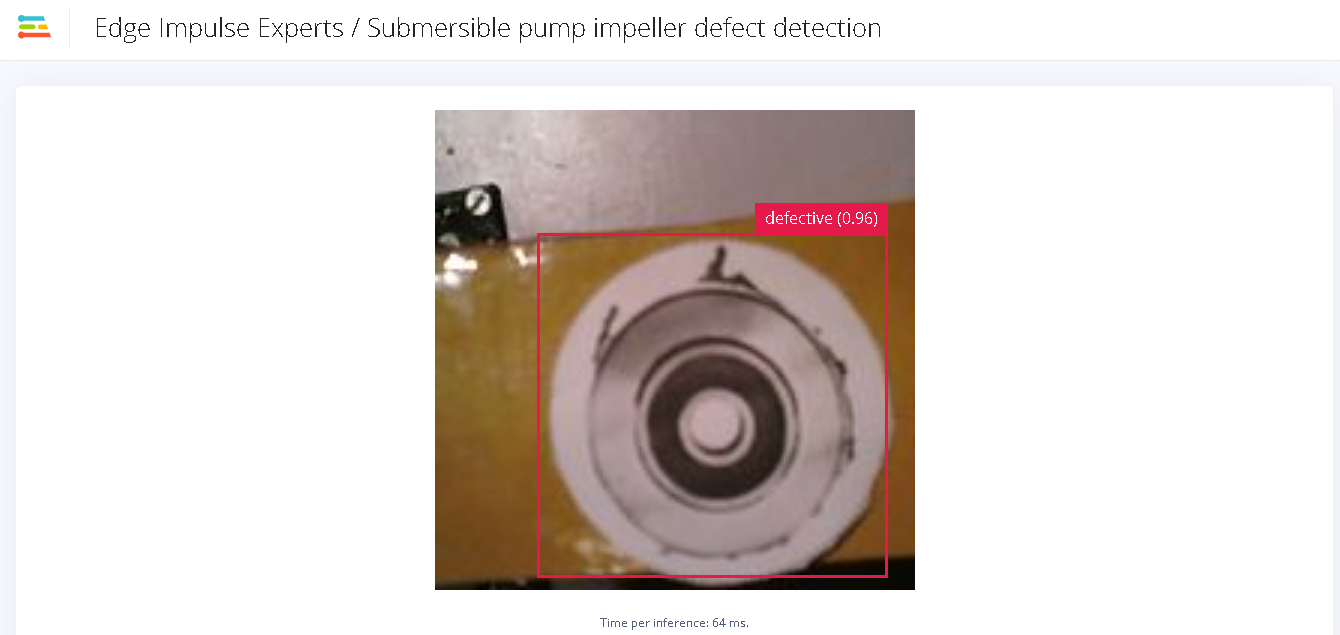

After setting up the RZ/V2L Evaluation Board and installing Edge Impulse for Linux, it only takes a few commands to link the board to an Edge Impulse Studio project and download the trained model file. Edge Impulse also provides an application that allows the user to quickly test the model. By loading a page in a web browser, one can see a video stream from the device’s camera along with boxes defining the detected objects and their predicted labels.

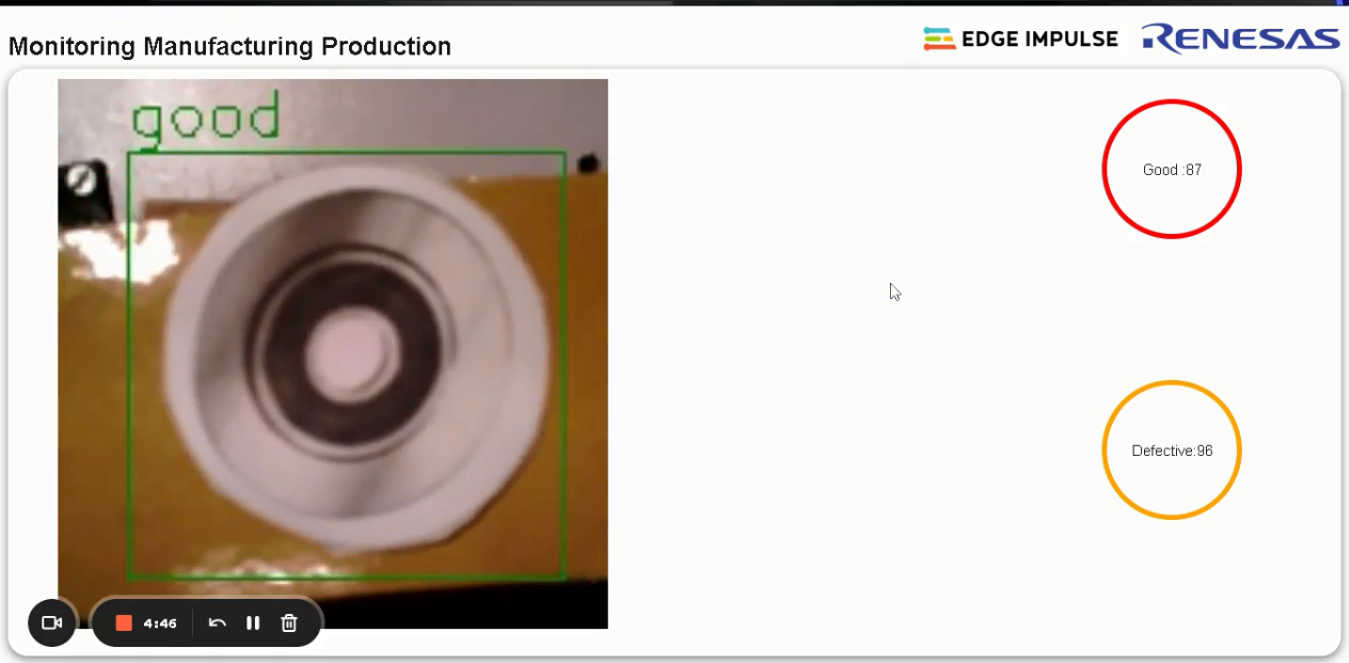

To complete the project, Githu built his own miniature conveyor belt and printed a number of impeller images from the dataset to travel down it. The camera from the inspection device was positioned above the conveyor belt. He then built a custom web application that shows the products as they roll by, and maintains a count of the good and bad impellers it has seen. Testing with this setup proved the system to be highly accurate, which was unsurprising given the excellent performance seen during model training and testing.
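The counting logic of such a web application can be sketched in a few lines. This is not Githu's code: the `tally` helper, the detection dictionaries with `label` and `value` (confidence) fields, and the 0.5 confidence cutoff are assumptions made for illustration.

```python
from collections import Counter


def tally(detections, min_confidence=0.5):
    """Count good and defective detections, ignoring low-confidence ones."""
    counts = Counter({"good": 0, "defective": 0})
    for detection in detections:
        if detection["value"] >= min_confidence:
            counts[detection["label"]] += 1
    return counts


# Hypothetical detections, one per product that passed under the camera.
seen = [
    {"label": "good", "value": 0.97},
    {"label": "defective", "value": 0.91},
    {"label": "good", "value": 0.88},
    {"label": "defective", "value": 0.32},  # below the cutoff, not counted
]
counts = tally(seen)
```

This sketch assumes one detection per product. On a moving belt the same impeller appears in several consecutive frames, so a real counter would also need to avoid counting it more than once.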

Submersible pump impellers are not everyone’s cup of tea, but by cloning the public Edge Impulse Studio project and uploading your own data, you can adapt this method to assess the quality of just about whatever you want. Plenty more details are available in the project write-up, and Githu even made the source code for his web application available for those who are interested.
