Edge Impulse is growing in popularity in both industry and academia as the go-to online tool for creating edge and embedded machine learning (ML) applications. White papers and press releases are great for announcing features and use cases, but we wanted to formally describe how our suite of tools helps solve common problems in embedded ML. We partnered with Colby Banbury and Prof. Vijay Janapa Reddi of Harvard’s School of Engineering and Applied Sciences to write an academic paper showing how Edge Impulse addresses these problems.

You can read the full paper on arXiv here.

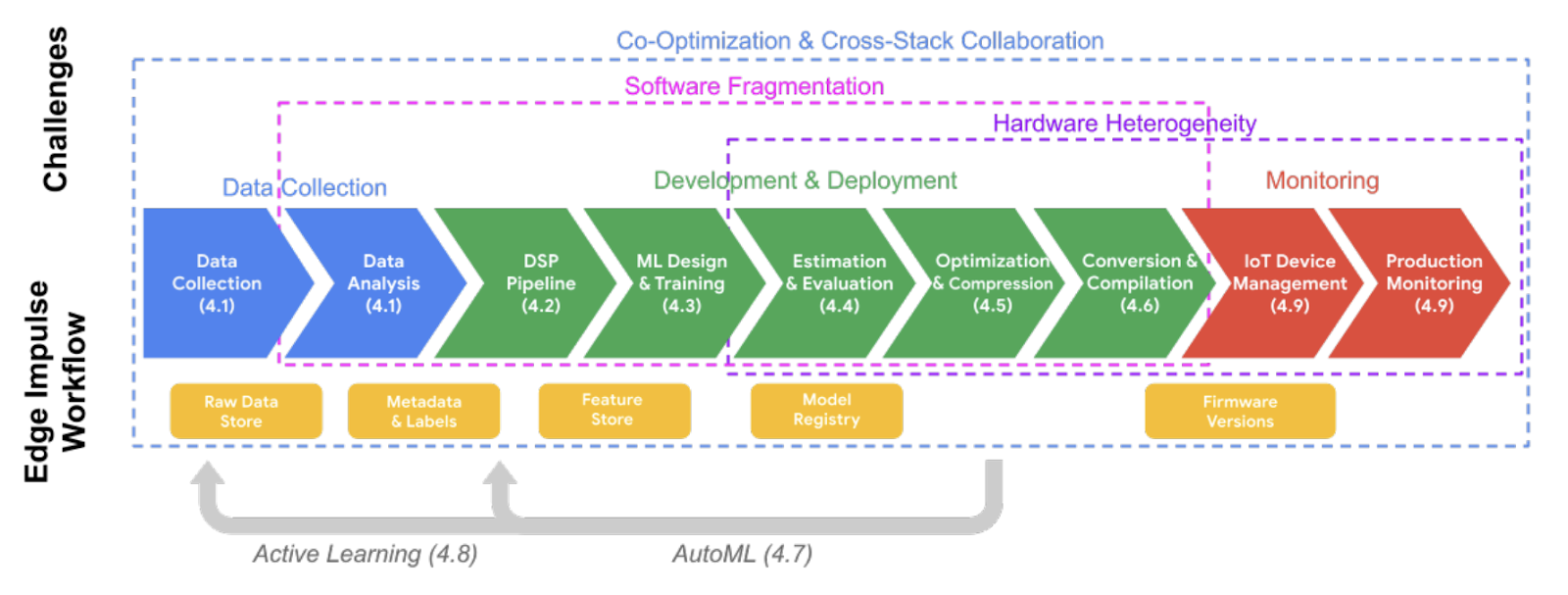

In the paper, we detail the steps of a typical edge ML project: data collection, data analysis, preprocessing (digital signal processing, or DSP), model training, evaluation, optimization, deployment, and monitoring.

A number of challenges arise from these steps:

- Device resource constraints: Most embedded devices have limited memory and processing power for the necessary calculations. As a result, computationally heavy applications like machine learning require hardware and software optimizations.

- Hardware heterogeneity: Desktops, servers, and smartphones generally run on a small number of hardware architectures, and their operating systems abstract away the necessary drivers, which makes applications easier to develop and more portable. Microcontrollers, on the other hand, are vast and varied. While most embedded applications can be written in standard languages (such as C/C++), working with timers, interrupts, hardware peripherals, and similar features requires understanding each controller's unique architecture. Vendors often provide some level of abstraction through driver libraries, but these libraries are usually specific to one vendor's family of controllers.

- Software fragmentation: In addition to vendor-specific libraries, the current edge ML development pipeline requires a stack of different tools and techniques to collect data, train a model, and deploy it to a target system.

- Co-optimization and cross-stack collaboration: For optimal performance in an edge ML application, hardware and software optimizations depend on each other, which creates its own set of challenges. Additionally, keeping data handling and preprocessing consistent between model training and deployment can be difficult, as these tasks are often done on separate machines (e.g., a server vs. a microcontroller).
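
The consistency problem in the last point is easy to underestimate. As a minimal sketch (not Edge Impulse's actual code), the idea is that the training path and the deployment path call one shared preprocessing function with one set of parameters. The names `extract_features`, `FRAME_LENGTH`, and `FRAME_STRIDE` are hypothetical, and the per-frame RMS stands in for a real DSP block such as MFCC.

```python
import math
from typing import List, Sequence, Tuple

# Hypothetical preprocessing parameters, defined once and shared by both paths.
FRAME_LENGTH = 4  # samples per frame
FRAME_STRIDE = 2  # samples between the starts of consecutive frames


def extract_features(signal: Sequence[float],
                     frame_length: int = FRAME_LENGTH,
                     frame_stride: int = FRAME_STRIDE) -> List[float]:
    """Split a raw signal into overlapping frames and return the RMS of each frame."""
    features = []
    for start in range(0, len(signal) - frame_length + 1, frame_stride):
        frame = signal[start:start + frame_length]
        features.append(math.sqrt(sum(x * x for x in frame) / frame_length))
    return features


def build_training_set(raw_samples: Sequence[Sequence[float]],
                       labels: Sequence[str]) -> List[Tuple[List[float], str]]:
    """Training path: every raw sample goes through the same extract_features()."""
    return [(extract_features(s), y) for s, y in zip(raw_samples, labels)]


def preprocess_for_inference(live_window: Sequence[float]) -> List[float]:
    """Deployment path: identical function and parameters, so features match training."""
    return extract_features(live_window)
```

Because both paths share a single definition, changing `FRAME_STRIDE` for training automatically changes it for inference as well. With two separately maintained implementations (say, Python on a server and hand-written C on the device), the same change could silently diverge and degrade accuracy without any error being raised.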

The paper provides a tour of Edge Impulse’s most salient features and how they help tackle each of these challenges. For example, the Edge Impulse data pipeline ensures that data input and preprocessing are kept consistent between training and deployment. The available deployment options for a model target a number of popular hardware and software optimizations (e.g., CMSIS-NN) while falling back on plain C++ to ensure the widest possible compatibility. Edge Impulse also offers model optimizations such as fully quantized (int8) TensorFlow Lite for Microcontrollers models, which provide faster inference times.
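
To make the quantization point concrete, here is a simplified sketch of the affine int8 scheme used by TensorFlow Lite, in which a real value is approximated as `scale * (q - zero_point)`. It is per-tensor and calibrated from a plain min/max; production converters refine this (for example, with per-channel scales for weights and representative data for calibrating activations). The helper names are our own, not a library API.

```python
from typing import List, Sequence, Tuple

INT8_MIN, INT8_MAX = -128, 127


def choose_qparams(values: Sequence[float]) -> Tuple[float, int]:
    """Pick a per-tensor scale and zero point mapping [min, max] onto the int8 range."""
    # Widen the range to include 0.0 so that zero is exactly representable.
    lo = min(min(values), 0.0)
    hi = max(max(values), 0.0)
    if hi == lo:  # all values are zero
        return 1.0, 0
    scale = (hi - lo) / (INT8_MAX - INT8_MIN)
    zero_point = round(INT8_MIN - lo / scale)
    zero_point = max(INT8_MIN, min(INT8_MAX, zero_point))
    return scale, zero_point


def quantize(values: Sequence[float], scale: float, zero_point: int) -> List[int]:
    """Map floats to int8 codes: q = clamp(round(v / scale) + zero_point)."""
    return [max(INT8_MIN, min(INT8_MAX, round(v / scale) + zero_point)) for v in values]


def dequantize(q: Sequence[int], scale: float, zero_point: int) -> List[float]:
    """Recover approximate floats: v ~= scale * (q - zero_point)."""
    return [scale * (x - zero_point) for x in q]
```

For example, quantizing `[-1.0, -0.5, 0.0, 0.25, 1.0]` and dequantizing the result reproduces each value to within about half a quantization step (`scale / 2`). Storing each weight as an 8-bit integer instead of a 32-bit float cuts weight storage by a factor of four, and integer arithmetic is typically cheaper than floating point on microcontrollers, which is largely why quantized models tend to run faster on them.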

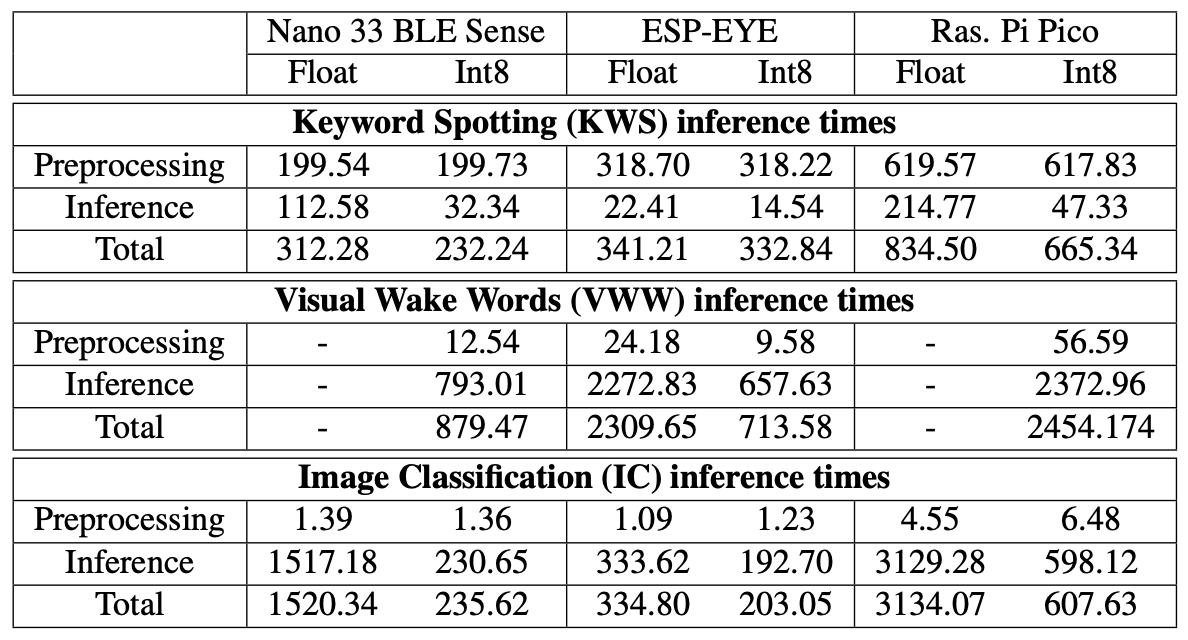

In addition to outlining these features, we benchmark several hardware platforms to show how well these optimization techniques perform. In Edge Impulse, we replicated three tasks from MLPerf™ Tiny: keyword spotting, visual wake words, and image classification. We then deployed each project to the Arduino Nano 33 BLE Sense, ESP-EYE, and Raspberry Pi Pico. The preprocessing and inference times are given in the following table.

Beyond offering a fun look at how different hardware handles edge ML tasks, the table demonstrates how Edge Impulse provides extensible, portable embedded libraries that tackle hardware and software optimization as well as cross-stack challenges. Edge Impulse streamlines the development of edge ML applications, which helps developers and companies bring such applications to market much faster. It also helps developers maintain and version-control their datasets and pipelines.

While Edge Impulse was built primarily for industry, academic institutions are finding our toolset useful in the classroom as well as for research. In case you haven't heard, we have a university program, complete with teaching materials, that helps schools teach embedded ML. You can learn more about the program here.