Running machine learning (ML) models on microcontrollers is one of the most exciting developments of the past few years, allowing small battery-powered devices to detect complex motions, recognize sounds, or find anomalies in sensor data. To make building and deploying these models accessible to every embedded developer, we’re launching first-class support for the Arduino Nano 33 BLE Sense and other 32-bit Arduino boards in Edge Impulse.

The trend to run ML on microcontrollers is sometimes called Embedded ML or TinyML. TinyML has the potential to create small devices that can make smart decisions without needing to send data to the cloud, which is great from an efficiency and privacy perspective. Even powerful deep learning models (based on artificial neural networks) are now reaching microcontrollers. Over the past year, great strides were made in making deep learning models smaller, faster and runnable on embedded hardware through projects like TensorFlow Lite for Microcontrollers, uTensor, and Arm’s CMSIS-NN, but building a quality dataset, extracting the right features, and training and deploying these models can still be complicated.

Using Edge Impulse, you can now quickly collect real-world sensor data, train ML models on this data in the cloud, and then deploy the model back to your Arduino device. From there you can integrate the model into your Arduino sketches with a single function call. Your sensors are then a whole lot smarter, able to make sense of complex events in the real world. The built-in examples allow you to collect data from the accelerometer and the microphone, but it’s easy to integrate other sensors with a few lines of code.

Excited? This is how you build your first deep learning model with the Arduino Nano 33 BLE Sense (there’s also a video tutorial here: setting up the Arduino Nano 33 BLE Sense with Edge Impulse):

Sign up for an Edge Impulse account - it’s free!

Install Node.js and the Arduino CLI (instructions here).

Plug in your development board.

Download the Arduino Nano 33 BLE Sense firmware: this is a special firmware package (source code) that contains all the code needed to quickly gather data from the board’s sensors. Launch the flash script for your platform to flash the firmware.

Launch the Edge Impulse daemon to connect your board to Edge Impulse. Open a terminal or command prompt and run:

$ npm install edge-impulse-cli -g

$ edge-impulse-daemon

Your device now shows in the Edge Impulse studio on the Devices tab, ready for you to collect some data and build a model. We’ve put together two end-to-end tutorials: detect gestures with the accelerometer or detect audio events with the microphone.

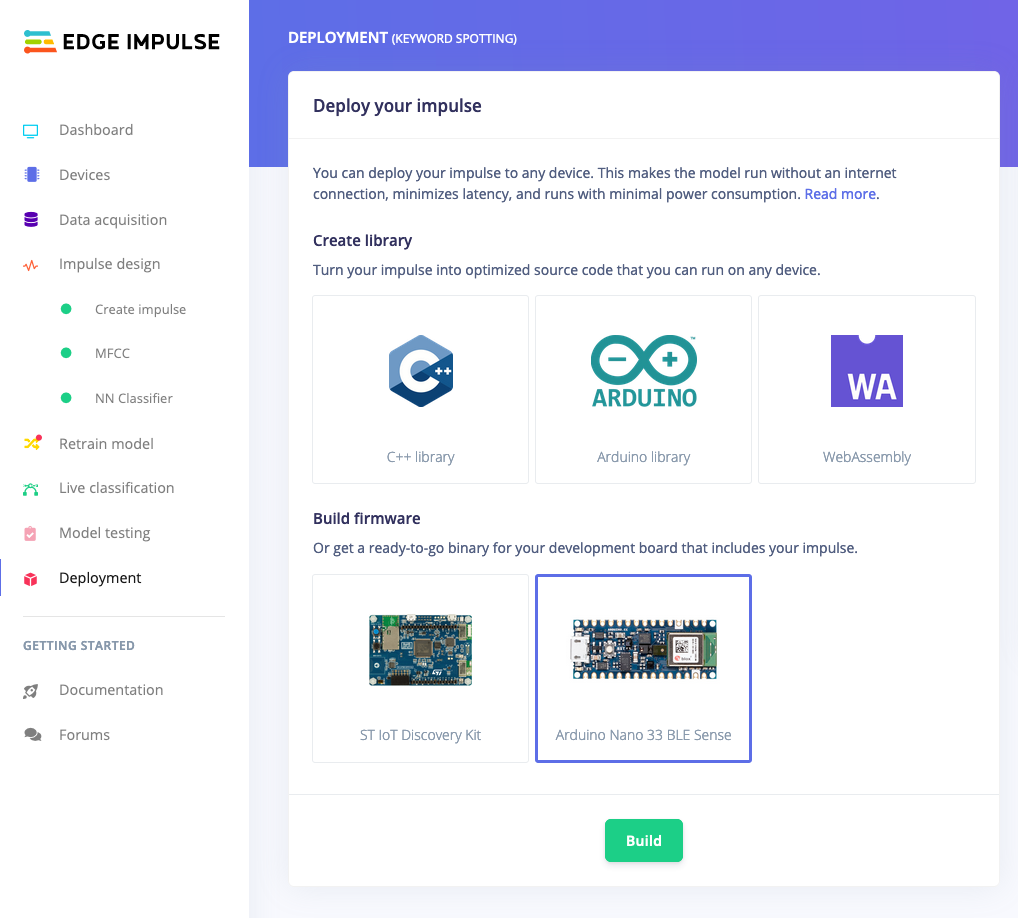

Deploy your model back to the Arduino Nano 33 BLE Sense, either as a binary that includes your full ML model or as an Arduino library that you can integrate into any sketch.

Deploy to Arduino from Edge Impulse

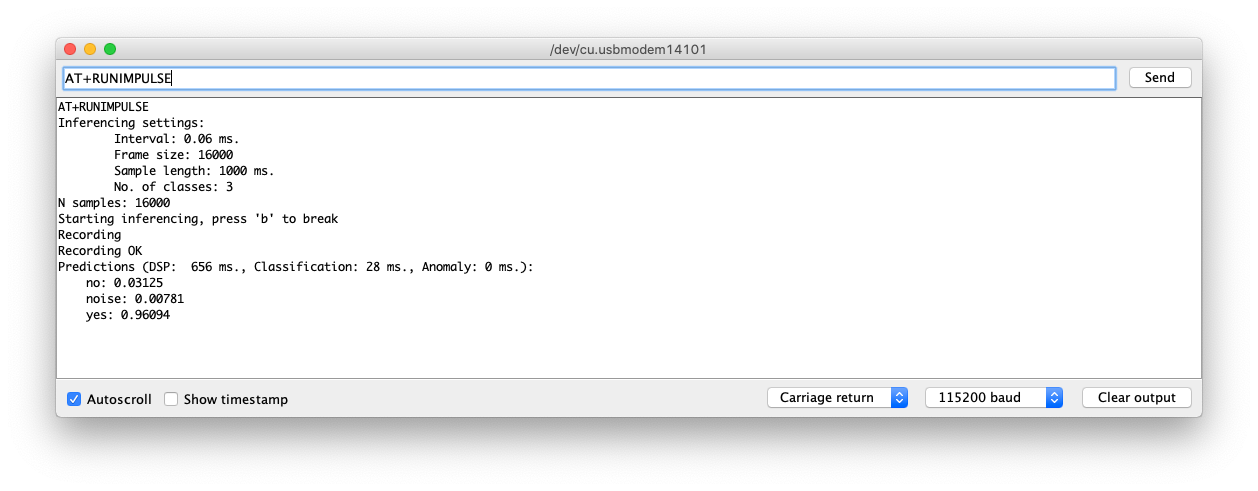

Open the serial monitor and run AT+RUNIMPULSE to start classifying real-world data.

Keyword spotting on the Arduino Nano 33 BLE Sense

Integrates with your favorite Arduino platform

We’ve launched with the Arduino Nano 33 BLE Sense, but you can also integrate Edge Impulse with your favorite Arduino platform. You can easily collect data from any sensor and development board using the Data forwarder. This is a small application that reads data over serial and sends it to Edge Impulse. All you need is a few lines of code in your sketch (here’s an example).
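As a rough sketch of what those few lines might do, the snippet below builds the kind of line a sketch would send to the Data forwarder. It assumes the format described in the data forwarder documentation: one sample per line, values separated by commas, sent at a steady rate. It is written as host-side C++ so it compiles without a board; `sample_interval_us` and `format_sample` are hypothetical names, and on an Arduino you would print each line with `Serial.println()` and pace it with `micros()`.

```cpp
#include <cassert>
#include <cstdint>
#include <cstdio>
#include <string>

// Microseconds between samples for a fixed sampling frequency.
// On an Arduino you would compare this against micros() in loop().
uint32_t sample_interval_us(uint32_t frequency_hz) {
    assert(frequency_hz > 0);  // a zero rate would divide by zero
    return 1000000UL / frequency_hz;
}

// Format one three-axis sample (hypothetical x/y/z readings) as a single
// comma-separated line, without the trailing newline that println() adds.
std::string format_sample(float x, float y, float z) {
    char line[64];
    std::snprintf(line, sizeof(line), "%.2f,%.2f,%.2f", x, y, z);
    return std::string(line);
}
```

For a 100 Hz accelerometer, `sample_interval_us(100)` gives 10000 µs between lines, and `format_sample(0.5f, -1.25f, 9.81f)` produces the line `0.50,-1.25,9.81`.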

After you’ve built a model, you can easily export it as an Arduino library. This library will run on any Arm-based Arduino platform, including the Arduino MKR family or Arduino Nano 33 IoT, provided it has enough RAM to run your model. You can now include your ML model in any Arduino sketch with just a few lines of code. After you’ve added the library to the Arduino IDE, you can find an example of integrating the model under File > Examples > Your project - Edge Impulse > static_buffer.
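The static_buffer example is built roughly around a pull-based pattern: rather than handing an array straight to the classifier, you describe the input as a signal with a total length and a callback that copies a requested slice into the classifier’s buffer. The following is a minimal host-side sketch of that pattern under stated assumptions. `Signal` is a hypothetical stand-in for the SDK’s own signal type, and the feature values are placeholders for the raw features you would paste in from the studio. In the generated library, a signal like this is what you pass to `run_classifier()`; check the example in your exported project for the exact types and signature.

```cpp
#include <cassert>
#include <cstddef>
#include <cstring>
#include <functional>

// Hypothetical stand-in for the SDK's signal type: a total length plus a
// callback the classifier calls to pull slices of the input on demand.
struct Signal {
    size_t total_length;
    std::function<int(size_t offset, size_t length, float *out_ptr)> get_data;
};

// Placeholder values standing in for raw features pasted from the studio.
static const float features[] = {0.1f, 0.2f, 0.3f, 0.4f, 0.5f, 0.6f};
static const size_t features_count = sizeof(features) / sizeof(features[0]);

// Copy `length` values starting at `offset` into `out_ptr`.
// Returns 0 on success and -1 if the request runs past the buffer.
int raw_feature_get_data(size_t offset, size_t length, float *out_ptr) {
    assert(out_ptr != nullptr);
    if (offset > features_count || length > features_count - offset) {
        return -1;
    }
    std::memcpy(out_ptr, features + offset, length * sizeof(float));
    return 0;
}

// Wrap the static buffer in a Signal without copying it.
Signal make_features_signal() {
    Signal signal;
    signal.total_length = features_count;
    signal.get_data = &raw_feature_get_data;
    return signal;
}
```

Because the callback only copies the slice that is asked for, the classifier never needs a second full-size copy of the input, which matters on boards with little RAM.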

To run your models as fast and energy-efficiently as possible, we automatically leverage the hardware capabilities of your Arduino board, for example the signal processing extensions available on the Arm Cortex-M4-based Arduino Nano 33 BLE Sense or the more powerful Arm Cortex-M7-based Arduino Portenta H7. We also leverage the optimized neural network kernels that Arm provides in CMSIS-NN.
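To give a feel for what the signal processing extensions buy you, the snippet emulates in portable C++ what the Cortex-M4/M7 SMLAD instruction does: two signed 16-bit multiplies and an accumulate in a single instruction. Optimized kernels such as those in CMSIS-NN rely on instructions like this to process two values at a time. This is an illustrative emulation, not CMSIS-NN code; `smlad_emulated`, `pack_q15` and `dot_q15` are hypothetical names, and on the device the CMSIS `__SMLAD` intrinsic maps directly to the hardware instruction.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>

// Portable emulation of SMLAD: multiply the low 16-bit halves of a and b,
// multiply the high halves, and add both products to acc in one step.
// Computed in 64 bits and truncated to 32, mirroring the wrap-around
// result of the hardware instruction.
int32_t smlad_emulated(uint32_t a, uint32_t b, int32_t acc) {
    const int16_t a_lo = static_cast<int16_t>(a & 0xFFFFu);
    const int16_t a_hi = static_cast<int16_t>(a >> 16);
    const int16_t b_lo = static_cast<int16_t>(b & 0xFFFFu);
    const int16_t b_hi = static_cast<int16_t>(b >> 16);
    const int64_t sum = static_cast<int64_t>(acc) +
                        static_cast<int64_t>(a_lo) * b_lo +
                        static_cast<int64_t>(a_hi) * b_hi;
    return static_cast<int32_t>(static_cast<uint32_t>(sum));
}

// Pack two 16-bit values into one 32-bit word, low lane first.
uint32_t pack_q15(int16_t lo, int16_t hi) {
    return (static_cast<uint32_t>(static_cast<uint16_t>(hi)) << 16) |
           static_cast<uint16_t>(lo);
}

// Dot product of two int16 vectors of even length, two lanes per step.
int32_t dot_q15(const int16_t *x, const int16_t *y, size_t n) {
    assert(n % 2 == 0);
    int32_t acc = 0;
    for (size_t i = 0; i < n; i += 2) {
        acc = smlad_emulated(pack_q15(x[i], x[i + 1]),
                             pack_q15(y[i], y[i + 1]), acc);
    }
    return acc;
}
```

For example, `dot_q15` over {1, -2, 3, 4} and {5, 6, -7, 8} needs only two multiply-accumulate steps for four products and returns 4; on the Cortex-M4 each step is one instruction instead of several.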

A path to production

This release is the first step in a really exciting collaboration. We believe that many embedded applications can benefit from ML today, whether for predictive maintenance (‘this machine is starting to behave abnormally’), worker safety (‘fall detected’), or health care (‘detected early signs of a potential infection’). Using Edge Impulse with the Arduino MKR family, you can already quickly deploy simple ML-based applications combined with cellular, WiFi or LoRa connectivity. Over the coming months, we’ll also add integrations for the Arduino Portenta H7 on Edge Impulse, making higher-performance industrial applications possible.

On a related note: if you have ideas on how TinyML can help slow down or detect COVID-19, join the UNDP COVID-19 Detect and Protect challenge. For inspiration, see Kartik Thakore’s blog post on cough detection with the Arduino Nano 33 BLE Sense and Edge Impulse.

We can’t wait to see what you’ll build!

--

Jan Jongboom is the CTO and co-founder of Edge Impulse; he built his first IoT projects using the Arduino Starter Kit.

Dominic Pajak is VP Business Development at Arduino.