For more than 50 years, Occupational Safety and Health Administration (OSHA) regulations have required construction workers to wear hard hats. The wisdom of these regulations is hard to dispute: there were 2,200 fatalities related to traumatic brain injury at construction sites between 2003 and 2010. A Bureau of Labor Statistics survey found that in 84% of occupational head injuries, the injured worker was not wearing head protection. If we could ensure that workers in potentially dangerous environments always wore their hard hats, many of these injuries and fatalities could likely be prevented.

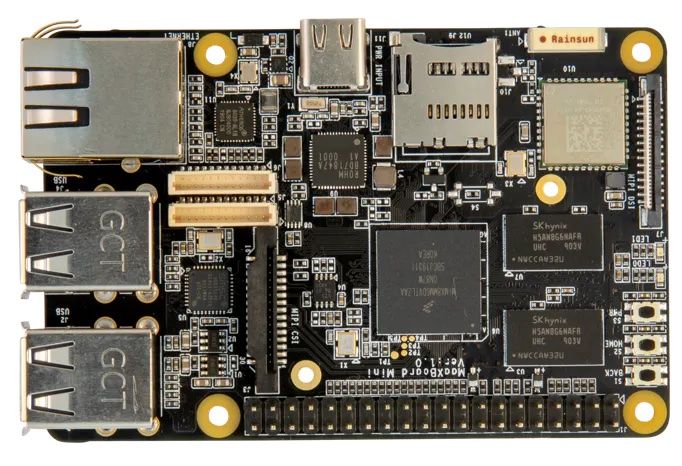

That was the motivation behind machine learning enthusiast Monica Houston’s latest project, which uses Edge Impulse to determine whether construction workers are wearing their hard hats. Houston deployed a machine learning object detection model to Avnet’s MaaXBoard Mini single-board computer, which features four Cortex-A53 CPU cores clocked at 1.8 GHz, 2 GB of RAM, Bluetooth Low Energy 4.0, Wi-Fi, and a MIPI camera interface. Earlier benchmark comparisons found that the MaaXBoard Mini outperforms other single-board Linux computers, such as the NVIDIA Jetson Nano and Raspberry Pi 4, when running inference with MobileNet SSD V1 and V2 models, making it a good fit for this application. A Logitech HD Pro Webcam C920, connected via USB, handled image acquisition.
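
The methodology behind that benchmark comparison is not described here, but as a rough illustration of how such numbers are usually gathered, the following sketch times repeated calls to an inference function after a few warm-up runs and reports the median latency and the corresponding frame rate. The `benchmark` helper is hypothetical, and its `infer` argument is a stand-in for whatever runs one forward pass of a MobileNet SSD model on the board.

```python
import statistics
import time


def benchmark(infer, inputs, warmup=3, runs=20):
    """Time `infer(x)` over `inputs`, discarding warm-up calls.

    Returns (median latency in milliseconds, frames per second at that median).
    `infer` is any callable that performs one inference (hypothetical here).
    """
    # Warm-up calls let caches, lazy initialization, etc. settle first.
    for x in inputs[:warmup]:
        infer(x)

    latencies = []
    for i in range(runs):
        x = inputs[i % len(inputs)]  # cycle through the sample inputs
        start = time.perf_counter()
        infer(x)
        latencies.append((time.perf_counter() - start) * 1000.0)

    median_ms = statistics.median(latencies)
    fps = 1000.0 / median_ms if median_ms > 0 else float("inf")
    return median_ms, fps
```

Reporting the median rather than the mean keeps a single slow call (for example, one stalled by the operating system) from skewing the result.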

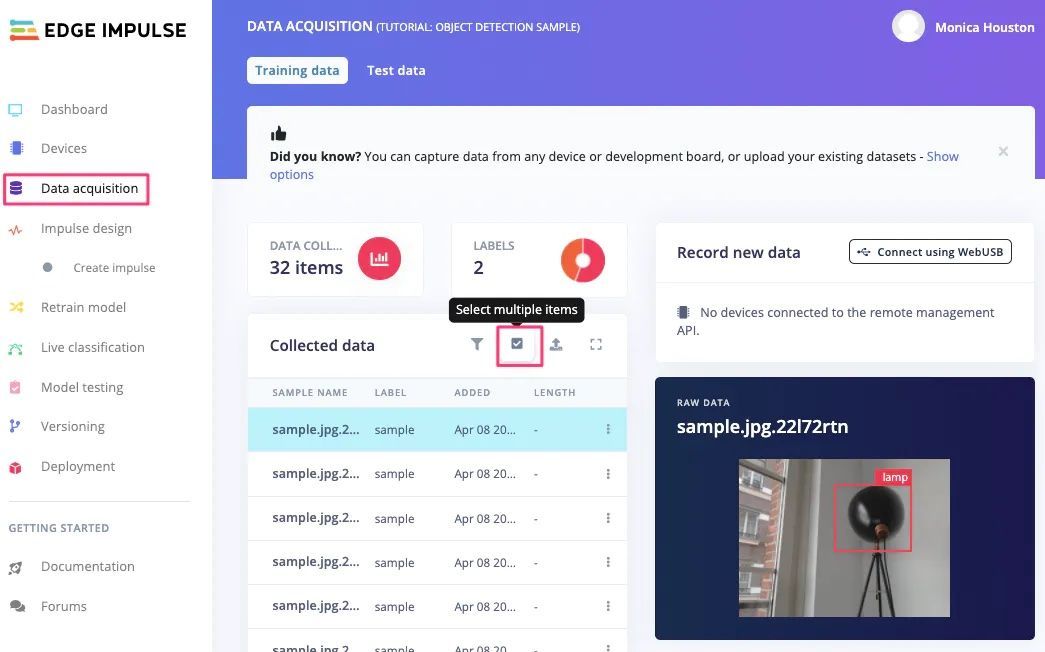

After a quick setup process to get the hardware up and running, the next step was to install the Edge Impulse command line interface (CLI) on the board. Among other things, the CLI makes it possible to capture images from the MaaXBoard Mini directly within the Edge Impulse web interface. With the board connected to Edge Impulse via the CLI, Houston used the “Data acquisition” tab of the web application to collect and label a training dataset consisting of images of people with and without hard hats, as well as hard hats that were not being worn. If the data has already been collected, or if a public dataset is being used, it can instead be uploaded with the “upload existing data” tool.
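
Whichever route is used to build the dataset, it can help to sanity-check class balance before training. The following is a minimal sketch that assumes bounding-box annotations are held in a dictionary mapping each image filename to a list of boxes. The structure and the label names (`hardhat`, `no_hardhat`, `hardhat_unworn`) are hypothetical; they are not Edge Impulse's upload format or the labels used in Houston's project.

```python
from collections import Counter

# Hypothetical annotations: image filename -> list of bounding boxes (pixels).
# Label names are illustrative only.
annotations = {
    "site_001.jpg": [
        {"label": "hardhat", "x": 40, "y": 12, "width": 60, "height": 45},
        {"label": "no_hardhat", "x": 180, "y": 30, "width": 55, "height": 60},
    ],
    "site_002.jpg": [
        {"label": "hardhat", "x": 90, "y": 20, "width": 58, "height": 44},
    ],
    "table_003.jpg": [
        {"label": "hardhat_unworn", "x": 10, "y": 150, "width": 70, "height": 50},
    ],
}


def label_counts(annotations):
    """Count how many boxes of each label appear across the whole dataset."""
    counts = Counter()
    for boxes in annotations.values():
        counts.update(box["label"] for box in boxes)
    return counts


def underrepresented(counts, min_fraction=0.2):
    """Return (sorted) labels whose share of all boxes is below min_fraction."""
    total = sum(counts.values())
    return sorted(label for label, n in counts.items() if n / total < min_fraction)


counts = label_counts(annotations)
print(underrepresented(counts, min_fraction=0.3))  # ['hardhat_unworn', 'no_hardhat']
```

A class flagged this way is a hint to collect more examples of it before training, since a detector tends to underperform on labels it rarely sees.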

After collecting the data, the next step was to design an impulse. This began with generating features from the input data, which reduces the dimensionality of the images and, in turn, the model’s complexity and the computational resources it needs. The feature explorer lets you visually assess the quality of the features, to confirm that they separate the classes into distinct clusters. Poorly separated classes may point to a problem with the input data, which should be corrected before moving on.
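
Edge Impulse’s image processing block and feature explorer are more sophisticated than this, but the two ideas can be illustrated in a few lines. In this sketch, `downsample` shrinks a grayscale image by block averaging and scales pixels to [0, 1], and `separation_score` is a crude numeric stand-in for eyeballing the feature explorer: it compares the gap between class centroids with the spread inside each class. Both are hypothetical helpers written for this illustration, not Edge Impulse functions.

```python
import math


def downsample(image, factor):
    """Average-pool a 2D grayscale image (list of rows, values 0-255) by
    `factor`, scale to [0, 1], and flatten it into a feature vector.
    Rows/columns that don't fill a whole block are dropped."""
    rows, cols = len(image), len(image[0])
    features = []
    for r in range(0, rows - rows % factor, factor):
        for c in range(0, cols - cols % factor, factor):
            block = [image[r + i][c + j] for i in range(factor) for j in range(factor)]
            features.append(sum(block) / len(block) / 255.0)
    return features


def centroid(vectors):
    """Element-wise mean of equal-length feature vectors."""
    n = len(vectors)
    return [sum(v[i] for v in vectors) / n for i in range(len(vectors[0]))]


def separation_score(features_by_class):
    """Smallest distance between any two class centroids divided by the
    largest mean within-class distance to a centroid. Requires >= 2 classes.
    Scores well above 1 suggest distinct clusters; near or below 1, overlap."""
    centroids = {label: centroid(vs) for label, vs in features_by_class.items()}
    max_spread = max(
        sum(math.dist(v, centroids[label]) for v in vs) / len(vs)
        for label, vs in features_by_class.items()
    )
    labels = list(centroids)
    min_gap = min(
        math.dist(centroids[a], centroids[b])
        for i, a in enumerate(labels)
        for b in labels[i + 1:]
    )
    return min_gap / max_spread if max_spread > 0 else math.inf
```

For example, `downsample([[0, 0, 255, 255], [0, 0, 255, 255], [255, 255, 0, 0], [255, 255, 0, 0]], 2)` returns `[0.0, 1.0, 1.0, 0.0]`, turning 16 values into 4.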

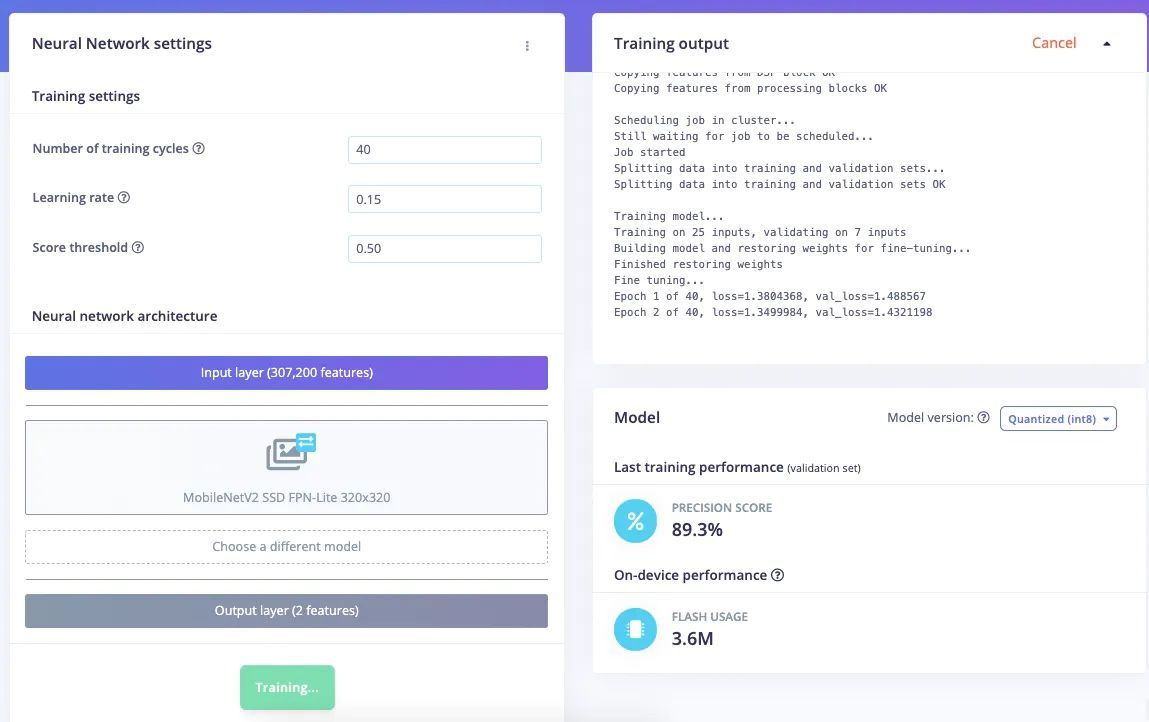

The next step in the impulse is the learning block, which consists of a MobileNet V2 SSD neural network. After tuning a few hyperparameters, training can be started with a single click. Once training completes, metrics on model accuracy and on-device performance are presented for evaluation. Live classification is also available: images stream from the device and are classified in Edge Impulse in real time, validating the full pipeline. If everything looks good, a few commands in the terminal are all it takes to deploy the model to the MaaXBoard Mini with the CLI so that it runs on-device.
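
An SSD model emits many candidate boxes per image, each with a label and a confidence score, so detections are typically thresholded and passed through non-maximum suppression (NMS) before they are acted on. Edge Impulse’s runtime may already handle some of this, so treat the following as an illustration of the technique rather than a description of the deployed pipeline. It assumes each detection is a dict with `label`, `value` (confidence), and `x`, `y`, `width`, `height` in pixels; that shape and the label names are assumptions for this sketch.

```python
def iou(a, b):
    """Intersection-over-union of two boxes given as dicts with
    x, y (top-left corner), width and height in pixels."""
    x1 = max(a["x"], b["x"])
    y1 = max(a["y"], b["y"])
    x2 = min(a["x"] + a["width"], b["x"] + b["width"])
    y2 = min(a["y"] + a["height"], b["y"] + b["height"])
    inter = max(0, x2 - x1) * max(0, y2 - y1)
    union = a["width"] * a["height"] + b["width"] * b["height"] - inter
    return inter / union if union > 0 else 0.0


def filter_detections(detections, min_confidence=0.5, iou_threshold=0.5):
    """Drop low-confidence boxes, then apply greedy per-label NMS:
    visit boxes from highest to lowest score and keep one only if it does
    not overlap an already-kept box of the same label by >= iou_threshold."""
    candidates = sorted(
        (d for d in detections if d["value"] >= min_confidence),
        key=lambda d: d["value"],
        reverse=True,
    )
    kept = []
    for det in candidates:
        if all(k["label"] != det["label"] or iou(k, det) < iou_threshold for k in kept):
            kept.append(det)
    return kept
```

Running NMS per label means a `hardhat` box and a `no_hardhat` box at the same spot are both kept, leaving the decision about conflicting labels to later logic.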

To close the loop, Houston developed an app that uses Twilio to send an SMS message whenever the device detects a person without a hard hat. The device can then be installed at a worksite to keep watch for anyone who forgot to wear their hard hat, and the SMS message can be sent to a supervisor so that they can take immediate action.
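
Houston’s app is not described in detail here, but one design concern for any such app is that a camera running at several frames per second would otherwise send a message for every frame. The hypothetical `HardHatAlerter` class sketches a cooldown: it alerts on a `no_hardhat` detection (a hypothetical label name) at most once per `cooldown_s` seconds. The `send_sms` callable is injected so the logic can be tested without network access; in a real deployment it might wrap the Twilio Python helper library’s `Client(account_sid, auth_token).messages.create(body=..., from_=..., to=...)`.

```python
import time


class HardHatAlerter:
    """Calls `send_sms(message)` when a frame contains a confident detection
    of `label`, but at most once per `cooldown_s` seconds, so a supervisor
    is not flooded with one message per video frame."""

    def __init__(self, send_sms, cooldown_s=300.0, label="no_hardhat",
                 min_confidence=0.6, clock=time.monotonic):
        self.send_sms = send_sms            # e.g. a wrapper around Twilio
        self.cooldown_s = cooldown_s
        self.label = label                  # hypothetical label name
        self.min_confidence = min_confidence
        self.clock = clock                  # injectable for testing
        self._last_sent = None

    def process_frame(self, detections):
        """Check one frame's detections; return True if an alert was sent."""
        hits = [d for d in detections
                if d["label"] == self.label and d["value"] >= self.min_confidence]
        if not hits:
            return False
        now = self.clock()
        if self._last_sent is not None and now - self._last_sent < self.cooldown_s:
            return False  # still cooling down from the previous alert
        self.send_sms(f"Alert: {len(hits)} person(s) detected without a hard hat.")
        self._last_sent = now
        return True
```

Injecting `clock` as well makes the cooldown deterministic in tests, since time can be advanced by hand instead of waiting.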

Check out the project write-up for all the details, along with instructions to build your own. You can also watch Houston’s deep dive into the system from Imagine 2021 below.
