Worker falls in industrial settings have long been a major concern for employers and employees alike, as they can result in serious injuries and costly accidents. According to the Occupational Safety and Health Administration, falls are one of the leading causes of injuries and deaths in the workplace, accounting for over 15% of all worker fatalities in 2019.

Falls can occur in a variety of industrial settings, such as construction sites, warehouses, and manufacturing facilities. They can happen for many reasons, including lack of proper safety equipment, lack of training on how to use safety equipment, and failure to maintain equipment and facilities.

The injuries that can result from falls may be severe and long-lasting, and can include broken bones, head injuries, spinal cord injuries, and even death. These injuries can lead to extensive medical treatment, lost wages, and long-term disability. In addition, employers may be liable for the costs of these injuries, which can include medical expenses, workers’ compensation claims, and lawsuits.

Ideally, worker falls would be prevented before they ever happen, and many are, through the use of proper equipment and adherence to safety procedures. But despite these precautions, some falls will still occur. For this reason, employers must also be able to recognize when someone has fallen so that action can be taken immediately. The sooner help arrives, the better the outcome is likely to be.

Rolling out an automated solution to instantly detect worker falls may sound both technically challenging and costly, but that may not be the case. Engineer and serial inventor Nekhil has developed a proof-of-concept device that shows just how simple it can be to create a fall detector, given the right choice of tools. By leveraging a very well-supported hardware platform and Edge Impulse Studio, Nekhil was able to tackle the job in less than a day.

The core idea behind this device is to capture images of a workplace, then run a machine learning object detection algorithm to detect people and recognize whether any of them appear to have fallen down. That information could then be used to send an alert to a designated person who can provide assistance. Given its low cost, computing power, and flexibility, a Raspberry Pi 4 single-board computer was chosen as the hardware platform. It was paired with a Raspberry Pi Camera Module to capture high-quality images.

As previously mentioned, an object detection algorithm was going to serve as the primary fall detection mechanism, so it would first need to learn to recognize what it looks like when a person has fallen down. Nekhil had some options at this point — he could have tracked down an existing dataset, or scraped some relevant images from the web, but in this case, he decided to create his own training dataset.

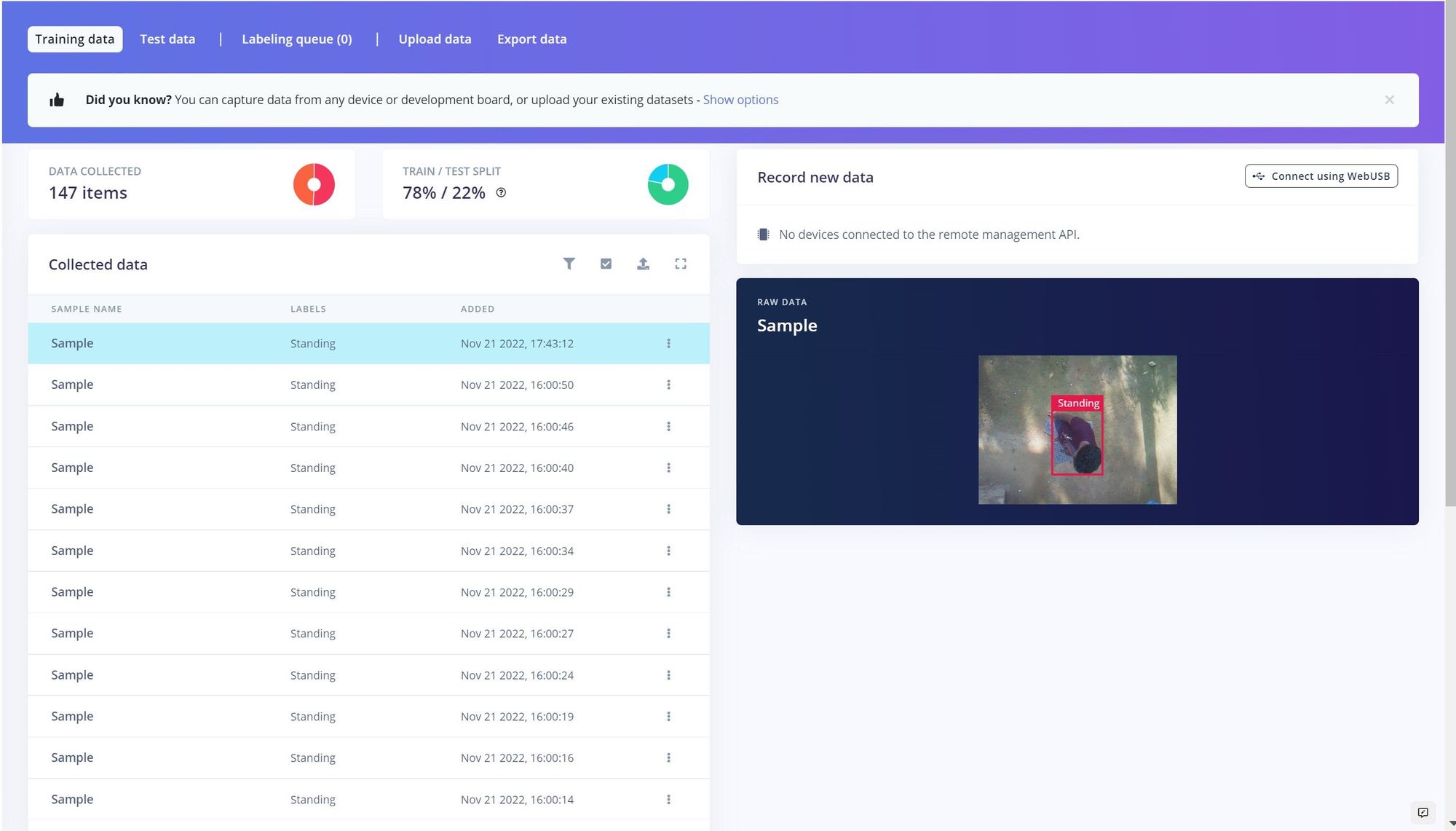

The Raspberry Pi was first linked to an Edge Impulse Studio project using the Edge Impulse CLI. After that initial step was completed, any images captured by the Raspberry Pi Camera Module would be automatically uploaded to Edge Impulse. Sample data was collected for two classes: a person standing and a person who has fallen down. With just under 200 sample images uploaded, Nekhil moved on to annotating the data.

To help an object detection model learn, objects of interest need to be identified by drawing bounding boxes around them. Even for a small dataset such as this one, that could take some time. Fortunately, Edge Impulse Studio’s labeling queue provides an AI-assisted boost by proposing bounding boxes for you. Just verify that each image is annotated properly, occasionally make a small adjustment, and the job is done.

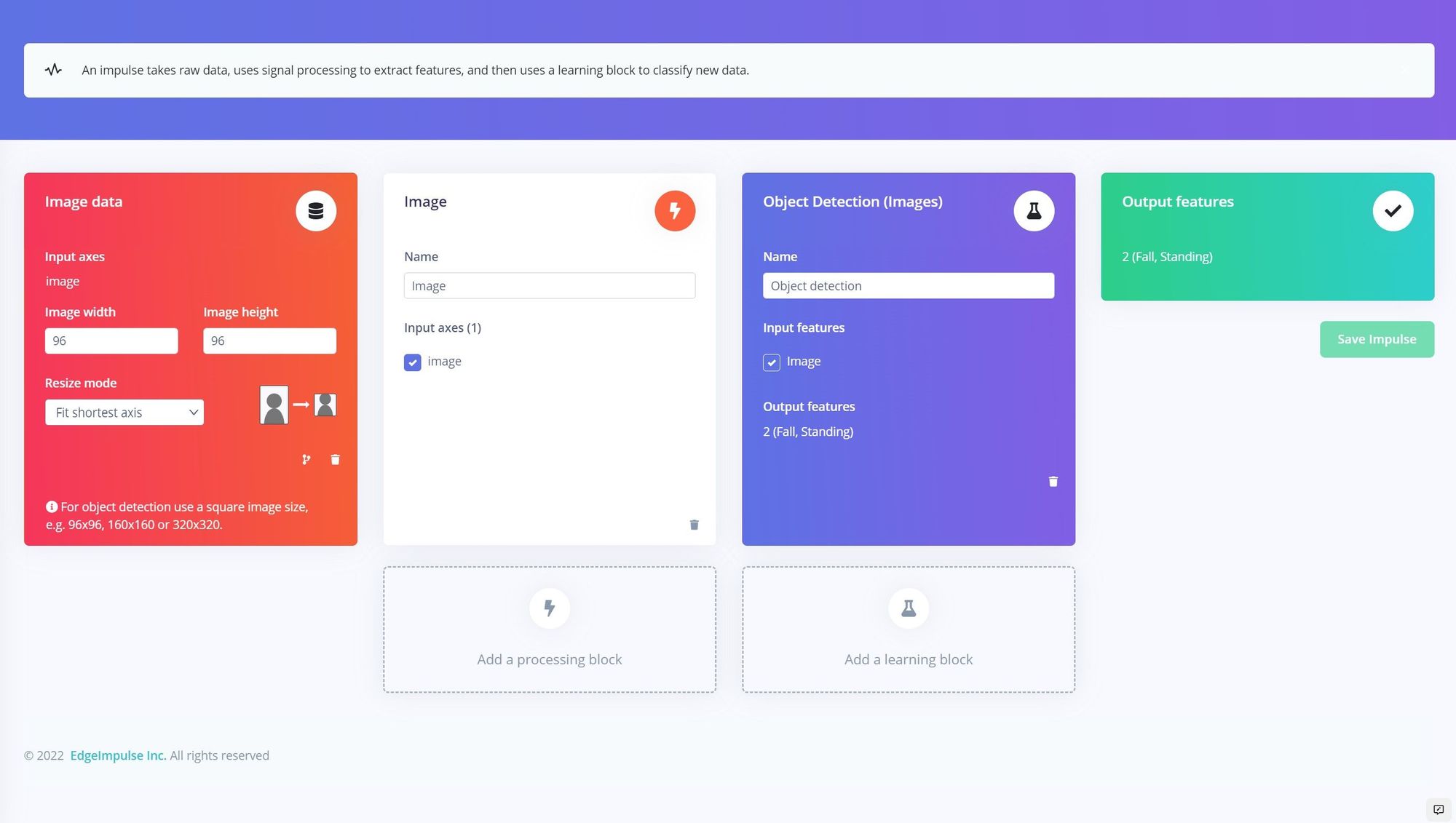

With the preliminary work out of the way, Nekhil was ready to design an impulse to analyze the images captured by the device. This consisted of a preprocessing step to reduce the size of the images, which is essential to minimizing the computational resources required by subsequent steps in the pipeline. This was followed by the object detection model, Edge Impulse’s ground-breaking FOMO algorithm that is ideal for resource-constrained hardware platforms like the Raspberry Pi. After training, this model will be able to recognize people that are either standing, or that have fallen down.
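To give a feel for why the resizing step matters, here is a minimal sketch of nearest-neighbor downscaling in plain Python. It is not how Edge Impulse implements its image preprocessing block; the function name `downscale_nearest` and the 640x480 and 96x96 sizes are assumptions chosen only for illustration, since the article does not state the resolutions used.

```python
def downscale_nearest(image, out_w, out_h):
    """Shrink a 2D grid of pixel values using nearest-neighbor sampling."""
    in_h = len(image)
    in_w = len(image[0])
    return [
        # Map each output pixel back to the closest source pixel.
        [image[(y * in_h) // out_h][(x * in_w) // out_w] for x in range(out_w)]
        for y in range(out_h)
    ]


# Hypothetical sizes: a 640x480 grayscale frame reduced to 96x96.
frame = [[(x + y) % 256 for x in range(640)] for y in range(480)]
small = downscale_nearest(frame, 96, 96)

# Ratio of input pixels to output pixels the model has to process.
reduction = (640 * 480) / (96 * 96)
print(len(small), len(small[0]), round(reduction, 1))
```

With these assumed sizes, the model sees roughly 33 times fewer pixels per frame, which is where most of the savings in later pipeline stages come from.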

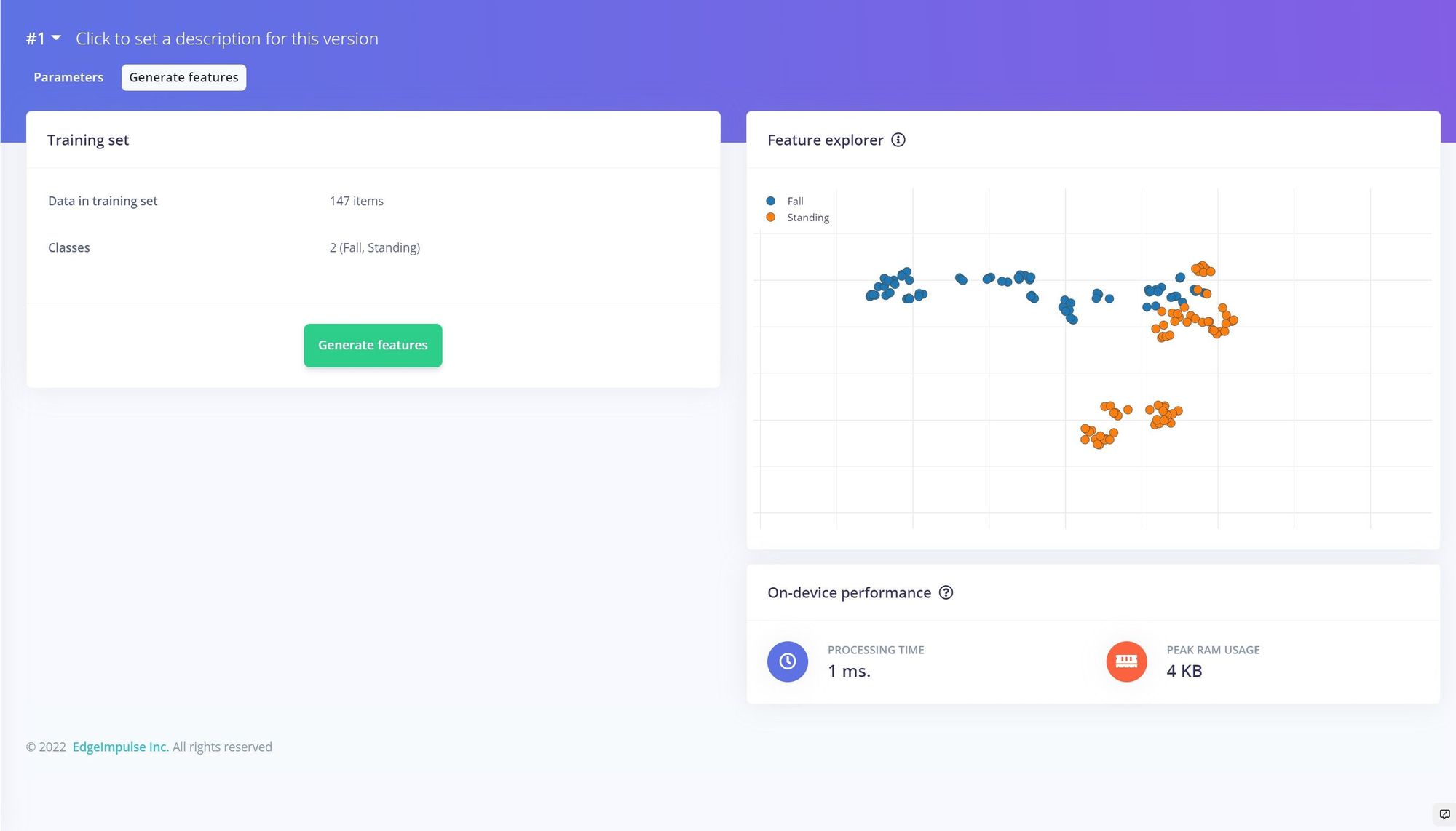

Before kicking off the training process, the Feature Explorer tool was used to assess how well the classes are separated from one another. This gives an early indication as to how well the model might ultimately perform, and can also help to pinpoint any issues with the training dataset before continuing. Since class separation looked good, the training process was started after making a few small tweaks to the model’s hyperparameters.

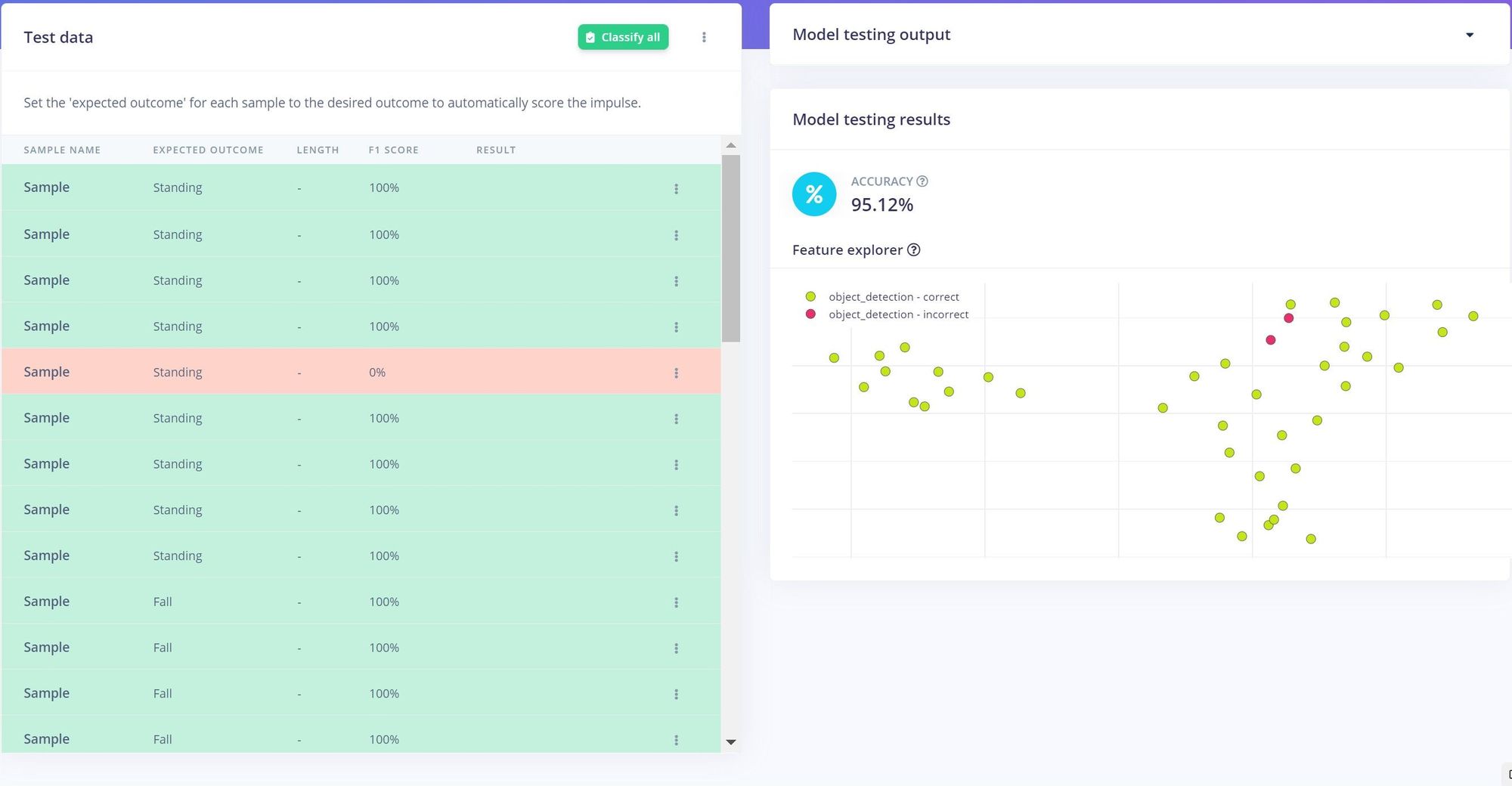

After a short time, the training process had finished, and metrics were presented to help assess how well the model was doing its intended job. The model had achieved an accuracy score of better than 98% on the first try. Not bad at all, but such a great result could be due to overfitting of the model to the training data. To check for that possibility, the more stringent model testing tool was used. It validates the model using only data that was excluded from the training process, and it reported an accuracy of better than 95%.
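The idea behind checking for overfitting can be sketched in a few lines: score the model on the data it trained on, score it again on data it never saw, and compare. The snippet below is a simplified illustration that treats each image as carrying a single label, which is not how an object detector is really scored in Edge Impulse Studio. The label names and the example predictions are made up for the sketch.

```python
def accuracy(predictions, labels):
    """Fraction of predictions that match the true labels."""
    if len(predictions) != len(labels):
        raise ValueError("predictions and labels must have the same length")
    if not labels:
        raise ValueError("at least one sample is required")
    correct = sum(p == t for p, t in zip(predictions, labels))
    return correct / len(labels)


def overfitting_gap(train_accuracy, holdout_accuracy):
    """Positive values mean the model does better on data it has already seen."""
    return train_accuracy - holdout_accuracy


# Made-up example data, for illustration only.
train_true = ["fallen", "standing", "fallen", "standing"]
train_pred = ["fallen", "standing", "fallen", "standing"]
holdout_true = ["fallen", "standing", "standing", "standing"]
holdout_pred = ["fallen", "standing", "fallen", "standing"]

gap = overfitting_gap(
    accuracy(train_pred, train_true),
    accuracy(holdout_pred, holdout_true),
)
print(f"train/holdout accuracy gap: {gap:.2f}")
```

A small gap, like the few percentage points reported here, suggests the model generalizes reasonably well; a large gap would be a sign that it has memorized the training images.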

Everything was checking out great, so as a final test, Nekhil uploaded a few more images that were not a part of the initial dataset he captured. These images were analyzed by the model, and it detected both standing and fallen people correctly, so it was time to deploy the object detection pipeline to the Raspberry Pi. This was done using Edge Impulse’s Linux Python SDK, which makes it easy to download the model to any Linux-based computer system. Going this route also offers a lot of flexibility in adding one’s own logic that is triggered based on the model’s inferences. This could, for example, make it possible to send a text message to a supervisor when a worker falls down.
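As a rough sketch of what such custom logic might look like, the snippet below decides when to raise an alert from a single inference result. It does not import the SDK, so it runs anywhere. It assumes the result is a dictionary with detections under `result["result"]["bounding_boxes"]`, each carrying a `label` and a confidence `value`; verify that shape against the SDK before relying on it. The label name `fallen`, the confidence threshold, the cooldown, and the `notify` callback are all hypothetical placeholders, and actually sending a text message is left out.

```python
import time

FALLEN_LABEL = "fallen"    # assumed class name; use the label from your project
MIN_CONFIDENCE = 0.6       # hypothetical threshold
ALERT_COOLDOWN_S = 60.0    # hypothetical minimum gap between alerts


def fallen_detections(result, min_confidence=MIN_CONFIDENCE):
    """Return detections labeled as fallen with enough confidence."""
    boxes = result.get("result", {}).get("bounding_boxes", [])
    return [
        box for box in boxes
        if box.get("label") == FALLEN_LABEL
        and box.get("value", 0.0) >= min_confidence
    ]


class FallAlerter:
    """Calls notify() on a detected fall, at most once per cooldown period."""

    def __init__(self, notify, cooldown_s=ALERT_COOLDOWN_S, clock=time.monotonic):
        self.notify = notify
        self.cooldown_s = cooldown_s
        self.clock = clock
        self._last_alert = None

    def handle(self, result):
        hits = fallen_detections(result)
        if not hits:
            return False
        now = self.clock()
        # Suppress repeat alerts while the same fall stays in view.
        if self._last_alert is not None and now - self._last_alert < self.cooldown_s:
            return False
        self._last_alert = now
        self.notify(f"Possible fall detected ({len(hits)} detection(s))")
        return True


# Example with a hand-written result; a real one would come from the model.
sample_result = {
    "result": {
        "bounding_boxes": [
            {"label": "standing", "value": 0.91},
            {"label": "fallen", "value": 0.84},
        ]
    }
}
alerter = FallAlerter(notify=print)
alerter.handle(sample_result)
```

The cooldown is a design choice rather than a requirement: without it, a person lying in view of the camera would trigger a new alert on every frame.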

Do you want to take the first steps towards making your workplace smarter and safer in an afternoon? If so, reading up on Nekhil’s documentation is a great way to get started.
