Livestock production is a massive market: according to the USDA, over $120 billion worth of beef was produced in the US during 2020 alone. Naturally, those who raise livestock have a keen interest in raising as many healthy animals as possible and minimizing the risk of losses. One way to improve yields is through the use of animal trackers. These devices monitor animal locations to help find those that get lost, allow for better pasture utilization, and help detect concerning behavioral anomalies.

The numerous benefits that trackers provide certainly make them attractive. However, there is a downside: these devices typically need to be manually installed on each animal. Purchasing a separate tracker for every animal, plus the installation labor, can really add up, and many trackers also require an annual subscription to be of any use. This state of affairs should concern all of us, because even those of us who play no role in raising livestock still have these costs passed on to us at the grocery store. It is exactly these concerns that led engineer Jallson Suryo to develop a new type of animal tracker that sidesteps the issues with current devices.

Suryo developed a proof-of-concept, drone-mounted animal tracker that can survey a large area and count the number of each type of animal present in it. Rather than instrumenting each animal, this tracker uses computer vision and machine learning to locate and identify them, so a single tracker can keep watch over an entire pasture full of animals. And with the help of Edge Impulse, the algorithm runs entirely on an edge computing device onboard the drone, so no cloud connection or subscription service is required.

Keeping with the low-cost theme of the project, Suryo chose the Raspberry Pi 3 Model B+ single board computer (a Raspberry Pi 4 or similar would also work) to serve as the main processing unit. A Raspberry Pi Camera Module gives the tracker its vision capability, and these components are mounted onto a quadcopter drone. Not only is this a low-cost build, but the components are also easy to source and very simple to use — one does not need to be a professional engineer to build this animal tracker.

With a solid hardware platform in place, Suryo used the Edge Impulse machine learning development platform to build a data analysis pipeline that can recognize and count animals in images. Object detection is traditionally a computationally intensive problem, so he chose Edge Impulse’s ground-breaking FOMO™ object detection algorithm, which is 30 times faster than MobileNet SSD yet requires less than 200 KB of RAM. These characteristics make FOMO an ideal tool for detecting and counting objects on edge computing platforms like the Raspberry Pi.
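To make the "recognize and count" step concrete, here is a minimal sketch of turning one frame's detections into per-animal counts. It is not Suryo's code. The detection dictionaries, their field names (`label`, `value`, `x`, `y`), the `count_by_label` helper, and the 0.5 confidence threshold are all assumptions chosen for illustration.

```python
from collections import Counter


def count_by_label(detections, min_confidence=0.5):
    """Count detections per label, skipping low-confidence ones.

    `detections` is assumed to be a list of dicts with a "label" string
    and a "value" confidence score between 0 and 1 (hypothetical format).
    """
    return Counter(
        d["label"] for d in detections if d["value"] >= min_confidence
    )


# Hypothetical detections for a single camera frame.
frame = [
    {"label": "duck", "value": 0.91, "x": 24, "y": 40},
    {"label": "duck", "value": 0.42, "x": 64, "y": 16},    # below threshold
    {"label": "turtle", "value": 0.88, "x": 72, "y": 56},
]

counts = count_by_label(frame)
print(dict(counts))  # {'duck': 1, 'turtle': 1}
```

Filtering on confidence before counting keeps weak, likely spurious detections from inflating the totals. The right threshold would need to be tuned against real footage.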

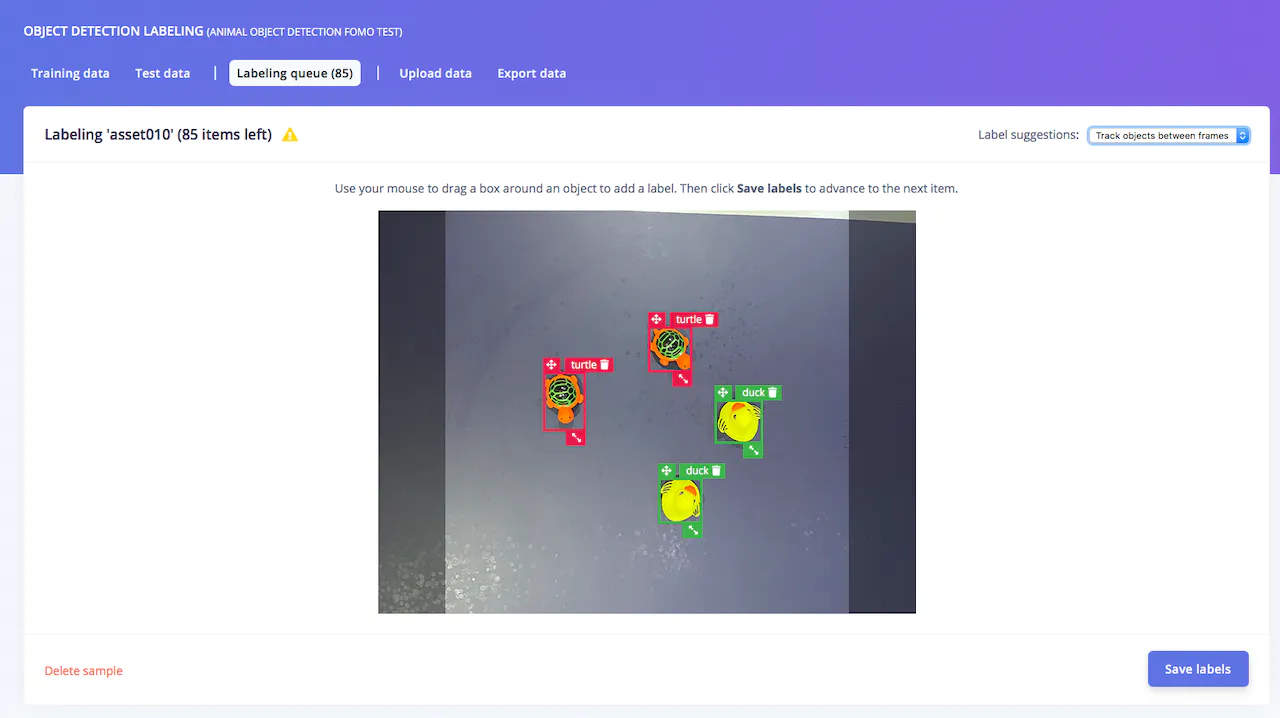

Before the machine learning pipeline could be put to work, it needed to be trained to recognize different types of animals. Suryo got busy collecting a training dataset of animal images. To test the concept, he used images of toy turtles and ducks, the idea being that if this works, the same basic principles should carry over to real-world animal tracking scenarios with little added effort.

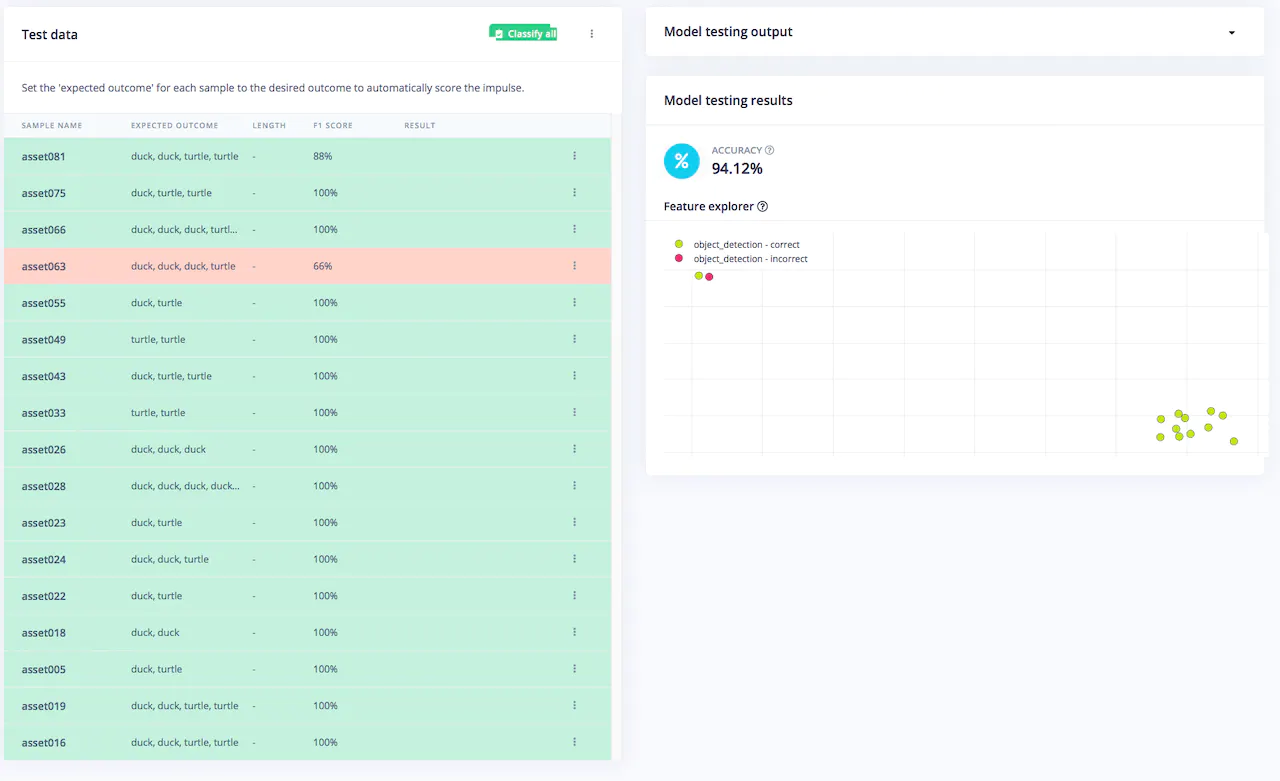

After collecting these images, Suryo uploaded them to Edge Impulse Studio. The labeling queue tool made it quick to draw bounding boxes around the animals by using machine learning (go figure!) to suggest where the boxes should be. This labeled data was then used to train the FOMO algorithm, and the resulting model was validated on a set of images that it had not previously seen. It was found to be better than 94% accurate in detecting the animals present in the images. Not bad for an afternoon’s work.

To get that drone up in the sky where it belongs, the model needed to be deployed to the physical Raspberry Pi hardware. With the Edge Impulse for Linux CLI, this took a single command in the terminal of the Raspberry Pi. The command launched a live classification tool that can be accessed in a web browser to validate that the device is working as expected. Suryo ran some additional tests and found the animal tracker to be performing even better than expected.

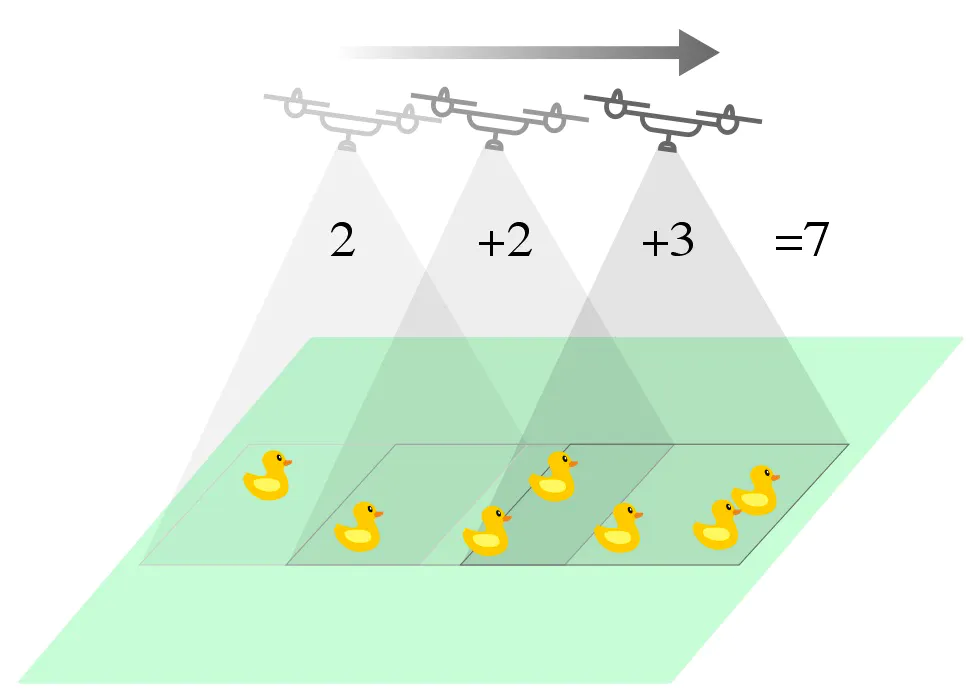

As a final step, Suryo incorporated the Edge Impulse model into a script he developed to give the drone the ability to count animals without double-counting the same animal as it travels along its flight path. Considering how well this project performed on a Raspberry Pi 3, Suryo believes that the Arduino Nicla Vision or ESP32-CAM would also be up to the task. Make sure you do not miss the excellent write-up, which has lots of tips and tricks to get you started on your own machine learning-powered devices.
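The article does not describe how the script avoids double-counting, so the following is only one plausible approach, not Suryo's implementation. It matches each detection's centroid to the nearest same-label centroid from the previous frame, and counts a detection as new only when no match lies within a distance limit. The `CentroidCounter` class, the `(label, x, y)` tuple format, and the 20-pixel limit are hypothetical.

```python
import math


class CentroidCounter:
    """Count each object once by matching centroids across consecutive frames."""

    def __init__(self, max_distance=20.0):
        self.max_distance = max_distance  # assumed pixel limit for "same animal"
        self.previous = []                # (label, x, y) tuples from the last frame
        self.totals = {}                  # running count per label

    def update(self, detections):
        """Process one frame of (label, x, y) centroids and return the totals."""
        unmatched = list(self.previous)
        for label, x, y in detections:
            # Same-label centroids from the last frame that are close enough.
            candidates = [
                c for c in unmatched
                if c[0] == label
                and math.hypot(c[1] - x, c[2] - y) <= self.max_distance
            ]
            if candidates:
                # Already counted: consume the nearest match so that it
                # cannot be paired with a second detection in this frame.
                nearest = min(
                    candidates, key=lambda c: math.hypot(c[1] - x, c[2] - y)
                )
                unmatched.remove(nearest)
            else:
                # No nearby match in the last frame: treat as a new animal.
                self.totals[label] = self.totals.get(label, 0) + 1
        self.previous = list(detections)
        return self.totals


# Hypothetical centroids from three consecutive frames.
frames = [
    [("duck", 10, 10), ("turtle", 50, 50)],
    [("duck", 14, 12), ("turtle", 52, 49)],  # same two animals, slightly shifted
    [("duck", 18, 15), ("duck", 90, 20)],    # a second duck enters the view
]

counter = CentroidCounter(max_distance=20)
for frame in frames:
    counter.update(frame)

print(counter.totals)  # {'duck': 2, 'turtle': 1}
```

This sketch only remembers the previous frame, so an animal that is missed for a frame and then detected again would be counted twice. A more robust tracker would keep unmatched tracks alive for several frames before dropping them.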
