The global population is increasing, and as it does, urban centers will continue to swell. More than half of all people presently live in an urban area, and if the United Nations' estimates are correct, 68 percent of people will live in cities by 2050. These densely packed population centers will create many problems that we will need to solve, and many large cities are already grappling with problems stemming from their large populations.

Anyone who has spent time in a major city will likely point straight to traffic as a major concern. Traffic snags are not only a source of frustration for residents; poorly managed traffic can also have negative economic consequences and cause a whole host of environmental problems, such as poor air quality.

Fixing these problems can be very complex and may require changes to traffic patterns, traffic signal management, and even infrastructure. But before any of these things can happen, city planners need raw data to drive an informed decision-making process. One of the most basic, and most critical, data points is a measure of how many vehicles travel through different parts of the city, and when. Unfortunately, this type of data is spotty or nonexistent in many cases, leaving decision makers with little hope of adequately addressing the problem.

Engineer Naveen Kumar realized that recent advances in edge machine learning have created an opportunity to make collecting this type of data both simple and inexpensive. So, with the help of Edge Impulse, Kumar developed a proof-of-concept system that could be deployed at toll plazas, traffic signals, and other structures throughout a city to monitor how much vehicle traffic passes by. It does this by running a computer vision algorithm that automatically counts every car it sees while keeping a running total.
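
The "running total" is the piece that turns detections into the how-many-and-when data planners are after. As a rough illustration, here is a minimal Python sketch of such a tally, assuming each counted vehicle arrives as a timestamp and that hourly buckets are wanted. The `TrafficTally` class and the sample timestamps are hypothetical and not taken from Kumar's code.

```python
from collections import Counter
from datetime import datetime


class TrafficTally:
    """Hypothetical running total of counted vehicles, bucketed by hour."""

    def __init__(self) -> None:
        self.total = 0
        self.per_hour = Counter()  # hour start (datetime) -> vehicles seen

    def record(self, when: datetime) -> None:
        # Truncate to the start of the hour, so every vehicle seen between
        # 08:00 and 08:59 lands in the same bucket.
        bucket = when.replace(minute=0, second=0, microsecond=0)
        self.per_hour[bucket] += 1
        self.total += 1

    def busiest_hour(self):
        # most_common(1) returns [(bucket, count)], or [] if nothing was recorded.
        top = self.per_hour.most_common(1)
        return top[0] if top else None


tally = TrafficTally()
for ts in ["2024-05-01 08:05", "2024-05-01 08:40", "2024-05-01 09:10"]:
    tally.record(datetime.strptime(ts, "%Y-%m-%d %H:%M"))
print(tally.total)           # 3
print(tally.busiest_hour())  # (datetime.datetime(2024, 5, 1, 8, 0), 2)
```

Hourly buckets are just one choice; the same structure works for 15-minute windows by also truncating `minute` to a multiple of 15.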

A complex, centralized data processing infrastructure would make such a system far more complicated and could also prevent it from operating in real time, so Kumar designed a hardware platform that could run the machine learning algorithm entirely locally. This platform consisted of a Raspberry Pi 5 single-board computer paired with a Raspberry Pi Camera Module 3 Wide. The camera's ultra-wide 120-degree angle of view enables the device to capture and analyze multiple lanes of traffic simultaneously.

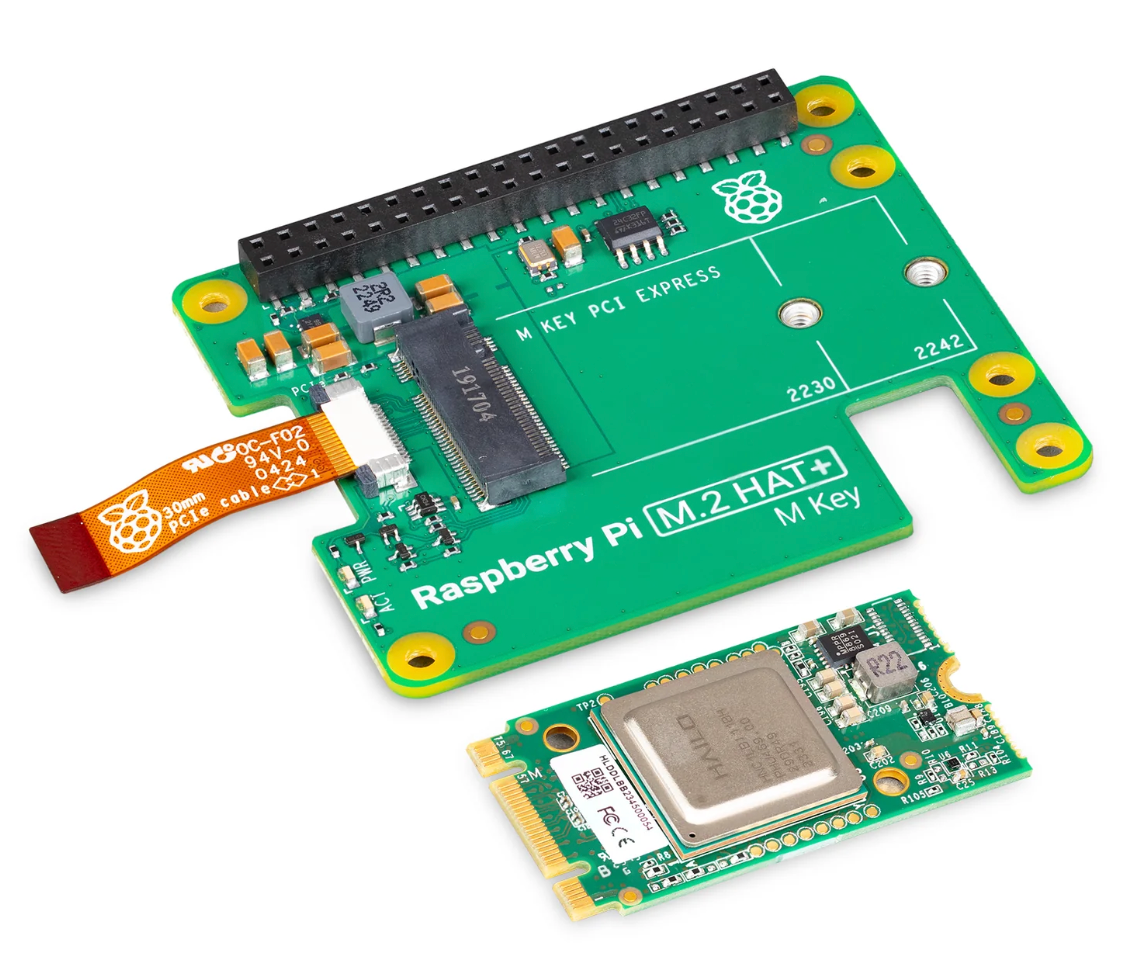

While the Raspberry Pi 5 is a very capable computer, vehicles can zip by pretty fast on the highway, so Kumar chose not to have it run the machine learning algorithm itself. Instead, a Hailo AI Acceleration Module was connected to a Raspberry Pi M.2 HAT+ to handle that job. The accelerator is built around a Hailo-8L AI processor, which delivers 13 tera-operations per second (TOPS). This gives the device enough power to easily track multiple cars in real time, and as a bonus, it consumes very little energy while doing so.

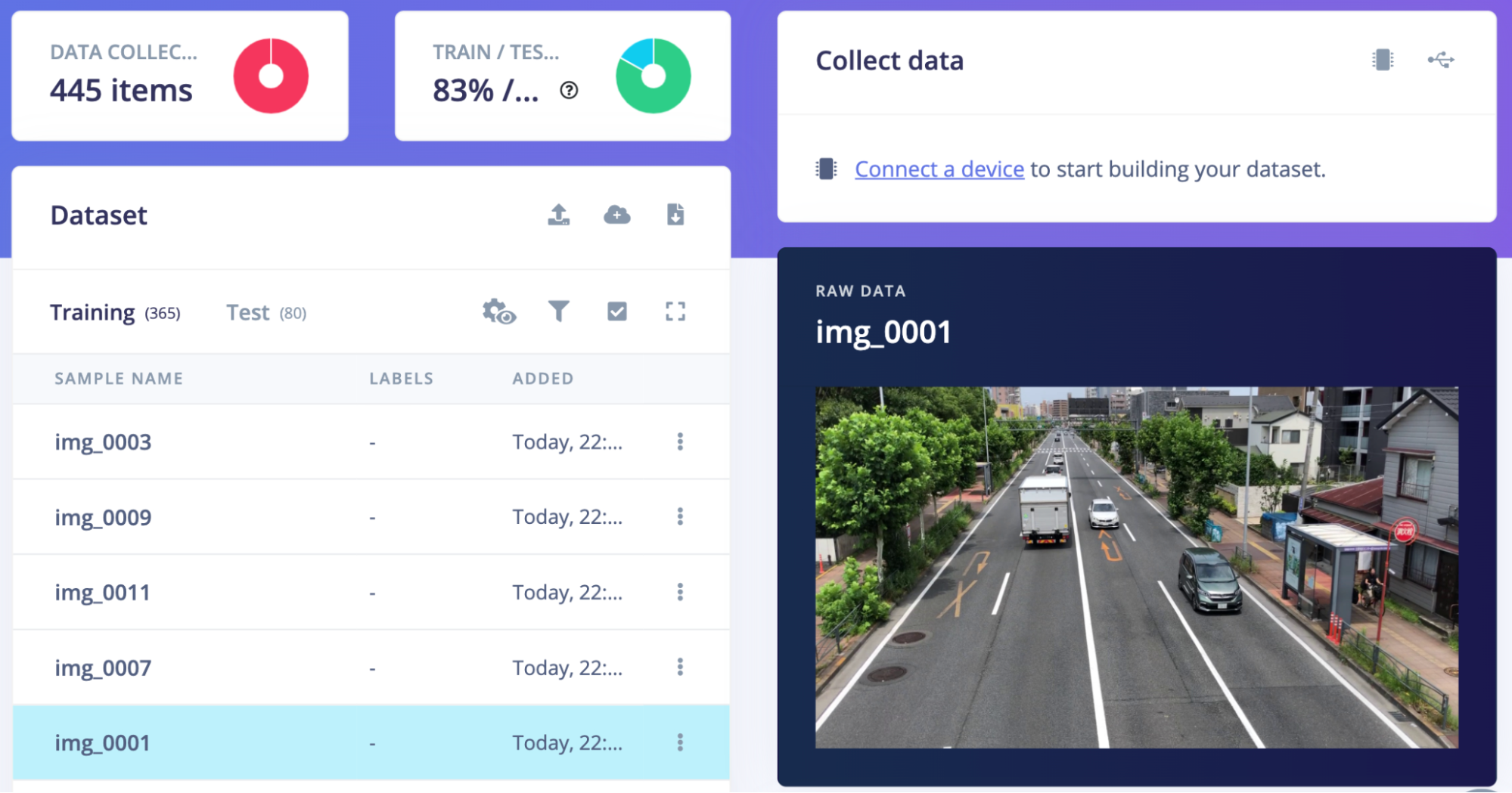

After deciding that an object detection algorithm would be the best tool for the job at hand, Kumar started building a dataset to train it. A number of videos were captured from atop a pedestrian bridge using a smartphone camera. Individual frames were then extracted from the videos before they were uploaded to an Edge Impulse project using the Edge Impulse CLI. In total, about 440 images were collected and uploaded.
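
Frame extraction like this is easy to script. The sketch below is one way to do it with OpenCV (`cv2.VideoCapture` and `cv2.imwrite`), keeping one frame every `every_seconds` seconds so that near-duplicate consecutive frames do not flood the dataset. The function names, the one-second default, the 30 fps fallback, and the file naming scheme are all assumptions, not details of Kumar's workflow. OpenCV is imported inside the function so the pure sampling helper works without it.

```python
import os


def sampling_step(fps: float, every_seconds: float) -> int:
    """Number of frames between saved frames (always at least 1)."""
    return max(1, round(fps * every_seconds))


def extract_frames(video_path: str, out_dir: str, every_seconds: float = 1.0) -> int:
    """Save one JPEG every `every_seconds` seconds of video; returns the count saved."""
    import cv2  # OpenCV is only needed for decoding and writing images

    os.makedirs(out_dir, exist_ok=True)
    cap = cv2.VideoCapture(video_path)
    fps = cap.get(cv2.CAP_PROP_FPS) or 30.0  # assumed fallback if FPS metadata is missing
    step = sampling_step(fps, every_seconds)
    stem = os.path.splitext(os.path.basename(video_path))[0]

    saved, index = 0, 0
    while True:
        ok, frame = cap.read()
        if not ok:  # end of video (or a decode error)
            break
        if index % step == 0:
            cv2.imwrite(os.path.join(out_dir, f"{stem}_{index:06d}.jpg"), frame)
            saved += 1
        index += 1
    cap.release()
    return saved
```

The resulting folder of JPEGs can then be uploaded to a project with the Edge Impulse CLI's `edge-impulse-uploader` command.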

With the raw data assembled, the next step was to use the Labeling Queue tool to draw bounding boxes around each vehicle visible in the images. These labels are what teach the object detection model to recognize the objects of interest. Labeling can be a lot of work, especially for large datasets, but fortunately the Labeling Queue tool offers AI-assisted options that help automate the process and make short work of the task.
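
In data terms, each drawn box is just a class name plus a pixel rectangle. The sketch below builds that structure and serializes it in the shape of Edge Impulse's `bounding_boxes.labels` file, which is used when uploading images that are already labeled. The field names reflect our understanding of that format and should be checked against the Edge Impulse documentation; the `BoundingBox` class and the file names are illustrative.

```python
import json
from dataclasses import asdict, dataclass


@dataclass
class BoundingBox:
    label: str   # class name, e.g. "car"
    x: int       # left edge, in pixels
    y: int       # top edge, in pixels
    width: int   # in pixels
    height: int  # in pixels


def to_labels_file(boxes_by_image: dict[str, list[BoundingBox]]) -> str:
    """Serialize per-image boxes into a bounding_boxes.labels-style JSON string."""
    payload = {
        "version": 1,
        "type": "bounding-box-labels",
        "boundingBoxes": {
            name: [asdict(box) for box in boxes]
            for name, boxes in boxes_by_image.items()
        },
    }
    return json.dumps(payload, indent=2)


print(to_labels_file({"frame_000030.jpg": [BoundingBox("car", 119, 64, 206, 291)]}))
```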

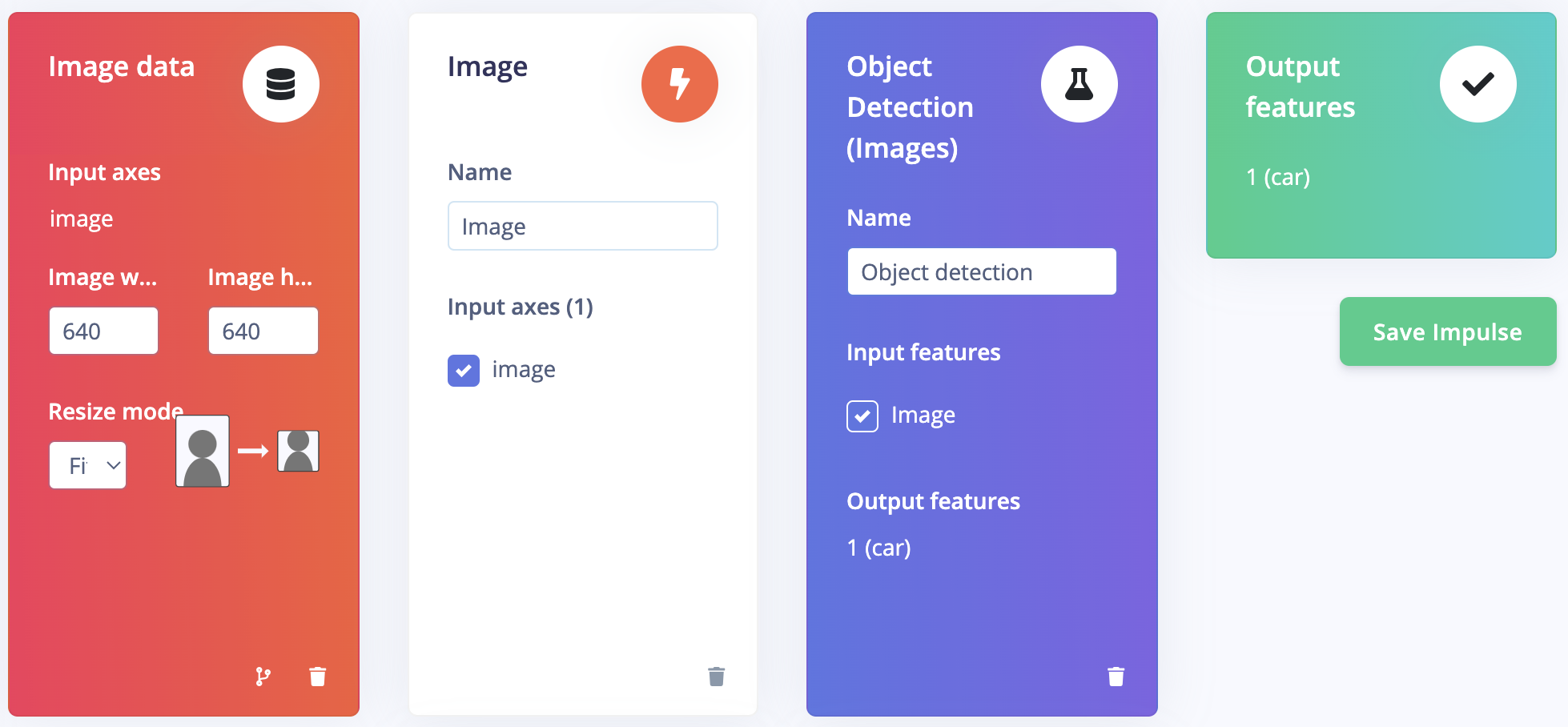

Kumar then designed the impulse, which defines the steps the processing pipeline takes in handling the data, from the moment the camera captures raw data until the model predicts the locations of cars in the image. For this project, the impulse first resizes images to a lower resolution, which reduces the computational cost of the downstream steps. Next, the most relevant features are extracted and passed into a YOLOv5 object detection model with 7.2 million parameters.
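
Resizing has a side effect worth remembering: the model reports boxes in the coordinates of its resized input, so anything downstream that reasons about positions in the camera frame has to scale them back. The minimal sketch below assumes a plain stretch resize (no letterboxing) and uses a 640x640 model input and a 1920x1080 frame purely as example numbers; neither size comes from the project.

```python
from typing import NamedTuple


class Box(NamedTuple):
    x: float  # left edge
    y: float  # top edge
    w: float
    h: float


def scale_box(box: Box, model_size: tuple[int, int], frame_size: tuple[int, int]) -> Box:
    """Map a box from model-input pixels back to original frame pixels.

    Sizes are (width, height). Assumes the frame was stretched, not
    letterboxed, to model_size, so each axis has its own scale factor.
    """
    sx = frame_size[0] / model_size[0]
    sy = frame_size[1] / model_size[1]
    return Box(box.x * sx, box.y * sy, box.w * sx, box.h * sy)


def pixel_work_ratio(model_size: tuple[int, int], frame_size: tuple[int, int]) -> float:
    """How many times fewer pixels the model processes after resizing."""
    return (frame_size[0] * frame_size[1]) / (model_size[0] * model_size[1])


print(scale_box(Box(320, 320, 64, 32), (640, 640), (1920, 1080)))
print(pixel_work_ratio((640, 640), (1920, 1080)))  # 5.0625
```

With those example sizes, the model sees about five times fewer pixels than the full frame, which is the computational saving the resize step is there to buy.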

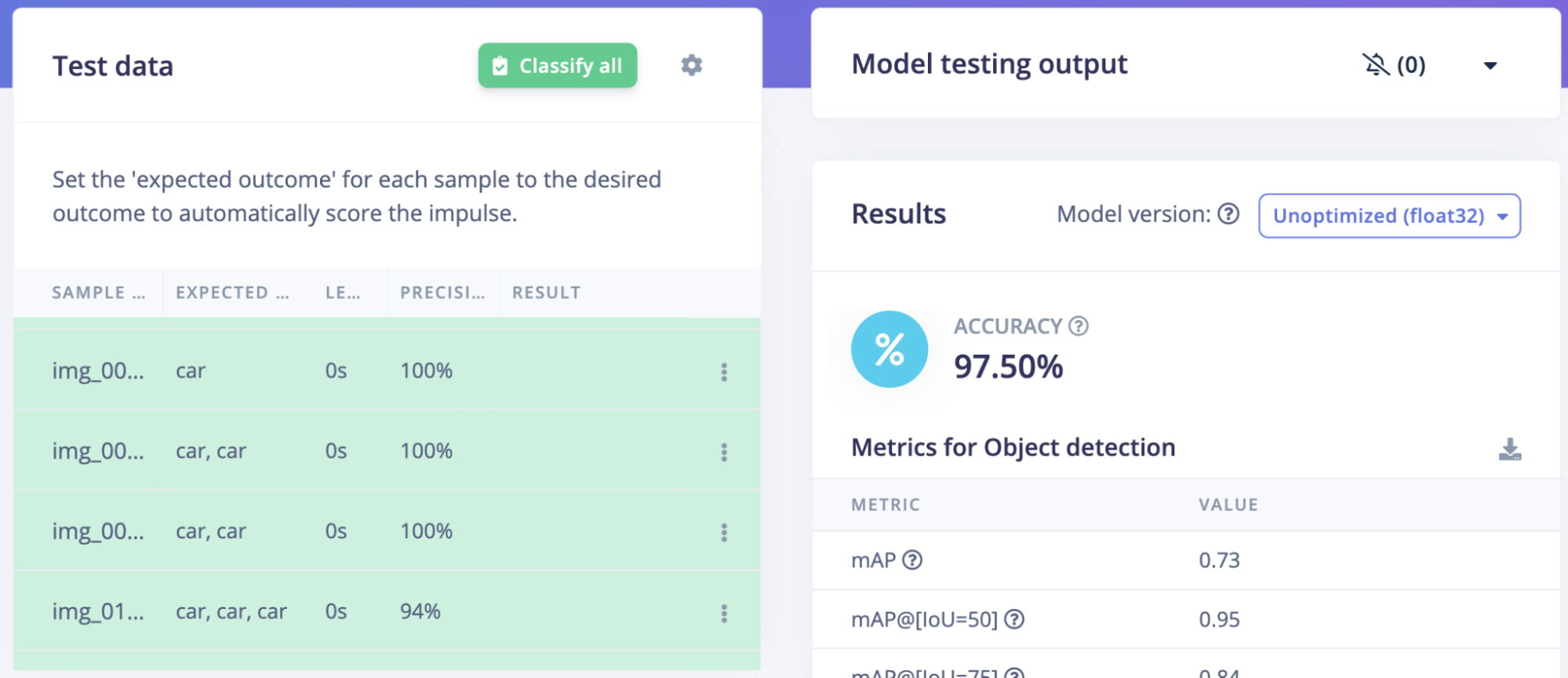

The previously collected dataset was then used to train the model with the click of a button. After a short time, training finished and metrics were displayed to help assess how well the model was performing. A precision score of 97.2 percent was observed, which is excellent, especially considering how little training data was used. It is always a good idea to validate that result with the Model Testing tool as well. Because this tool uses data that was held out of the training process, it can help confirm that the model was not simply overfit to the training dataset. In this case, an accuracy score of 97.5 percent was achieved, which closely mirrors the precision reported during training.
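
Precision and accuracy for object detection both rest on deciding when a predicted box "matches" a labeled one. A common rule, sketched below, treats a prediction as a true positive when its intersection-over-union (IoU) with a not-yet-matched ground-truth box is at least 0.5. This is a simplified illustration: it matches greedily in input order rather than by confidence score, and Edge Impulse's own metrics may be computed differently.

```python
def iou(a, b) -> float:
    """Intersection-over-union of two (x, y, w, h) boxes."""
    ax2, ay2 = a[0] + a[2], a[1] + a[3]
    bx2, by2 = b[0] + b[2], b[1] + b[3]
    inter_w = max(0.0, min(ax2, bx2) - max(a[0], b[0]))
    inter_h = max(0.0, min(ay2, by2) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = a[2] * a[3] + b[2] * b[3] - inter
    return inter / union if union > 0 else 0.0


def precision_recall(predicted, ground_truth, threshold: float = 0.5):
    """Greedily match predictions to ground-truth boxes at an IoU threshold."""
    unmatched = list(ground_truth)
    true_pos = 0
    for p in predicted:
        best = max(unmatched, key=lambda g: iou(p, g), default=None)
        if best is not None and iou(p, best) >= threshold:
            unmatched.remove(best)  # each labeled box can be matched only once
            true_pos += 1
    false_pos = len(predicted) - true_pos
    false_neg = len(unmatched)
    precision = true_pos / (true_pos + false_pos) if predicted else 0.0
    recall = true_pos / (true_pos + false_neg) if ground_truth else 0.0
    return precision, recall


labels = [(0, 0, 10, 10), (20, 20, 10, 10)]
preds = [(1, 1, 10, 10), (50, 50, 5, 5)]
print(precision_recall(preds, labels))  # (0.5, 0.5)
```

Precision penalizes boxes drawn where there is no car; recall penalizes cars the model missed. For a counting application both matter, since either kind of error shifts the final tally.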

With little room left for improvement, Kumar was ready to deploy the impulse to the physical hardware for real-world use. As a first step, the model was downloaded in the common ONNX format. This was then processed with Hailo’s Dataflow Compiler, which converts the model to the Hailo Executable Format (HEF) required by the accelerator module. In the course of this conversion, the model is heavily optimized for the AI processor, which yields very impressive performance: in this case, the accelerator was able to process 63 frames per second.
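
To put 63 frames per second in perspective, a quick back-of-the-envelope calculation helps. The sketch below assumes a vehicle traveling at 100 km/h (an illustrative figure, not one from the project) and uses the accelerator's reported throughput; end-to-end throughput, including camera capture and Python post-processing, may be lower.

```python
def frame_budget_ms(fps: float) -> float:
    """Milliseconds available to process each frame at a given throughput."""
    return 1000.0 / fps


def meters_per_frame(speed_kmh: float, fps: float) -> float:
    """Distance a vehicle travels between two consecutive processed frames."""
    speed_ms = speed_kmh * 1000.0 / 3600.0  # km/h -> m/s
    return speed_ms / fps


print(f"{frame_budget_ms(63):.1f} ms per frame")                    # 15.9 ms per frame
print(f"{meters_per_frame(100, 63):.2f} m per frame at 100 km/h")  # 0.44 m per frame at 100 km/h
```

Since a typical car is several meters long, moving only about 0.44 m between frames means it shows up in many consecutive frames, which is what makes simple frame-to-frame tracking practical.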

To wrap things up, Kumar developed a Python application that uses the object detection results to count the number of vehicles passing the device over time. The accuracy of this approach demonstrates that it could be used in practical, real-world applications. And given the low cost and simplicity of the hardware, devices like this could be placed throughout a city to collect data for city planners.
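
How the application turns per-frame detections into a count is not detailed here, but a common approach is to track each vehicle's centroid from frame to frame and count it once when it crosses a virtual line. The sketch below is a hypothetical version of that idea: `LineCrossingCounter`, the horizontal line at `line_y`, and the 80-pixel `max_jump` matching radius are all assumptions, and the greedy nearest-centroid association is much simpler than production trackers such as SORT or ByteTrack.

```python
import math


class LineCrossingCounter:
    """Hypothetical vehicle counter: track centroids, count line crossings.

    A vehicle is counted once when its centroid crosses the horizontal line
    y = line_y (in either direction). Detections in consecutive frames are
    linked greedily to the nearest previous centroid within max_jump pixels.
    """

    def __init__(self, line_y: float, max_jump: float = 80.0) -> None:
        self.line_y = line_y
        self.max_jump = max_jump
        self.count = 0
        self._tracks = {}      # track id -> centroid (cx, cy) from the last frame
        self._counted = set()  # ids of live tracks that were already counted
        self._next_id = 0

    def _crossed(self, prev_y: float, cur_y: float) -> bool:
        # Half-open comparisons so a centroid landing exactly on the line
        # is counted once, not zero or two times.
        return prev_y < self.line_y <= cur_y or cur_y < self.line_y <= prev_y

    def update(self, boxes) -> int:
        """Feed one frame of (x, y, w, h) boxes; returns the running count."""
        unclaimed = dict(self._tracks)
        new_tracks = {}
        for x, y, w, h in boxes:
            c = (x + w / 2, y + h / 2)
            best_id, best_dist = None, self.max_jump
            for tid, prev in unclaimed.items():
                d = math.dist(c, prev)
                if d <= best_dist:
                    best_id, best_dist = tid, d
            if best_id is None:
                best_id = self._next_id  # nothing close enough: a new vehicle
                self._next_id += 1
            else:
                prev = unclaimed.pop(best_id)
                if best_id not in self._counted and self._crossed(prev[1], c[1]):
                    self._counted.add(best_id)
                    self.count += 1
            new_tracks[best_id] = c
        # Tracks with no detection this frame are dropped.
        self._tracks = new_tracks
        self._counted.intersection_update(new_tracks)
        return self.count
```

Each frame's detections, as (x, y, width, height) boxes in frame pixels, would be passed to `update()`, which returns the running total. Counting each track at most once keeps a car that jitters back and forth across the line from being counted twice.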

Kumar was kind enough to provide a detailed write-up of the project, as well as a link to the public Edge Impulse project. With these resources available, reproducing the project should take only a few hours.