When you think about the most prevalent crime problems that communities deal with, vandalism may not be the first that comes to mind. However, with up to 14% of US residents reporting that they experience some form of vandalism — be it to their home, business, car, or some other property — each year, it is a big problem. And it is a costly problem as well — the direct costs of vandalism are in the billions of dollars annually. Unfortunately, it is notoriously difficult to identify the offender in many cases of vandalism, and that leads to underreporting by victims, which only serves to further exacerbate the issue.

Traditional deterrents like security cameras can help to identify perpetrators in some cases of vandalism, but they may not capture a good view of the offender, either because the offender is wearing a disguise or because the camera is poorly positioned to record important details. In any case, if the camera is not being monitored, the crime will not be detected until after the fact. An engineer by the name of Nekhil R. reasoned that if vandalism could be detected as it is happening, the owner of the property could be notified immediately. They could then contact law enforcement without delay and greatly increase the chances of the offender being caught. The hope is that if such devices were common where vandalism frequently occurs, they would serve as an effective deterrent to would-be vandals.

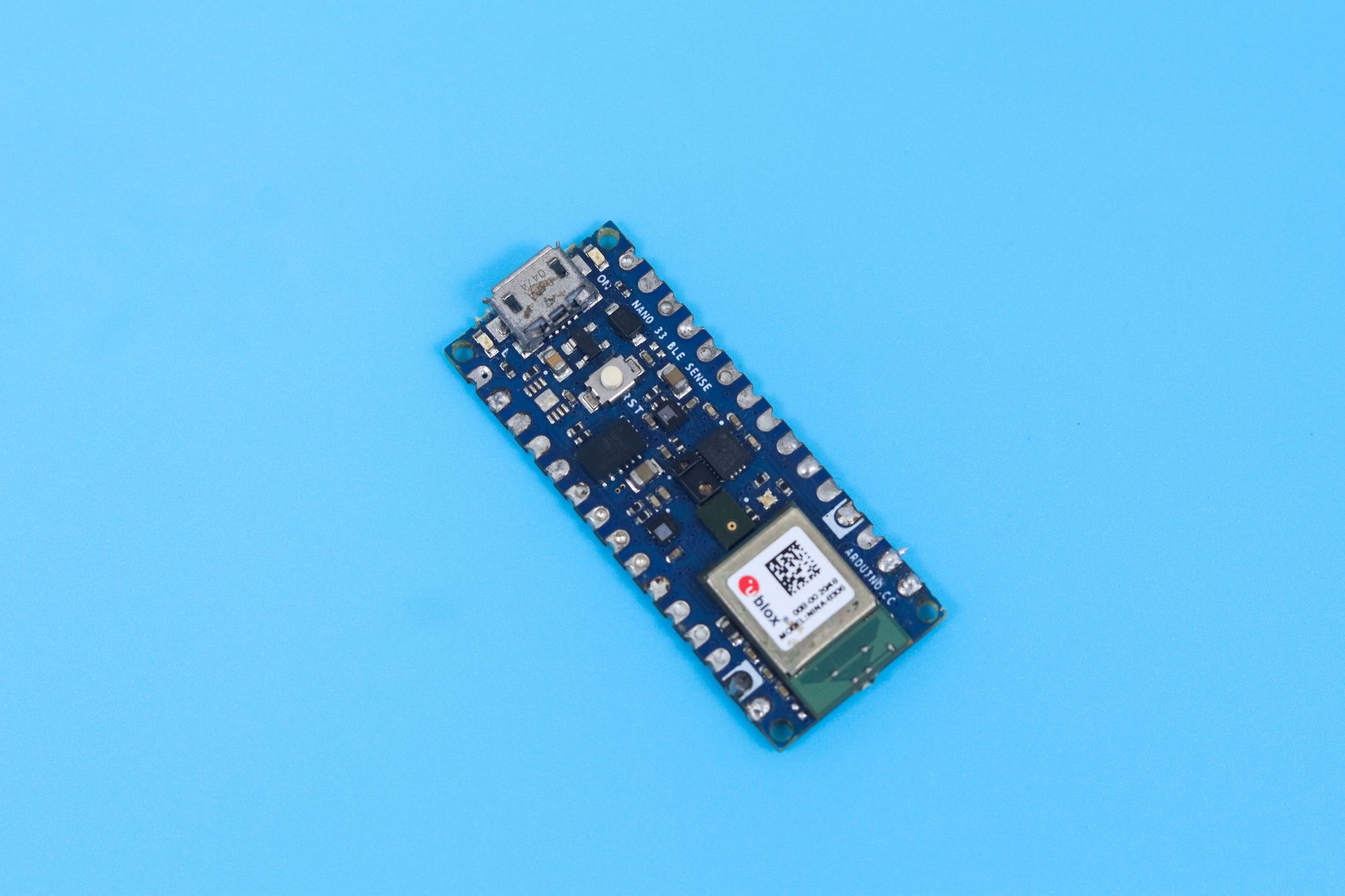

To prove the concept, Nekhil first chose to focus on the sound of breaking glass, a frequent feature of acts of vandalism. When a machine learning algorithm detects the unique audio signature of breaking glass, an email alert is sent to the property owner so that they can take action. An Arduino Nano 33 BLE Sense development board was selected for this device because its powerful nRF52840 microcontroller and 256 KB of SRAM are well suited to running machine learning models optimized by Edge Impulse Studio for tinyML hardware. The Arduino board also comes standard with a microphone that can be used to capture high-quality audio samples. An ESP-01 board, with a Wi-Fi-capable ESP8266 microcontroller, completed the build by adding the ability to communicate over the internet.

Since an intelligent algorithm was to be used for detecting the sound of breaking glass, sample audio clips were needed to train that algorithm. Fortunately for Nekhil, and the windows in his home, publicly available datasets exist, so he did not need to create his own breaking glass sound samples. The model would also need to recognize normal background noises in order not to confuse them with breaking glass. The Microsoft Scalable Noisy Speech Dataset, in conjunction with some additional audio recordings made by Nekhil, was used to represent this class. Approximately ten minutes of total audio recordings were collected.

Data was uploaded to Edge Impulse Studio by linking the Arduino Nano 33 BLE Sense directly to a project using the Edge Impulse CLI and a custom firmware image for the board. The samples were automatically split into training and test datasets. With all of the data collection squared away, Nekhil was all set to build the impulse that defines the machine learning analysis pipeline. First, preprocessing steps were added to slice audio samples into windows of a consistent length for analysis, then an Audio (MFE) block was included to extract the most important features from the data and reduce the computational complexity of downstream steps in the pipeline. Finally, a neural network classifier was added to the impulse to predict if the observed features represent the sound of breaking glass, or just normal background noise.
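
The windowing and feature extraction steps described above can be sketched in a few lines of NumPy. This is only an illustration of the general mel-filterbank energy (MFE) technique, not Edge Impulse's implementation: the function names, the 16 kHz sample rate, the one-second window, and the frame and filter counts are all assumptions chosen for the example.

```python
import numpy as np

def slice_windows(signal, window_size, stride):
    """Split a 1-D signal into fixed-length windows; a trailing partial window is dropped."""
    starts = range(0, len(signal) - window_size + 1, stride)
    if len(starts) == 0:
        return np.empty((0, window_size))
    return np.stack([signal[s:s + window_size] for s in starts])

def hz_to_mel(hz):
    return 2595.0 * np.log10(1.0 + hz / 700.0)

def mel_to_hz(mel):
    return 700.0 * (10.0 ** (mel / 2595.0) - 1.0)

def mel_filterbank(n_filters, n_fft, sample_rate):
    """Triangular filters spaced evenly on the mel scale, shape (n_filters, n_fft // 2 + 1)."""
    mel_points = np.linspace(hz_to_mel(0.0), hz_to_mel(sample_rate / 2), n_filters + 2)
    bins = np.floor((n_fft + 1) * mel_to_hz(mel_points) / sample_rate).astype(int)
    bank = np.zeros((n_filters, n_fft // 2 + 1))
    for i in range(n_filters):
        left, center, right = bins[i], bins[i + 1], bins[i + 2]
        for k in range(left, center):      # rising edge of the triangle
            bank[i, k] = (k - left) / (center - left)
        for k in range(center, right):     # falling edge of the triangle
            bank[i, k] = (right - k) / (right - center)
    return bank

def mfe(window, sample_rate, frame_len=512, frame_stride=256, n_filters=32):
    """Log mel-filterbank energies for one audio window, shape (n_frames, n_filters)."""
    frames = slice_windows(window, frame_len, frame_stride) * np.hanning(frame_len)
    power = np.abs(np.fft.rfft(frames, n=frame_len, axis=1)) ** 2 / frame_len
    energies = power @ mel_filterbank(n_filters, frame_len, sample_rate).T
    return 10.0 * np.log10(energies + 1e-10)  # small offset avoids log(0)

# Example: two seconds of synthetic audio, one-second windows with 50% overlap.
SAMPLE_RATE = 16000  # assumed sample rate
audio = np.random.default_rng(0).normal(size=2 * SAMPLE_RATE)
windows = slice_windows(audio, SAMPLE_RATE, SAMPLE_RATE // 2)
features = mfe(windows[0], SAMPLE_RATE)
```

Each window is reduced from 16,000 raw samples to a much smaller matrix of band energies, which is what makes the downstream classifier cheap enough for a microcontroller.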

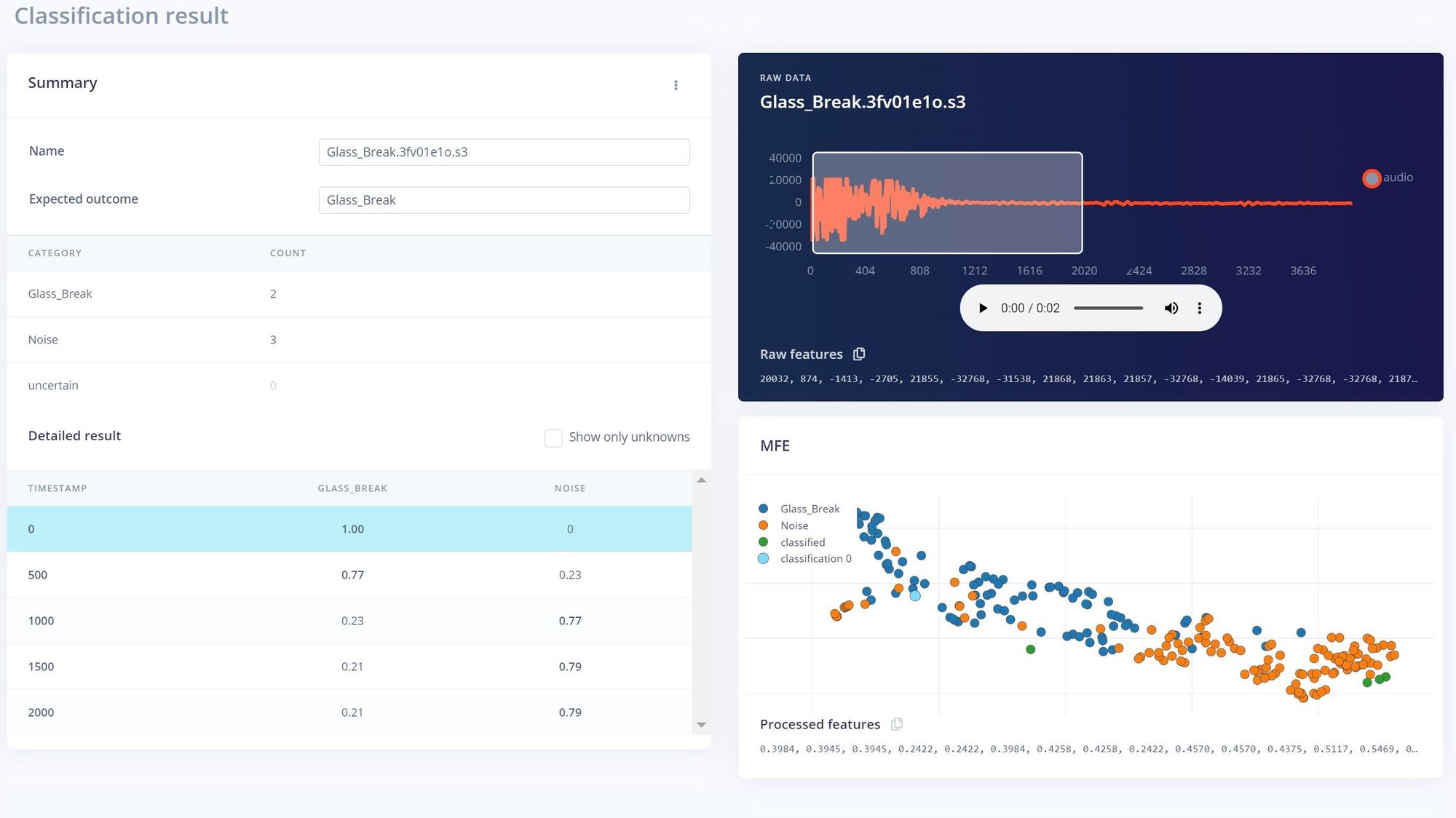

Some tweaks were made to the hyperparameters to optimize the model training process for the task at hand. Since the collected dataset was not very large, Nekhil also turned on the data augmentation feature. This tool makes small, random changes to the training data between training cycles to prevent the model from locking in on superficial features in the data that can lead to overfitting. The training process was then started, and after a short time, a confusion matrix was presented to assist in understanding how well the model was performing. An average classification accuracy rate of nearly 98% was observed, so things were looking great.
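
To make the idea of data augmentation concrete, here is a generic sketch of how a feature matrix might be randomly perturbed before each training cycle: a little noise is added, and a random band of time frames and of frequency bands is masked out. The function name, the parameters, and the specific perturbations are assumptions for illustration; the article does not say which augmentations the project used.

```python
import numpy as np

def augment(features, rng, noise_std=0.05, max_time_mask=8, max_band_mask=4):
    """Return a randomly perturbed copy of a (frames, bands) feature matrix."""
    n_frames, n_bands = features.shape
    # Additive Gaussian noise; this also creates a copy, so the input is untouched.
    out = features + rng.normal(0.0, noise_std, size=features.shape)
    fill = features.mean()

    # Mask a random run of consecutive time frames (possibly of length zero).
    t = int(rng.integers(0, min(max_time_mask, n_frames) + 1))
    t0 = int(rng.integers(0, n_frames - t + 1))
    out[t0:t0 + t, :] = fill

    # Mask a random run of consecutive frequency bands (possibly of length zero).
    b = int(rng.integers(0, min(max_band_mask, n_bands) + 1))
    b0 = int(rng.integers(0, n_bands - b + 1))
    out[:, b0:b0 + b] = fill
    return out

# Example: the same sample looks slightly different every time it is drawn.
rng = np.random.default_rng(42)
sample = np.ones((61, 32))
first_pass = augment(sample, rng)
second_pass = augment(sample, rng)
```

Because the model never sees exactly the same input twice, it is pushed toward features that survive these perturbations rather than quirks of individual recordings.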

It is always a good idea to run the model testing tool as well. Since it uses a dataset that was left out of the training process, it provides a more stringent test of the model’s performance. This showed that the classification accuracy exceeded 91%, which is more than good enough to prove the concept. Since the accuracy did drop off a bit more than might be expected as compared with the training data, Nekhil used Edge Impulse Studio’s data exploration tools to investigate. He found that some of the misclassified noise samples sounded very much like breaking glass, and other samples contained both the sound of breaking glass and background noise. With that knowledge, it would be possible to create a more appropriate dataset that does not mislead the model during training, and would result in a more accurate algorithm.
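
For readers who have not worked with a confusion matrix before, the sketch below shows how one is built from held-out predictions and how overall accuracy and per-class recall fall out of it. The class names and the ten toy labels are made up for illustration; they are not the project's data or results.

```python
import numpy as np

def confusion_matrix(true_labels, predicted_labels, classes):
    """Rows are true classes, columns are predicted classes."""
    index = {name: i for i, name in enumerate(classes)}
    matrix = np.zeros((len(classes), len(classes)), dtype=int)
    for t, p in zip(true_labels, predicted_labels):
        matrix[index[t], index[p]] += 1
    return matrix

def accuracy(matrix):
    """Fraction of all samples on the diagonal (correctly classified)."""
    return np.trace(matrix) / matrix.sum()

def per_class_recall(matrix):
    """For each true class, the fraction of its samples that were classified correctly."""
    return np.diag(matrix) / matrix.sum(axis=1)

# Toy held-out set: hypothetical labels, not the project's results.
classes = ["glass_break", "noise"]
y_true = ["glass_break"] * 4 + ["noise"] * 6
y_pred = ["glass_break"] * 3 + ["noise"] + ["noise"] * 5 + ["glass_break"]

cm = confusion_matrix(y_true, y_pred, classes)
print(cm)            # [[3 1]
                     #  [1 5]]
print(accuracy(cm))  # 0.8
```

The off-diagonal cells are the interesting ones: they point directly at the kinds of samples worth listening to when investigating a drop in test accuracy.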

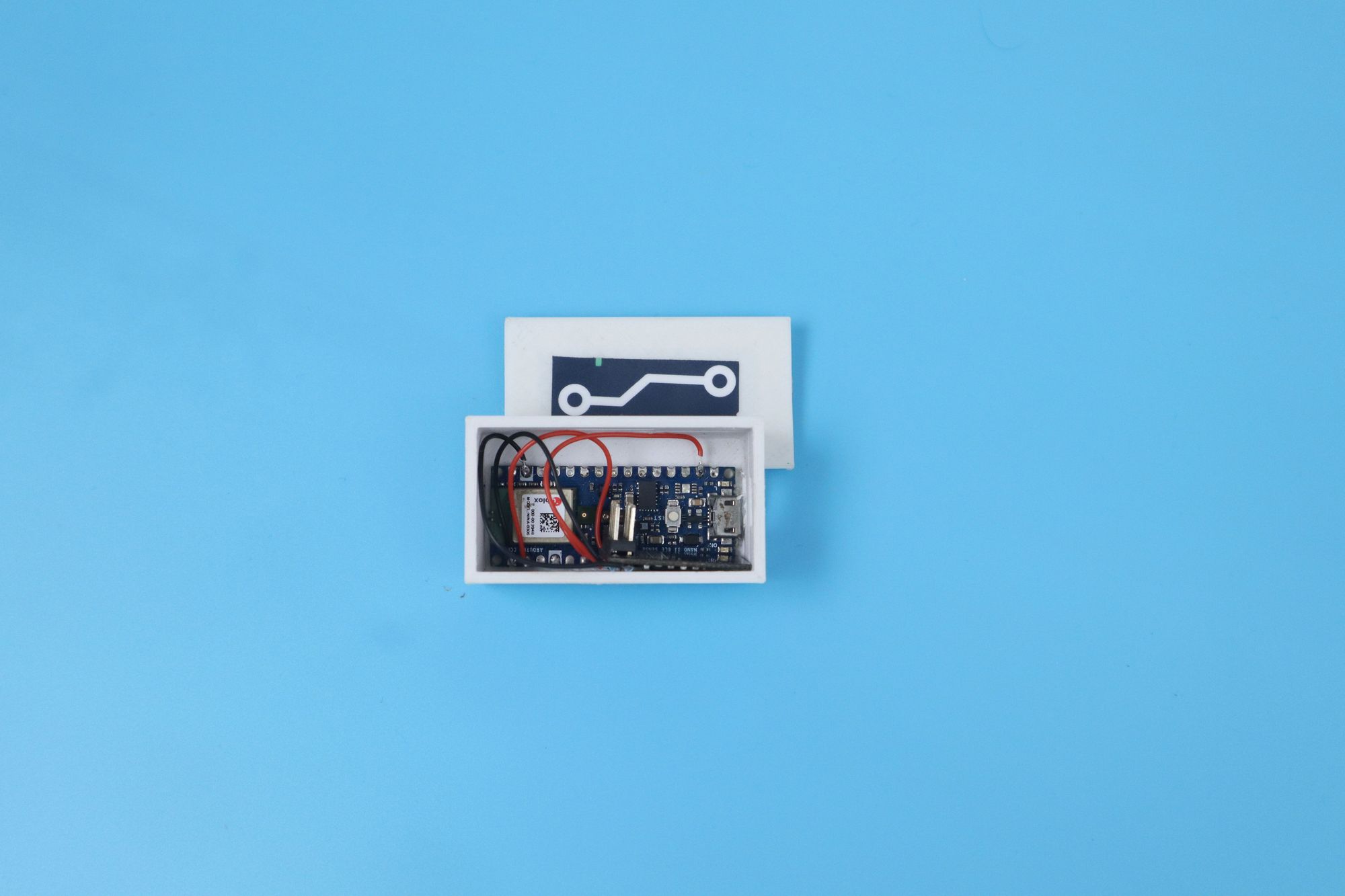

That would be a good area to explore for a future iteration of the device, but the performance was quite good already, so the impulse was deployed to the Arduino. Using the deployment tool, the Arduino library was selected, and a download was prepared that contained the full classification pipeline. This deployment option offers a great deal of flexibility, as it makes it possible to incorporate your own logic into the firmware that can trigger any arbitrary actions based on the results of the model’s inferences. In this case, Nekhil used the IFTTT service to send email alerts when the sound of breaking glass was detected. All of the hardware components were fitted inside of a custom, 3D-printed enclosure to complete the device.
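
The alerting logic can be sketched as follows. The real device runs as Arduino firmware with the ESP-01 handling Wi-Fi, so this Python version only illustrates the decision flow: compare the classifier's score against a threshold, rate-limit alerts, and call an IFTTT Webhooks trigger URL. The event name, key, threshold, and cooldown are hypothetical placeholders, and the `GlassBreakAlerter` class is an invention for this example.

```python
import json
import time
import urllib.request

IFTTT_URL = "https://maker.ifttt.com/trigger/{event}/with/key/{key}"

def build_alert_request(event, key, score):
    """Build (but do not send) a POST request for an IFTTT Webhooks trigger."""
    url = IFTTT_URL.format(event=event, key=key)
    body = json.dumps({"value1": f"{score:.2f}"}).encode("utf-8")
    return urllib.request.Request(
        url, data=body, headers={"Content-Type": "application/json"}, method="POST"
    )

class GlassBreakAlerter:
    """Calls `send(score)` when the score crosses a threshold, at most once per cooldown."""

    def __init__(self, send, threshold=0.8, cooldown_s=30.0, clock=time.monotonic):
        self.send = send
        self.threshold = threshold    # assumed confidence cutoff
        self.cooldown_s = cooldown_s  # assumed minimum gap between alerts
        self.clock = clock
        self.last_sent = None

    def update(self, score):
        """Feed one inference result; returns True if an alert was sent."""
        if score < self.threshold:
            return False
        now = self.clock()
        if self.last_sent is not None and now - self.last_sent < self.cooldown_s:
            return False
        self.send(score)
        self.last_sent = now
        return True

def send_via_ifttt(score, event="glass_break", key="YOUR_IFTTT_KEY"):
    """Hypothetical sender; requires a real Webhooks key and network access."""
    request = build_alert_request(event, key, score)
    with urllib.request.urlopen(request, timeout=10) as response:
        return response.status

# Usage (not executed here): alerter = GlassBreakAlerter(send_via_ifttt)
# then call alerter.update(score) after every inference.
```

Keeping the sender as an injected function makes the threshold and cooldown logic easy to test without touching the network.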

You will not want to miss out on reading the excellent project documentation written up by Nekhil. He has also made his Edge Impulse Studio project publicly available — cloning it would be a great way to get started on any audio classification project.
