When building machine learning applications, it’s essential to create tight feedback loops between development and testing. Developers need to see the impact of their changes on real-world performance so they can evolve their datasets and algorithms accordingly. For example, if you’re building a keyword spotting application, it’s critical to understand how well keywords are detected in a continuous stream of audio.

This can be a challenge, and it’s especially hard on edge devices. Testing in the field requires integrating an algorithm into firmware, deploying it to a device, and performing physical testing in real time. A developer creating a keyword spotting application might have to deploy their algorithm to a device, install it in a physical location, and follow a complicated manual procedure to test performance and quantify the results.

This process costs a lot of time and money, and the results can be inexact. In addition, it can be difficult to separate algorithmic problems from hardware-related issues. These challenges mean that realistic testing is often confined to the final stages of the development workflow, so algorithmic performance issues may not be detected until it’s too late to fix them.

Immediate feedback on real-world performance

To bring realistic testing within reach for all projects, Edge Impulse is excited to announce Performance Calibration. This new feature allows you to simulate your model’s performance in the field, providing immediate insight into real-world performance at the click of a button.

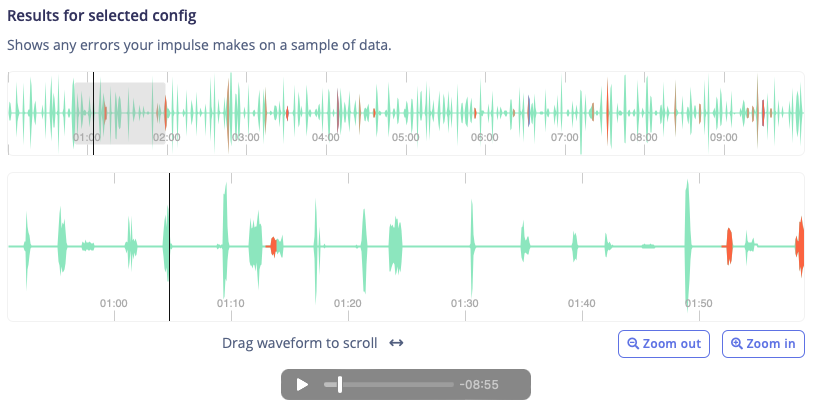

Performance can be measured using either recordings of real-world data or realistic synthetic recordings generated from samples in your training dataset. This allows you to easily test your model’s performance under various scenarios, such as varying levels of background noise, or with different environmental sounds that might occur in your deployment environment.
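To make the idea of "varying levels of background noise" concrete, here is a minimal sketch of one common way to build a synthetic test clip: overlay a keyword sample on background noise at a chosen signal-to-noise ratio. This is an illustration only, not a description of how Performance Calibration generates its recordings; the function names `rms` and `mix_at_snr` are hypothetical.

```python
import math


def rms(samples):
    """Root-mean-square level of a list of audio samples."""
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def mix_at_snr(signal, noise, snr_db):
    """Overlay `signal` on `noise`, scaling the noise to hit the target SNR.

    Hypothetical helper: assumes both inputs are lists of floats at the
    same sample rate and that `noise` is at least as long as `signal`.
    """
    if len(noise) < len(signal):
        raise ValueError("noise must be at least as long as signal")
    noise = noise[:len(signal)]
    noise_level = rms(noise)
    if noise_level == 0:
        return list(signal)  # silent background, nothing to mix in
    # SNR in dB is 20 * log10(signal_rms / noise_rms), so solve for noise_rms.
    target_noise_level = rms(signal) / (10 ** (snr_db / 20))
    gain = target_noise_level / noise_level
    return [s + gain * n for s, n in zip(signal, noise)]
```

Lowering `snr_db` makes the background louder relative to the keyword, which is a simple way to probe how quickly a model degrades in noisier environments.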

Turning streams into events

In addition to providing performance insights, Performance Calibration makes it easy to configure a post-processing algorithm that will transform a model’s raw output into specific events that can be used in an application. This essential task is often left as an afterthought, but it has a profound impact on the performance of a machine learning system.
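As a rough illustration of what such a post-processing step can look like, the sketch below smooths a stream of per-frame scores with a moving average, applies a threshold, and suppresses repeat detections for a short period. It is a generic example under assumed parameters (`threshold`, `window`, `suppression`), not the algorithm that Performance Calibration configures.

```python
def detect_events(scores, threshold=0.8, window=3, suppression=5):
    """Turn per-frame scores for one class into a list of event frame indices.

    Illustrative only. `window` is the number of frames averaged together,
    and `suppression` is the minimum number of frames between two events.
    """
    events = []
    last_event = None
    for i in range(len(scores)):
        # Average the most recent `window` scores to damp single-frame spikes.
        recent = scores[max(0, i - window + 1):i + 1]
        smoothed = sum(recent) / len(recent)
        # Ignore detections that follow too closely after the previous event.
        in_cooldown = last_event is not None and i - last_event < suppression
        if smoothed >= threshold and not in_cooldown:
            events.append(i)
            last_event = i
    return events
```

Each parameter shifts the balance between missed and spurious detections: a higher threshold or longer window rejects more noise but can miss short or quiet keywords, while a longer suppression period prevents one utterance from firing several events.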

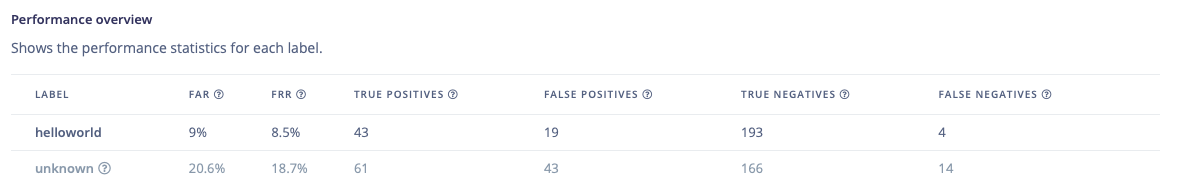

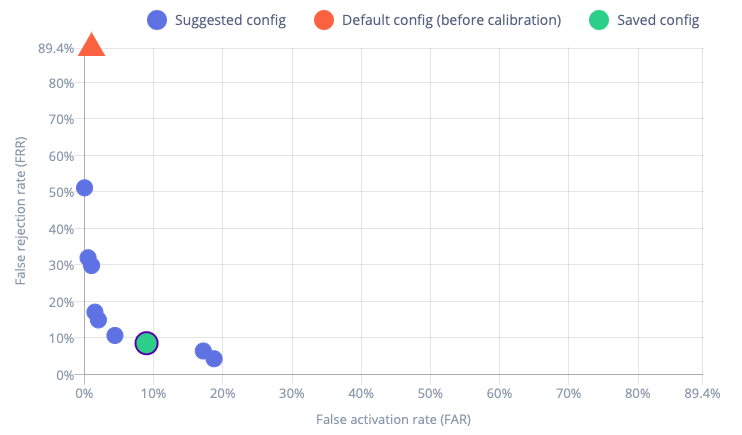

After running Performance Calibration, developers are presented with a set of potential post-processing configurations that represent the ideal trade-offs between false positives and false negatives.
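One way to think about "ideal trade-offs" is as the set of configurations that no other configuration beats on both error types at once. The sketch below filters a list of candidates down to that set. It assumes each candidate is a hypothetical `(name, false_positive_rate, false_negative_rate)` tuple, and it is an interpretation for illustration rather than a description of how the feature selects its suggestions.

```python
def best_tradeoffs(configs):
    """Keep only configurations that are not dominated by another one.

    `configs` is a list of (name, fp_rate, fn_rate) tuples. A configuration
    is dominated if another is at least as good on both rates and strictly
    better on one of them.
    """
    front = []
    for name, fp, fn in configs:
        dominated = any(
            other_fp <= fp and other_fn <= fn and (other_fp < fp or other_fn < fn)
            for _, other_fp, other_fn in configs
        )
        if not dominated:
            front.append((name, fp, fn))
    # Order from fewest false positives to most, for easy comparison.
    return sorted(front, key=lambda c: c[1])
```

Every configuration left in the result is a legitimate choice: moving along the list trades fewer false positives for more false negatives, or the reverse.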

Since every application is unique, a developer can select the specific trade-off that works best for their product. The output of each trade-off can be explored and compared, using industry-standard metrics like False Activation Rate (FAR) and False Rejection Rate (FRR).
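For readers new to these metrics, here is a small sketch of how they can be computed from a list of detection times and a list of labeled event times. It uses one common convention, which is an assumption here: FAR is reported as false activations per hour of audio, and FRR as the fraction of true events that were missed. The function name and the `tolerance_s` matching window are hypothetical.

```python
def far_frr(detections, ground_truth, duration_s, tolerance_s=0.5):
    """Return (false activations per hour, false rejection rate).

    `detections` and `ground_truth` are event times in seconds. A detection
    counts as correct if it falls within `tolerance_s` of a true event that
    has not already been matched.
    """
    matched = set()
    false_activations = 0
    for t in detections:
        hit = next(
            (i for i, g in enumerate(ground_truth)
             if i not in matched and abs(t - g) <= tolerance_s),
            None,
        )
        if hit is None:
            false_activations += 1
        else:
            matched.add(hit)
    missed = len(ground_truth) - len(matched)
    far_per_hour = false_activations / (duration_s / 3600)
    frr = missed / len(ground_truth) if ground_truth else 0.0
    return far_per_hour, frr
```

For a 30-minute recording with true events at 1.0, 9.0, and 14.0 seconds and detections at 1.1, 5.0, and 9.2 seconds, this gives one false activation (2.0 per hour) and one missed event out of three.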

Building successful projects

Machine learning development can be tough, and there are many pitfalls that can result in poor real-world performance. By providing tools like Performance Calibration, Edge Impulse enables every developer to access the insights that experienced ML engineers use to deliver successful projects — with massive cost savings versus building custom tools.

Performance Calibration is currently available for audio event detection projects. We’ll soon be expanding support to cover every type of sensor data. We firmly believe that proper understanding of performance in the field is essential to success.

Try an example

The best way to understand the impact of Performance Calibration is to give it a try. Visit the documentation to learn how the feature can be used to measure and tune performance for an audio event detection project, and try it with your own model.

We can’t wait to see what you build with Performance Calibration, so try it out today! We’re standing by to answer any questions you might have, and we’d love to see any projects you would like to share. Get in touch via our forum — or tag @EdgeImpulse on our social media channels.