We are happy to announce a new deployment option for machine learning models: the Docker container. It gives users the same deployment approach they already use in traditional cloud environments, making AI models more versatile and easier to deploy.

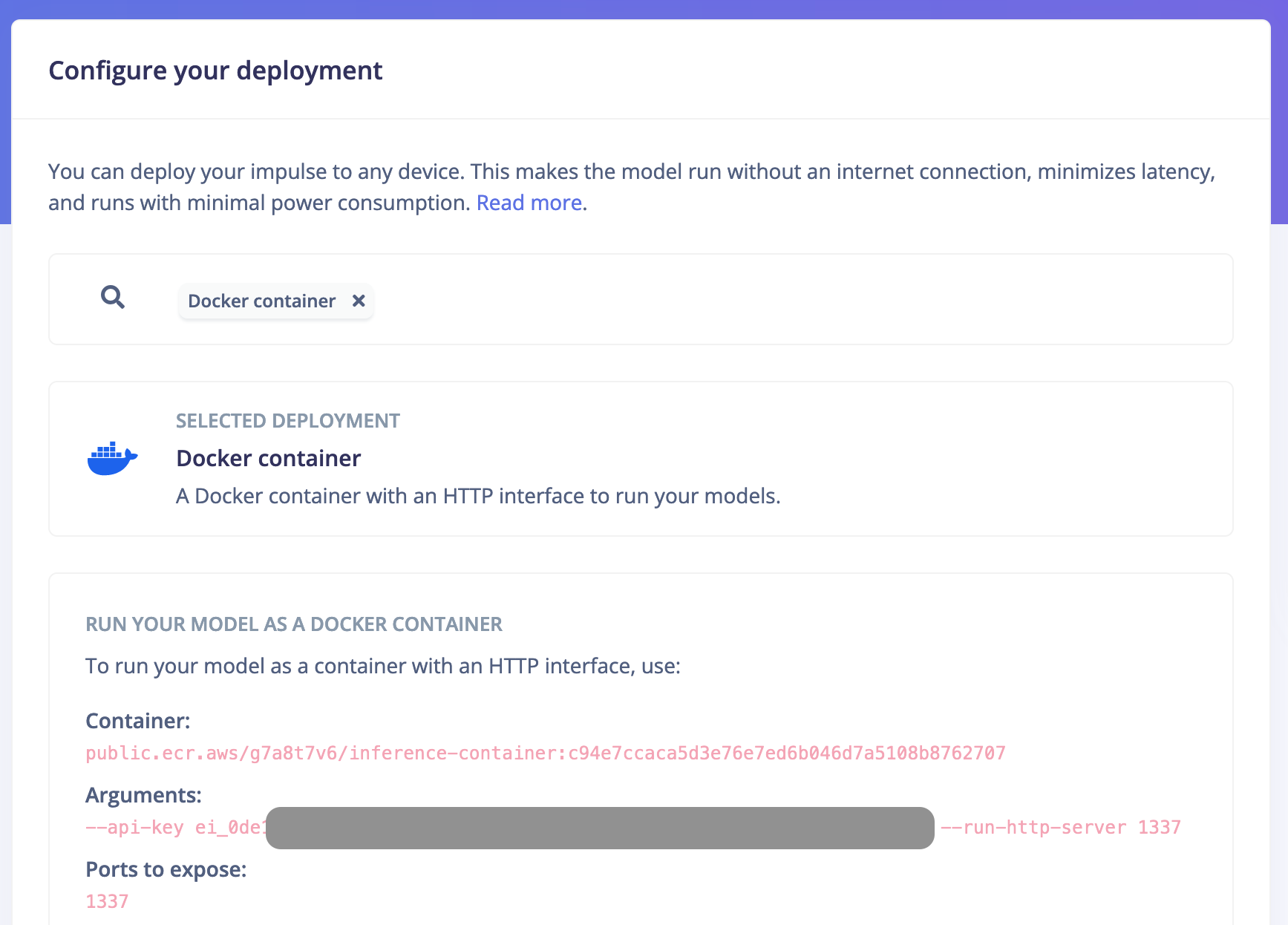

The Docker container deployment packages signal processing blocks, machine learning models, and configuration into a single deployable container. The container acts as an HTTP inference server, which makes it a good fit for any environment that already runs containerized workloads. Because it is built on top of our Linux EIM executable deployment, it supports full hardware acceleration on most Linux targets, including NVIDIA GPUs.
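Because the container exposes an HTTP inference server, any HTTP client can query it. The sketch below uses only the Python standard library to send one window of raw features as JSON. The port (1337), the `/api/features` path, and the `{"features": [...]}` payload shape are assumptions for illustration and are not confirmed by this post; check the deployment documentation for the actual endpoint and schema your container expects.

```python
import json
import urllib.request

# Assumed default: the container's HTTP server is published on localhost:1337
# and accepts features at /api/features. Adjust to match your `docker run` setup.
DEFAULT_URL = "http://localhost:1337/api/features"


def build_request(features, url=DEFAULT_URL):
    """Build a JSON POST request carrying one window of raw sensor values.

    The {"features": [...]} payload shape is an assumption; verify it against
    the deployment documentation before relying on it.
    """
    body = json.dumps({"features": list(features)}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def classify(features, url=DEFAULT_URL, timeout=5.0):
    """Send features to the inference server and return its decoded JSON reply."""
    req = build_request(features, url)
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))
```

With a container running, `classify(window)` returns whatever JSON the server sends back; the exact response fields depend on your model and deployment.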

For more details on how to deploy your project as a Docker container, visit our documentation.

Test in the cloud, deploy on the edge

After fine-tuning and testing your AI models in the cloud, the next step is to deploy them effectively in production environments. A standard way to deploy AI models in the cloud is through Docker containers. A container packages your model and its dependencies into a consistent environment, so it behaves the same way on any host that runs Docker. This consistency minimizes compatibility issues and simplifies the deployment process.

Our new Docker deployment method extends the familiar cloud deployment workflow to edge environments, making it easier for MLOps and infrastructure engineers to integrate and deploy Edge AI solutions. Because the same container runs in both places, businesses can quickly test models in the cloud to validate an approach before committing to an edge deployment.

Building solutions

The Docker deployment option uses hardware acceleration when it is available. Still, understanding and optimizing for the constrained resources of edge devices is an ongoing effort. Edge Impulse provides the tools and expertise needed to optimize your solutions, so that your AI models are not only effective but also resource-conscious.

With Edge Impulse's capabilities for creating and refining datasets, building models, deploying to hardware, and now deploying Docker containers, users and enterprises can build end-to-end solutions for a wide range of use cases.

Our team is here to help with every part of the process. Don't hesitate to let us know what you're working on and how we can assist.