Convolutional neural networks (CNNs) have greatly improved tasks like image and sound classification, object detection, and regression-based analysis. Yet, these models often behave as "black boxes," producing accurate but difficult-to-interpret results. For engineers and developers, understanding why a model made a specific decision is critical to identifying biases, debugging errors, and building trust in the system.

Grad-CAM, or Gradient-weighted Class Activation Mapping, addresses this challenge by providing a visual representation of which regions in an image influenced a model’s prediction. This technique works on CNN-based models and can be applied to both classification and regression tasks.

Use cases

Grad-CAM is widely applicable across industries that rely on deep learning for image-based tasks. In healthcare, it can be used to explain predictions made by medical imaging models. For instance, a Grad-CAM heatmap applied to a pneumonia detection model can confirm whether the model is focusing on the correct regions of an X-ray image. This level of transparency is critical for building trust with medical professionals and ensuring accurate diagnoses. See below for an example where a dataset used to train a pneumonia detector contains biases.

In manufacturing, Grad-CAM can also be applied to visual inspection systems to identify defective areas in products. The heatmap highlights the regions responsible for lower quality scores, allowing engineers to validate the model’s outputs and pinpoint issues.

How Grad-CAM works

Grad-CAM creates a heatmap that highlights the areas of the input image that had the greatest influence on the model’s output. It does so by computing the gradients of the target output (a specific class score or a regression value) with respect to the feature maps of a convolutional layer, usually the last one. Averaging these gradients over each feature map's spatial dimensions gives one importance weight per feature map. The feature maps are then summed using these weights, and a ReLU keeps only the positive contributions, producing the final heatmap.
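For reference, the two steps can be written in the notation of the original paper [2]. Here A^k is the k-th feature map of the chosen convolutional layer, A^k_ij its activation at spatial position (i, j), and Z the number of spatial positions. The target output y^c is the score for class c before the softmax, or the predicted value for a regression model. The first equation pools the gradients into a weight per feature map, and the second combines the weighted feature maps into the heatmap:

```latex
\alpha_k^{c} = \frac{1}{Z} \sum_{i} \sum_{j} \frac{\partial y^{c}}{\partial A^{k}_{ij}},
\qquad
L^{c}_{\text{Grad-CAM}} = \operatorname{ReLU}\!\left( \sum_{k} \alpha_k^{c} A^{k} \right)
```

The resulting map has the spatial resolution of the chosen layer, which is why it is usually upsampled to the input image size before being displayed.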

In simpler terms, Grad-CAM allows us to "see" what the model is focusing on when it makes a prediction. For image classification tasks, this means highlighting the regions associated with the predicted class. For regression tasks, the heatmap shows the areas that contributed most to the output value.
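The sketch below implements the weighting described above with NumPy. In a real model, the gradients come from automatic differentiation (for example TensorFlow's `tf.GradientTape`). To keep the example self-contained, it instead assumes a hypothetical head made of global average pooling followed by a dense layer, whose gradients can be written down analytically. The helper `gap_dense_gradients` and all arrays are illustrative, not part of the Edge Impulse implementation. The only difference between classification and regression is which output is used as the target: the score of the class to explain, or the single predicted value.

```python
import numpy as np


def grad_cam(feature_maps: np.ndarray, gradients: np.ndarray) -> np.ndarray:
    """Grad-CAM heatmap from activations A and gradients dy/dA, both shaped (H, W, K)."""
    # Pool the gradients: one importance weight per feature map (global average).
    weights = gradients.mean(axis=(0, 1))  # shape (K,)
    # Weighted sum of the K feature maps.
    cam = np.tensordot(feature_maps, weights, axes=([2], [0]))  # shape (H, W)
    # ReLU: keep only regions that increase the target output.
    return np.maximum(cam, 0.0)


def gap_dense_gradients(feature_maps: np.ndarray, dense_weights: np.ndarray,
                        target_index: int) -> np.ndarray:
    """Analytic dy/dA for a hypothetical head: y = mean_ij(A) @ dense_weights.

    y[t] = sum_k W[k, t] * mean_ij A[i, j, k], so dy[t]/dA[i, j, k] = W[k, t] / (H * W).
    """
    h, w, k = feature_maps.shape
    grad_per_map = dense_weights[:, target_index] / (h * w)  # shape (K,)
    return np.broadcast_to(grad_per_map, (h, w, k))


rng = np.random.default_rng(0)
A = rng.random((4, 4, 3))               # hypothetical last-conv activations (H=4, W=4, K=3)
W_classifier = rng.normal(size=(3, 2))  # hypothetical 2-class head (pre-softmax scores)
W_regressor = rng.normal(size=(3, 1))   # hypothetical single-output regression head

# Classification: the target is the score of the class to explain (here class 1).
class_heatmap = grad_cam(A, gap_dense_gradients(A, W_classifier, target_index=1))
# Regression: the target is the single predicted value.
value_heatmap = grad_cam(A, gap_dense_gradients(A, W_regressor, target_index=0))
print(class_heatmap.shape, value_heatmap.shape)  # (4, 4) (4, 4)
```

With this particular head, every pooled weight equals W[k, t] / (H * W), so the heatmap is the classic CAM map scaled by a constant. This matches the paper's observation that Grad-CAM generalizes CAM to architectures without such a head.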

If you want to learn more about Grad-CAM, we invite you to read the original paper, Grad-CAM: Visual Explanations from Deep Networks via Gradient-based Localization [2].

Identify biases in your datasets

For engineers working with deep learning models, Grad-CAM is a practical tool for visualizing and debugging predictions. It can help identify patterns in model behavior and uncover potential issues.

When developing an image classification model, Grad-CAM can reveal whether the model is relying on irrelevant features, such as background elements, instead of the actual object.

A well-known example of dataset bias arises when classifying cats vs. dogs. Such datasets often contain more images of cats indoors and dogs outdoors. As a result, the model might behave like an indoor vs. outdoor classifier instead of a cat vs. dog classifier:

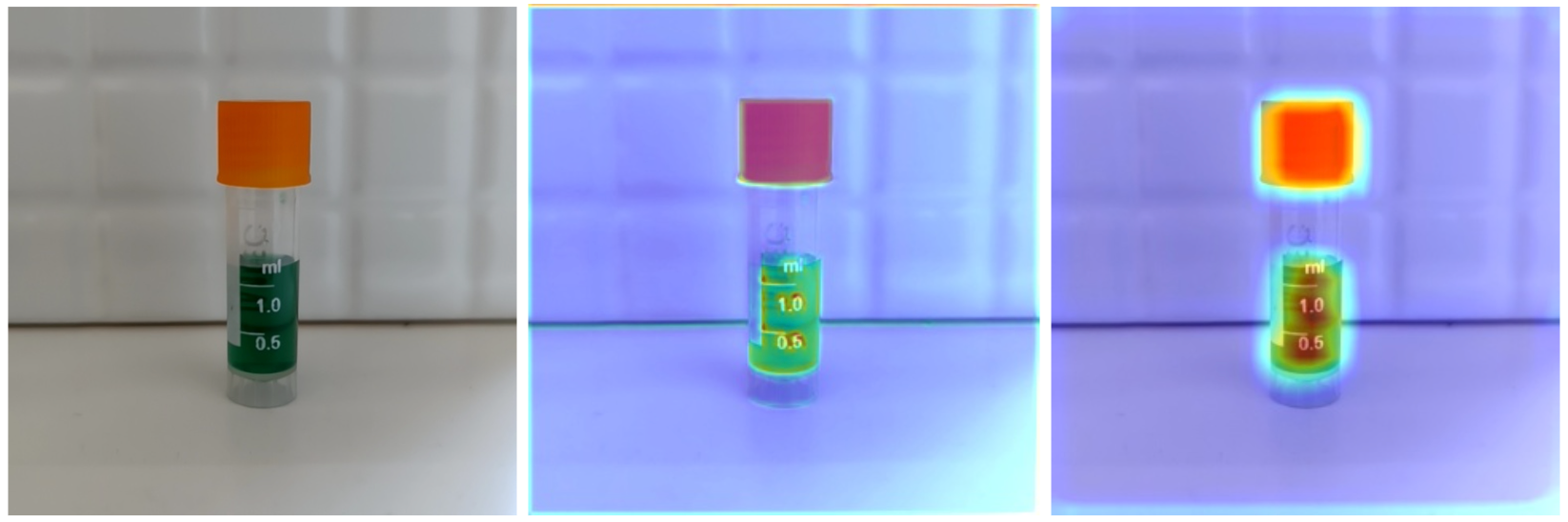
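Inspecting heatmaps one by one works for a handful of images. To screen a whole test set, one option is to measure how much of each heatmap falls inside the object itself. The sketch below assumes you have an object bounding box per image in heatmap coordinates (scale image-pixel boxes down, or upsample the heatmap first). The function names and the 0.5 threshold are hypothetical choices for illustration, not part of the Edge Impulse tooling.

```python
import numpy as np


def heatmap_share_in_box(heatmap: np.ndarray, box: tuple) -> float:
    """Fraction of the heatmap's total mass that falls inside an object box.

    box = (top, left, bottom, right) in heatmap pixel coordinates, with
    bottom/right exclusive, so heatmap[top:bottom, left:right] is the object.
    """
    top, left, bottom, right = box
    hm = np.maximum(heatmap, 0.0)  # Grad-CAM heatmaps are non-negative after ReLU
    total = float(hm.sum())
    if total == 0.0:
        return 0.0  # empty heatmap: nothing was highlighted anywhere
    inside = float(hm[top:bottom, left:right].sum())
    return inside / total


def flag_background_focus(samples, threshold=0.5):
    """Return the names of samples whose heatmap mass mostly lies outside the box.

    samples: iterable of (name, heatmap, box) tuples. The 0.5 threshold is an
    arbitrary, hypothetical default; tune it for your dataset.
    """
    return [
        name
        for name, heatmap, box in samples
        if heatmap_share_in_box(heatmap, box) < threshold
    ]


# Toy example: 3 of 4 units of attention sit in the top-left "background"
# corner, outside the object box in the bottom-right quadrant.
heatmap = np.zeros((4, 4))
heatmap[0, 0] = 3.0
heatmap[2, 2] = 1.0
print(heatmap_share_in_box(heatmap, (2, 2, 4, 4)))  # 0.25
print(flag_background_focus([("cat_001", heatmap, (2, 2, 4, 4))]))  # ['cat_001']
```

Flagged images are candidates for manual review. A low score does not prove a bias on its own, but many flagged images from the same class or setting (for example, all outdoor dog photos) is a strong hint.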

Medical images are often annotated or contain a scale. Below is another example, in which a model focuses on the annotations instead of the actual region of interest.

Using Grad-CAM in Edge Impulse

A custom deployment block (available on the Enterprise Plan) automatically exports your test dataset, downloads your trained model, and generates Grad-CAM visualizations. The script supports both image classification and visual regression models, making it a versatile tool for model explainability.

For developers who want to test Grad-CAM without setting up a local environment, a Google Colab notebook provides a ready-to-use option. The notebook includes everything you need to visualize Grad-CAM heatmaps in your browser; you only need an Edge Impulse API key and a trained image classification or visual regression model.

For more information, have a look at the tutorial in the Edge Impulse documentation.

TL;DR: Grad-CAM helps you understand neural network decisions

Grad-CAM is a powerful technique for understanding and interpreting the predictions of convolutional neural networks. By generating heatmaps that highlight important regions in input images, Grad-CAM provides valuable insights into model behavior. This level of explainability is essential for debugging models, building trust, and improving system performance.

Whether you are working on image classification, visual regression, or anomaly detection, Grad-CAM helps bridge the gap between predictions and insights. By tuning parameters such as alpha, the gradient pooling method, and the heatmap normalization, developers can adapt Grad-CAM to their specific use cases and gain clearer visibility into their models.
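As a rough illustration of what normalization and alpha can look like, here is a NumPy sketch of the display step. It assumes alpha is the blend weight of the heatmap overlay and shows two hypothetical normalization strategies: divide by the maximum, or divide by a high percentile and clip so that a few very hot pixels do not wash out the rest of the map. The function and parameter names are illustrative and not necessarily those used by the Edge Impulse block. Rendering importance in the red channel is a simplification; a colormap such as matplotlib's "jet" is a common alternative.

```python
import numpy as np


def normalize_heatmap(heatmap: np.ndarray, method: str = "max",
                      percentile: float = 99.0) -> np.ndarray:
    """Scale a Grad-CAM heatmap to [0, 1].

    "max": divide by the maximum value.
    "percentile": divide by the given percentile and clip values above 1.
    """
    hm = np.maximum(heatmap, 0.0).astype(np.float64)
    if method == "max":
        upper = hm.max()
    elif method == "percentile":
        upper = np.percentile(hm, percentile)
    else:
        raise ValueError(f"unknown normalization method: {method}")
    if upper <= 0.0:
        return np.zeros_like(hm)  # nothing highlighted
    return np.clip(hm / upper, 0.0, 1.0)


def upsample_nearest(heatmap: np.ndarray, out_h: int, out_w: int) -> np.ndarray:
    """Nearest-neighbour resize of an (h, w) heatmap to (out_h, out_w)."""
    h, w = heatmap.shape
    rows = np.arange(out_h) * h // out_h
    cols = np.arange(out_w) * w // out_w
    return heatmap[rows[:, None], cols[None, :]]


def overlay(image: np.ndarray, heatmap: np.ndarray, alpha: float = 0.4,
            method: str = "max") -> np.ndarray:
    """Blend an RGB float image in [0, 1], shaped (H, W, 3), with a red heatmap."""
    hm = normalize_heatmap(heatmap, method)
    # The heatmap has the conv layer's resolution; resize it to the image.
    hm = upsample_nearest(hm, image.shape[0], image.shape[1])
    color = np.zeros_like(image, dtype=np.float64)
    color[..., 0] = hm  # importance shown in the red channel
    # alpha = 0 shows only the image, alpha = 1 shows only the heatmap
    return (1.0 - alpha) * image + alpha * color


image = np.ones((4, 4, 3))                   # hypothetical all-white 4x4 image
coarse = np.array([[0.0, 0.0], [0.0, 2.0]])  # hypothetical 2x2 Grad-CAM output
blended = overlay(image, coarse, alpha=0.4)
```

In this toy run, the bottom-right quadrant of `blended` turns red while the rest of the image is dimmed by the factor (1 - alpha). Raising alpha makes the heatmap dominate; switching to percentile normalization makes moderately important regions stand out more.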

Our implementation, available as a custom deployment block or as Google Colab notebooks, makes it easy to apply Grad-CAM to your Edge Impulse projects. Try it!

If you want to share interesting insights you discovered using Grad-CAM, let us know on the Edge Impulse forum.

Looking ahead, we’d like to provide a method to work with audio spectrograms.

Happy discovery!

References:

[1] Abedeen, Iftekharul; Rahman, Md. Ashiqur; Zohra Prottyasha, Fatema; Ahmed, Tasnim; Mohmud Chowdhury, Tareque; Shatabda, Swakkhar (2023). FracAtlas: A Dataset for Fracture Classification, Localization and Segmentation of Musculoskeletal Radiographs. figshare. Dataset. https://doi.org/10.6084/m9.figshare.22363012.v6

[2] Selvaraju, Ramprasaath R.; Cogswell, Michael; Das, Abhishek; Vedantam, Ramakrishna; Parikh, Devi; Batra, Dhruv (2016). Grad-CAM: Visual Explanations from Deep Networks via Gradient-based Localization. arXiv. https://doi.org/10.48550/arXiv.1610.02391