So, how have you been the past couple of years? It is a question that is hard to answer without mentioning the COVID-19 outbreak, as we have all been affected in one way or another. Many mistakes were made as we learned to deal with this disease, but there were also important lessons learned that may help us better handle future outbreaks of airborne infectious diseases. One area that needs improvement is understanding infection risk, so that people can be better informed about the precautions they should consider taking under different circumstances. To this end, recent research has shown that measuring CO2 levels in an indoor space can serve as a good proxy for airborne infection risk.

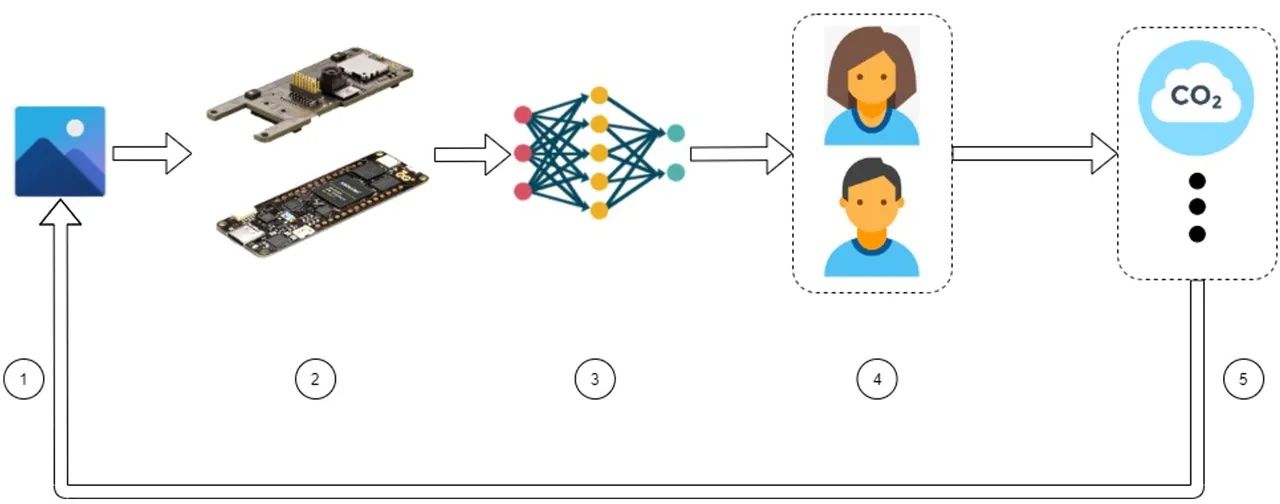

This gave electronics hobbyist Swapnil Verma an idea. He thought that if he could detect the number of people in an indoor space, he could calculate the average amount of CO2 that they exhale, which in turn could be used to estimate infection risk. Verma proudly admits that his solution is overengineered, because the CO2 level of a space can be measured directly with a sensor, but where is the fun in that? We appreciate that Verma’s solution demonstrates some cutting-edge technologies with broad applicability. In particular, the project demonstrates the utility of Edge Impulse’s ground-breaking FOMO™ object detection algorithm.

Before a machine learning algorithm can recognize an object, it first needs to be shown examples of that object. Images of people engaged in everyday indoor activities were collected from the PIROPO database and uploaded to Edge Impulse. So that the algorithm could learn to distinguish people from the image background, Verma then drew a bounding box around each person to inform the training process. Fortunately, Edge Impulse has a labeling tool that uses machine learning to assist in drawing these boxes, which speeds up the task.

Because FOMO was designed for performance on resource-constrained devices like microcontrollers, an Arduino Portenta H7 development board was selected to provide the processing power. With an Arm Cortex-M7 running at 480 MHz and 8 MB of SDRAM, this board has horsepower to spare. To round out the bill of materials, an Arduino Portenta Vision Shield was chosen to add image capture.

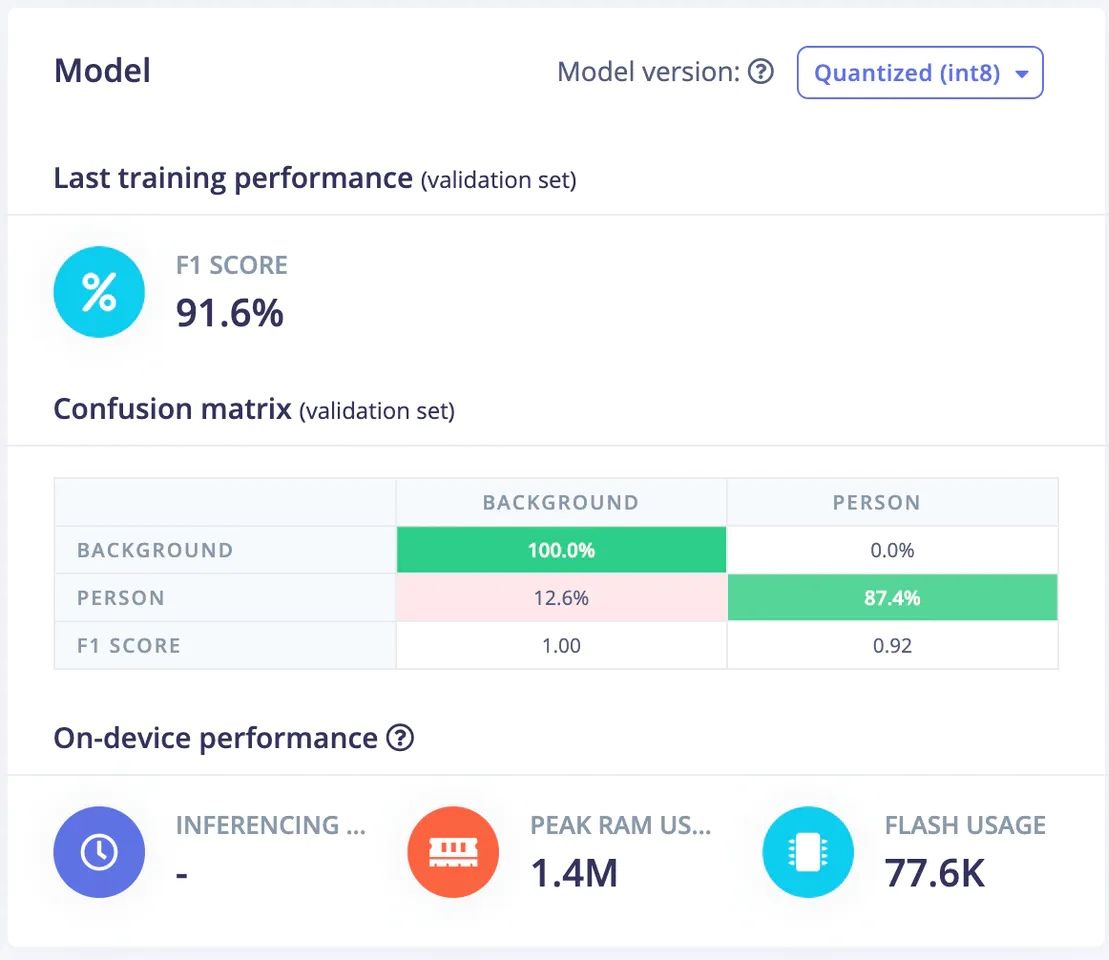

An impulse was created to preprocess images and reduce the computational complexity of the downstream analysis. The processed image data was then fed into a FOMO object detection model, which was trained on the previously uploaded, labeled images from the PIROPO database. Training reached an F1 score of 91.6%, and a subsequent validation of the model showed an object recognition accuracy of 86.42%. The Arduino Portenta H7 and Vision Shield are fully supported by Edge Impulse, so the deployment process can generate firmware that runs natively on the board. Simply flashing the board with this firmware installs the full machine learning pipeline.
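
For readers unfamiliar with the F1 score mentioned above, it is the harmonic mean of precision and recall. The following is a minimal sketch of that calculation, not the code Edge Impulse runs internally. The function name and the detection counts in the example are hypothetical and chosen only for illustration.

```python
def f1_score(true_positives: int, false_positives: int, false_negatives: int) -> float:
    """Return the F1 score (harmonic mean of precision and recall).

    Returns 0.0 when precision or recall is undefined or both are zero.
    """
    predicted = true_positives + false_positives   # everything the model flagged
    actual = true_positives + false_negatives      # everything that was really there
    if predicted == 0 or actual == 0:
        return 0.0
    precision = true_positives / predicted
    recall = true_positives / actual
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


if __name__ == "__main__":
    # Hypothetical counts: 8 people found correctly, 2 false alarms, 2 people missed.
    print(f"F1 = {f1_score(8, 2, 2):.3f}")
```

A high F1 score means the model both finds most of the people in the images and rarely reports a person who is not there, which matters here because every miscount feeds directly into the CO2 estimate.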

Using the OpenMV IDE, Verma connected to the Arduino and observed the object detection results in real time to check their quality in real-world situations. He found that the model generally performed very well, even though it had never seen images of him or of the environment he was testing in. He did notice that he was sometimes not detected when sitting and facing the camera. This most likely occurs because the training data did not contain any examples of seated people facing the camera, so it could be corrected by collecting more examples and retraining the model.

To calculate CO2 levels, it was only necessary to plug the number of people detected into a simple formula that estimates the ounces of CO2 exhaled per minute by those individuals. Verma noted that he could improve this estimate in the future by also factoring in the flow of CO2 out of indoor spaces. For the rest of the details and code samples, take a deep breath and head on over to the project page.
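
The article does not reproduce Verma's formula, so the sketch below only illustrates the general shape of such a calculation: count the detected people, then multiply by a per-person exhalation rate. Everything specific here is an assumption. The `(label, confidence)` detection format, the 0.5 confidence threshold, and the rate of about 0.7 g of CO2 per person per minute (roughly 1 kg per day for an adult at rest) are illustrative placeholders, not values from the project.

```python
GRAMS_PER_OUNCE = 28.3495

# ASSUMPTION: illustrative resting exhalation rate (about 1 kg of CO2 per day).
# The real value varies with body size and activity; it is not from the project.
ASSUMED_CO2_G_PER_PERSON_PER_MIN = 0.7


def count_people(detections, min_confidence=0.5):
    """Count detections labeled 'person' at or above a confidence threshold.

    `detections` is a hypothetical list of (label, confidence) tuples.
    """
    return sum(
        1 for label, confidence in detections
        if label == "person" and confidence >= min_confidence
    )


def co2_ounces_per_minute(person_count,
                          grams_per_person_per_min=ASSUMED_CO2_G_PER_PERSON_PER_MIN):
    """Estimate the ounces of CO2 exhaled per minute by `person_count` people."""
    if person_count < 0:
        raise ValueError("person_count must be non-negative")
    return person_count * grams_per_person_per_min / GRAMS_PER_OUNCE


if __name__ == "__main__":
    # Hypothetical model output for a single camera frame.
    frame = [("person", 0.91), ("person", 0.62), ("person", 0.31)]
    people = count_people(frame)
    print(f"{people} people, ~{co2_ounces_per_minute(people):.3f} oz CO2/min")
```

Like the estimate described above, this sketch ignores ventilation, so it describes how much CO2 is being produced rather than the concentration that actually accumulates in the room.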
