Hard hats protect workers’ lives and prevent serious injuries in a variety of industries, including construction, manufacturing, mining, and forestry. As a vital piece of personal protective equipment, they safeguard workers from falling objects, head impacts, and electrical hazards. In these industries, hard hats are not merely recommended; they are regarded as essential to employee safety.

Hard hats are a common sight in the construction industry, where they protect workers from falling debris, tools, and even accidental head bumps against fixed structures. In manufacturing plants, they shield employees from potential hazards such as machinery malfunctions or product spills. In mining and forestry, hard hats provide vital protection from falling rocks and branches, respectively, while also safeguarding against the harsh weather conditions often faced by workers in these fields.

The use of hard hats significantly reduces the risk of traumatic brain injuries, skull fractures, and concussions in these high-risk environments. Wearing them is also central to complying with safety regulations and standards set by government agencies and industry-specific bodies. Enforcing that compliance, however, can be challenging for employers, especially those with large workforces. Manually inspecting whether every employee is wearing a hard hat correctly and consistently is both time-consuming and inefficient.

Engineers Wamiq Raza and Varvara Fadeeva put their heads together to come up with a solution that might better protect workers’ heads. They wanted it to be effortless to use, so that it would be applied consistently, and they wanted it to monitor for safety violations continuously to minimize the risk of injury. To meet these requirements, they designed a computer vision-based approach that looks for individuals who are not wearing a hard hat in real time. Such a system could continuously monitor an area where protective equipment is required and alert supervisors the moment a violation is detected.
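
The alerting step is not described in detail, so the following is only a minimal Python sketch of how per-frame detections might be turned into supervisor alerts. The `Detection` class, the `"head"` label for a bare head, the confidence threshold, and the `min_frames` persistence filter are all hypothetical choices for illustration, not part of the project.

```python
from __future__ import annotations

from dataclasses import dataclass

# Hypothetical label name for a bare head; the real labels depend on the annotations.
BARE_HEAD = "head"


@dataclass
class Detection:
    label: str
    confidence: float


class ViolationMonitor:
    """Signal an alert once a bare head has been seen in `min_frames` consecutive frames."""

    def __init__(self, min_frames: int = 3, threshold: float = 0.5):
        self.min_frames = min_frames
        self.threshold = threshold
        self.streak = 0  # number of consecutive frames containing a violation

    def update(self, detections: list[Detection]) -> bool:
        """Process one frame's detections; return True on the frame that should raise an alert."""
        violation = any(
            d.label == BARE_HEAD and d.confidence >= self.threshold for d in detections
        )
        self.streak = self.streak + 1 if violation else 0
        # Fire once, when the streak first reaches the limit, rather than on every frame.
        return self.streak == self.min_frames


monitor = ViolationMonitor(min_frames=3)
frames = [
    [Detection("helmet", 0.9)],
    [Detection("head", 0.8)],
    [Detection("head", 0.7)],
    [Detection("head", 0.9)],
    [Detection("head", 0.9)],
]
print([monitor.update(f) for f in frames])  # [False, False, False, True, False]
```

Setting `min_frames=1` alerts on the very first violating frame; larger values trade a little latency for fewer alerts caused by a single spurious detection.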

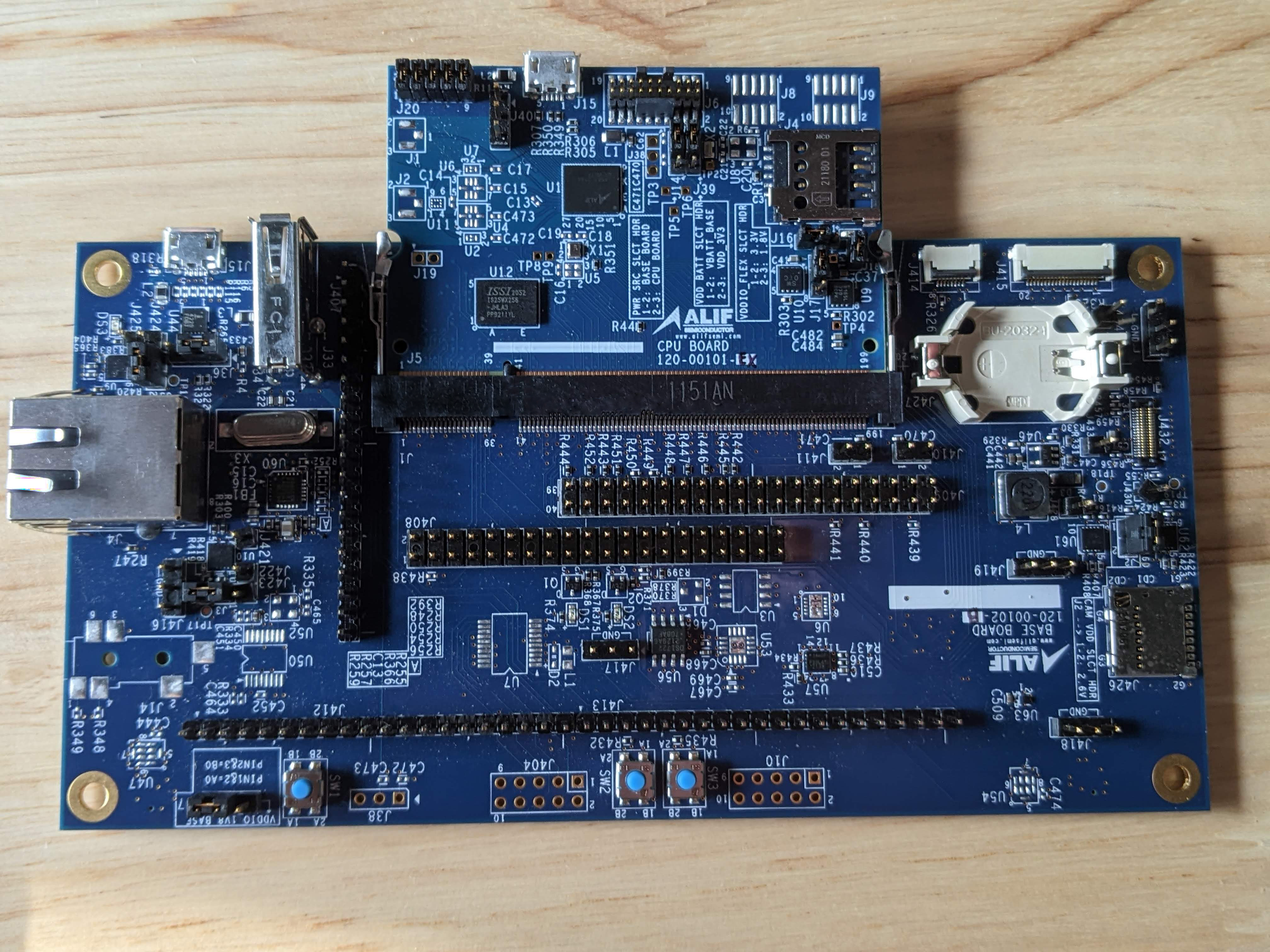

Building a machine learning analysis pipeline and deploying it to a hardware platform can be very challenging, however, so the team used Edge Impulse Studio to create and train the model and to package it for their chosen hardware platform, the Alif Ensemble E7 development kit. The Ensemble E7 has a low-power Arm Cortex-M55 CPU with a dedicated Ethos-U55 microNPU that runs embedded machine learning workloads quickly and efficiently. A camera attached to the Ensemble E7 captures images of the area being monitored.

Before a system can recognize safety violations, it will need to be trained to understand what people look like both with and without safety helmets. For this purpose, Raza and Fadeeva tracked down a small, publicly available image dataset consisting of 160 images taken in industrial settings. These images were uploaded to Edge Impulse Studio using the Data Acquisition tool, which automatically split them into training and test sets.
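
Edge Impulse performs this split automatically; the sketch below only illustrates what a reproducible random train/test split amounts to. The 80/20 ratio, the fixed seed, and the filenames are assumptions for illustration.

```python
import random


def split_dataset(filenames, test_fraction=0.2, seed=42):
    """Shuffle filenames reproducibly and split them into (train, test) lists."""
    items = sorted(filenames)  # sort first so the result does not depend on input order
    rng = random.Random(seed)  # local RNG: reproducible and leaves global state untouched
    rng.shuffle(items)
    n_test = round(len(items) * test_fraction)
    return items[n_test:], items[:n_test]


# Hypothetical filenames standing in for the 160-image dataset.
files = [f"image_{i:03d}.jpg" for i in range(160)]
train, test = split_dataset(files)
print(len(train), len(test))  # 128 32
```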

Next, the images had to be annotated so that the algorithm could learn the difference between hard hats and bare heads. In the Labeling Queue, one draws bounding boxes around each object of interest (e.g. a hard hat) to annotate each image. Even for a small dataset like this one, that can be a tedious chore, but fortunately the Labeling Queue offers AI-powered assistance in drawing the bounding boxes. Typically, one only needs to verify that the boxes look correct, make an occasional small tweak, and move on to the next image.
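
One way to quantify how much an AI-suggested box had to be tweaked is intersection over union (IoU): the overlap area of two boxes divided by the area they cover together. This is not a description of how the Labeling Queue works internally; the `Box` type and the example coordinates are hypothetical.

```python
from typing import NamedTuple


class Box(NamedTuple):
    """Axis-aligned bounding box in pixels: top-left corner (x, y) plus width and height."""
    x: float
    y: float
    w: float
    h: float


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two boxes, from 0.0 (disjoint) to 1.0 (identical)."""
    ix = max(0.0, min(a.x + a.w, b.x + b.w) - max(a.x, b.x))  # overlap width
    iy = max(0.0, min(a.y + a.h, b.y + b.h) - max(a.y, b.y))  # overlap height
    inter = ix * iy
    union = a.w * a.h + b.w * b.h - inter
    return inter / union if union > 0 else 0.0


suggested = Box(10, 10, 40, 40)  # hypothetical box proposed by the labeling assistant
corrected = Box(14, 10, 40, 40)  # the same box after a small manual nudge to the right
print(round(iou(suggested, corrected), 3))  # 0.818
```

A small tweak like this still leaves a high IoU, which is why reviewing suggested boxes is much faster than drawing them from scratch.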

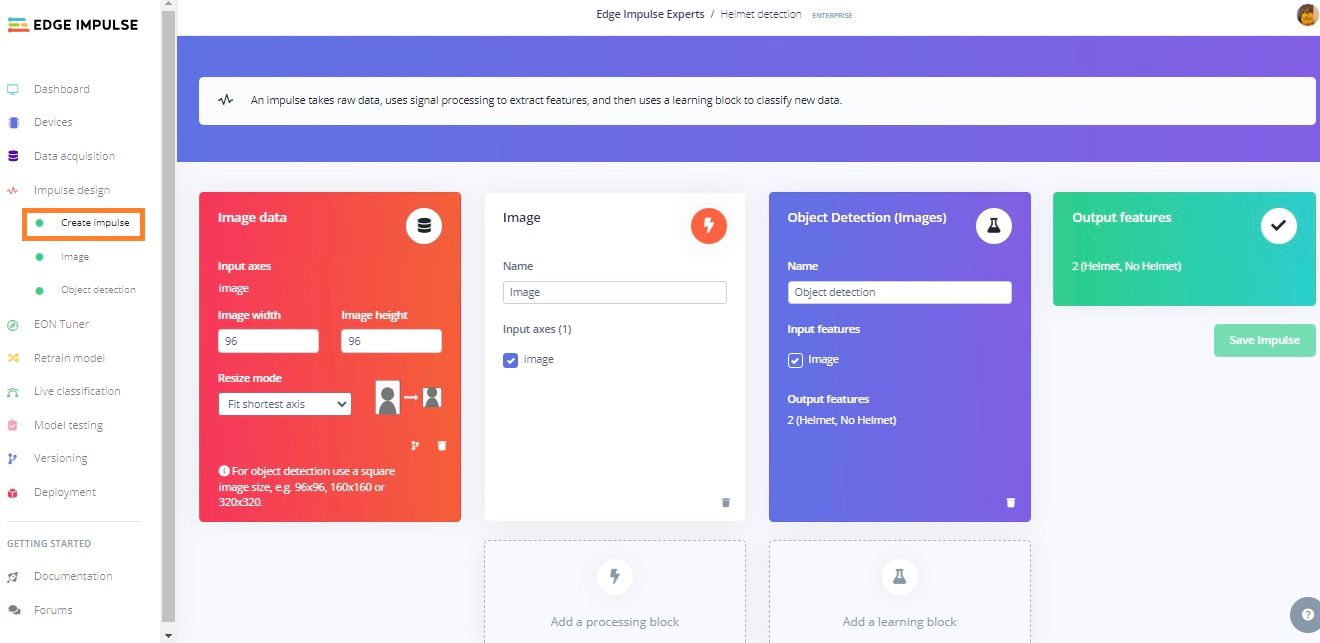

With the data uploaded and annotated, the next step was to design the impulse. An impulse specifies exactly how the data will be processed, from the moment raw data is captured by a sensor until the model makes a prediction. In this case, preprocessing steps were added first to shrink the images and then extract their most informative features. This step is crucial for running the algorithms efficiently on resource-constrained edge computing platforms. The features were then passed to a FOMO MobileNetV2 0.35 object detection model, which is optimized to deliver highly accurate results on tiny hardware platforms.
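
FOMO does not output bounding boxes; roughly speaking, it predicts for each cell of a coarse grid over the image how likely that cell is to contain an object, and objects are reported as centroids. The sketch below shows one plausible way to decode such a grid for a single class. It assumes an 8-pixel cell size (an output at 1/8 of the input resolution) and merges neighboring cells above a threshold into one object; Edge Impulse’s own post-processing may differ in detail.

```python
from collections import deque


def decode_heatmap(heatmap, threshold=0.5, cell_size=8):
    """Turn one class's FOMO-style probability grid into object centroids.

    heatmap[row][col] is the probability that the cell contains an object.
    4-connected cells at or above `threshold` are merged into a single object.
    Returns (x, y, score) tuples in input-image pixels; score is the max cell probability.
    """
    rows, cols = len(heatmap), len(heatmap[0])
    seen = [[False] * cols for _ in range(rows)]

    def is_hot(r, c):
        # Inside the grid, not yet visited, and at or above the detection threshold.
        return 0 <= r < rows and 0 <= c < cols and not seen[r][c] and heatmap[r][c] >= threshold

    objects = []
    for r in range(rows):
        for c in range(cols):
            if not is_hot(r, c):
                continue
            # Breadth-first flood fill collects every hot cell connected to (r, c).
            seen[r][c] = True
            queue, cells = deque([(r, c)]), []
            while queue:
                cr, cc = queue.popleft()
                cells.append((cr, cc))
                for nr, nc in ((cr + 1, cc), (cr - 1, cc), (cr, cc + 1), (cr, cc - 1)):
                    if is_hot(nr, nc):
                        seen[nr][nc] = True
                        queue.append((nr, nc))
            mean_row = sum(cr for cr, _ in cells) / len(cells)
            mean_col = sum(cc for _, cc in cells) / len(cells)
            score = max(heatmap[cr][cc] for cr, cc in cells)
            # +0.5 moves from a cell's top-left corner to its centre before scaling to pixels.
            objects.append(((mean_col + 0.5) * cell_size, (mean_row + 0.5) * cell_size, score))
    return objects


# Hypothetical 4x4 "hard hat" grid: two adjacent hot cells in the top row, one in the corner.
hm = [
    [0.0, 0.9, 0.8, 0.0],
    [0.0, 0.0, 0.0, 0.0],
    [0.0, 0.0, 0.0, 0.0],
    [0.7, 0.0, 0.0, 0.1],
]
print(decode_heatmap(hm))  # [(16.0, 4.0, 0.9), (4.0, 28.0, 0.7)]
```

Reporting centroids instead of boxes is what keeps this kind of model small enough for microcontroller-class hardware, at the cost of not estimating object size.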

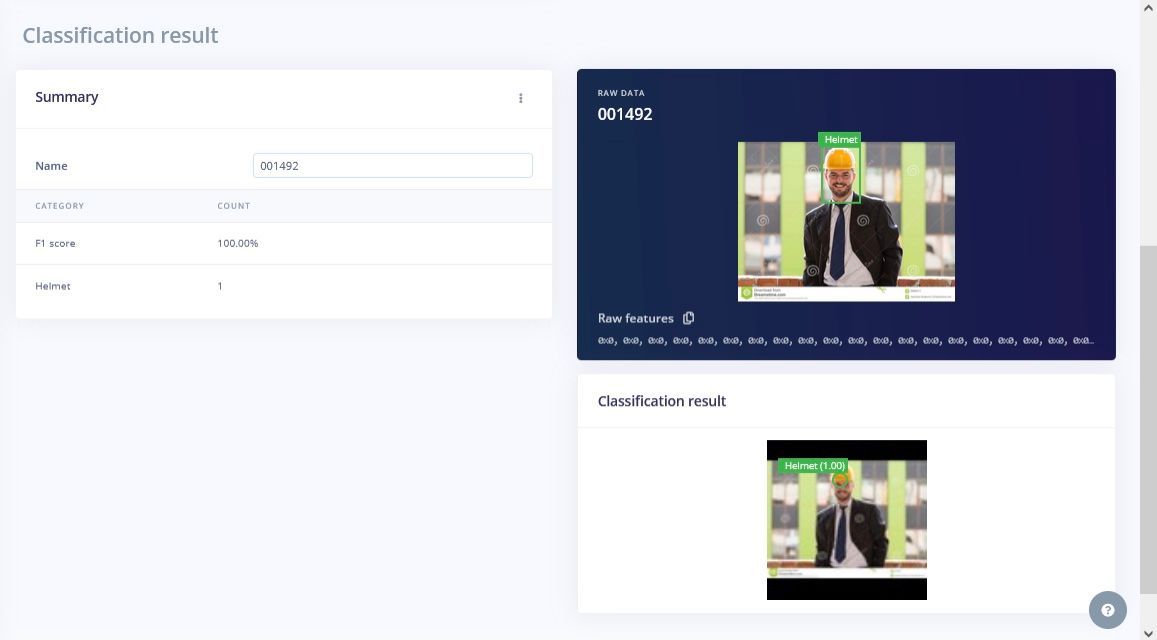

After tweaking a few of the model’s hyperparameters, the team started training. Once it finished, metrics were displayed to help assess the pipeline’s performance. The model achieved an average accuracy of about 76%. Although it was highly accurate at detecting helmets and bare heads, it sometimes misfired, reporting one class or the other, when no people were in the frame. More training data could remedy this, but 76% was sufficient to prove the concept, so the team moved on.
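
Object detectors are commonly evaluated with precision, recall, and the F1 score, which combines the two. The sketch below uses made-up counts, not the project’s results, to show why spurious detections on empty frames (false positives) pull the score down even when people are detected reliably.

```python
def detection_metrics(tp: int, fp: int, fn: int) -> dict:
    """Precision, recall and F1 from true positive, false positive and false negative counts."""
    precision = tp / (tp + fp) if tp + fp else 0.0  # share of detections that were correct
    recall = tp / (tp + fn) if tp + fn else 0.0     # share of real objects that were found
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"precision": precision, "recall": recall, "f1": f1}


# Hypothetical counts: 45 correct detections, 12 spurious ones on empty frames, 5 misses.
metrics = detection_metrics(tp=45, fp=12, fn=5)
print({k: round(v, 3) for k, v in metrics.items()})
# {'precision': 0.789, 'recall': 0.9, 'f1': 0.841}
```

In this made-up example, eliminating the 12 false positives alone would raise F1 to about 0.947, which is the kind of gain additional training images of empty scenes could target.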

All that remained was to deploy the impulse to the hardware. Because Edge Impulse fully supports the Alif Ensemble E7 development kit, this was as simple as selecting that platform in the Deployment tool and downloading the binaries. Once the firmware was flashed to the board, the impulse could be started with a single command using the impulse runner.

To test the system, the team used both the test dataset set aside by the Data Acquisition tool and live images captured by the development board. The device worked quite well, although the team noted that a better camera could improve the live results.

With the right tools, designing and deploying even complex computer vision-based solutions can be straightforward. No special knowledge of machine learning or embedded hardware is necessary. In fact, if you need a real-time monitoring system of your own, you could adapt Raza and Fadeeva’s project without much trouble. They have even made their Edge Impulse Studio project publicly available for anyone to clone, so most of the work is already done!
