The integration of artificial intelligence (AI) into medical diagnostics presents many new opportunities for healthcare technology. By leveraging machine learning algorithms, AI systems can analyze vast amounts of medical data, including images, genetic information, and patient records, to help healthcare professionals diagnose diseases and predict outcomes with a high degree of accuracy. This emerging technology promises to transform healthcare delivery by streamlining diagnostic processes, improving patient outcomes, and reducing medical errors.

One of the key benefits of AI in medical diagnostics is its potential to improve both the speed and the accuracy of diagnoses. AI algorithms can rapidly analyze medical images such as X-rays, MRI scans, and CT scans, detecting abnormalities or patterns that indicate disease, in some tasks with a precision that rivals or even exceeds that of human experts. This can enable earlier detection of diseases such as cancer, cardiovascular conditions, and neurological disorders, leading to timely interventions and better patient outcomes.

However, as AI is integrated into routine clinical workflows, it raises a number of data privacy and security challenges. These applications require access to sensitive patient information, and the algorithms often need substantial computing resources to run. As a result, the sensitive data frequently has to be transferred over networks, sometimes even the public internet, to remote computing environments for processing, and each such transfer introduces the risk of unauthorized access or a data breach.

Fortunately, advances in edge computing hardware and AI algorithms may soon solve many of these problems. It is now possible to run powerful AI algorithms on the same small, low-power devices that collect the data to be analyzed. To demonstrate just how far the field has come, Edge Impulse’s own hacker-in-residence David Tischler built a proof-of-concept medical diagnostics system. Using a neuromorphic processor from BrainChip and the Edge Impulse platform, Tischler showed that a portable device capable of diagnosing pneumonia from X-ray images is well within reach.

The basic game plan was to use a BrainChip Akida Raspberry Pi Developer Kit in conjunction with a machine learning image classifier that has been finely tuned by Edge Impulse to make the most of the Akida processor. The Developer Kit contains a Raspberry Pi Compute Module with a quad-core ARM Cortex-A72 processor and up to 8 GB of RAM for general purpose computing. But the real key to this device’s success is its Akida AKD1000 neural processing unit that can make short work of heavy AI workloads on a very small energy budget.

Tischler wanted to show that this combination of technologies would not only perform well but also be simple to get up and running. Toward that goal, he set up the Developer Kit by flashing an SD card with the Ubuntu Linux operating system and using it to boot the computer. After that, it was just a matter of running a few commands to install the Akida drivers and Edge Impulse tools. The specific commands are available in the project write-up.

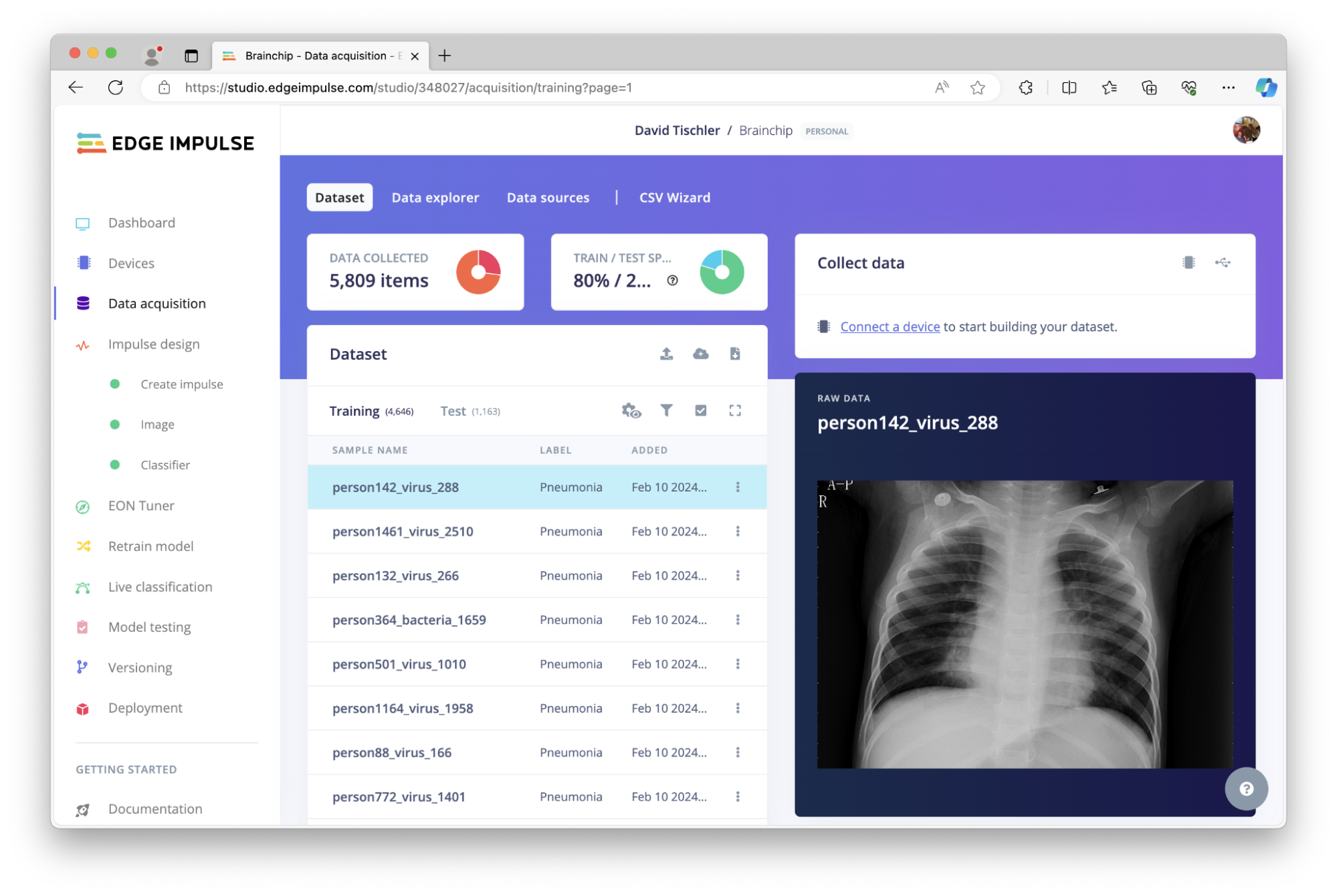

With that out of the way, the hardware was ready to go, which left only the machine learning classifier to deal with. A crucial step in building any classifier is collecting a good training dataset. Collecting chest X-rays is not something most people can reasonably do, but fortunately Tischler was able to locate a suitable dataset on Kaggle. The nearly 6,000 images in this dataset, each labeled as either “normal” or “pneumonia,” were uploaded to an Edge Impulse project, and the Data Acquisition tool automatically split them into training and test sets.
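For readers curious what a train/test split involves, here is a minimal sketch of a per-label (stratified) split in plain Python. It is not Edge Impulse's implementation: the 80/20 ratio, the `stratified_split` name, and the file names are all assumptions for illustration. Splitting each label separately keeps the ratio of normal to pneumonia images roughly the same in both sets.

```python
import random
from collections import defaultdict
from typing import List, Sequence, Tuple

Sample = Tuple[str, str]  # (file path, label)

def stratified_split(samples: Sequence[Sample], test_fraction: float = 0.2,
                     seed: int = 42) -> Tuple[List[Sample], List[Sample]]:
    """Hypothetical helper: split (path, label) pairs into train/test per label."""
    by_label = defaultdict(list)
    for path, label in samples:
        by_label[label].append((path, label))

    rng = random.Random(seed)  # fixed seed, so the split is reproducible
    train, test = [], []
    for label in sorted(by_label):
        items = list(by_label[label])
        rng.shuffle(items)
        n_test = round(len(items) * test_fraction)
        test.extend(items[:n_test])   # first slice of each label goes to test
        train.extend(items[n_test:])  # the rest goes to training
    return train, test

if __name__ == "__main__":
    # Hypothetical file names; the real dataset has nearly 6,000 images.
    data = [(f"normal_{i}.jpeg", "normal") for i in range(100)] + \
           [(f"pneumonia_{i}.jpeg", "pneumonia") for i in range(300)]
    train, test = stratified_split(data)
    print(len(train), len(test))  # 320 80
```

Because the split is done per label, the 3:1 pneumonia-to-normal ratio of this toy data carries over into both sets.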

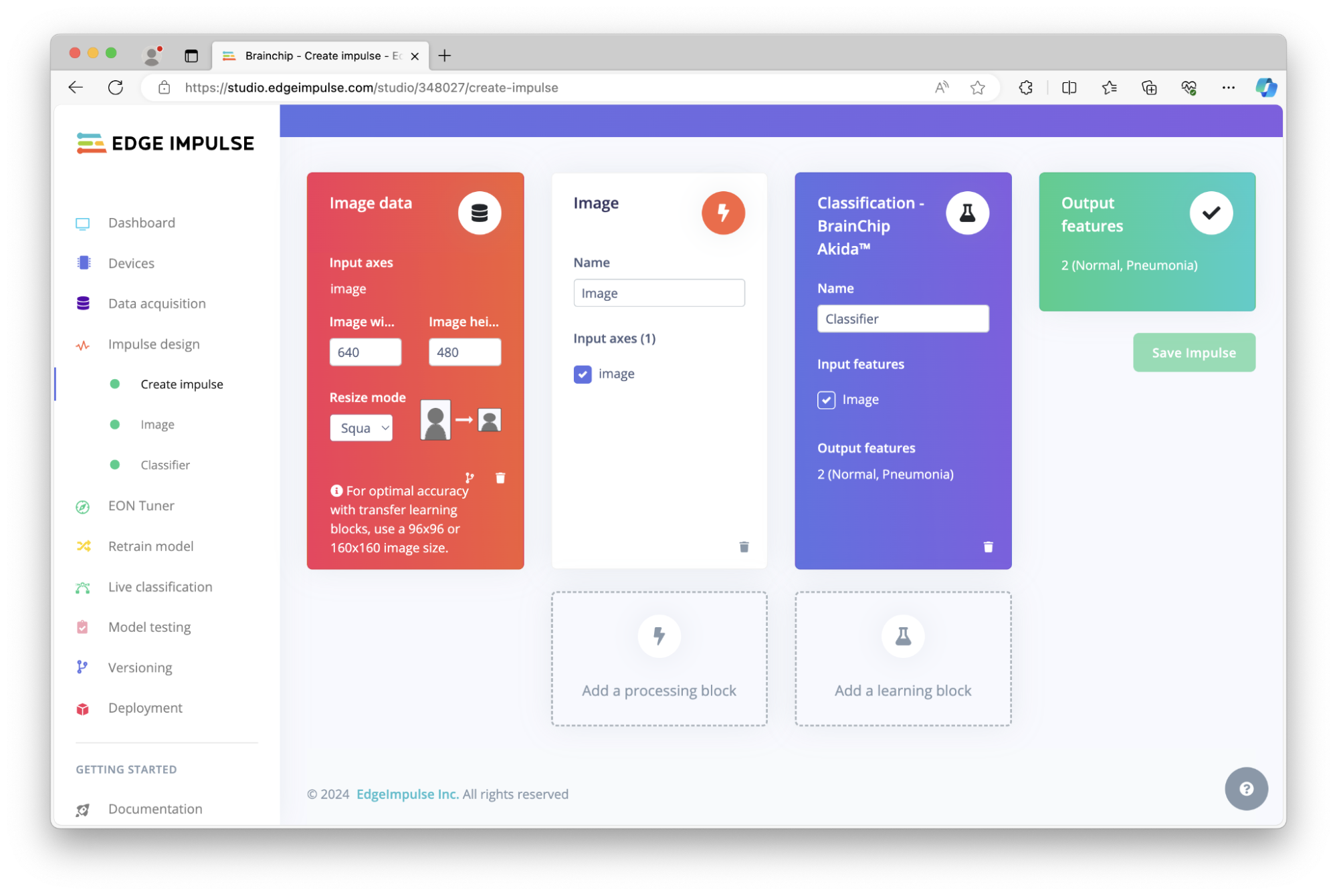

Next up, an impulse was created. The impulse defines how data is processed, from the moment it is collected until a classification prediction is made. Its first step resizes the images to 640x480 pixels to save compute cycles later in the process, although Tischler noted that the resolution could be increased if accuracy suffers. An image processing step then extracts features from the resized images, and these features are passed to a classifier tailored to the BrainChip Akida processor. This classifier predicts whether a previously unseen image is normal or shows signs of pneumonia.
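The order of the impulse's stages can be sketched as a small pipeline. This is a toy illustration under loudly stated assumptions: nearest-neighbour resizing and simple pixel scaling stand in for Edge Impulse's actual image blocks, and `toy_classifier` is a hypothetical brightness rule, not a pneumonia model and not the Akida classifier. Only the stage order and the 640x480 target size come from the project.

```python
from typing import Callable, List, Sequence

Image = List[List[int]]  # grayscale pixel rows, values 0-255

def resize_nearest(img: Image, width: int, height: int) -> Image:
    """Nearest-neighbour resize: a stand-in for the impulse's resize step."""
    src_h, src_w = len(img), len(img[0])
    return [
        [img[y * src_h // height][x * src_w // width] for x in range(width)]
        for y in range(height)
    ]

def extract_features(img: Image) -> List[float]:
    """Flatten pixels and scale to [0, 1]: a stand-in for the image block."""
    return [p / 255.0 for row in img for p in row]

def run_impulse(img: Image, classify: Callable[[Sequence[float]], str],
                width: int = 640, height: int = 480) -> str:
    """Resize, extract features, classify: the same order as the impulse."""
    return classify(extract_features(resize_nearest(img, width, height)))

def toy_classifier(features: Sequence[float]) -> str:
    # Hypothetical rule for illustration only; NOT a real pneumonia model.
    mean = sum(features) / len(features)
    return "pneumonia" if mean > 0.5 else "normal"

if __name__ == "__main__":
    scan = [[200] * 1280 for _ in range(960)]  # hypothetical 1280x960 image
    print(run_impulse(scan, toy_classifier))    # pneumonia
    # Resizing first means later stages process 4x fewer pixels here.
    print(f"{1280 * 960 / (640 * 480):.0f}x fewer pixels")
```

Keeping each stage as a separate function mirrors how an impulse lets you change one block, such as raising the resize resolution, without touching the others.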

After a few tweaks to improve the model’s accuracy, training was started. Training on the uploaded data yielded an accuracy of 97% on the training set. Because a high training accuracy can simply be the result of the model overfitting the data, the model was also evaluated with the more stringent model testing tool. On the held-out test data, which was not used during training, over 94% of the classifications were correct.
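The overfitting check boils down to comparing accuracy on data the model has seen against data it has not. The sketch below computes accuracy, a confusion count, and sensitivity (the share of true pneumonia cases that are caught), which matters in a diagnostic setting where a missed case is costly. The function names and sample labels are hypothetical; this is not the Edge Impulse model testing tool.

```python
from collections import Counter
from typing import Sequence

def accuracy(y_true: Sequence[str], y_pred: Sequence[str]) -> float:
    """Fraction of predictions that match the true labels."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("need two non-empty sequences of equal length")
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)

def confusion(y_true: Sequence[str], y_pred: Sequence[str]) -> Counter:
    """Counts of (true label, predicted label) pairs."""
    return Counter(zip(y_true, y_pred))

def sensitivity(y_true: Sequence[str], y_pred: Sequence[str],
                positive: str = "pneumonia") -> float:
    """Share of truly positive cases that the model labels as positive."""
    preds_for_pos = [p for t, p in zip(y_true, y_pred) if t == positive]
    if not preds_for_pos:
        raise ValueError(f"no samples with true label {positive!r}")
    return sum(p == positive for p in preds_for_pos) / len(preds_for_pos)

if __name__ == "__main__":
    # Hypothetical held-out labels and predictions, not the project's data.
    y_true = ["normal", "pneumonia", "pneumonia", "normal"]
    y_pred = ["normal", "pneumonia", "normal", "normal"]
    print(accuracy(y_true, y_pred))     # 0.75
    print(sensitivity(y_true, y_pred))  # 0.5
    print(confusion(y_true, y_pred))
    # Gap between the reported training (97%) and test (94%) accuracy.
    print(f"gap: {0.97 - 0.94:.2f}")    # gap: 0.03
```

A small gap between training and test accuracy, like the roughly three points reported here, suggests the model generalizes rather than memorizing the training images.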

With little room left for improvement, Tischler deployed the model to the BrainChip Akida Raspberry Pi Developer Kit and demonstrated two different ways of running inferences. The first, which uses the Edge Impulse Linux SDK to classify an image file, would likely be the best option for a real-world diagnostic deployment. For scenarios that require real-time classification of a live stream of images, Tischler also showed how to classify images from a USB webcam. In this mode, a built-in web application displays the images captured by the webcam along with the model’s predictions.
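Whichever method is used, the application eventually has to turn per-label scores into a decision. The sketch below assumes a result of the shape `{"result": {"classification": {label: score}}}`, which is our understanding of how the Edge Impulse Linux SDK's Python image examples report classification output from a runner's `classify()` call; verify the exact shape against the SDK before relying on it. The `triage` helper and its 0.8 confidence threshold are hypothetical.

```python
import json
from typing import Any, Dict, Tuple

def top_prediction(result: Dict[str, Any]) -> Tuple[str, float]:
    """Return the label with the highest score (assumed result shape)."""
    scores = result["result"]["classification"]
    label = max(scores, key=scores.get)
    return label, scores[label]

def triage(result: Dict[str, Any], threshold: float = 0.8) -> str:
    """Hypothetical policy: flag low-confidence predictions as uncertain."""
    label, score = top_prediction(result)
    if score < threshold:
        return f"uncertain ({label} {score:.2f})"
    return f"{label} ({score:.2f})"

if __name__ == "__main__":
    # Hypothetical output for a single X-ray image file.
    raw = '{"result": {"classification": {"normal": 0.1, "pneumonia": 0.9}}}'
    print(triage(json.loads(raw)))  # pneumonia (0.90)
```

Routing low-confidence results to an "uncertain" bucket, rather than forcing a binary answer, is one reasonable design for a tool meant to assist rather than replace a clinician.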

After verifying that the device was working as expected, Tischler collected some additional metrics to better assess the system’s performance. Inference took only about 100 to 150 milliseconds per image, which is quite impressive. That is far faster than a diagnostic tool needs, and it shows that this setup could also be useful for real-time video analysis. Also notable was the finding that the Akida chip required only milliwatts of power to run the classifier, a trait that makes this processor attractive for mobile applications.
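Latency figures like these are typically gathered by timing many inference calls after a few warm-up runs, and converting latency to frames per second shows what the numbers mean for video. In the minimal sketch below, `infer` is a hypothetical stand-in for whatever call runs the model, and the frame-rate conversion assumes frames are processed one at a time with no other overhead.

```python
import statistics
import time
from typing import Callable, List

def time_inference(infer: Callable[[], object], runs: int = 20,
                   warmup: int = 3) -> List[float]:
    """Time repeated calls to `infer`, returning latencies in milliseconds."""
    for _ in range(warmup):  # discard first calls (caches, lazy init)
        infer()
    times_ms = []
    for _ in range(runs):
        start = time.perf_counter()
        infer()
        times_ms.append((time.perf_counter() - start) * 1000.0)
    return times_ms

def max_fps(latency_ms: float) -> float:
    """Upper bound on frames per second if each frame takes latency_ms."""
    return 1000.0 / latency_ms

if __name__ == "__main__":
    # Hypothetical workload standing in for a real model call.
    latencies = time_inference(lambda: sum(range(100_000)))
    print(f"median latency: {statistics.median(latencies):.2f} ms")
    # The reported 100 to 150 ms range corresponds to about 6.7 to 10 fps.
    print(f"{max_fps(150):.1f} to {max_fps(100):.1f} fps")  # 6.7 to 10.0 fps
```

Reporting the median rather than the mean keeps an occasional slow call, such as one interrupted by the operating system, from skewing the result.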

If medical diagnostics is not your cup of tea, the methods Tischler outlines in the project write-up can easily be adapted to other use cases. The Edge Impulse project has also been made public if you would like a running start on building your own creation.